What is Lexical Analysis?

Lexical analysis, also known as scanning, tokenization, or lexing, is the first step in the compilation process. It involves breaking a program's source code into a series of tokens or lexemes, which are the smallest meaningful units of code.

Lexical analysis simplifies the subsequent parsing process by identifying and eliminating comments, whitespace, and other irrelevant elements. It also reduces the complexity of source code, making it easier for the parser to process.

How does a Lexical Analysis Work?

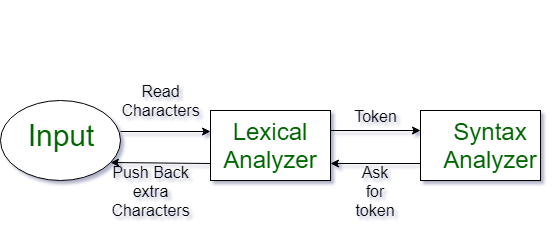

The source code is read character by character to identify lexemes or tokens. It includes such as keywords, identifiers, literal values, operators, and delimiter symbols.

These tokens are then passed to the next phase of the compilation process, parsing.

What is Lexical Analyzer?

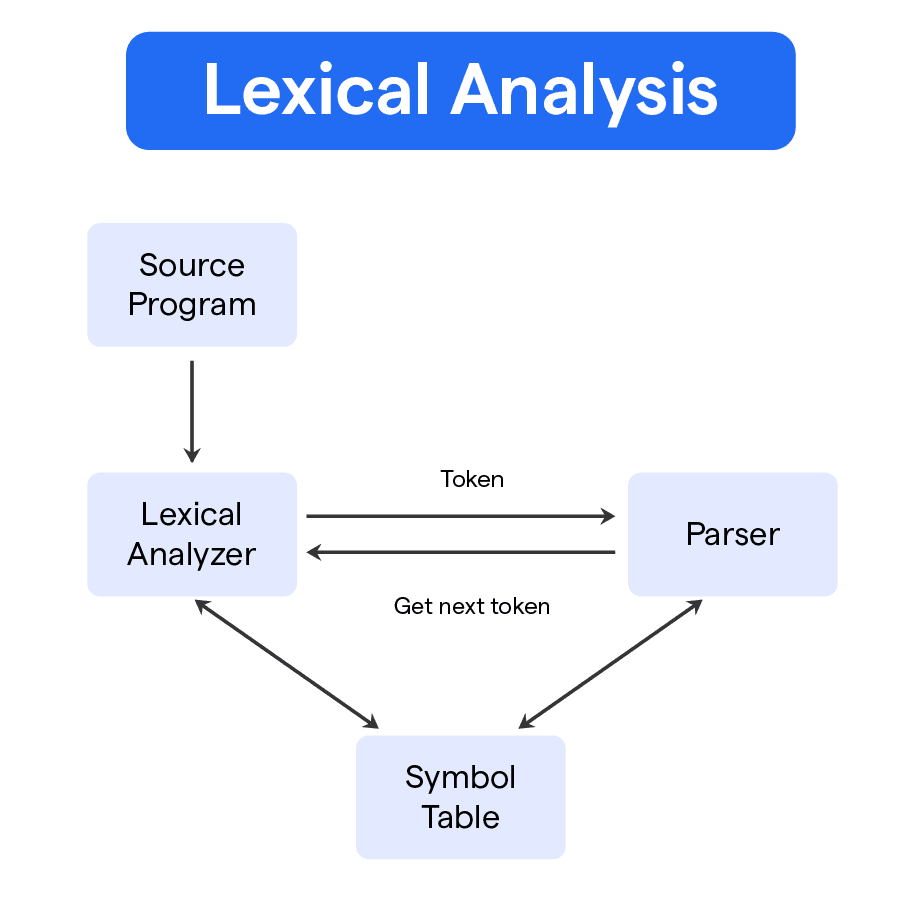

A lexical analyzer is often called a scanner or lexer. It is a program or component of a compiler responsible for carrying out lexical analysis during the compilation process.

Key Components of Lexical Analysis

The key components of lexical analysis are the following:

Tokens

Tokens, or lexemes, are the smallest meaningful units of code identified during lexical analysis.

They can represent keywords, literals, operators, and delimiters used in programming languages.

Finite Automata

Finite automata are abstract models that represent the behavior of a system or process with a limited number of states and transitions.

They are used in lexical analysis to model the rules and identify valid tokens based on the input character sequence.

Regular Expressions

Regular expressions are patterns used to match and categorize text data.

In lexical analysis, regular expressions help define the sentence structure and rules for identifying valid tokens.

Symbol Table

A symbol table is a data structure used in the compilation process to store and manage symbols or identifiers, including their types, scopes, and other relevant attributes.

It plays a vital role in various stages of compilation, including lexical analysis and semantic analysis.

What is Lexical Analysis Process?

The lexical analysis process is follows as:

- Input Buffering: Input buffering is the method of reading a predetermined number of input characters into a buffer.

This allows for efficient character processing and minimizes file I/O operations.

- Token Recognition: Token recognition is the process of identifying and classifying lexemes into corresponding tokens.

It is done on the basis of the programming language's syntax rules.

- Handling Ambiguities: During token recognition, ambiguities could arise where a sequence of characters could be interpreted as multiple valid tokens.

The lexer needs to identify and resolve such ambiguities to produce correct tokens.

- Output Generation: Once the tokens are identified, the lexer generates output in the form of token sequences, which are passed on to the subsequent parsing phase.

The Key Techniques Of Lexical Analysis

The key techniques of Lexical analysis are the following:

Deterministic Finite Automata (DFA)

Deterministic Finite Automata (DFA) is a technique used in lexical analysis where a finite automaton transitions between states based on the input character sequence deterministically.

It is efficient in terms of time and memory usage.

Nondeterministic Finite Automata (NFA)

Nondeterministic Finite Automata (NFA) is another technique that models token recognition using finite automata.

Unlike DFA, NFA allows multiple possible state transitions for a given input character, enabling the recognition of different tokens using fewer states.

Lex

Lex is a widely used software tool for generating lexical analyzers from regular expressions.

It provides a user-friendly interface for defining tokens and simplifies the lexer generation process.

Conversion from NFA to DFA

To leverage the efficiency of a DFA, lexical analyzers often convert an NFA, which is more expressive, into an equivalent DFA.

The process involves identifying equivalence classes and mapping them to deterministic states.

The Role of Lexical Functional Grammar in Lexical Analysis

Lexical Functional Grammar (LFG) is a linguistic framework that focuses on the structure and meaning of sentences.

Developed in the 1980s by Joan Bresnan and Ronald Kaplan, LFG emphasizes the relationship between syntax (sentence structure) and semantics (meaning analysis).

Meaning Analysis

Lexical Functional Grammar's f-structure facilitates meaning analysis by linking syntactic functions to their semantic roles.

This meaning analysis approach allows for a more precise interpretation of how different parts of a sentence contribute to its overall meaning.

Thus aiding overall in lexical analysis in the understanding of complex sentences and ambiguities.

Linguistic Framework

Lexical Functional Grammar is unique in its dual-level representation of grammatical structure: constituent structure (c-structure) and functional structure (f-structure).

The c-type sentence structure represents the hierarchical organization of phrases. Meanwhile the f-type sentence structure captures grammatical relations and functions, such as subject, object, and tense.

Syntactic Analysis

Syntactic analysis in LFG involves examining both c-type sentence structure and f-type sentence structure to uncover the underlying grammatical rules.

This dual-level syntactic analysis provides a robust framework. With this it can understand the complexities of human language, making lexical analysis a powerful approach in modern linguistic theory.

Benefits and Challenges of Lexical Analysis

In this section, you’ll find the benefits and challenges of lexical analysis:

Benefits of Lexical Analysis

The benefits of lexical analysis are the following:

- Streamlines the downstream compilation process by converting raw source code into structured tokens.

- Facilitates better error handling by detecting and reporting lexical errors early in the compilation process.

- Reduces the complexity of source code for parsing and subsequent stages.

Challenges in Lexical Analysis

The challenges of lexical analysis are the following:

- Handling ambiguous character sequences that could be interpreted as multiple valid tokens.

- Designing efficient and scalable lexical analyzers capable of processing large source files while maintaining performance.

- Ensuring robust error detection and reporting for a better user experience.

Lexical Analysis in Other Applications

Here are the usages of lexical analysis in other applications:

- Natural Language Processing: In natural language processing (NLP), lexical analysis takes on the role of tokenization, converting sentences into words or tokens for further processing.

- Data Mining and Text Analytics: Lexical analysis can help identify and extract meaningful information from large datasets in data mining and text analytics.

- Pattern Matching and Search: The techniques employed by lexical analysis, such as regular expressions and finite automata, can be used in pattern matching algorithms and search utilities to find and classify specific text data.

- Security and Malware Detection: With the increasing sophistication of malware, lexical analysis can play a crucial part in identifying malicious code embedded within seemingly harmless data.

Frequently Asked Questions (FAQ)

What is Lexical Analysis and why is it important?

Lexical Analysis is the first step in the compilation process, in which a program's source code is broken down into tokens or lexemes.

It simplifies and streamlines the subsequent parsing process while facilitating better error handling.

What is the role of a lexical analyzer, and how does it work?

A lexical analyzer is a program responsible for conducting lexical analysis during the compilation process.

It scans the source code character by character and identifies tokens based on the syntax rules defined by the programming language.

What are the key components involved in lexical analysis?

Key components in lexical analysis include tokens, regular expressions, finite automata, and symbol tables.

What are the techniques used for implementing lexical analyzers?

Deterministic Finite Automata (DFA) and Nondeterministic Finite Automata (NFA) are two widely used techniques for implementing lexical analyzers.

Additionally, Lex is a software tool for generating lexical analyzers from regular expressions.

How is lexical analysis applied in other areas besides compilation?

Lexical analysis is applicable in natural language processing, data mining, text analytics, pattern matching, search, and security, such as malware detection.