What is Latent Dirichlet Allocation?

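Latent Dirichlet Allocation (LDA) is a topic modeling technique used in natural language processing. It is a generative probabilistic model that discovers recurring themes (topics) in a collection of documents and describes each document as a mixture of those topics.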

Think of it like a sorting hat that uses statistics to assign individual items (like words in a document) into categories (like topics for a collection of documents).

For instance, if you feed a pile of news articles into LDA, it might recognize that the words "stocks," "market," and "trading" frequently appear together and group them into a single topic, all without any prior instruction. LDA does not name the topic itself; a person reading its most probable words would label it "finance."

It's a powerful tool for uncovering hidden patterns in text data.

Key Features and Components of LDA

Here's an in-depth look at the integral components and features of the Latent Dirichlet Allocation model that make it a powerful tool for topic modeling and text analysis.

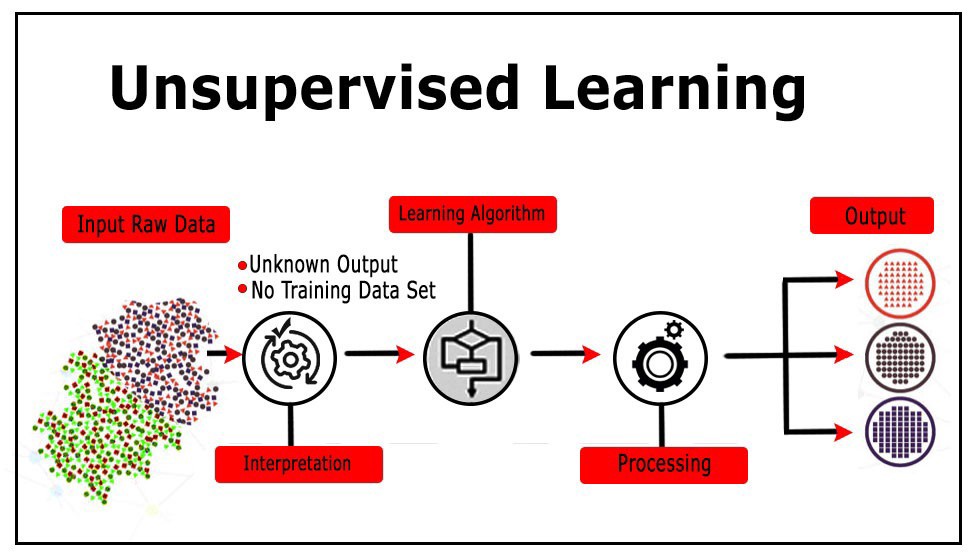

Unsupervised Learning

LDA is an unsupervised learning method: it organizes large amounts of text without labeled examples or predefined categories.

Probabilistic Modeling Core

At LDA's core is probabilistic modeling: it represents the thematic structure of documents as probability distributions, one over topics for each document and one over words for each topic.

Generative Process

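The cornerstone of LDA is its generative process, an assumed story of how documents are written: each document draws a mixture of topics, and each word is produced by first picking a topic from that mixture and then picking a word from that topic's word distribution. Fitting the model reverses this story to recover the topics from the observed words.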
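The generative story can be sketched in a few lines of Python. This is a minimal illustration using only the standard library; the vocabulary, the two topic-word distributions, and the helper names (`sample_dirichlet`, `generate_document`) are hypothetical and chosen purely for the example.

```python
import random

def sample_dirichlet(alpha, rng):
    """Draw one sample from a Dirichlet distribution via normalized Gamma draws."""
    draws = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(draws)
    return [d / total for d in draws]

def generate_document(topic_word, vocab, alpha, length, rng):
    """Generate one document following LDA's assumed generative story."""
    # 1. Draw this document's topic mixture (theta) from a Dirichlet prior.
    theta = sample_dirichlet(alpha, rng)
    words = []
    for _ in range(length):
        # 2. Pick a topic for this word position according to theta.
        z = rng.choices(range(len(theta)), weights=theta)[0]
        # 3. Pick a word from that topic's word distribution.
        words.append(rng.choices(vocab, weights=topic_word[z])[0])
    return theta, words

rng = random.Random(0)
vocab = ["stocks", "market", "trading", "goal", "team", "match"]
# Hypothetical topic-word distributions: topic 0 ~ finance, topic 1 ~ sports.
topic_word = [
    [0.30, 0.30, 0.30, 0.03, 0.04, 0.03],
    [0.03, 0.04, 0.03, 0.30, 0.30, 0.30],
]
theta, doc = generate_document(topic_word, vocab, alpha=[0.5, 0.5], length=10, rng=rng)
print("topic mixture:", theta)
print("document:", doc)
```

In real use the topic-word distributions are unknown; inference works backwards from documents like `doc` to estimate them.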

Utilization of Dirichlet Distribution

LDA uses the Dirichlet distribution as a prior over both each document's topic mixture and each topic's word distribution. With small concentration parameters, this prior favors documents dominated by a few topics and topics dominated by a few words.

Essential Hyperparameters

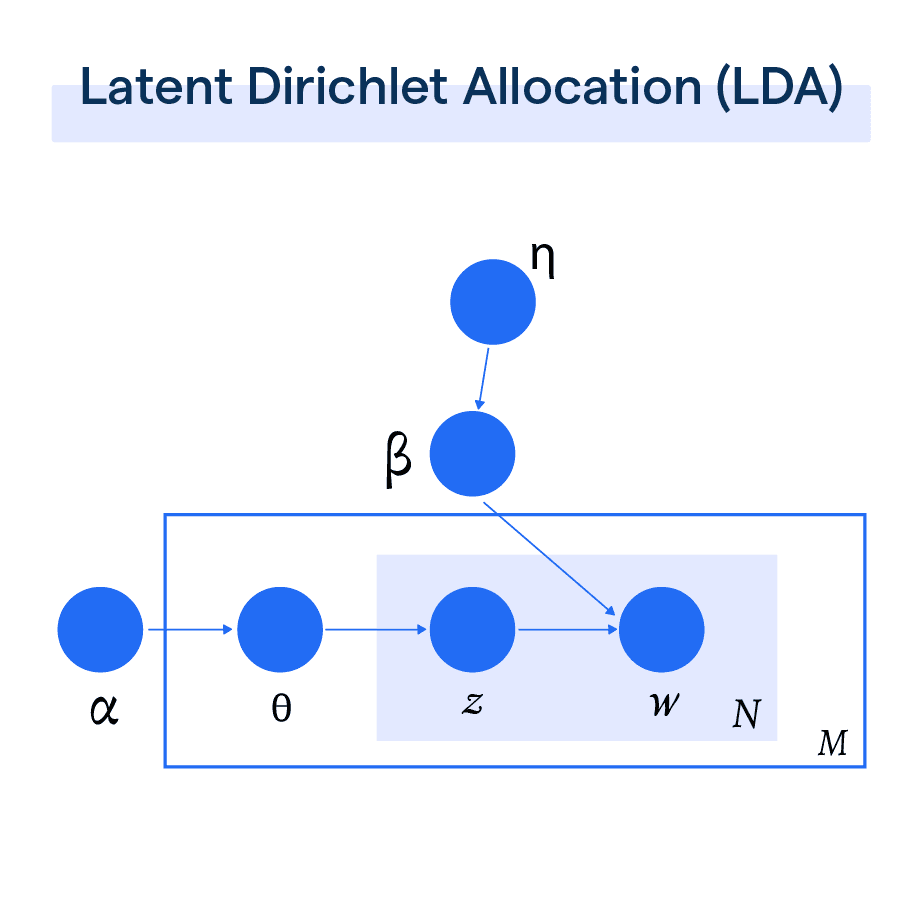

Alpha and Beta, LDA's crucial hyperparameters, are the concentration parameters of the Dirichlet priors on the document-topic and topic-word distributions, respectively. They significantly affect the sparsity and granularity of the results.

Collapsed Gibbs Sampling Implementation

Collapsed Gibbs sampling is a widely used technique for inference in LDA. It integrates out the topic-word and document-topic distributions and repeatedly resamples the topic assignment of each word, conditioned on the current assignments of all other words.
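A compact sketch of such a sampler is shown below. It assumes symmetric priors (a single `alpha` and a single `beta` value), documents already encoded as lists of integer word ids, and a hypothetical function name `gibbs_lda`; it is meant to illustrate the update rule, not to stand in for an optimized library implementation.

```python
import random

def gibbs_lda(docs, vocab_size, num_topics, alpha, beta, iterations, seed=0):
    """Collapsed Gibbs sampling for LDA with symmetric priors (illustrative sketch)."""
    rng = random.Random(seed)
    doc_topic = [[0] * num_topics for _ in docs]                 # tokens per (doc, topic)
    topic_word = [[0] * vocab_size for _ in range(num_topics)]   # tokens per (topic, word)
    topic_total = [0] * num_topics                               # tokens per topic
    assignments = []

    # Start from a random topic assignment for every word token.
    for d, doc in enumerate(docs):
        z_doc = []
        for w in doc:
            z = rng.randrange(num_topics)
            z_doc.append(z)
            doc_topic[d][z] += 1
            topic_word[z][w] += 1
            topic_total[z] += 1
        assignments.append(z_doc)

    for _ in range(iterations):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                # Remove the token's current assignment from the counts.
                z = assignments[d][i]
                doc_topic[d][z] -= 1
                topic_word[z][w] -= 1
                topic_total[z] -= 1
                # Unnormalized conditional probability of each topic for this token.
                weights = [
                    (doc_topic[d][k] + alpha)
                    * (topic_word[k][w] + beta)
                    / (topic_total[k] + vocab_size * beta)
                    for k in range(num_topics)
                ]
                # Resample the topic and add the token back into the counts.
                z = rng.choices(range(num_topics), weights=weights)[0]
                assignments[d][i] = z
                doc_topic[d][z] += 1
                topic_word[z][w] += 1
                topic_total[z] += 1
    return assignments, doc_topic, topic_word

# Toy corpus: word ids 0-2 and 3-5 stand for two hypothetical themes.
docs = [[0, 1, 2, 0, 1], [3, 4, 5, 3, 4], [0, 2, 1, 5, 4]]
assignments, doc_topic, topic_word = gibbs_lda(
    docs, vocab_size=6, num_topics=2, alpha=0.1, beta=0.01, iterations=50
)
print(doc_topic)
```

The sampler only ever stores counts and assignments; the document-topic and topic-word probabilities are computed from those counts afterwards.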

Online Variational Bayes

Online variational Bayes is an optimization-based inference method that updates the model from small batches of documents rather than from the whole corpus at once, which makes it efficient for large or streaming data sets.

Semantic Understanding

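LDA's forte lies in capturing semantic structure from word co-occurrence: words that tend to appear in the same documents are grouped into discernible topics, and documents are described by the topics they contain. It treats each document as a bag of words, so word order is ignored.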

Scalability

Another plus is LDA's scalability: with suitable inference methods it can process very large text collections and a wide range of topics, making it useful for search engines and massive databases.

Transformation of Text Mining

Finally, LDA has been applied across text mining, in tasks such as sentiment analysis, document categorization, and recommender systems, which affirms its importance in natural language processing.

Parameters and Variables in LDA

In this section, we'll explore the crucial parameters and variables involved in Latent Dirichlet Allocation (LDA), a popular technique for topic modeling.

Document-Topic Probabilities

In LDA, each document in the dataset is assumed to be a mixture of a certain number of topics. The probabilities of these topics within each document form a crucial part of the LDA model.

Topic-Word Probabilities

Each topic has a probability distribution over the words in the vocabulary. This is another core aspect of LDA: by assigning each word a probability under each topic, it links words to the topics they characterize.

Dirichlet Prior Parameters

Dirichlet prior parameters, typically known as Alpha and Beta, control how concentrated the model's distributions are. Alpha affects the mixture of topics in a document: a low Alpha results in documents containing fewer topics, while a high Alpha leads to documents that blend many topics. Beta, on the other hand, influences the word distribution within topics: a low Beta yields topics dominated by a few words, while a high Beta spreads probability across many words.
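The effect of Alpha can be seen by sampling topic mixtures from a symmetric Dirichlet prior and measuring how much of each mixture belongs to its single largest topic. The sketch below uses only the standard library; the helper names and the choice of five topics and 500 samples are assumptions made for illustration.

```python
import random

def sample_dirichlet(alpha, num_topics, rng):
    """Draw a topic mixture from a symmetric Dirichlet via normalized Gamma draws."""
    draws = [rng.gammavariate(alpha, 1.0) for _ in range(num_topics)]
    total = sum(draws)
    return [d / total for d in draws]

def average_dominant_share(alpha, num_topics=5, samples=500, seed=0):
    """Average probability of the single largest topic across sampled mixtures."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(samples):
        total += max(sample_dirichlet(alpha, num_topics, rng))
    return total / samples

low = average_dominant_share(alpha=0.1)
high = average_dominant_share(alpha=10.0)
print(f"alpha=0.1  -> average share of the dominant topic: {low:.2f}")
print(f"alpha=10.0 -> average share of the dominant topic: {high:.2f}")
```

With a low Alpha, most sampled mixtures are dominated by one topic; with a high Alpha, the mixtures are close to an even blend. The same reasoning applies to Beta and the words within a topic.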

Number of Topics

The number of topics (k) you choose for the LDA model is a critical parameter, and it must be set before training. Too few topics merge distinct themes together, while too many split coherent themes into fragments that are hard to interpret.

Word Assignments

The assignment of words to different topics is a fundamental part of the LDA process. These assignments are done probabilistically, and they form the basis from which the document-topic and topic-word probabilities are derived.
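As a rough sketch of that derivation, the function below turns assignment counts into smoothed probabilities. It assumes symmetric priors and two count tables (how many words in each document are assigned to each topic, and how many times each word is assigned to each topic); the function name and the toy counts are hypothetical.

```python
def estimate_distributions(doc_topic_counts, topic_word_counts, alpha, beta):
    """Convert word-assignment counts into document-topic and topic-word probabilities."""
    num_topics = len(topic_word_counts)
    vocab_size = len(topic_word_counts[0])

    # Document-topic probabilities: share of a document's words assigned to each topic,
    # smoothed by the Alpha prior.
    theta = []
    for counts in doc_topic_counts:
        denom = sum(counts) + num_topics * alpha
        theta.append([(c + alpha) / denom for c in counts])

    # Topic-word probabilities: share of a topic's assignments that went to each word,
    # smoothed by the Beta prior.
    phi = []
    for counts in topic_word_counts:
        denom = sum(counts) + vocab_size * beta
        phi.append([(c + beta) / denom for c in counts])
    return theta, phi

# Toy counts: 2 documents, 2 topics, 3 vocabulary words.
doc_topic_counts = [[3, 1], [0, 4]]
topic_word_counts = [[2, 1, 0], [1, 1, 3]]
theta, phi = estimate_distributions(doc_topic_counts, topic_word_counts, alpha=0.1, beta=0.01)
print(theta)
print(phi)
```

Every row of `theta` and `phi` sums to 1, and the priors keep any probability from being exactly zero even when a count is zero.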

Through an understanding of these parameters and variables, you can effectively exploit LDA for uncovering hidden thematic structures in large text collections, paving the way for rich insights and organized data.

Inference in LDA

In this section, we'll delve into the process of inference in Latent Dirichlet Allocation (LDA), a critical step for gaining insights from topic models.

Estimating Topic Distributions

Once LDA assigns topics to words probabilistically, the model estimates the topic distributions. This process determines which topics are more prevalent in the dataset, offering a broad understanding of predominant themes.

Determining Document-Topic Associations

Through inference, LDA can provide an analysis of which topics are associated with each document. These associations allow us to understand the major themes of each document in the dataset, providing context and structure.

Revealing Word-Topic Associations

The inference also uncovers word-topic associations. By doing so, LDA can determine the relevance of particular words to specific topics. This provides a clearer picture of topic constructs, illuminating the main ideas represented by each topic.

Assessing Model Quality

Alongside inference, it is important to assess the quality of the model to ensure it effectively captures the structure and themes of the data. Perplexity measures how well the model predicts held-out text (lower is better), while topic coherence measures how semantically related a topic's most probable words are (higher is better).
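Perplexity can be computed directly from the model's probabilities. The sketch below is a simplified version that takes the document-topic probabilities (`theta`) and topic-word probabilities (`phi`) as given; a proper held-out evaluation would first infer `theta` for the unseen documents. The function name and toy inputs are assumptions for illustration.

```python
import math

def perplexity(docs, theta, phi):
    """Perplexity of documents (lists of word ids) under given LDA probabilities."""
    log_likelihood = 0.0
    token_count = 0
    for d, doc in enumerate(docs):
        for w in doc:
            # Probability of the word: sum over topics of P(topic | doc) * P(word | topic).
            p_w = sum(theta[d][k] * phi[k][w] for k in range(len(phi)))
            log_likelihood += math.log(p_w)
            token_count += 1
    # Exponentiated negative average log-likelihood per token.
    return math.exp(-log_likelihood / token_count)

docs = [[0, 1, 2, 3], [3, 2, 1, 0]]
theta = [[0.5, 0.5], [0.9, 0.1]]
# Uninformative topics: every word equally likely under both topics.
phi = [[0.25, 0.25, 0.25, 0.25], [0.25, 0.25, 0.25, 0.25]]
score = perplexity(docs, theta, phi)
print(score)
```

With uniform topics over a four-word vocabulary the score comes out close to 4, which matches the intuition that perplexity is the effective number of equally likely word choices. A model that has learned useful topics should score lower than this baseline.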

Making Predictions on New Data

Once the model is trained, inference allows it to make predictions on new, unseen data. Given a new document, the model keeps the learned topic-word probabilities fixed and infers the document's topic proportions from the words it contains.
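One simple way to do this is sketched below: a smoothed EM-style "fold-in" that holds the topic-word probabilities fixed and iteratively re-estimates the topic proportions of the new document. This is a stand-in chosen for brevity, not the variational or Gibbs procedure a library would typically use, and the function name, vocabulary, and probabilities are hypothetical.

```python
def infer_topic_proportions(doc, phi, alpha=0.1, iterations=50):
    """Estimate topic proportions for a new document with fixed topic-word probabilities."""
    num_topics = len(phi)
    theta = [1.0 / num_topics] * num_topics  # start from an even mixture
    for _ in range(iterations):
        expected = [0.0] * num_topics
        for w in doc:
            # Responsibility of each topic for this word under the current mixture.
            resp = [theta[k] * phi[k][w] for k in range(num_topics)]
            total = sum(resp)
            for k in range(num_topics):
                expected[k] += resp[k] / total
        # Re-estimate the mixture from expected counts, smoothed by alpha.
        norm = len(doc) + num_topics * alpha
        theta = [(expected[k] + alpha) / norm for k in range(num_topics)]
    return theta

vocab = ["stocks", "market", "trading", "goal", "team", "match"]
# Hypothetical learned topics: topic 0 ~ finance, topic 1 ~ sports.
phi = [
    [0.30, 0.30, 0.30, 0.03, 0.04, 0.03],
    [0.03, 0.04, 0.03, 0.30, 0.30, 0.30],
]
new_doc = [vocab.index(w) for w in ["market", "stocks", "trading", "market", "team"]]
proportions = infer_topic_proportions(new_doc, phi)
print(proportions)
```

Because four of the five words are far more probable under the first topic, the inferred proportions lean heavily toward it.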

In a nutshell, inference in LDA allows the model to extract insightful patterns and relationships from the text data, making it a valuable tool for text mining and analysis.

Frequently Asked Questions (FAQs)

What is Latent Dirichlet Allocation (LDA) and how does it work?

LDA is a topic modeling algorithm that automatically discovers hidden topics in a collection of documents. It assumes each document is a mixture of topics, and each topic is characterized by a distribution over words.

Why is LDA useful in text analysis and natural language processing?

LDA helps in understanding the themes and topics present in a large text corpus. It can be used for document classification, recommendation systems, and information retrieval, enabling efficient analysis of textual data.

How do I interpret the output of LDA?

The output of LDA provides a set of topics, each represented by a distribution over words, along with a distribution over topics for each document. Examining the highest-probability words in each topic helps identify common themes and understand the main content within the documents.
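In practice, interpretation usually starts by listing the most probable words of each topic. A minimal sketch, assuming the topic-word probabilities are available as a list of lists and using a hypothetical vocabulary:

```python
def top_words(phi, vocab, n=3):
    """Return the n highest-probability words for each topic."""
    result = []
    for dist in phi:
        ranked = sorted(range(len(vocab)), key=lambda i: dist[i], reverse=True)
        result.append([vocab[i] for i in ranked[:n]])
    return result

vocab = ["stocks", "market", "trading", "goal", "team", "match"]
# Hypothetical topic-word probabilities for two topics.
phi = [
    [0.30, 0.35, 0.25, 0.03, 0.04, 0.03],
    [0.03, 0.04, 0.03, 0.25, 0.30, 0.35],
]
summary = top_words(phi, vocab)
print(summary)  # [['market', 'stocks', 'trading'], ['match', 'team', 'goal']]
```

A reader would likely label the first topic "finance" and the second "sports"; the labels come from human judgment, not from the model.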

What are the key assumptions made by LDA?

LDA assumes that each document is a mixture of a small number of topics, and each topic is characterized by a distribution over words. It also assumes that the topic distribution of each document and the word distribution of each topic are drawn from Dirichlet priors, and that the order of words within a document does not matter (the bag-of-words assumption).

How do I determine the optimal number of topics in LDA?

Determining the optimal number of topics in LDA is challenging. Various approaches, such as perplexity, coherence scores, and human judgment, can be used to evaluate and select the number of topics that best captures the underlying structure of the data.