What is Dependency Parsing?

Before looking at how dependency parsing is used, it helps to start with a definition.

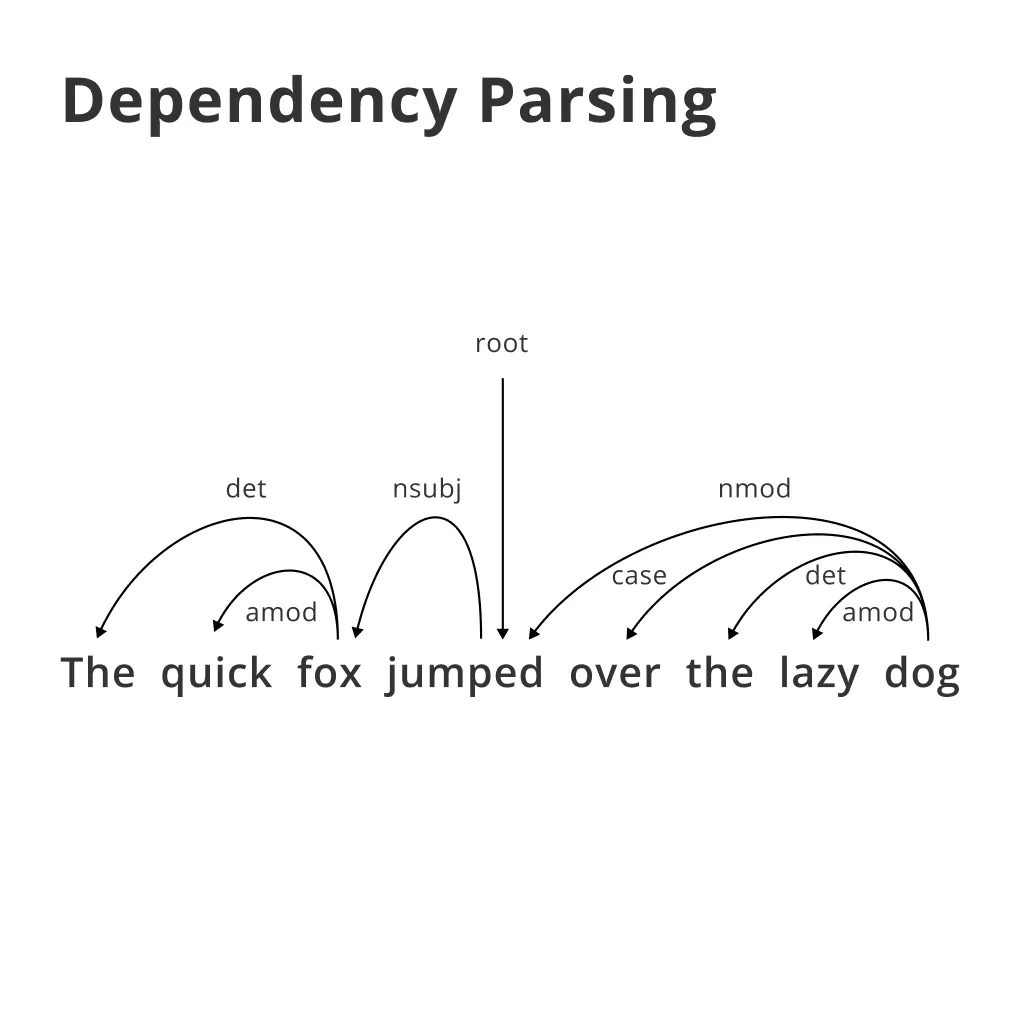

Dependency parsing is a technique from natural language processing and linguistics for determining the grammatical structure of a sentence. It identifies the relationships (dependencies) between "head" words and the words that modify those heads.

Essential Concepts

- Head

The central word of a sentence or phrase, which determines its syntactic type and semantic interpretation.

- Dependent

A word that modifies or affects the head's meaning. Dependents rely on their heads for their meaning and function.

- Dependency

The grammatical relationship between the head and its dependents.
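As a rough illustration of these three concepts, a dependency can be stored as a small record that names a head, a dependent, and the relation between them. The `Arc` class, the `dependents_of` helper, and the hand-annotated example sentence below are assumptions made for this sketch, not the output of any particular parser.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Arc:
    head: str       # the governing word
    dependent: str  # the word that modifies the head
    label: str      # the grammatical relation between the two


# Hand-annotated arcs for "The cat chased a mouse" (illustrative only).
arcs = [
    Arc("cat", "The", "det"),
    Arc("chased", "cat", "nsubj"),
    Arc("chased", "mouse", "dobj"),
    Arc("mouse", "a", "det"),
]


def dependents_of(head, arcs):
    """Return the words that attach directly to `head`."""
    return [arc.dependent for arc in arcs if arc.head == head]


print(dependents_of("chased", arcs))  # ['cat', 'mouse']
```

Storing words as strings keeps the sketch short; a real implementation would normally use token positions so that repeated words stay distinct.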

Parsing Trees and Grammar

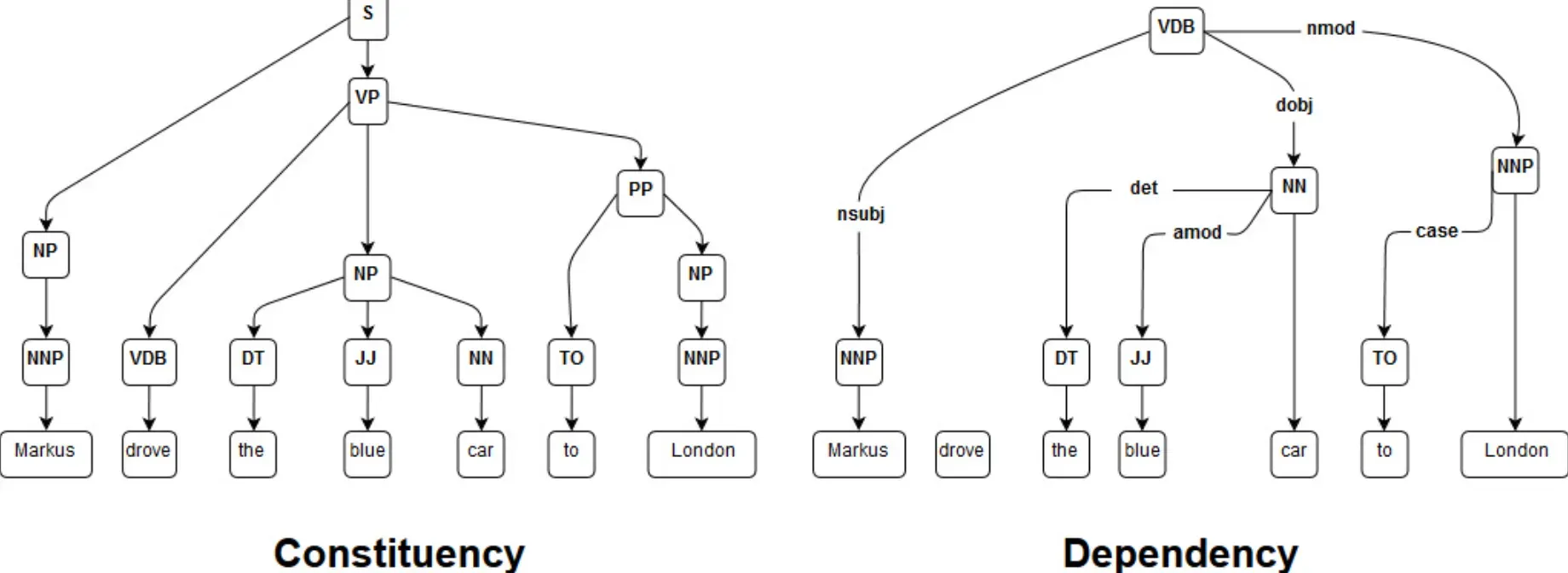

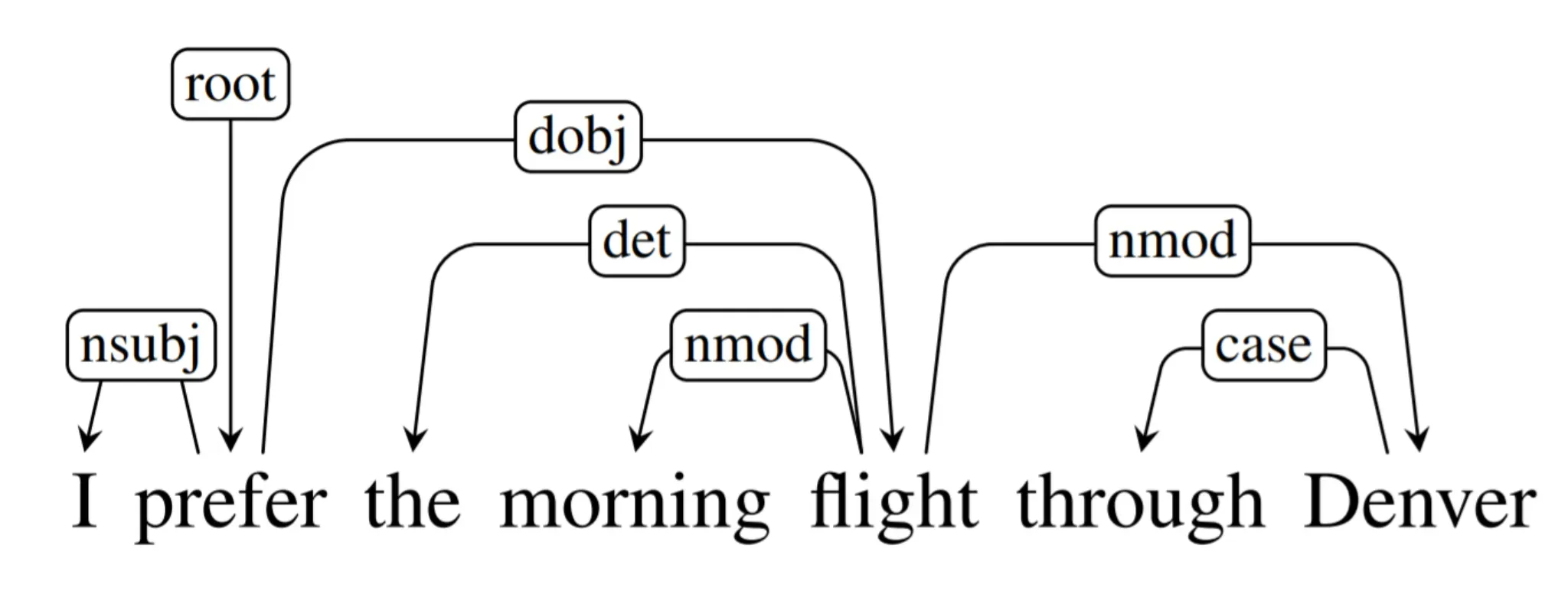

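A dependency parse is typically represented as a tree. The tree is rooted at the main predicate of the sentence (usually the main verb), every other word attaches to exactly one head, and the resulting hierarchy shows how the parts of the sentence relate to each other.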

In contrast to Constituency Parsing, which looks at syntactic constituents or groups of words, Dependency Parsing focuses more on the grammatical relationships between individual words.
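One compact way to store such a tree is a list of head positions, one per word. The sketch below assumes that encoding (with `-1` marking the root) and a hand-annotated sentence; the `children` and `render` helpers are names invented for this example.

```python
# Tokens and head positions for "The cat chased a mouse" (hand-annotated).
# heads[i] is the position of token i's head; -1 marks the root.
tokens = ["The", "cat", "chased", "a", "mouse"]
heads = [1, 2, -1, 4, 2]


def children(index, heads):
    """Positions of the tokens whose head is `index`."""
    return [i for i, head in enumerate(heads) if head == index]


def render(index, tokens, heads, depth=0):
    """Return the subtree under `index` as indented lines."""
    lines = ["  " * depth + tokens[index]]
    for child in children(index, heads):
        lines.extend(render(child, tokens, heads, depth + 1))
    return lines


root = heads.index(-1)
print("\n".join(render(root, tokens, heads)))
# chased
#   cat
#     The
#   mouse
#     a
```

Every line in the output is a single word, which is the key difference from a constituency tree, where the inner nodes would be phrases.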

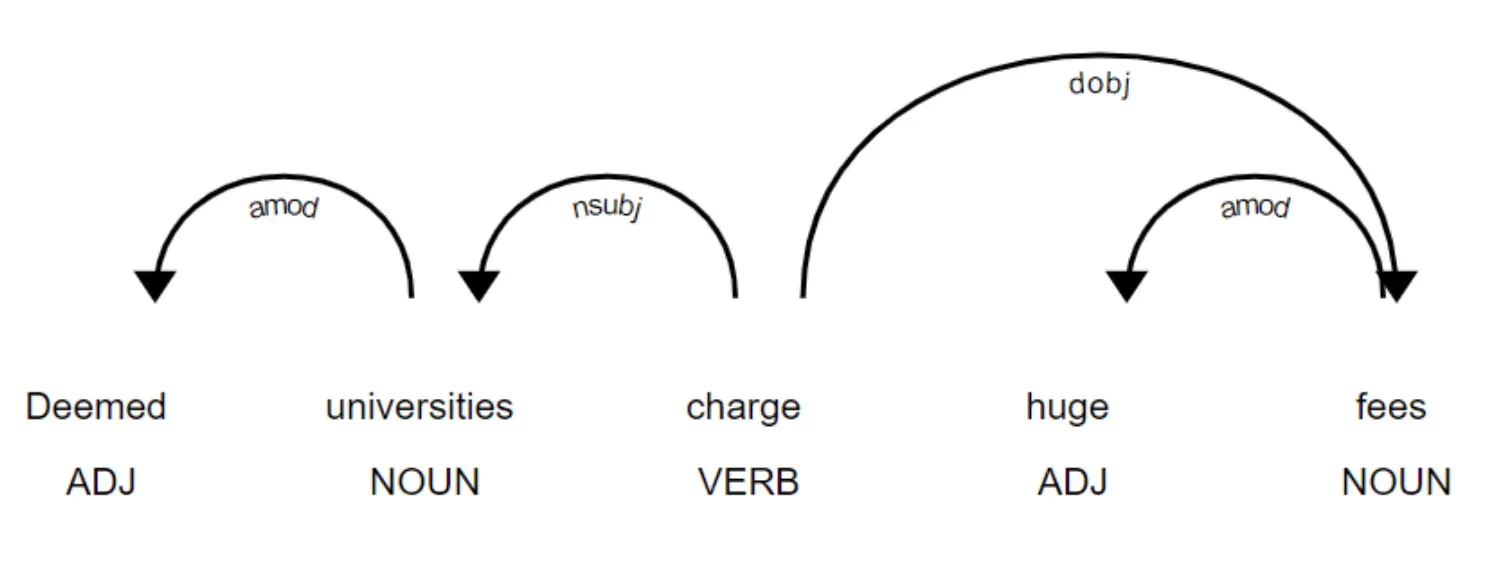

Types of Dependencies

Common types of dependencies include the nominal subject (nsubj), direct object (dobj, written obj in Universal Dependencies v2), and determiner (det), among many others. These labels state what kind of relationship holds between a head and its dependent.

Grammatical vs. Semantic Parsing

Though related, grammatical dependency parsing and semantic parsing are not the same. The former focuses on grammatical relationships based on syntax, while the latter ventures more into the meaning of sentences.

Who Uses Dependency Parsing?

Now, let's look at who often utilizes dependency parsing and for what reasons.

Linguists and Scholars

Linguists and language scholars use dependency parsing while studying sentence structure and grammar to understand language better.

NLP Engineers

Natural Language Processing (NLP) engineers use dependency parsing algorithms in machine learning models to help computers understand human language.

Search Engines

Search engines use dependency parsing to improve search results by better understanding the syntax of query requests.

Translation Software

Translation software can use dependency parsing to capture the grammatical structure of the input language, which helps preserve who did what to whom when word order differs between languages.

Chatbots

Chatbots employ dependency parsing to enhance their conversational capabilities by comprehending the user inputs more accurately.

Why Dependency Parsing?

So why exactly do we need dependency parsing?

Improved Machine Understanding of Language

Dependency parsing is crucial for teaching machines to understand and interpret human language, a key part of Natural Language Understanding (NLU).

Precision in Translation

This technique provides more precise machine translation by accurately understanding sentence structures.

Enhanced Information Retrieval

Dependency parsing allows better information retrieval because matching can take into account how the words in a query relate to each other, not just which keywords appear.

More Human-like Chatbots

For chatbots and AI assistants, dependency parsing supports more human-like responses by clarifying which words in a query are the action, its subject, and its object, which makes the user's intent easier to determine.

AI in e-Learning

In AI-powered e-Learning systems, dependency parsing helps personalize content by understanding user queries and providing relevant responses.

Where is Dependency Parsing Applied?

There are numerous applications of dependency parsing in modern-day technology.

Natural Language Understanding (NLU)

NLU arguably forms the biggest share of dependency parsing applications, from machine translation to text summarization.

Information Extraction

In information extraction systems, dependency parsing helps extract structured information from unstructured data sources like text.
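A minimal sketch of this idea: once a sentence has been parsed, subject-verb-object triples can be read off the labeled arcs. The tuple layout, the `extract_triples` helper, and the hand-annotated example sentence are assumptions for illustration; a real system would take its parse from a trained parser and would also expand each word to its full phrase.

```python
# Each token: (index, word, head index, relation); head 0 is the root.
# Hand-annotated parse of "Alice founded Acme" (illustrative only).
parse = [
    (1, "Alice", 2, "nsubj"),
    (2, "founded", 0, "root"),
    (3, "Acme", 2, "dobj"),
]


def extract_triples(parse):
    """Return (subject, verb, object) for every head with both relations."""
    words = {idx: word for idx, word, _, _ in parse}
    subjects, objects = {}, {}
    for idx, word, head, rel in parse:
        if rel == "nsubj":
            subjects[head] = word
        elif rel == "dobj":
            objects[head] = word
    return [(subjects[h], words[h], objects[h]) for h in subjects if h in objects]


print(extract_triples(parse))  # [('Alice', 'founded', 'Acme')]
```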

Sentiment Analysis

In sentiment analysis, dependency parsing is used to determine the subject of a sentiment and the polarity of that sentiment (positive, negative, or neutral).
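To make this concrete, the sketch below links each opinion word to the noun it describes by following `nsubj` arcs. The two-word lexicon, the tuple layout, and the hand-annotated parse are all hypothetical and exist only for this example.

```python
# Hypothetical two-word sentiment lexicon, for illustration only.
LEXICON = {"great": "positive", "terrible": "negative"}

# Each token: (index, word, head index, relation); head 0 is the root.
# Hand-annotated parse of "The battery is great but the screen is terrible".
parse = [
    (1, "The", 2, "det"),
    (2, "battery", 4, "nsubj"),
    (3, "is", 4, "cop"),
    (4, "great", 0, "root"),
    (5, "but", 9, "cc"),
    (6, "the", 7, "det"),
    (7, "screen", 9, "nsubj"),
    (8, "is", 9, "cop"),
    (9, "terrible", 4, "conj"),
]


def sentiment_targets(parse, lexicon):
    """Map each subject to the polarity of the opinion word it depends on."""
    words = {idx: word for idx, word, _, _ in parse}
    targets = {}
    for idx, word, head, rel in parse:
        if rel == "nsubj" and words.get(head) in lexicon:
            targets[word] = lexicon[words[head]]
    return targets


print(sentiment_targets(parse, LEXICON))
# {'battery': 'positive', 'screen': 'negative'}
```

A keyword-only approach would see both "great" and "terrible" in the sentence without knowing which noun each one applies to; the arcs supply that link.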

Text-to-Speech Systems

For text-to-speech systems, dependency parsing aids in reading out text in a manner that sounds more human-like, based on the understanding of sentence structures.

Question Answering Systems

Dependency parsing supports AI-driven question-answering systems by identifying what a question is asking about and how its words relate, which helps the system match the question to a relevant answer.

How Does Dependency Parsing Work?

A typical dependency parsing pipeline runs through the following steps.

Tokenization

The process begins with tokenization, where the input text is divided into individual words or tokens.

Part-of-Speech (POS) Tagging

In this step, each word is tagged with its appropriate part of speech (noun, verb, adjective, etc.).

Dependency Identification

Here is where the primary task of identifying dependencies, i.e., relationships between different words, takes place.

Tree Formation

A dependency tree is then formed, with the words as nodes and the dependencies as labeled edges between them.

Output

The output is a parsed structure of the input sentence, highlighting the grammatical relationships between its different parts.
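The steps above can be sketched with a transition-based approach, one common family of parsing algorithms. The code below uses the arc-standard transition system (SHIFT, LEFT, RIGHT) and applies a sequence of actions chosen by hand; in a trained parser a classifier would pick each action, typically using part-of-speech tags as features. The `apply_transitions` name, whitespace tokenization, and the example sentence are assumptions for this sketch, and part-of-speech tagging is skipped.

```python
def apply_transitions(tokens, actions):
    """Run arc-standard transitions and return (head, dependent) arcs."""
    stack, buffer, arcs = [], list(tokens), []
    for action in actions:
        if action == "SHIFT":      # move the next word onto the stack
            stack.append(buffer.pop(0))
        elif action == "LEFT":     # second-from-top depends on the top
            dependent = stack.pop(-2)
            arcs.append((stack[-1], dependent))
        elif action == "RIGHT":    # top depends on the second-from-top
            dependent = stack.pop()
            arcs.append((stack[-1], dependent))
        else:
            raise ValueError(f"unknown action: {action}")
    return arcs


# Tokenization: a plain whitespace split is enough for this sentence.
tokens = "The cat chased a mouse".split()

# Hand-written action sequence standing in for a trained classifier.
actions = [
    "SHIFT", "SHIFT", "LEFT",   # cat    -> The
    "SHIFT", "LEFT",            # chased -> cat
    "SHIFT", "SHIFT", "LEFT",   # mouse  -> a
    "RIGHT",                    # chased -> mouse
]

for head, dependent in apply_transitions(tokens, actions):
    print(f"{head} -> {dependent}")
```

After the last action only "chased" remains on the stack, which makes it the root of the tree. Words are used as identifiers here for readability; with repeated words, token positions would be needed instead.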

Best Practices in Dependency Parsing

Make sure to follow these best practices for optimal results with dependency parsing.

Use a Good Dependency Parser

For maximum accuracy, use a reliable and proven dependency parser like the one provided by Stanford NLP or spaCy.

Understand Your Application

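Understand the application at hand and choose the type of parsing accordingly. Projective parsing assumes that no two dependency arcs cross when drawn above the sentence, which fits most English sentences. Non-projective parsing also allows crossing arcs, which appear more often in languages with freer word order.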
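Whether a given tree is projective can be checked directly. The sketch below assumes each token's head is stored as a 1-based position with 0 for the root (an encoding chosen for this example) and reports a tree as projective when no two arcs cross. Both example parses are hand-annotated.

```python
def is_projective(heads):
    """heads[i] is the head of token i + 1 (1-based); 0 marks the root."""
    arcs = [(min(h, d), max(h, d)) for d, h in enumerate(heads, start=1)]
    for left_a, right_a in arcs:
        for left_b, right_b in arcs:
            # Two arcs cross when exactly one end of b lies inside a.
            if left_a < left_b < right_a < right_b:
                return False
    return True


# "The cat chased a mouse": no crossing arcs.
print(is_projective([2, 3, 0, 5, 3]))  # True

# "A hearing is scheduled on the issue today": "issue" attaches back to
# "hearing", crossing the arc from "scheduled" to "today".
print(is_projective([2, 4, 4, 0, 7, 7, 2, 4]))  # False
```

The pairwise check is quadratic in sentence length, which is fine for inspecting data; if a noticeable share of a treebank fails the check, a projective-only parser will not be able to reproduce those trees.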

Train Your Models

If you train your own parsing model, use enough annotated data from your target domain and measure parsing accuracy on held-out sentences before relying on the results.
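Parsing accuracy is commonly reported as the unlabeled attachment score (UAS, the share of tokens with the correct head) and the labeled attachment score (LAS, the share with the correct head and label). The sketch below computes both; the `attachment_scores` name, the `(head, label)` layout, and the sample predictions are assumptions for illustration.

```python
def attachment_scores(gold, predicted):
    """gold and predicted hold one (head, label) pair per token.

    Returns (UAS, LAS) as fractions between 0 and 1.
    """
    if len(gold) != len(predicted) or not gold:
        raise ValueError("need two non-empty lists of equal length")
    total = len(gold)
    uas = sum(g[0] == p[0] for g, p in zip(gold, predicted)) / total
    las = sum(g == p for g, p in zip(gold, predicted)) / total
    return uas, las


# Hand-annotated gold parse of "The cat chased a mouse" and a made-up prediction.
gold = [(2, "det"), (3, "nsubj"), (0, "root"), (5, "det"), (3, "dobj")]
pred = [(2, "det"), (3, "nsubj"), (0, "root"), (3, "det"), (3, "nsubj")]

print(attachment_scores(gold, pred))  # (0.8, 0.6)
```

In this example the fourth token has the wrong head and the fifth has the right head but the wrong label, so UAS is 4/5 and LAS is 3/5.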

Use the Right Tools

Utilize the right tools for visualization of your dependency trees for easier analysis.

Keep Updating Your Knowledge

Stay up-to-date with advances in the field of dependency parsing for improved parsing capabilities.

Challenges in Dependency Parsing

Despite its usefulness, dependency parsing comes with certain challenges.

Language Variations

Different languages have different syntax, which can pose challenges for dependency parsing.

Embedding Semantic Information

While dependency parsing primarily caters to syntax, embedding semantic information is a challenge that needs to be addressed.

Handling Ambiguities

Natural language is often ambiguous, which can make dependency parsing difficult.

Complexity in Recursive Structures

Recursive structures in sentences add to the complexity of dependency parsing.

Dealing with Unseen Data

When dealing with unseen or out-of-domain data, such as text from a genre that was absent from the training set, dependency parsers often lose accuracy.

Trends in Dependency Parsing

Lastly, let's look at some trends shaping the future of dependency parsing.

Deep Learning Models

Deep learning models are increasingly being used for dependency parsing to deliver improved parsing results.

Multilingual Dependency Parsing

Efforts are being made towards developing parsers that can work with multiple languages.

Enhanced Semantic Handling

Trends indicate a shift towards better handling of semantic information in dependency parsing.

Real-time Parsing

With improvements in computational capabilities, real-time dependency parsing is becoming a reality.

AI Integration

Tighter integration of dependency parsing with broader AI systems promises more accurate and context-aware parsing.

Frequently Asked Questions (FAQs)

How does dependency parsing uncover sentence structure?

Dependency parsing identifies the grammatical structure of a sentence by establishing relationships between "head" words and the words that modify those heads.

What makes dependency parsing crucial for natural language understanding?

It’s crucial because it reveals the syntactic structure that helps understand the role of each word in a sentence, enhancing machine comprehension.

Can dependency parsing support multiple languages?

Yes, dependency parsing models, especially those based on Universal Dependencies, are designed to support syntactic analysis across diverse languages.
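Universal Dependencies treebanks are distributed in the CoNLL-U format, where each token is one line of ten tab-separated columns (ID, FORM, LEMMA, UPOS, XPOS, FEATS, HEAD, DEPREL, DEPS, MISC). Because the format is the same for every language, one reader works across treebanks. The `read_conllu` helper and the sample sentence below are a minimal sketch written for this example, not an official reader; note that UD v2 labels the direct object `obj`.

```python
# A hand-written CoNLL-U fragment for "The cat chased a mouse".
SAMPLE = "\n".join([
    "# text = The cat chased a mouse",
    "1\tThe\tthe\tDET\t_\t_\t2\tdet\t_\t_",
    "2\tcat\tcat\tNOUN\t_\t_\t3\tnsubj\t_\t_",
    "3\tchased\tchase\tVERB\t_\t_\t0\troot\t_\t_",
    "4\ta\ta\tDET\t_\t_\t5\tdet\t_\t_",
    "5\tmouse\tmouse\tNOUN\t_\t_\t3\tobj\t_\t_",
])


def read_conllu(text):
    """Return (id, form, head, deprel) for each ordinary token line."""
    rows = []
    for line in text.splitlines():
        if not line or line.startswith("#"):
            continue  # skip blank lines and sentence-level comments
        cols = line.split("\t")
        if not cols[0].isdigit():
            continue  # skip multiword-token ranges (1-2) and empty nodes (1.1)
        rows.append((int(cols[0]), cols[1], int(cols[6]), cols[7]))
    return rows


for token_id, form, head, deprel in read_conllu(SAMPLE):
    print(token_id, form, head, deprel)
```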

How do dependency parsers handle ambiguous constructions?

Parsers use statistical or machine learning techniques to predict the most likely structure based on large corpora of annotated training data.

Is dependency parsing relevant to machine translation?

Indeed, understanding sentence structures through dependency parsing is vital in machine translation for maintaining the grammatical accuracy of the translated text.