What is API Rate Limiting?

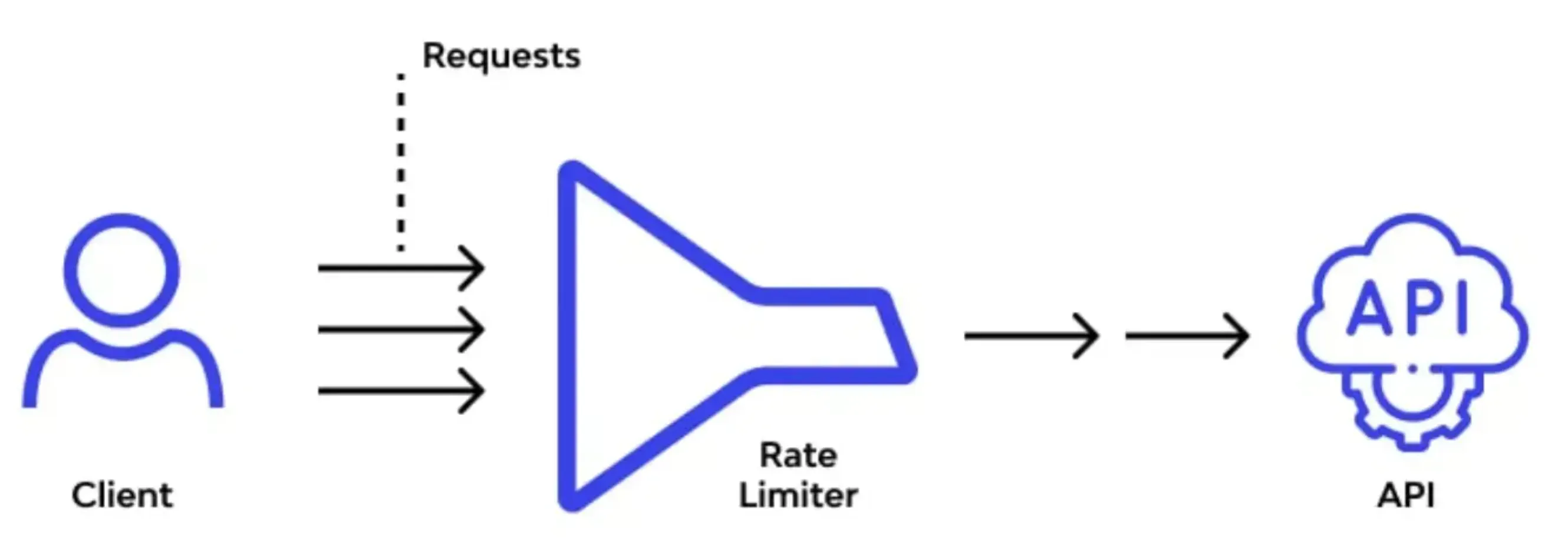

In simple terms, API rate limiting is a technique that API providers use to control the number of requests a client (user or application) can make to an API in a particular period.

The Significance of API Rate Limiting

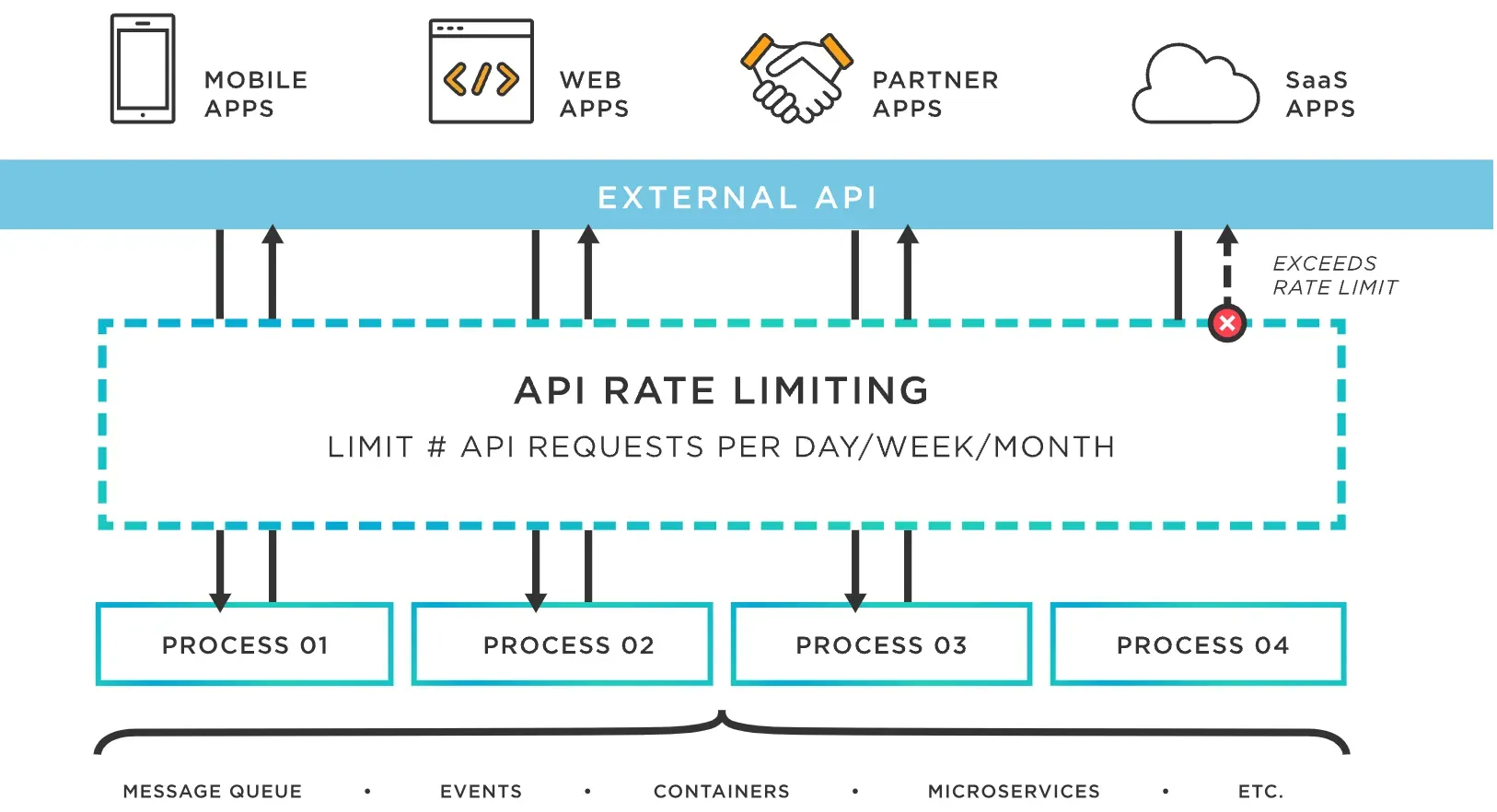

Rate limiting is essential for preventing API abuse, protecting server resources, supporting scalability, and maintaining quality of service.

It's about managing data traffic and creating a balanced environment that ensures fairness, stability, and protection against unexpected spikes and potential security threats.

How Does API Rate Limiting Work?

API rate limiting caps the number of requests a client can make in a given timeframe. Once a client hits this limit, the server rejects further requests until the limit resets, typically with an HTTP 429 (Too Many Requests) response indicating the rate limit has been exceeded.
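As a rough illustration of this behavior, the sketch below decides what a server might return for a request. The function name `respond` and the response bodies are hypothetical; only the 429 status code comes from the HTTP standard.

```python
from http import HTTPStatus
from typing import Dict, Tuple


def respond(requests_so_far: int, limit: int) -> Tuple[int, Dict[str, str]]:
    """Return a (status_code, body) pair for one incoming request.

    `requests_so_far` is the number of requests the client has already
    made in the current timeframe (a hypothetical counter kept elsewhere).
    """
    if requests_so_far >= limit:
        # The client has used up its allowance: reject with 429.
        return HTTPStatus.TOO_MANY_REQUESTS.value, {"error": "rate limit exceeded"}
    # Still under the limit: serve the request normally.
    return HTTPStatus.OK.value, {"status": "ok"}
```

With a limit of 100, `respond(99, 100)` would serve the request, while `respond(100, 100)` would reject it.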

Capitalizing on API Rate Limiting

Companies, developers, and users can benefit from API rate limiting. It protects applications from overwhelming demand, controls data transmission, manages resources efficiently, and ensures system stability.

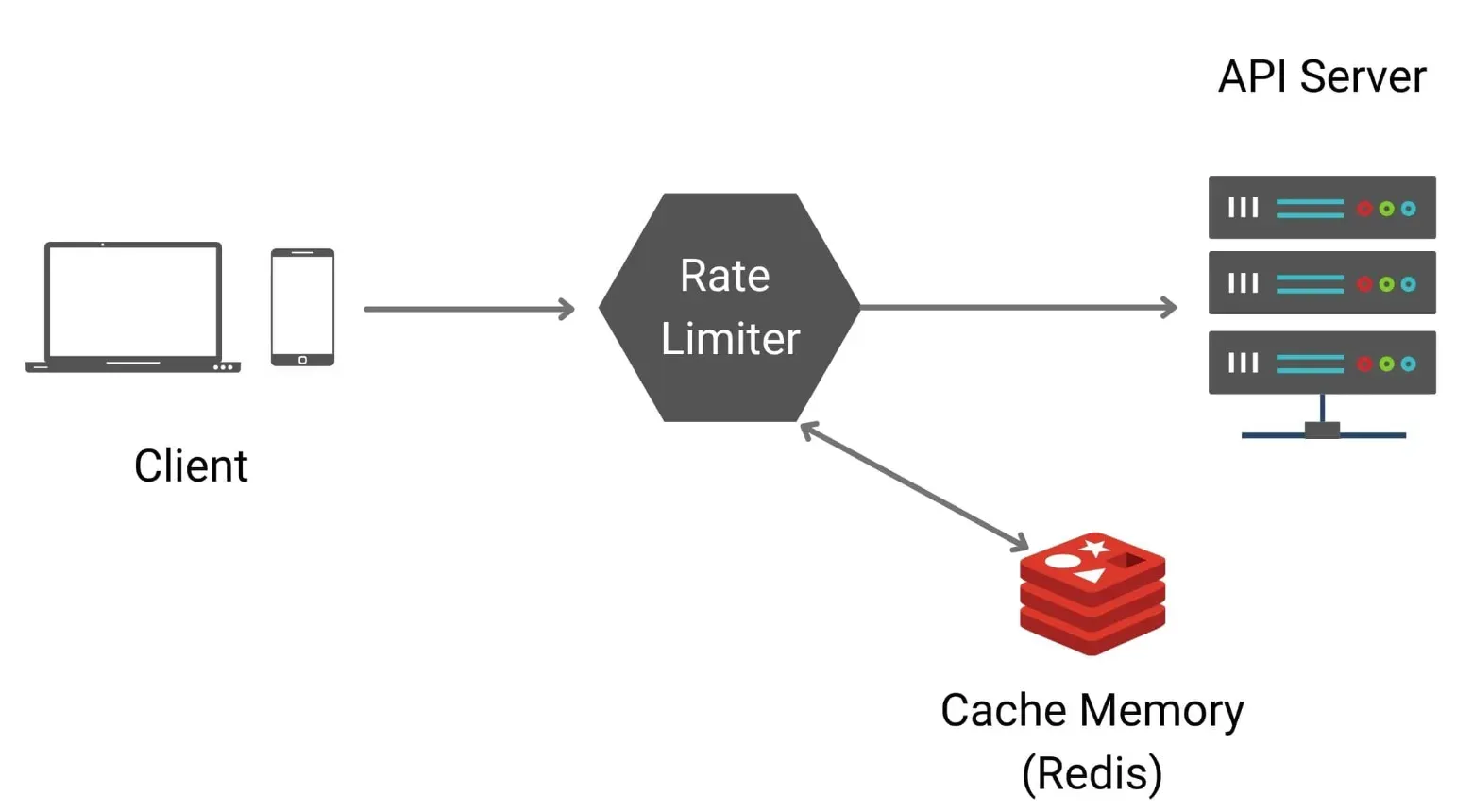

Implementing API Rate Limiting

After understanding the basics, we can now delve into the process of implementing API rate limiting.

Establishing a Rate Limit Policy

Determining the rate limit involves considering various factors, such as user requirements, server capacity, historical traffic patterns, and business objectives.

Configuring Rate Limiting Rules

API providers configure rules stipulating the number of allowed requests per client, often varying these between types of clients, request methods, and services.
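One way such rules might be expressed is a lookup table keyed by client type and request method. This is only a sketch: the tier names, the numbers, and the `rule_for` helper are all assumptions made for illustration, not values from any real provider.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    limit: int            # allowed requests per window
    window_seconds: int   # length of the window


# Hypothetical rules: (client tier, HTTP method) -> Rule
RULES = {
    ("free", "GET"): Rule(limit=60, window_seconds=60),
    ("free", "POST"): Rule(limit=10, window_seconds=60),
    ("premium", "GET"): Rule(limit=600, window_seconds=60),
    ("premium", "POST"): Rule(limit=100, window_seconds=60),
}

# Fallback for any combination not listed above (also an assumption).
DEFAULT_RULE = Rule(limit=30, window_seconds=60)


def rule_for(client_tier: str, method: str) -> Rule:
    """Pick the rule for a client tier and request method."""
    return RULES.get((client_tier, method.upper()), DEFAULT_RULE)
```

Keeping the rules in data rather than in code makes it easier to adjust limits later without touching the enforcement logic.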

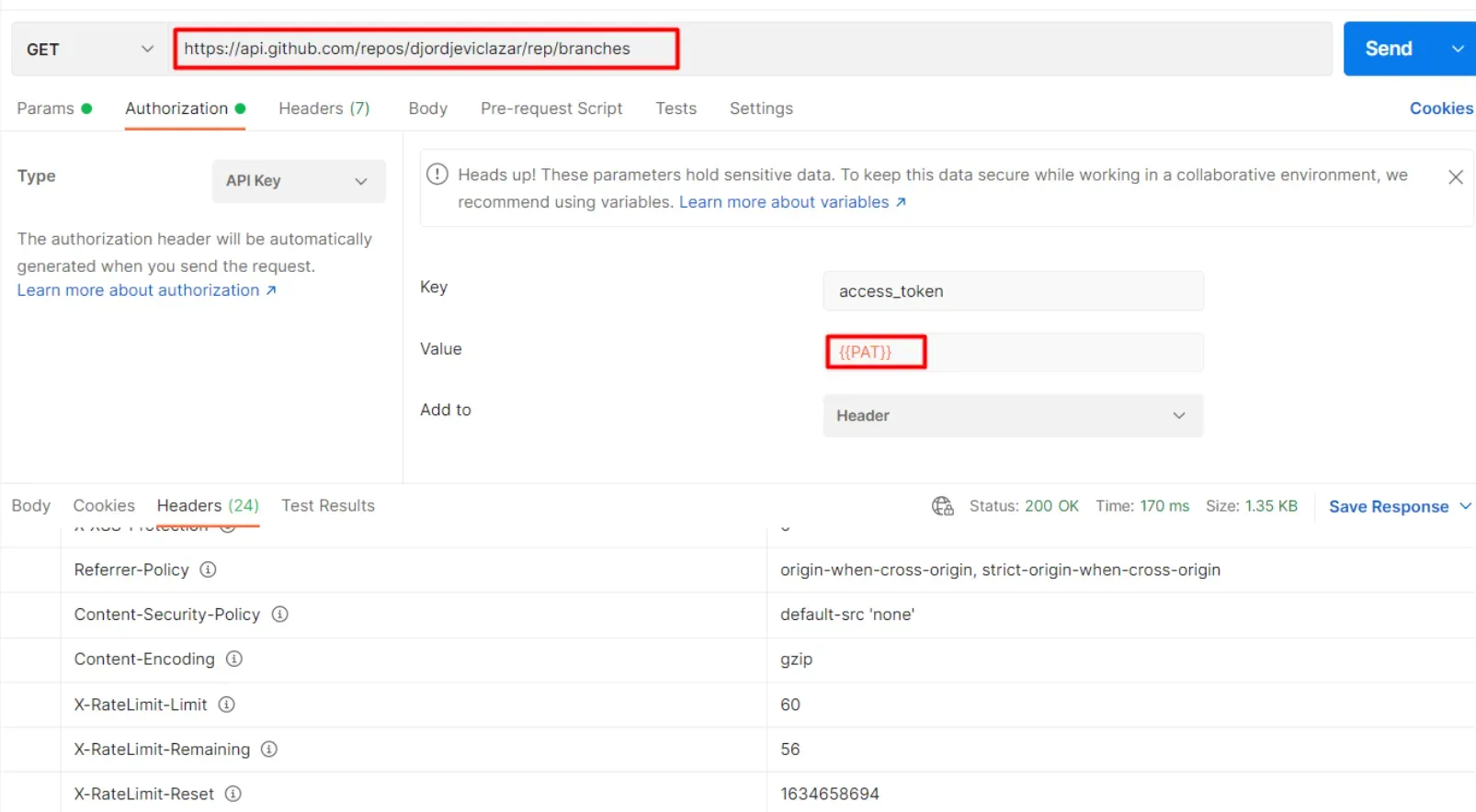

Conveying Rate Limit Information to Clients

To ensure transparency, providers often communicate rate limit info to clients through HTTP headers or error messages when clients exceed the established limit.
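A minimal sketch of building such headers is shown below. The `X-RateLimit-*` names are a widely used convention rather than a formal standard, and the helper `rate_limit_headers` and its parameters are hypothetical. `Retry-After` is a standard HTTP header that tells the client how many seconds to wait.

```python
import math
from typing import Dict


def rate_limit_headers(limit: int, remaining: int,
                       reset_epoch: float, now: float) -> Dict[str, str]:
    """Build response headers describing the client's rate limit status.

    `reset_epoch` is the Unix time at which the limit resets and `now`
    is the current Unix time; both are passed in by the caller.
    """
    headers = {
        "X-RateLimit-Limit": str(limit),
        "X-RateLimit-Remaining": str(max(0, remaining)),
        "X-RateLimit-Reset": str(int(reset_epoch)),
    }
    if remaining <= 0:
        # Only sent once the limit is exhausted, alongside a 429 response.
        headers["Retry-After"] = str(max(0, math.ceil(reset_epoch - now)))
    return headers
```

A client that reads these headers can slow itself down before it ever receives an error.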

Adjusting and Optimizing Rate Limiting

Based on what is learned from running rate limiting in practice, the policy can be fine-tuned over time to strike the right balance between usability and resource sustainability.

API Rate Limiting Techniques

Once we have a grip on the implementation, it's good to learn about the different types of API rate-limiting techniques.

Fixed Window Rate Limiting

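This method sets a fixed number of allowed requests within a specific timeframe. When the window resets, the counter starts again, regardless of when the requests were made. One consequence is that a client can send a full quota at the end of one window and another at the start of the next, briefly doubling the effective rate.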
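The fixed window idea can be sketched as a counter per client and window. The class name, the in-memory dictionary, and the explicit `now` argument are assumptions for illustration; a production limiter would usually keep counters in a shared store and expire old windows.

```python
from collections import defaultdict


class FixedWindowLimiter:
    def __init__(self, limit: int, window_seconds: int):
        self.limit = limit
        self.window_seconds = window_seconds
        # (client_id, window_index) -> requests counted in that window.
        # Old windows are never purged in this sketch.
        self.counters = defaultdict(int)

    def allow(self, client_id: str, now: float) -> bool:
        """Return True if the request at time `now` (seconds) is allowed."""
        window_index = int(now // self.window_seconds)
        key = (client_id, window_index)
        if self.counters[key] >= self.limit:
            return False
        self.counters[key] += 1
        return True
```

With a limit of 2 per 60 seconds, a third request at second 59 is rejected, but a request at second 60 falls into a new window and is allowed again.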

Sliding Window Rate Limiting

Also known as rolling window rate limiting, this method tracks requests over a time window that moves with each request. Because the count always covers the most recent interval, this technique is smoother and more flexible than the fixed window.
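One common way to realize a sliding window is to keep a log of recent request timestamps per client. The sketch below takes that approach; the class name and the explicit `now` argument are assumptions, and the per-request log costs more memory than a simple counter.

```python
from collections import defaultdict, deque


class SlidingWindowLimiter:
    def __init__(self, limit: int, window_seconds: float):
        self.limit = limit
        self.window_seconds = window_seconds
        # client_id -> timestamps of accepted requests, oldest first
        self.log = defaultdict(deque)

    def allow(self, client_id: str, now: float) -> bool:
        """Return True if the request at time `now` (seconds) is allowed."""
        timestamps = self.log[client_id]
        cutoff = now - self.window_seconds
        # Drop timestamps that have slid out of the window.
        while timestamps and timestamps[0] <= cutoff:
            timestamps.popleft()
        if len(timestamps) >= self.limit:
            return False
        timestamps.append(now)
        return True
```

Unlike the fixed window, a request is only allowed again once an earlier request is at least one full window old.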

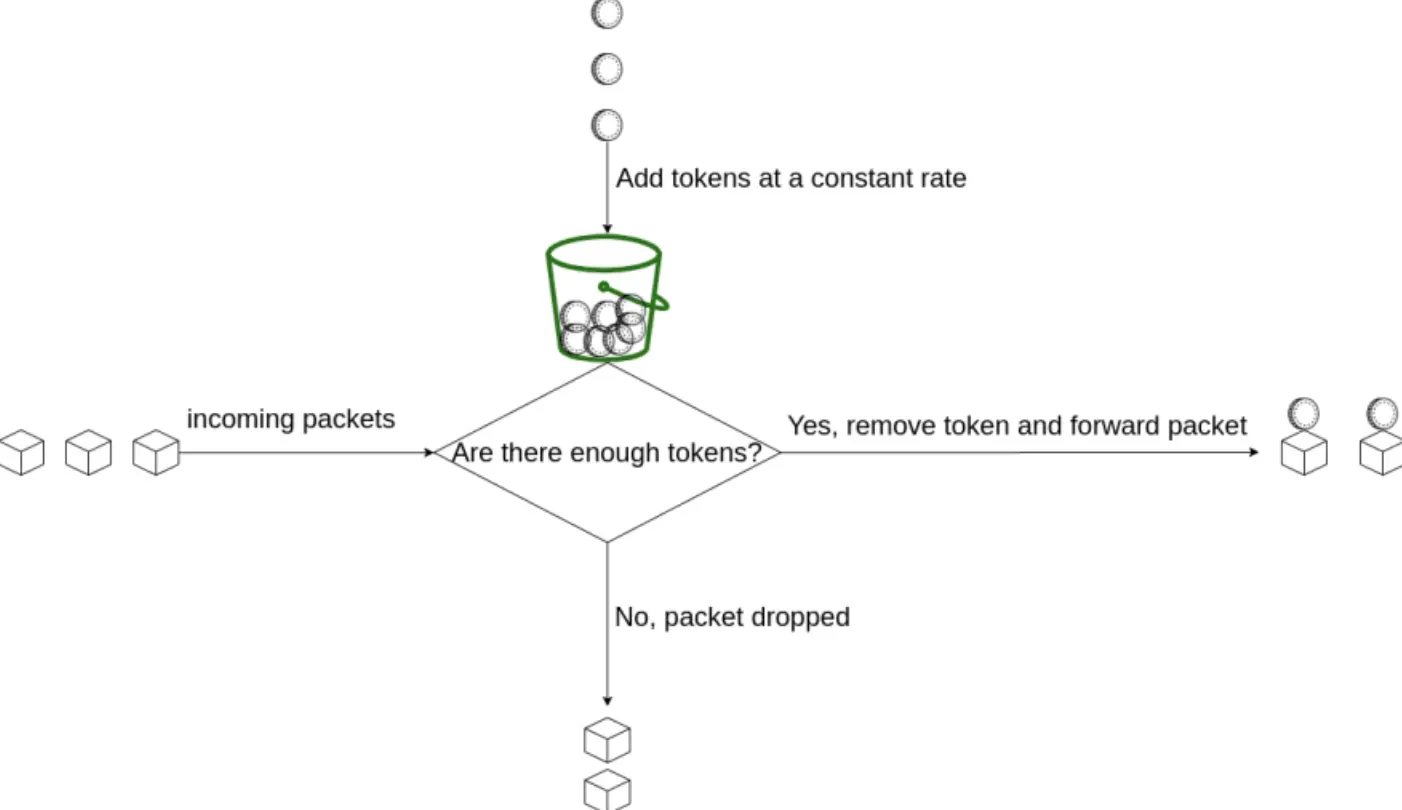

Token Bucket Rate Limiting

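This method uses a bucket of tokens that is refilled at a specified rate, up to a maximum capacity. Each request requires a token, and if the bucket runs out, the client must wait for new tokens to be generated. Because unused tokens accumulate, short bursts up to the bucket's capacity are allowed.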
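A minimal token bucket might look like the sketch below. The class name, the `cost` parameter, and the explicit `now` argument (assumed to never go backwards) are illustrative choices, not part of any particular library.

```python
class TokenBucket:
    def __init__(self, capacity: float, refill_rate: float, now: float = 0.0):
        self.capacity = capacity        # maximum tokens the bucket can hold
        self.refill_rate = refill_rate  # tokens added per second
        self.tokens = capacity          # start with a full bucket
        self.last_refill = now

    def allow(self, now: float, cost: float = 1.0) -> bool:
        """Return True if a request at time `now` (seconds) may proceed."""
        # Add the tokens earned since the last call, capped at capacity.
        elapsed = max(0.0, now - self.last_refill)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_rate)
        self.last_refill = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

A bucket with capacity 3 and a refill rate of 1 token per second lets a client burst three requests at once, then settles to one request per second.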

Leaky Bucket Rate Limiting

This method uses the analogy of a leaking bucket: incoming requests fill the bucket, and the bucket drains (leaks) at a constant rate as requests are processed. When the bucket is full, new requests overflow and are throttled or blocked until the bucket has enough room.
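The sketch below models the leaky bucket as a level that rises by one per accepted request and drains at a constant rate. It rejects overflowing requests rather than queueing them, which is one of several possible variants; the class name and the explicit `now` argument are assumptions.

```python
class LeakyBucket:
    def __init__(self, capacity: float, leak_rate: float, now: float = 0.0):
        self.capacity = capacity    # how many requests the bucket can hold
        self.leak_rate = leak_rate  # requests drained per second
        self.level = 0.0            # current fill level
        self.last_check = now

    def allow(self, now: float) -> bool:
        """Return True if a request at time `now` (seconds) fits in the bucket."""
        # Drain the bucket for the time that has passed since the last call.
        elapsed = max(0.0, now - self.last_check)
        self.level = max(0.0, self.level - elapsed * self.leak_rate)
        self.last_check = now
        if self.level + 1 > self.capacity:
            return False  # bucket would overflow: reject the request
        self.level += 1
        return True
```

Where the token bucket permits bursts, the leaky bucket smooths traffic toward its constant drain rate.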

Handling Common Challenges in API Rate Limiting

API rate limiting isn't bulletproof and can introduce problems of its own. Here are some common challenges and how to handle them:

Preventing Unfair Usage

Some users may abuse the system by connecting via multiple IP addresses or client tokens. API providers can prevent this by monitoring usage patterns and identifying misuse.

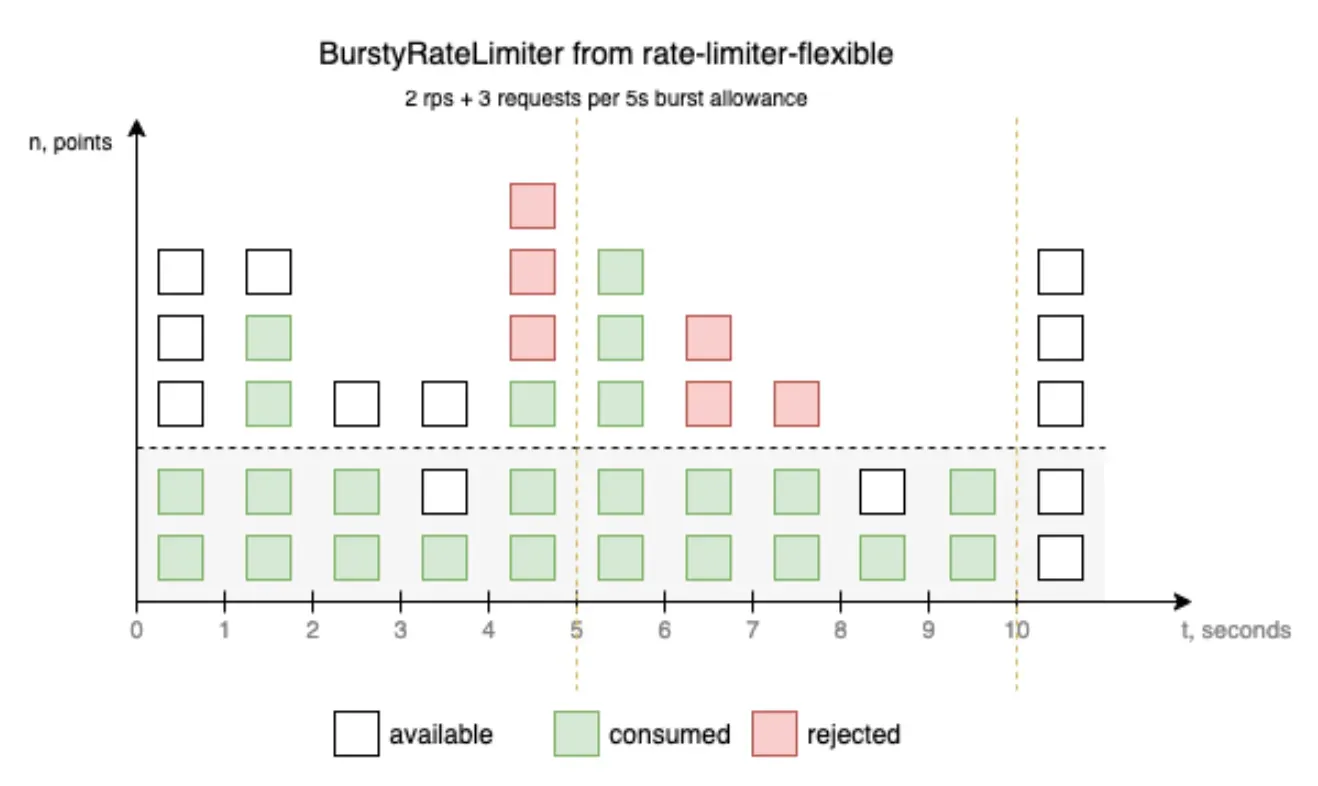

Overcoming Limitations in Burst Traffic

During periods of increased traffic, a sudden burst of requests can exceed the rate limit. This issue can be managed by implementing dynamic rate limiting or using a load balancer.

Avoiding Blocking Legitimate Traffic

Strict rate limiting can inadvertently restrict legitimate traffic. API providers must be careful to strike a balance and fine-tune their policy to minimize the inadvertent blocking of valid requests.

Handling Varying User Expectations

Some users may require a higher request limit than others. Customizing rate limits based on the user's need can help manage different expectations and requirements.

Best Practices for API Rate Limiting

Implementing rate limiting effectively requires some best practices to ensure fairness and usability.

Being Transparent with Users

Communicating your rate limit policy to users is essential. Informing them about their current rate limit status via HTTP headers, error messages, or even a dedicated API endpoint can enhance the user experience.

Implementing Graceful Degradation

Instead of blocking a user entirely once they hit the rate limit, you can choose to slow down their requests or lower the priority. This method is often useful to preserve usability while still controlling resource usage.
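One simple way to slow requests down rather than reject them is to add a delay that grows with how far the client is over its limit. The function below is a sketch under that assumption; `throttle_delay` and its default values are hypothetical.

```python
def throttle_delay(requests_in_window: int, limit: int,
                   base_delay: float = 0.5, max_delay: float = 5.0) -> float:
    """Return how many seconds to delay a request before serving it.

    Requests within the limit are served immediately. Each request over
    the limit adds `base_delay` seconds, capped at `max_delay`.
    """
    overage = requests_in_window - limit
    if overage <= 0:
        return 0.0
    return min(max_delay, base_delay * overage)
```

The server would wait for the returned number of seconds before handling the request, so heavy users are slowed down while everyone else is unaffected.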

Studying Usage Patterns

Keeping an eye on how the users interact with your API can help shape your rate limiting policy. Understanding peak usage times, most accessed endpoints, and typical user behavior will help in setting effective and efficient rate limits.

Using Third-Party Middleware

Several frameworks and libraries provide rate limiting out of the box, so you can add it without coding it from scratch.

Well-Known Examples of API Rate Limiting

To better understand API rate limiting, let's look at a few popular platforms and how they manage it:

GitHub API

The GitHub API allows 5,000 requests per hour for authenticated requests, while for unauthenticated requests the limit is considerably lower at 60 requests per hour.

Twitter API

Depending on the API endpoints, Twitter's rate limit ranges from 15 to 900 requests per 15-minute window, further divided based on user-level and app-level access.

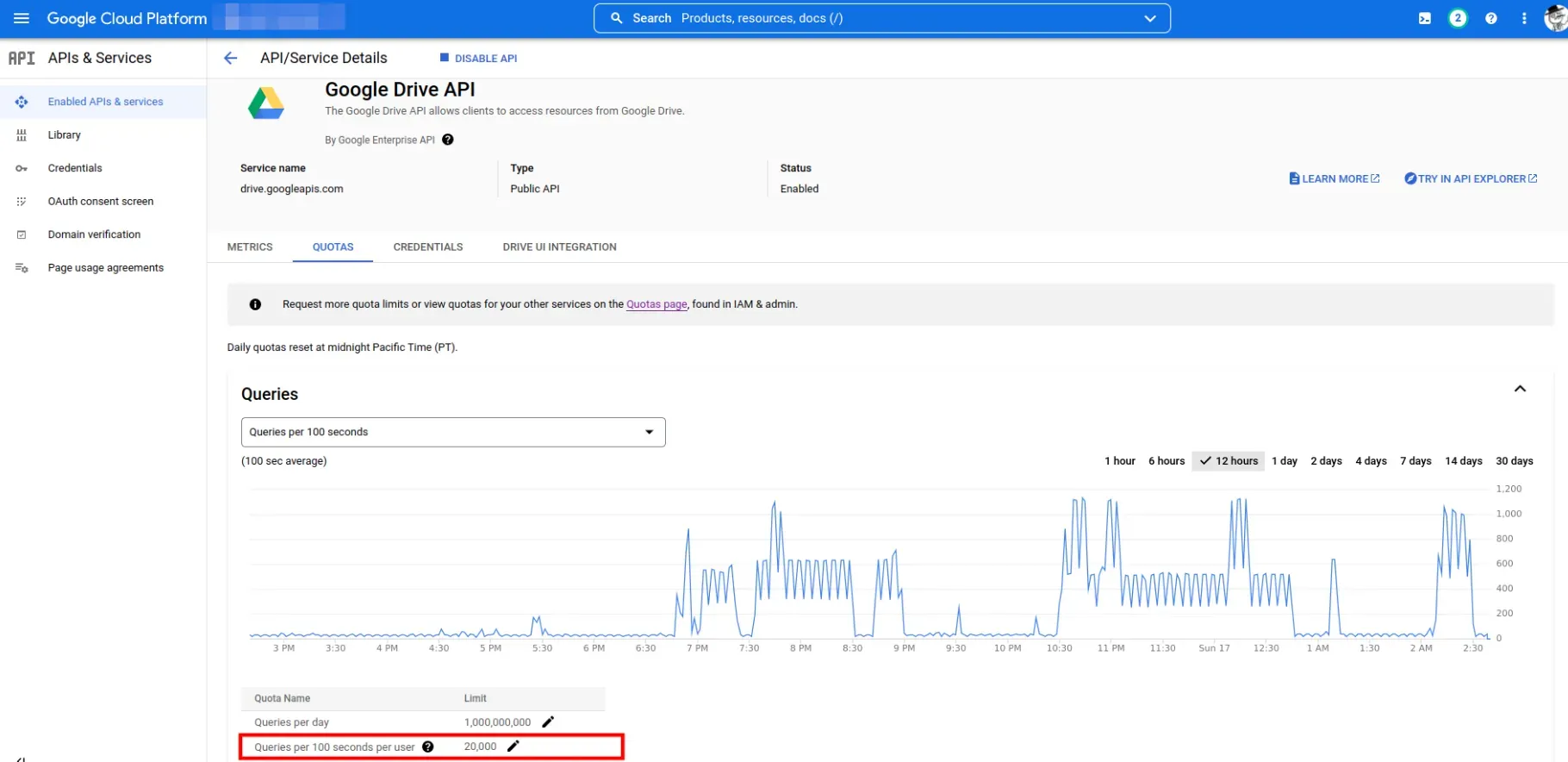

Google Drive API

The Google Drive API applies a layered set of user, application, and method-specific quotas, and partners can request increases to them.

Facebook Graph API

The Facebook Graph API calculates rate limits based on call count, time, and CPU usage, and varies them based on factors such as the app's history or user feedback.

Frequently Asked Questions (FAQs)

What is API rate limiting?

API rate limiting is the technique of controlling the number of requests a user or application can make to an API within a certain timeframe.

Why is API rate limiting necessary?

API rate limiting is necessary to ensure stability, combat abuse, manage server resources, maintain quality of service, and handle the scalability of an API.

What methods can be used for rate limiting?

Typical API rate limiting methods include fixed window, sliding window, token bucket, and leaky bucket rate limiting.

How are users informed about rate limit status?

Users are usually informed about their rate limit status through HTTP headers or error messages when a limit is exceeded.

Which popular platforms use API rate limiting?

Many well-known platforms employ API rate limiting, including GitHub, Twitter, Google Drive, and Facebook.