Introduction

Prompt engineering refers to the art of carefully crafting the text prompts that users give to large language models (LLMs) to produce desired responses.

As LLMs rapidly transform industries from creative writing to computer programming, effective prompt design is what unlocks their full potential.

Recent research by OpenAI found that well-designed prompts can more than double model accuracy, reduce errors by 47% and cut unsafe responses by 60%.

The prompts that organizations use also have a major impact on AI ethics and safety, because models tend to emulate the examples they are given.

Survey data from Stratifyd shows that 78% of executives now view prompt development as crucial to using LLMs strategically. With Reports and Data estimating that the global conversational AI market will reach $102 billion by 2030, skill in prompt engineering is what will separate the trailblazers from the rest.

However, prompts must be tailored not just to different use cases but also to different models, such as Claude, GPT-3, PaLM, and LaMDA, based on their underlying architectures. Techniques like examples, demonstrations, and contextual framing work better for some models than for others.

In this guide, we’ll break down the elements of effective prompts, strategies for customizing prompts for leading LLMs, ethical considerations, and real-world business applications that drive ROI. Let’s dive into mastering this vital skill!

What is Prompt Engineering?

Prompt engineering is a critical concept in the world of AI that refers to the process of crafting effective instructions or prompts for AI models.

Understanding Different Model Strengths

AI models have come a long way in recent years, with significant advancements in natural language processing, image generation, and code generation.

Let's take a closer look at some of the strengths of different types of models.

Text-Based Powerhouses: GPT-3, Jurassic-1 Jumbo, and their Kin

GPT-3 and comparable large-scale models, such as AI21 Labs' Jurassic-1 Jumbo, are some of the most powerful text-based models for natural language processing.

These models excel in generating text that sounds natural and human-like, making them ideal for a wide range of text-related applications.

Code Whisperers: LaMDA, Codex, and the Rise of Code Generation

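Models such as LaMDA, Google's dialogue-focused language model, and Codex, an OpenAI descendant of GPT-3 fine-tuned on publicly available code, are making significant strides in code generation.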

These models can interpret natural language descriptions of code and generate functional code snippets, and they have the potential to revolutionize the way software is written.

Creative Visionaries: DALL-E 2, Imagen, and the World of Image Generation

DALL-E 2 and Imagen are models that excel at image generation, creating visually stunning images from textual descriptions.

These models can generate imaginative, highly original images, potentially revolutionizing art, design, and advertising.

Tailoring the Prompt: Strategies for Each Model Type

Different types of AI models have varying strengths and capabilities. To optimize their performance and generate desired outputs, it's important to tailor the prompts accordingly.

Let's explore strategies for prompt customization for different model types.

Guiding Creative and Factual Writing

When working with text-based models, such as GPT-3 and Jurassic-1 Jumbo, you can guide them to generate creative and factual writing by providing specific instructions.

For creative writing, you can ask the model to generate a story with specified characters, settings, and themes.

For factual writing, you can ask the model to summarize a given text or answer specific questions based on a provided context.
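
As a rough illustration, the two kinds of instructions above can be written as small prompt templates. This is a minimal sketch: the function names, field labels, and the "I don't know" fallback are illustrative choices, not a format any particular model requires.

```python
from typing import List


def creative_prompt(characters: List[str], setting: str, theme: str, words: int = 300) -> str:
    """Ask for a story and spell out the characters, setting, theme, and length."""
    return (
        f"Write a short story of about {words} words.\n"
        f"Characters: {', '.join(characters)}\n"
        f"Setting: {setting}\n"
        f"Theme: {theme}\n"
    )


def factual_prompt(context: str, question: str) -> str:
    """Ask a question and restrict the answer to the supplied context."""
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, reply \"I don't know.\"\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


print(creative_prompt(["a retired lighthouse keeper", "a stray cat"],
                      "a storm-battered island", "unexpected friendship"))
print(factual_prompt("The Eiffel Tower is in Paris.", "Where is the Eiffel Tower?"))
```

Telling the model what to do when the context lacks an answer is one common way to discourage it from inventing facts in factual tasks.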

Precise Instructions for Code Generation

Models like LaMDA and Codex are adept at generating functional code. To get accurate results, it's crucial to provide precise instructions.

Clearly mention the programming language and specify the input-output requirements. Break down the problem into smaller steps or provide an example solution.

This helps the model understand the task at hand and generate the desired code.
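
Here is one possible way to package those pieces (language, signature, input-output requirements, numbered steps, and an example) into a single prompt. It is a sketch under the assumption that a plain-text prompt is sent to a code-capable model; the `code_prompt` helper and its field names are hypothetical.

```python
from typing import List, Optional


def code_prompt(language: str, task: str, signature: str, inputs: str, outputs: str,
                steps: List[str], example: Optional[str] = None) -> str:
    """Assemble a code-generation prompt that states language, I/O, and steps."""
    lines = [
        f"Write a {language} function for this task: {task}",
        f"Signature: {signature}",
        f"Input: {inputs}",
        f"Output: {outputs}",
        "Approach:",
    ]
    # Number the sub-steps so the model can follow them in order.
    lines += [f"{i}. {step}" for i, step in enumerate(steps, start=1)]
    if example:
        lines += ["Example:", example]
    lines.append("Return only the code, without explanation.")
    return "\n".join(lines)


prompt = code_prompt(
    language="Python",
    task="count how often each word appears in a text",
    signature="def word_counts(text: str) -> dict[str, int]",
    inputs="a string of English text",
    outputs="a dict mapping each lowercase word to its count",
    steps=["Lowercase the text", "Split on whitespace", "Tally the words"],
    example='word_counts("a b a") == {"a": 2, "b": 1}',
)
print(prompt)
```

Including a concrete input-output example gives you something to test the generated code against, not just a hint for the model.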

Crafting Prompts for Compelling Visuals

When working with image generation models like DALL-E 2 and Imagen, crafting prompts that evoke strong visual imagery is key.

Use descriptive language to clearly communicate the desired visual elements. Specify colors, shapes, textures, and any other important details.

You can also provide reference images or explain the mood or concept behind the visual in order to guide the model's generation process effectively.
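
A simple way to apply this advice consistently is to keep the subject and each visual detail as separate fields and join them into one descriptive prompt. The sketch below assumes a comma-separated text prompt, a style many image models accept; the `image_prompt` helper and its phrasing are illustrative only, and reference images would be supplied through whatever separate mechanism your tool offers.

```python
from typing import Optional, Sequence


def image_prompt(subject: str, style: Optional[str] = None, colors: Sequence[str] = (),
                 textures: Sequence[str] = (), lighting: Optional[str] = None,
                 mood: Optional[str] = None) -> str:
    """Join the subject and any optional visual details into one comma-separated prompt."""
    parts = [subject]
    if style:
        parts.append(f"{style} style")
    if colors:
        parts.append("color palette of " + ", ".join(colors))
    if textures:
        parts.append("textures: " + ", ".join(textures))
    if lighting:
        parts.append(f"{lighting} lighting")
    if mood:
        parts.append(f"{mood} mood")
    return ", ".join(parts)


print(image_prompt("a red fox sleeping in fresh snow", style="watercolor",
                   colors=["burnt orange", "pale blue"], lighting="soft morning",
                   mood="calm"))
```

Keeping the details as separate arguments makes it easy to vary one element, such as the lighting, while holding the rest of the prompt fixed.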

Best Practices and Pro Tips To Master Prompt Engineering

To get the most out of prompt engineering, here are some best practices and pro tips to consider.

Clarity is Key: Avoiding Ambiguity and Setting Clear Expectations

Avoid ambiguous instructions that could lead to unintended outputs. Be as precise as possible and anticipate potential misunderstandings.

Clearly state the required format, output length, or any other specific requirements. Setting clear expectations ensures that the model understands the task accurately and generates appropriate responses.
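
For example, a prompt can state the length limit and the exact output format, and your code can then check that the reply actually follows it. This is a minimal sketch assuming you ask for JSON with two hypothetical keys, `summary` and `sentiment`; the validation uses only Python's standard `json` module.

```python
import json

# Spell out the task, the length limit, and the exact output format.
PROMPT = (
    "Summarize the customer review below in at most two sentences.\n"
    "Respond with JSON only, using exactly two keys:\n"
    '"summary" (a string) and "sentiment" ("positive", "neutral", or "negative").\n\n'
    "Review: {review}"
)

ALLOWED_SENTIMENTS = {"positive", "neutral", "negative"}


def check_response(raw: str) -> dict:
    """Parse a model reply and raise ValueError if it breaks the requested format."""
    data = json.loads(raw)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict) or set(data) != {"summary", "sentiment"}:
        raise ValueError(f"expected keys 'summary' and 'sentiment', got: {data!r}")
    if data["sentiment"] not in ALLOWED_SENTIMENTS:
        raise ValueError(f"unexpected sentiment: {data['sentiment']!r}")
    return data


prompt = PROMPT.format(review="Arrived a day early, but the box was crushed.")
print(prompt)
print(check_response('{"summary": "Fast delivery, damaged box.", "sentiment": "neutral"}'))
```

When a reply fails the check, you can retry or feed the error message back to the model, which turns vague expectations into something you can enforce.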

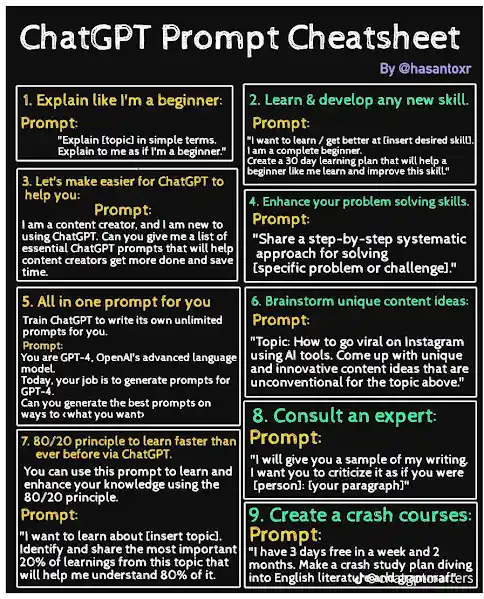

Examples Speak Volumes: Showcasing Effective Prompts for Different Tasks

Providing examples can be incredibly helpful in guiding the model. Showcase well-crafted prompts for different tasks to demonstrate the desired input-output relationship.

Models can often learn from patterns and examples, making it easier for them to generate accurate and relevant responses. Use concrete and relatable examples to develop a shared understanding with the model.
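
With chat-style models, a common way to show the desired input-output relationship is to write each example as a user turn followed by the ideal assistant reply. The sketch below uses the role/content message convention shared by several chat APIs; whether a "system" role is supported, and how it is passed, varies by provider, so treat the exact shape as an assumption. The ticket labels are made up for illustration.

```python
from typing import Dict, List, Tuple


def build_messages(instruction: str, examples: List[Tuple[str, str]],
                   query: str) -> List[Dict[str, str]]:
    """Turn (input, ideal output) pairs into alternating user/assistant turns."""
    messages = [{"role": "system", "content": instruction}]
    for user_text, ideal_reply in examples:
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": ideal_reply})
    # The real question goes last, so the model continues the established pattern.
    messages.append({"role": "user", "content": query})
    return messages


examples = [
    ("My card was charged twice.", "billing"),
    ("The app crashes when I open settings.", "technical"),
    ("Can I change my delivery address?", "shipping"),
]
msgs = build_messages(
    "Classify each support ticket as billing, technical, or shipping. Reply with the label only.",
    examples,
    "I was billed after cancelling.",
)
for m in msgs:
    print(f"{m['role']:>9}: {m['content']}")
```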

Embrace Iteration: Refining your Prompts for Optimal Results

Prompt engineering is an iterative process. Refine and improve your prompts based on the model's responses.

Experiment with different phrasing and instructions to find what works best. Continuously test and evaluate the outputs to identify areas for improvement.

By iterating and refining the prompts, you can achieve better outcomes and enhance the overall performance of the model.
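
One lightweight way to make iteration systematic is to score several prompt variants on the same test inputs and keep the best one. In this sketch the scoring rule (does the output mention the required terms?) is deliberately simple, and `fake_model` is a hypothetical stand-in so the loop runs offline; in practice you would call your model and use a task-appropriate metric.

```python
from typing import Callable, List, Tuple


def score(output: str, required: List[str]) -> float:
    """Fraction of required terms that appear in the output (case-insensitive)."""
    text = output.lower()
    return sum(term.lower() in text for term in required) / len(required)


def best_prompt(variants: List[str], model: Callable[[str], str],
                test_inputs: List[str], required: List[str]) -> Tuple[float, str]:
    """Average each template's score over the test inputs and return the best one."""
    results = []
    for template in variants:
        scores = [score(model(template.format(text=x)), required) for x in test_inputs]
        results.append((sum(scores) / len(scores), template))
        print(f"{results[-1][0]:.2f}  {template}")
    return max(results, key=lambda r: r[0])  # the earliest template wins ties


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call, so the loop runs offline."""
    if "key points" in prompt:
        return "Key points: price, delivery, support"
    return "It was fine."


variants = [
    "Summarize this review: {text}",
    "List the key points (price, delivery, support) in this review: {text}",
]
best = best_prompt(variants, fake_model, ["review A", "review B"], ["price", "delivery", "support"])
print("Best:", best[1])
```

Keeping the test inputs fixed across rounds is what makes the scores comparable from one revision of the prompt to the next.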

Ethical Considerations: Responsible Use of Prompts and Potential Biases

We must consider potential biases in prompts and outputs. Biased prompts can lead to biased responses.

Be mindful of the data used to train the models and the potential impact this can have on the outputs.

Strive for fairness, inclusivity, and representation in your prompts to reduce the risk of harmful biases in the model's outputs and to promote responsible AI development.
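
One practical check for biased outputs is counterfactual testing: keep the prompt identical except for a single attribute, such as a name, and see whether the answer changes. The sketch below is a minimal version of that idea; `biased_model` is a hypothetical stub written to misbehave so the check has something to catch.

```python
from typing import Callable, Dict, List


def counterfactual_check(template: str, slot: str, values: List[str],
                         model: Callable[[str], str]) -> Dict[str, str]:
    """Fill one slot with each value; return the outputs if they differ, else {}."""
    outputs = {v: model(template.replace("{" + slot + "}", v)) for v in values}
    return outputs if len(set(outputs.values())) > 1 else {}


def biased_model(prompt: str) -> str:
    """Hypothetical model stub that (wrongly) reacts to a name in the prompt."""
    return "Reject" if "Maria" in prompt else "Interview"


template = "Candidate {name} has 5 years of Python experience. Should we interview them?"
print(counterfactual_check(template, "name", ["James", "Maria", "Wei"], biased_model))
```

Real model outputs vary from run to run, so in practice you would compare extracted labels or scores over several samples rather than raw strings.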

Exploring Novel Techniques and Tools

Prompt engineering is constantly evolving, and novel techniques and tools are emerging to enhance its effectiveness.

Let's explore some of these advancements that can take your prompt engineering to the next level.

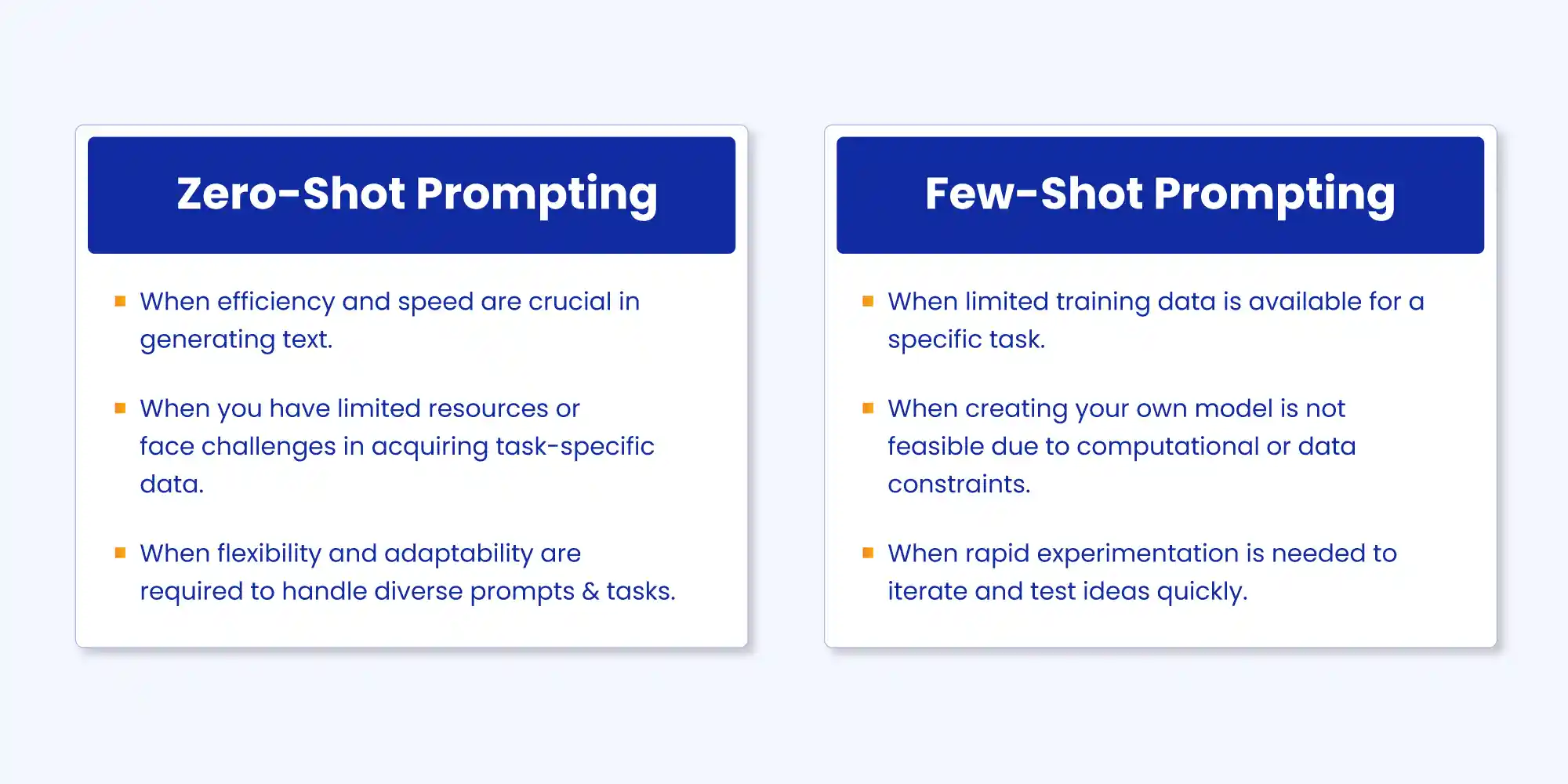

Zero-Shot and Few-Shot Prompting: Utilizing Minimal Information for Complex Tasks

Zero-shot and few-shot prompting techniques allow you to accomplish complex tasks with minimal information.

With zero-shot prompting, you can prompt the model to perform a task it was not explicitly trained on.

For example, you can ask a language model to translate from one language to another without including any example translations in the prompt or fine-tuning it for translation.

Few-shot prompting, on the other hand, involves providing a small number of task-specific examples in the prompt to guide the model's responses. These techniques are powerful when you need flexibility and adaptability in your prompts.
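
The difference between the two is easiest to see side by side. In this sketch both prompts share the same instruction; the few-shot version simply adds a couple of solved examples in the same "English / French" layout the model is expected to continue. The layout itself is an illustrative choice, not a required format.

```python
from typing import List, Tuple

INSTRUCTION = "Translate the following English text into French."


def zero_shot(text: str) -> str:
    """Instruction only: the model relies entirely on what it learned in training."""
    return f"{INSTRUCTION}\n\nEnglish: {text}\nFrench:"


def few_shot(text: str, examples: List[Tuple[str, str]]) -> str:
    """Instruction plus a handful of solved examples in the same format."""
    shots = "\n\n".join(f"English: {en}\nFrench: {fr}" for en, fr in examples)
    return f"{INSTRUCTION}\n\n{shots}\n\nEnglish: {text}\nFrench:"


examples = [("Good morning.", "Bonjour."), ("Thank you very much.", "Merci beaucoup.")]
print(zero_shot("Where is the train station?"))
print()
print(few_shot("Where is the train station?", examples))
```

Ending both prompts with "French:" leaves the model one obvious thing to do next: supply the translation.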

Temperature Control: Balancing Creativity and Coherence

Temperature is an important sampling parameter, set alongside the prompt, that influences the level of creativity and coherence of the model's outputs.

By adjusting the temperature, you can control the randomness of the model's responses.

Higher temperatures result in more diverse and creative outputs, but they may also introduce incoherence.

Lower temperatures, on the other hand, produce more focused and coherent responses. Finding the right balance between creativity and coherence depends on the specific task and desired outcomes.
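
The standard idea behind temperature is to divide the model's raw scores (logits) by a temperature T before turning them into probabilities, p_i = exp(z_i / T) / Σ_j exp(z_j / T). The sketch below applies this to three made-up logits so you can compare how low and high temperatures reshape the distribution. Providers do not necessarily document their exact sampling implementation, so treat this as an illustration of the concept rather than any specific API's behavior.

```python
import math
import random
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Convert logits to probabilities after dividing them by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


tokens = ["blue", "grey", "purple"]  # hypothetical next-word candidates
logits = [2.0, 1.0, 0.1]             # made-up model scores for those candidates
rng = random.Random(0)               # seeded so the samples are repeatable
for t in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    samples = rng.choices(tokens, weights=probs, k=5)
    print(t, [round(p, 3) for p in probs], samples)
```

At T = 0.5 most of the probability lands on the top candidate, while at T = 2.0 the less likely words are sampled more often, which is the creativity-versus-coherence trade-off described above.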

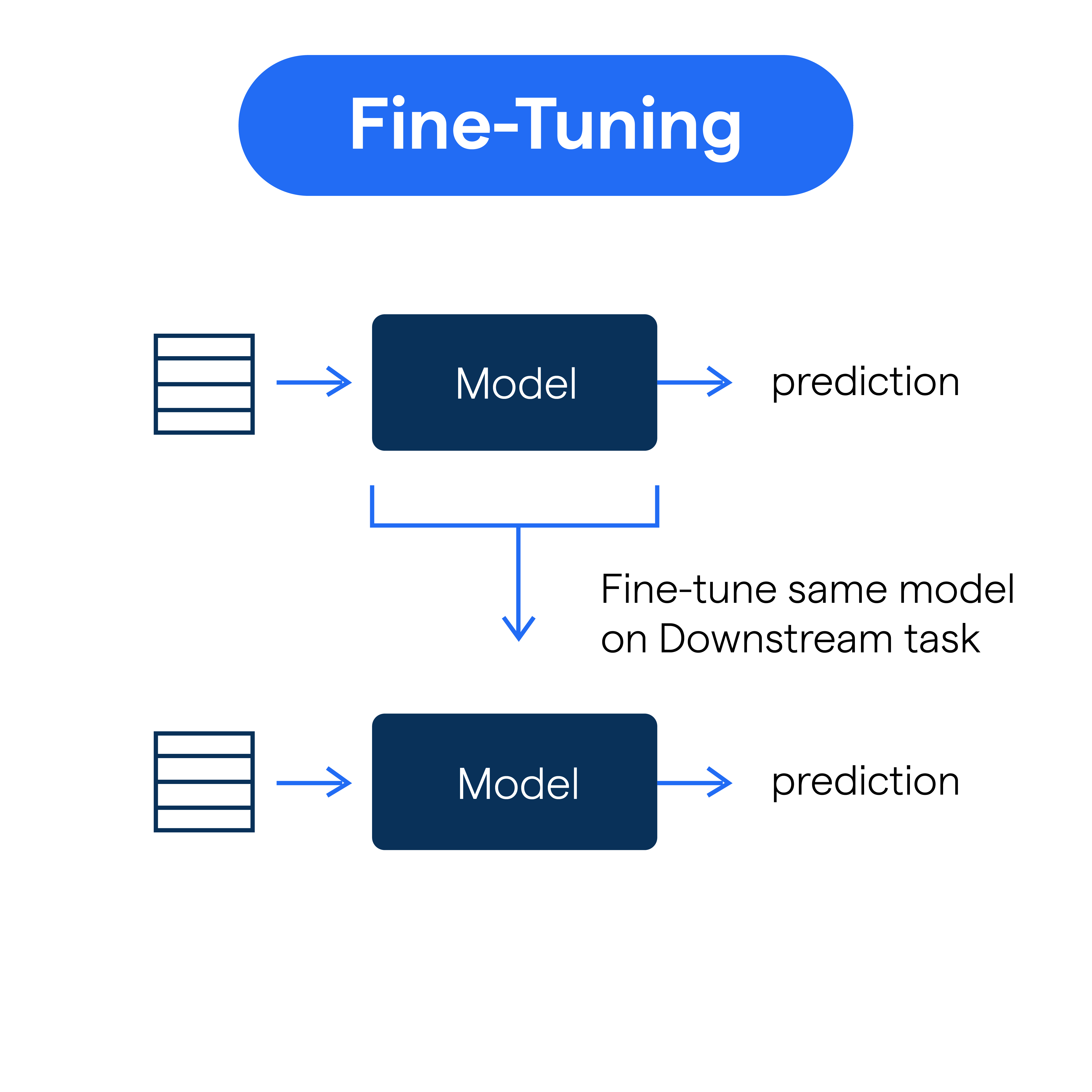

Advanced Embeddings and Fine-Tuning: Pushing the Boundaries of Performance

Advanced embeddings and fine-tuning techniques can significantly improve the performance of AI models.

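Embeddings represent words or passages as vectors whose distances reflect semantic similarity, and they can be used to guide model behavior, for example by retrieving the most relevant examples or context to include in a prompt.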

By fine-tuning pre-trained models on task-specific data, you can tailor the model to better understand and respond to specific prompts.

Advanced embeddings and fine-tuning methods enable models to capture nuanced relationships and context, resulting in more accurate and context-aware outputs.
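
As a small illustration of using embeddings to shape a prompt, the sketch below picks the stored example whose vector is closest to the user's query by cosine similarity, so that example can be placed in a few-shot prompt. The three-dimensional vectors are invented for readability; real embeddings come from an embedding model and have hundreds or thousands of dimensions.

```python
import math
from typing import Dict, List


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity: 1.0 means the vectors point in the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


# Hypothetical embeddings for stored example questions.
EXAMPLES: Dict[str, List[float]] = {
    "How do I reset my password?": [0.9, 0.1, 0.0],
    "What is your refund policy?": [0.1, 0.9, 0.1],
    "Do you ship internationally?": [0.0, 0.2, 0.9],
}


def most_similar(query_vec: List[float], examples: Dict[str, List[float]], k: int = 1) -> List[str]:
    """Return the k example texts whose embeddings are closest to the query."""
    ranked = sorted(examples, key=lambda text: cosine(query_vec, examples[text]), reverse=True)
    return ranked[:k]


query_vec = [0.8, 0.2, 0.1]  # pretend embedding of "I forgot my login password"
print(most_similar(query_vec, EXAMPLES))
```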

Emerging Tools and Platforms: Streamlining the Prompt Engineering Process

As the field of prompt engineering expands, new tools and platforms are emerging to streamline the process.

These tools provide intuitive interfaces and pre-designed templates that make it easier to craft effective prompts.

They often come with built-in features for collaboration, version control, and prompt optimization.

These emerging tools and platforms simplify and accelerate the prompt engineering workflow, empowering users to generate high-quality outputs with more efficiency.
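
To make the version-control idea concrete, here is a toy prompt registry that keeps every saved revision of a named template so you can roll back or compare versions. It is a hypothetical sketch of the concept, not the API of any real prompt management platform.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class PromptRegistry:
    """Stores every saved revision of each named prompt template."""
    versions: Dict[str, List[str]] = field(default_factory=dict)

    def save(self, name: str, template: str) -> int:
        """Append a new revision and return its version number (starting at 1)."""
        history = self.versions.setdefault(name, [])
        history.append(template)
        return len(history)

    def get(self, name: str, version: Optional[int] = None) -> str:
        """Return the latest revision, or a specific version number if given."""
        history = self.versions[name]
        if version is None:
            return history[-1]
        if not 1 <= version <= len(history):
            raise KeyError(f"{name} has no version {version}")
        return history[version - 1]


registry = PromptRegistry()
registry.save("summarize", "Summarize: {text}")
registry.save("summarize", "Summarize in two sentences: {text}")
print(registry.get("summarize"))             # latest revision
print(registry.get("summarize", version=1))  # earlier revision, for comparison
```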

Conclusion

In conclusion, crafting prompts is as much an art as a science. Small tweaks can significantly sway AI responses. Leading enterprises are investing heavily in dedicated prompt engineering teams and infrastructure.

Recent projections by Gartner expect 60% of organizations to establish prompt management procedures and best practices by 2025, given the large impact that prompt quality has on AI success.

Emerging startups are also building specialized tools for no-code prompt templating, collaboration, and monitoring to enhance experimentation and control.

The market potential ahead also looks promising. According to an Ark Investment Management analysis, the overall economic value unlocked by generative AI across areas transformed by prompts could reach over $200 billion annually by 2030.

But ultimately, an ethical, prompt-first approach grounded in safety, quality, and transparency will differentiate responsible leaders. As models grow more capable, and as training methods such as Anthropic's constitutional AI aim to make them safer, designing prompts focused on producing social good will prove pivotal.

So whether you are just beginning your AI journey or are a seasoned ML engineer, make mastering prompt design a priority. The future will rely on prompts as much as on the models themselves!

Frequently Asked Questions (FAQs)

What is prompt engineering and why is it important for AI models?

Prompt engineering involves crafting and refining prompts or instructions to achieve the desired output from AI models. Properly engineered prompts can improve model performance, reduce biased outputs, and produce more accurate and useful results.

How can prompt engineering help in mitigating biases in AI models?

By carefully crafting prompts, prompt engineering can help reduce biased outputs from AI models. It allows for the explicit specification of desired attributes, minimizes potentially biased phrasing, and promotes fairness and inclusivity in the generated outputs.

Are there any best practices to follow when engaging in prompt engineering?

Best practices include being explicit and specific in prompts, providing clear context, avoiding leading or suggestive language, using evaluation sets to measure biases, and iterating on prompt design to achieve optimal performance.

Can prompt engineering be done for different types of AI models?

Yes, prompt engineering is applicable to a wide range of AI models, including text-based language models, code generation models, image generation models, chatbots, and recommendation systems. It helps shape the behavior and output of these models according to specific requirements.

How can I improve my prompt engineering skills?

Improving prompt engineering skills involves studying existing research and case studies, experimenting with different phrasings and contexts, seeking feedback and insights from the AI community, and staying up-to-date with the latest advancements in the field.