Introduction

Master advanced prompting strategies to unlock GPT's true potential.

Discover how hierarchical prompting breaks down complex tasks, conditional prompting shapes responses with precision, and chain-of-thought prompting draws out step-by-step reasoning.

But that's just the beginning. Dive into fine-tuning prompts for optimal results through iterative improvement and user feedback.

Explore creative text generation, nuanced question answering, and controlled code generation, all tailored to your specific use case.

Don't let ambiguity hold you back. Learn to craft unambiguous prompts that guide GPT toward your desired outcomes. Mitigate bias in generated text and find the perfect balance between control and open-endedness.

Embark on this prompting journey and harness the full capabilities of GPT. Elevate your results to new heights and experience the true magic of advanced prompt engineering.

Keep reading to discover the secrets behind crafting prompts that deliver exceptional performance.

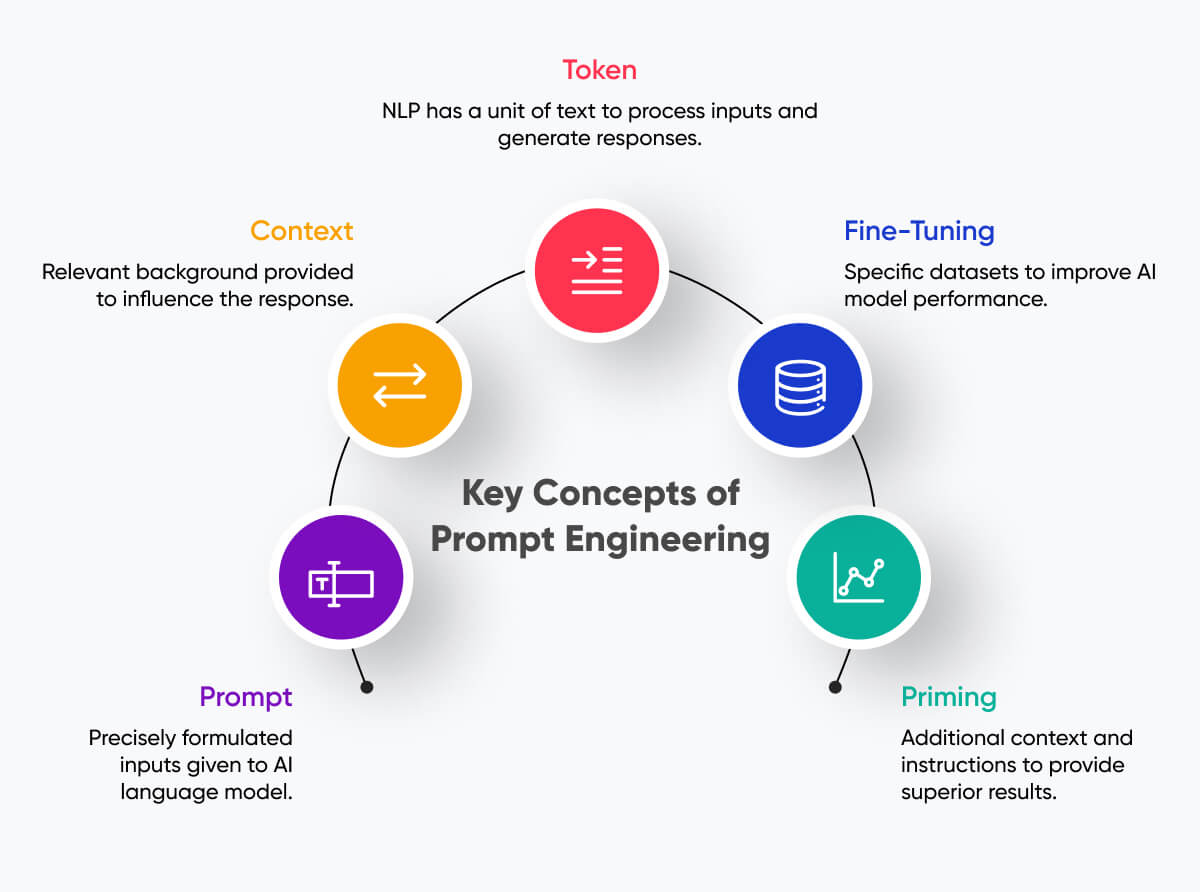

GPT Prompt Engineering

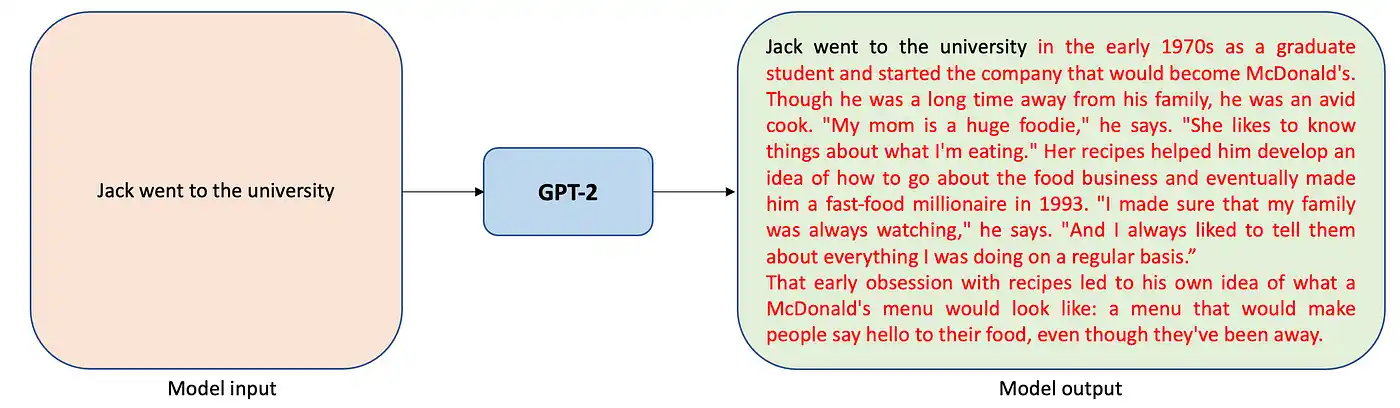

GPT Prompt Engineering involves crafting clear and specific instructions to guide the GPT language model in generating desired outputs.

As powerful as GPT is, it needs precise prompts to understand the task and provide accurate responses.

By carefully constructing prompts, we can ensure that GPT understands our intentions and produces the desired results.

Benefits of Advanced Prompt Engineering Techniques

Advanced Prompt Engineering techniques offer several benefits when working with GPT (Generative Pre-trained Transformer) models.

These techniques help improve performance, provide better control over the generated text, and enhance creativity.

By utilizing advanced prompts, we can maximize the potential of GPT and achieve desired outcomes.

Beyond the Basics: Advanced Prompting Strategies

Let's explore some advanced prompting strategies that go beyond the basics and further enhance the effectiveness of ChatGPT prompt engineering.

Hierarchical Prompting: Breaking Down Complex Tasks

Hierarchical Prompting is a technique that involves breaking down complex tasks into smaller subtasks and providing intermediate prompts.

By doing so, we guide GPT through step-by-step instructions, enabling it to grasp the task's structure and nuances.

This approach is beneficial for tasks that require multi-step processes or multiple components.
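As a rough illustration, hierarchical prompting can be sketched as a loop that sends one intermediate prompt per subtask, feeds earlier results forward, and finishes with a prompt that combines them. This is a minimal sketch under stated assumptions: `model` stands for a hypothetical function that takes a prompt string and returns GPT's reply (for example, a wrapper you write around your API client), and the prompt wording is only an example.

```python
from typing import Callable, List

# Hypothetical model interface: any function that takes a prompt string and
# returns GPT's reply (e.g. a thin wrapper you write around your API client).
Model = Callable[[str], str]


def format_results(results: List[str]) -> List[str]:
    """Label earlier subtask results so later prompts can refer to them."""
    return [f"Step {i} result: {r}" for i, r in enumerate(results, start=1)]


def hierarchical_prompt(task: str, subtasks: List[str], model: Model) -> str:
    """Send one intermediate prompt per subtask, then a final combining prompt."""
    results: List[str] = []
    for i, subtask in enumerate(subtasks, start=1):
        # Each intermediate prompt carries the overall goal plus earlier results.
        lines = [f"Overall task: {task}", *format_results(results),
                 f"Step {i} of {len(subtasks)}: {subtask}"]
        results.append(model("\n".join(lines)))
    # The last prompt asks the model to assemble the pieces into one answer.
    final_lines = [f"Overall task: {task}", *format_results(results),
                   "Combine the step results above into one final answer."]
    return model("\n".join(final_lines))
```

Because the model is passed in as a plain function, you can check the prompt flow with a stub that returns canned replies before connecting a real API.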

Conditional Prompting: Shaping Responses with Specific Conditions

Conditional Prompting allows us to shape GPT's responses by providing specific conditions or constraints.

By incorporating conditional statements or instructions, we can guide GPT in generating responses that fulfill specific requirements.

This technique grants us more control over the output, making it ideal for situations where we need GPT to follow specific guidelines.
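The sketch below assembles a conditional prompt: a base request followed by an explicit list of conditions, where one condition is added only when a flag is set and another is an if-then rule handed to GPT itself. The function name and the particular conditions (audience, word limit, citations) are hypothetical examples, not a fixed recipe.

```python
from typing import List


def conditional_prompt(question: str, audience: str, max_words: int,
                       must_cite_sources: bool = False) -> str:
    """Attach explicit conditions to a base request (conditions are illustrative)."""
    conditions: List[str] = [
        f"Write for a {audience} audience.",
        f"Use at most {max_words} words.",
    ]
    # Conditions can be switched on by your application logic...
    if must_cite_sources:
        conditions.append("Cite a source for every factual claim.")
    # ...or stated as an if-then rule for the model itself to apply.
    conditions.append(
        "If the question cannot be answered reliably, say so instead of guessing."
    )
    rules = "\n".join(f"- {c}" for c in conditions)
    return f"{question}\n\nFollow these conditions:\n{rules}"
```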

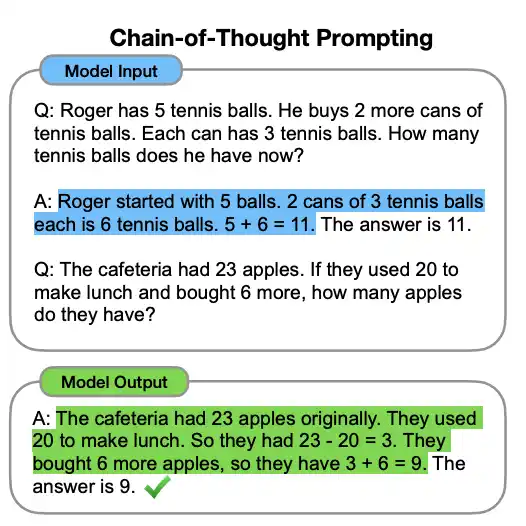

Chain-of-Thought Prompting: Unveiling GPT's Reasoning Process

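Chain-of-thought prompting is a strategy that asks GPT to write out its intermediate reasoning steps before giving a final answer, either by including worked examples that show those steps or by adding an instruction such as "Let's think step by step."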

By breaking down the reasoning steps required to reach an answer or solution, we can guide GPT in generating more logical and coherent responses.

This technique is beneficial for tasks that demand clear and structured reasoning.
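One common way to apply chain-of-thought prompting is the few-shot form: show a worked example whose answer spells out the intermediate steps, then pose the new question with a cue such as "Let's think step by step." The sketch below assumes you want the final answer on a line starting with `Answer:` (a convention chosen here, not something GPT requires) so it can be parsed out of the reasoning.

```python
import re
from typing import Optional

# A worked example whose answer spells out the intermediate steps.
# Ending with an "Answer:" line is a convention chosen here so the final
# result can be parsed; GPT does not require it.
COT_EXAMPLE = (
    "Q: A shop sells pens at 3 for $2. How much do 12 pens cost?\n"
    "A: 12 pens is 12 / 3 = 4 groups of 3. Each group costs $2, so 4 * 2 = $8.\n"
    "Answer: 8"
)


def chain_of_thought_prompt(question: str) -> str:
    """Few-shot chain-of-thought prompt: one worked example, then the new question."""
    return (
        f"{COT_EXAMPLE}\n\n"
        f"Q: {question}\n"
        "A: Let's think step by step, then give the result on a line "
        "starting with 'Answer:'."
    )


def extract_answer(reply: str) -> Optional[str]:
    """Return the text after the last 'Answer:' line, or None if there is none."""
    matches = re.findall(r"^Answer:\s*(.+)$", reply, flags=re.MULTILINE)
    return matches[-1].strip() if matches else None
```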

With these advanced prompting strategies, we can harness the full potential of GPT and extract more accurate, controlled, and creative responses.

Next, we will cover how to fine-tune prompts for optimal results in GPT Prompt Engineering.

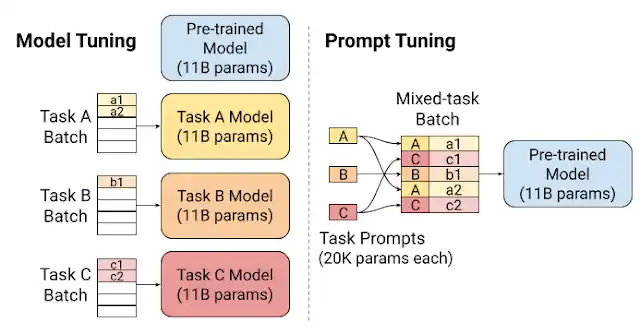

Fine-Tuning Prompts for Optimal Results

To achieve optimal results with GPT, it's crucial to analyze its outputs and identify any weaknesses in the provided prompts.

By carefully examining the generated text, we can pinpoint areas where the instructions may have been unclear or ambiguous.

This analysis helps refine the prompts and provide clearer instructions for better performance.
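To make this analysis systematic, you can run a set of checks over a batch of generated outputs and see which ones fail most often; a high failure rate on one check points to the part of the prompt that needs clearer instructions. The checks below are hypothetical examples for a short product-description task; replace them with criteria that matter for your own use case.

```python
from typing import Callable, Dict, List, Tuple

# Each check pairs a name with a predicate that returns True when an output passes.
# These three checks are hypothetical examples for a short product-description task.
Check = Tuple[str, Callable[[str], bool]]

CHECKS: List[Check] = [
    ("under 50 words", lambda text: len(text.split()) <= 50),
    ("mentions a price", lambda text: "$" in text),
    ("no apology filler", lambda text: "sorry" not in text.lower()),
]


def failure_report(outputs: List[str], checks: List[Check]) -> Dict[str, float]:
    """Fraction of outputs failing each check; high rates flag weak prompt instructions."""
    if not outputs:
        raise ValueError("need at least one output to analyze")
    return {
        name: sum(not passes(o) for o in outputs) / len(outputs)
        for name, passes in checks
    }
```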

Iterative Prompt Improvement Through Feedback and Testing

Prompt improvement is an iterative process that involves continuously refining prompts based on user feedback and testing.

By gathering data on how users interact with the chatbot and evaluating the quality of the responses, we can identify areas for prompt refinement.

This iterative approach allows us to fine-tune the prompts over time, ensuring that the chatbot provides more accurate and relevant outputs.
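A single round of this loop might look like the sketch below: score each candidate prompt template against a small set of test inputs and keep the best one. It assumes a hypothetical `model` function (prompt in, reply out) and uses a deliberately simple metric, whether the reply contains an expected phrase; in practice you would substitute user ratings or a task-specific evaluation.

```python
from typing import Callable, List, Tuple

# Hypothetical model interface: prompt string in, GPT's reply out.
Model = Callable[[str], str]
# Each test case is (user input, phrase the reply is expected to contain).
Case = Tuple[str, str]


def score_prompt(template: str, cases: List[Case], model: Model) -> float:
    """Fraction of cases whose reply contains the expected phrase.

    `template` must contain an {input} placeholder. Substring matching is a
    simple stand-in for user ratings or a task-specific quality metric.
    """
    hits = 0
    for user_input, expected in cases:
        reply = model(template.format(input=user_input))
        hits += int(expected.lower() in reply.lower())
    return hits / len(cases)


def best_prompt(templates: List[str], cases: List[Case], model: Model) -> Tuple[str, float]:
    """One round of iteration: score every candidate template and keep the best."""
    scored = [(t, score_prompt(t, cases, model)) for t in templates]
    return max(scored, key=lambda pair: pair[1])
```

Repeating this with new candidate templates, written in response to the failures you observe, is what makes the process iterative. Ties go to the template listed first, because `max` returns the first maximal item.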

Next, we will cover some advanced techniques for specific use cases in GPT Prompt Engineering.

Advanced Techniques for Specific Use Cases

Let's explore some advanced techniques in GPT Prompt Engineering that can be particularly useful for specific use cases.

These techniques enable us to leverage GPT's capabilities to their fullest potential.

Creative Text Generation: Unleashing GPT's Creativity

For creative text generation, you can tap into GPT's creativity by prompting it in different formats and styles.

Experimenting with prompts that encourage imaginative storytelling or unconventional thinking can unlock GPT's ability to generate innovative and engaging narratives.

This technique is ideal for applications that require unique and captivating content creation.
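To experiment with formats and styles systematically, you can generate one prompt per combination and compare the results side by side. This is a minimal sketch; the prompt wording and any formats and styles you pass in are assumptions chosen for illustration.

```python
from itertools import product
from typing import List


def creative_prompts(topic: str, formats: List[str], styles: List[str]) -> List[str]:
    """One prompt per (format, style) pair, so the variations can be compared."""
    return [
        f"Write a {fmt} about {topic} in the style of {style}. "
        "Avoid clichés and end with an unexpected twist."
        for fmt, style in product(formats, styles)
    ]
```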

Question Answering with Nuance: Guiding GPT Towards Accurate Answers

To enhance GPT's accuracy in question answering, we can provide specific instructions that guide it towards generating precise and contextually appropriate answers.

By specifying the desired answer types and providing relevant context, you can help GPT generate responses that address the actual intent behind the questions.

This technique improves the reliability and usefulness of the chatbot in answering user queries.
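A question-answering prompt along these lines supplies the relevant context, states the expected answer type, and tells GPT what to do when the context is insufficient. The template below is one possible wording under those assumptions, not a standard format.

```python
def qa_prompt(question: str, context: str, answer_type: str) -> str:
    """Ground the answer in supplied context and state the expected answer type."""
    return (
        "Answer the question using only the context below.\n"
        f"Expected answer type: {answer_type}.\n"
        "If the context does not contain the answer, reply exactly: I don't know.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )
```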

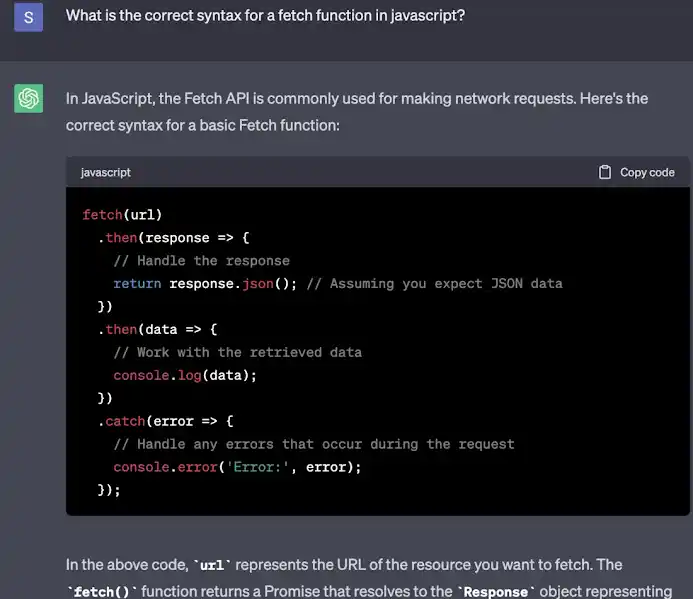

Code Generation with Control: Prompting for Specific Programming Languages and Styles

For code generation, you can tailor prompts to elicit specific programming languages and coding styles from GPT.

By providing instructions that require code blocks or snippets in a particular language, you can guide GPT in producing code that matches your requirements.

This technique helps ensure the chatbot generates code that aligns with the preferred programming language and coding conventions.
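The sketch below builds a code-generation prompt that fixes the language and lists the conventions to follow, and asks for a single fenced code block so the code can be extracted from the reply. The function names and the "one fenced block" convention are assumptions made for this example; GPT may still add prose or skip the fence, so the extractor returns `None` when no matching block is found.

```python
import re
from typing import List, Optional

# Three backticks (a Markdown code fence), built this way so the literal
# does not clash with the Markdown fences around this example.
FENCE = "`" * 3


def code_prompt(task: str, language: str, conventions: List[str]) -> str:
    """Fix the language, list the conventions, and ask for a single fenced block."""
    rules = "\n".join(f"- {c}" for c in conventions)
    return (
        f"Write {language} code for this task: {task}\n"
        f"Follow these conventions:\n{rules}\n"
        f"Return exactly one fenced code block tagged '{language.lower()}' "
        "and no other text."
    )


def extract_code(reply: str, language: str) -> Optional[str]:
    """Return the body of the first code block tagged with `language`, else None."""
    pattern = re.escape(FENCE + language.lower()) + r"\n(.*?)" + re.escape(FENCE)
    match = re.search(pattern, reply, flags=re.DOTALL | re.IGNORECASE)
    return match.group(1) if match else None
```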

By using these advanced techniques in ChatGPT prompt engineering, we can harness the power of GPT to meet specific use case requirements and achieve optimal results.

Next, we will address some challenges in advanced GPT Prompt Engineering.

Addressing Challenges in Advanced Prompt Engineering

One of the challenges in prompt engineering is overcoming ambiguity in prompts.

Providing clear and precise instructions is essential to avoid misinterpretations by the GPT language model.

By using straightforward and unambiguous language, we can guide GPT in understanding our intentions accurately.

This ensures the generated text aligns with the desired outcomes and avoids confusion or misunderstandings.

Mitigating Bias in Generated Text: Prompting for Fair and Inclusive Results

Another challenge in prompt engineering is mitigating bias in the generated text.

GPT learns from a vast amount of training data, which can carry biases present in the real world. To counter this, explicitly prompt GPT to produce fair and inclusive results.

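Where you fine-tune a model, carefully selecting the training data helps; within the prompt itself, you can choose balanced examples and add instructions that encourage unbiased and respectful responses. Together, these steps reduce the potential for bias in the generated text, though they cannot eliminate it entirely.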
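As a sketch of the prompting side, the code below prepends a fairness instruction to every request and also produces counterfactual variants: the same prompt with only a group term swapped, so you can compare outputs side by side and spot differences in treatment. The instruction wording is an assumption, and counterfactual comparison is a common bias check added here for illustration rather than a technique described in the text above.

```python
from typing import List

# Illustrative wording; adapt it to your application's own guidelines.
FAIRNESS_INSTRUCTION = (
    "Treat all people equally regardless of gender, ethnicity, age, or religion. "
    "Do not rely on stereotypes, and do not assume details that were not given."
)


def fair_prompt(request: str) -> str:
    """Prepend the fairness instruction to a request."""
    return f"{FAIRNESS_INSTRUCTION}\n\n{request}"


def counterfactual_variants(template: str, groups: List[str]) -> List[str]:
    """Fill a {person} placeholder with each group so outputs can be compared."""
    return [fair_prompt(template.format(person=group)) for group in groups]
```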

Balancing Control with Open-Endedness: Finding the Sweet Spot

Finding the right balance between control and open-endedness is yet another challenge in prompt engineering.

You want to guide GPT toward specific outcomes, but you also want to preserve its creativity and its ability to generate diverse responses.

It's essential to strike a balance that allows for exploration and innovation while maintaining focus on the desired task.

Hence, find the sweet spot that achieves both control and open-endedness by experimenting with different prompts and instructions.
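One way to run that experiment is a "control ladder": start with every constraint, then drop one at a time and invite creativity once constraints are removed, and compare the outputs at each level. The function name and the sentence it appends are hypothetical; which constraints to drop first (here, the last ones in the list) is up to you.

```python
from typing import List


def control_ladder(task: str, constraints: List[str]) -> List[str]:
    """Prompts from most to least controlled: all constraints, then one fewer each time."""
    prompts: List[str] = []
    for k in range(len(constraints), -1, -1):
        lines = [task, *(f"- {c}" for c in constraints[:k])]
        if k < len(constraints):
            # Once any constraint is removed, explicitly invite open-ended output.
            lines.append("Otherwise, feel free to be creative.")
        prompts.append("\n".join(lines))
    return prompts
```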

Conclusion

In conclusion, advanced techniques in GPT prompt engineering enhance your chatbot experience!

Creating clear and specific instructions allows you to guide the GPT language model to generate accurate and creative responses tailored to your needs.

With strategies like hierarchical, conditional, and chain-of-thought prompting, you can shape GPT's outputs and maximize its capabilities.

Improve your prompts for better results by analyzing outputs, refining instructions, and making iterative improvements based on user feedback.

Whether you're looking to generate creative narratives, answer questions with nuance, or produce code in specific languages, advanced prompt engineering techniques have you covered.

To truly make the most of GPT, you also need to address challenges such as ambiguity, bias, and the balance between control and open-endedness.

Don't miss out on the opportunity to enhance your chatbot experience. Start learning advanced techniques in GPT prompt engineering today!

Frequently Asked Questions (FAQs)

What is GPT Prompt Engineering and why is it important for advanced techniques?

GPT Prompt Engineering refers to the strategic crafting and optimization of prompts to maximize the performance and control of GPT models.

It is crucial for achieving desired outcomes and fine-tuning the generated responses.

What are some advanced techniques in GPT Prompt Engineering?

Advanced techniques in GPT Prompt Engineering include prompt engineering with control codes, question-answering setups, prefix tuning, and prompt reformulation.

These techniques enhance the model's responsiveness and ability to generate accurate and targeted outputs.

How can prompt engineering with control codes be used to influence the model's responses?

Prompt engineering with control codes allows you to provide explicit instructions to GPT models, guiding their responses to align with specific attributes such as tone, sentiment, or style, resulting in more controlled and tailored outputs.
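With GPT models, control codes are usually written as plain-text tags that the prompt tells the model to honor. In models such as Salesforce's CTRL, control codes are special tokens the model was trained with; GPT has no such built-in codes, so tags like these act only as ordinary instructions. The bracket format below is a hypothetical convention.

```python
def with_control_codes(request: str, **attributes: str) -> str:
    """Prefix a request with bracketed attribute tags, e.g. [tone: formal]."""
    codes = " ".join(f"[{key}: {value}]" for key, value in attributes.items())
    return f"{codes}\nWrite the response so it matches the bracketed attributes.\n{request}"
```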

What is prefix tuning and why is it useful in GPT Prompt Engineering?

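Strictly speaking, prefix tuning is a parameter-efficient fine-tuning method: it learns a small set of continuous, task-specific vectors that are prepended at every layer of the model while the model's own weights stay frozen. In everyday ChatGPT prompt engineering, the term is often used loosely for pre-defining a fixed text prefix that sets the context and guides the model's generation.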

It helps prevent the model from veering off-topic and produces more focused and coherent responses.
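The practical, prompt-level version of this idea is a fixed text prefix prepended to every request. The sketch below is plain prompt text, not the learned prefix vectors of prefix tuning proper (which require training); the router-support scenario and its wording are hypothetical.

```python
# A fixed, pre-written prefix shared by every request (hypothetical scenario).
SUPPORT_PREFIX = (
    "You are a support assistant for a home Wi-Fi router. "
    "Only answer questions about router setup and troubleshooting, "
    "and politely decline anything else.\n\n"
)


def prefixed(user_message: str) -> str:
    """Put the fixed prefix in front of the user's message."""
    return f"{SUPPORT_PREFIX}User: {user_message}\nAssistant:"
```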

Why is prompt reformulation important in GPT Prompt Engineering?

Prompt reformulation involves experimenting with different prompt structures and phrasing to elicit more accurate and desired responses from GPT models.

It enables fine-tuning the prompts to optimize the quality of the generated outputs.