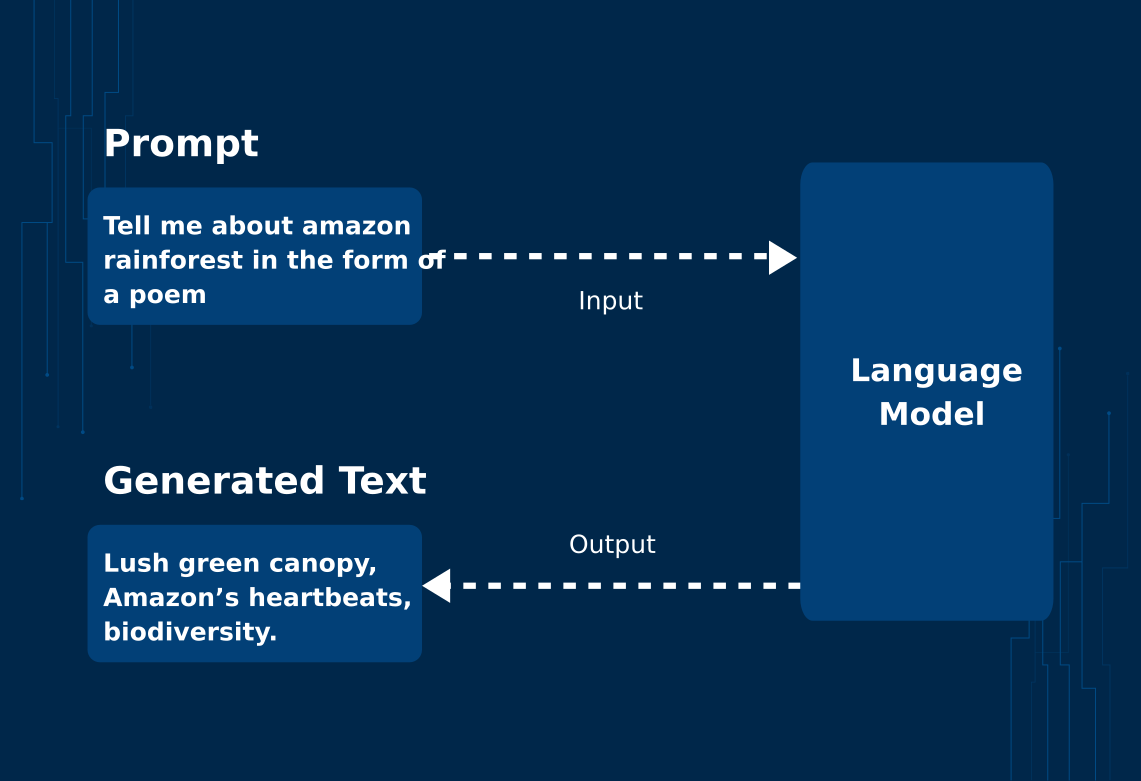

Prompt engineering is the practice of crafting effective instructions or queries to elicit desired responses from AI language models.

It's like giving the model a nudge in the right direction to generate the output you want. By mastering prompt engineering, you can unleash the true power of AI and achieve remarkable results.

Prompt engineering opens up a world of possibilities and benefits in AI. You can steer the AI model toward generating content that meets your requirements by providing clear instructions.

Whether you're a content creator, a chatbot developer, or an information seeker, prompt engineering can help you save time, enhance creativity, and improve the quality of AI-generated output.

Let’s dive in!

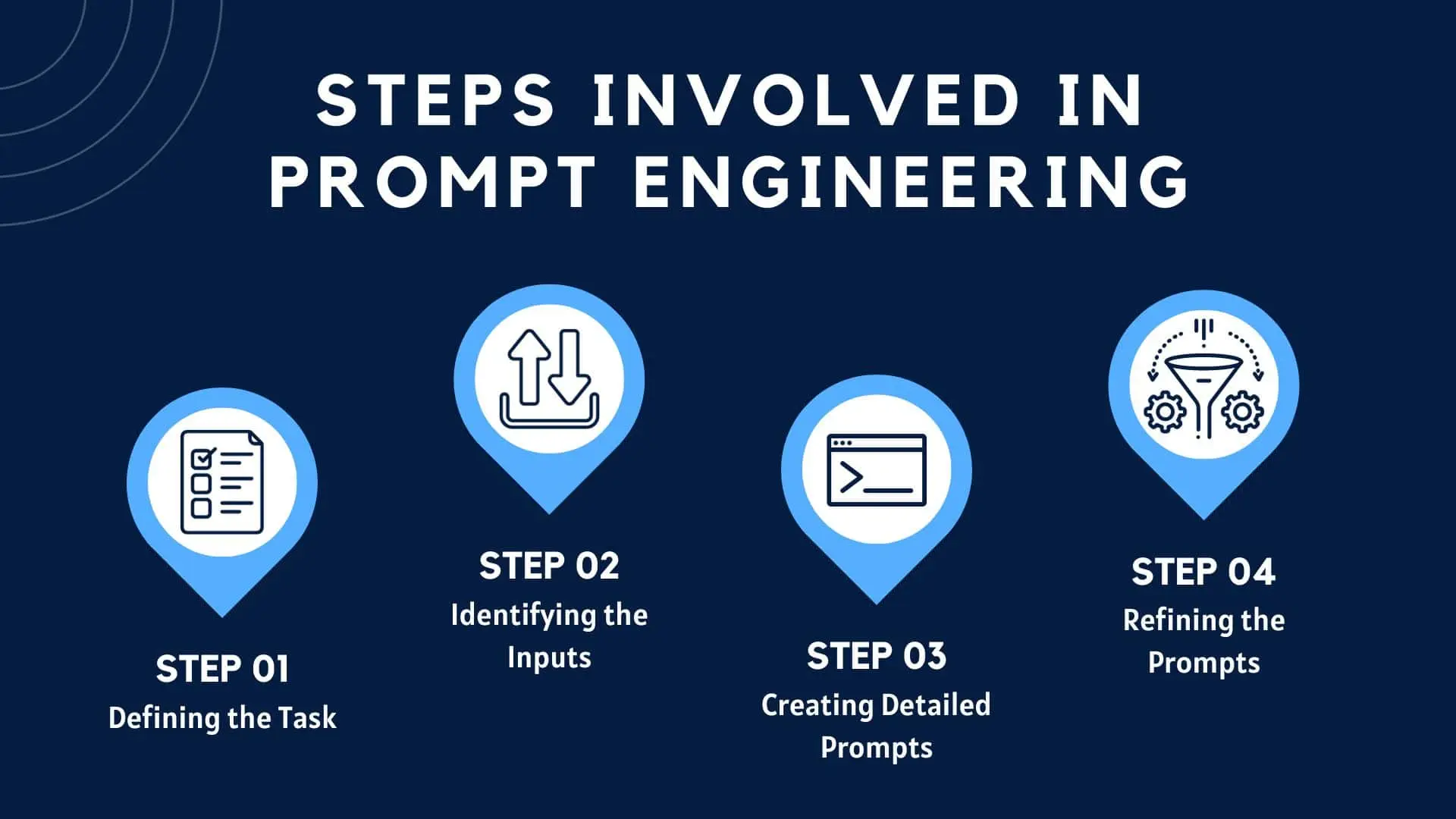

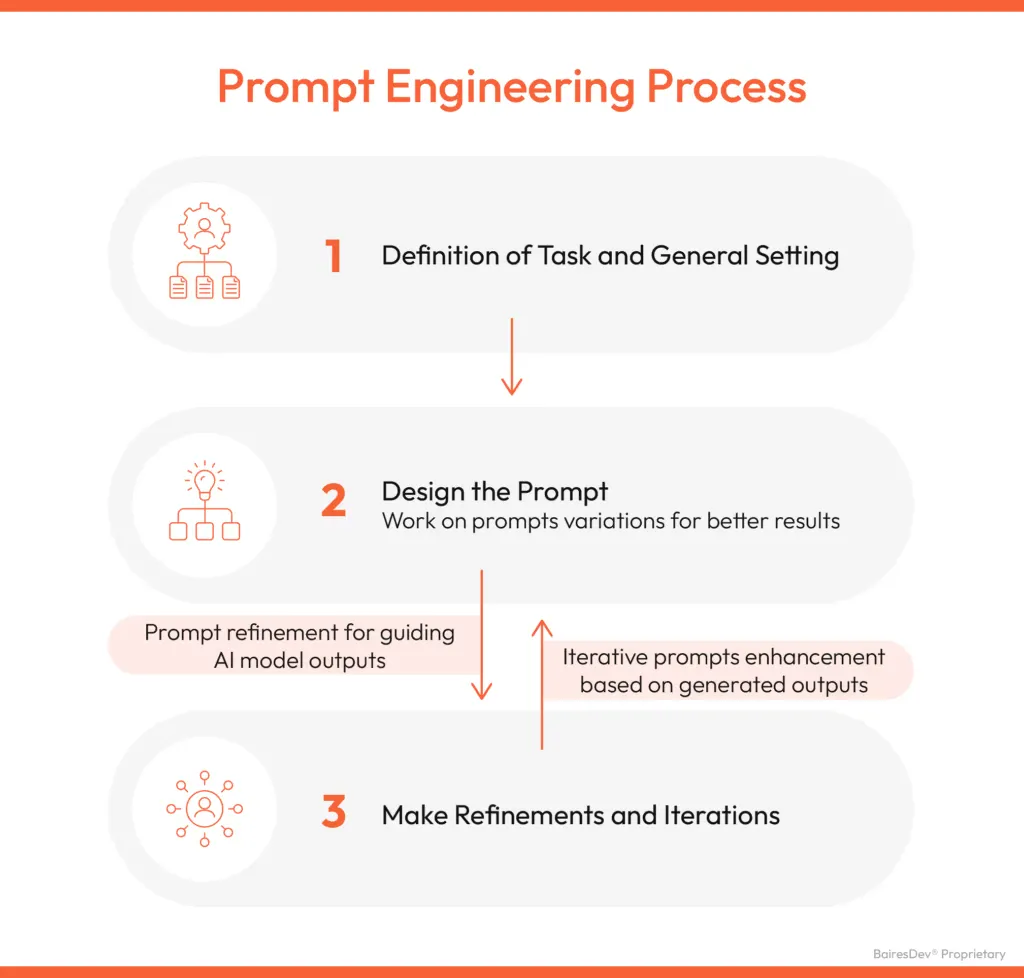

How Does Prompt Engineering Work: A Step-by-Step Guide

To understand how prompt engineering works, it helps to follow the process step by step.

This section walks through each stage, from defining an objective to measuring results, with notes on how to apply each one in practice.

Step 1

Defining Your Objective

The first step in the Prompt Engineering process is to identify your objective.

Establishing the Project's Purpose

You must first determine the purpose of the project.

This requires identifying what you want the AI language model to do, whether that is generating creative content, summarizing information, or answering specific questions, and ensuring the purpose aligns with the capabilities of the model.

Envisioning the Desired AI Output

Clarify what you want the AI model to produce when given specific prompts. In prompt engineering, understanding the desired output is integral to crafting an effective prompt.

For instance, if your goal is to generate conversational responses, the prompts must be crafted to guide the model accordingly.

Setting Feasible Objectives

When defining your objective, ensure it is feasible and aligns with the AI model's strengths and capabilities.

In prompt engineering, this means understanding the limitations of the language model being used and adapting the objective accordingly to create effective prompts.

Establishing Measurable Metrics

Determining measurable metrics is essential to track the efficacy of the engineered prompts.

For instance, measuring the relevance or accuracy of the generated text can serve as valuable criteria for evaluating the AI model's performance in generating the desired output.
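As a minimal sketch of what a measurable metric could look like, the snippet below scores a response by how many required keywords it mentions. The function name `keyword_coverage` and the sample text are illustrative assumptions, and keyword coverage is only a crude proxy for relevance, not a standard metric.

```python
import re


def keyword_coverage(response: str, required_keywords: list) -> float:
    """Return the fraction of single-word keywords that appear in the response."""
    if not required_keywords:
        return 0.0
    # Lowercase and tokenize so matching ignores case and punctuation.
    words = set(re.findall(r"[a-z0-9']+", response.lower()))
    hits = sum(1 for keyword in required_keywords if keyword.lower() in words)
    return hits / len(required_keywords)


# Hand-written sample text, not real model output.
response = "Solar panels convert sunlight into electricity using photovoltaic cells."
score = keyword_coverage(response, ["solar", "electricity", "battery"])
print(f"coverage: {score:.2f}")  # coverage: 0.67
```

A score like this is easy to track across prompt revisions, though it says nothing about factual accuracy, so it is best paired with human review.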

Documenting the Prompt Engineering Objective

Maintaining documentation of your objective in prompt engineering ensures that all stakeholders have a clear understanding of the goals and expectations related to the language model and its responses.

This documentation also serves as a guideline for refining or optimizing the prompts in the future.

Step 2

Crafting Effective Instructions or Queries

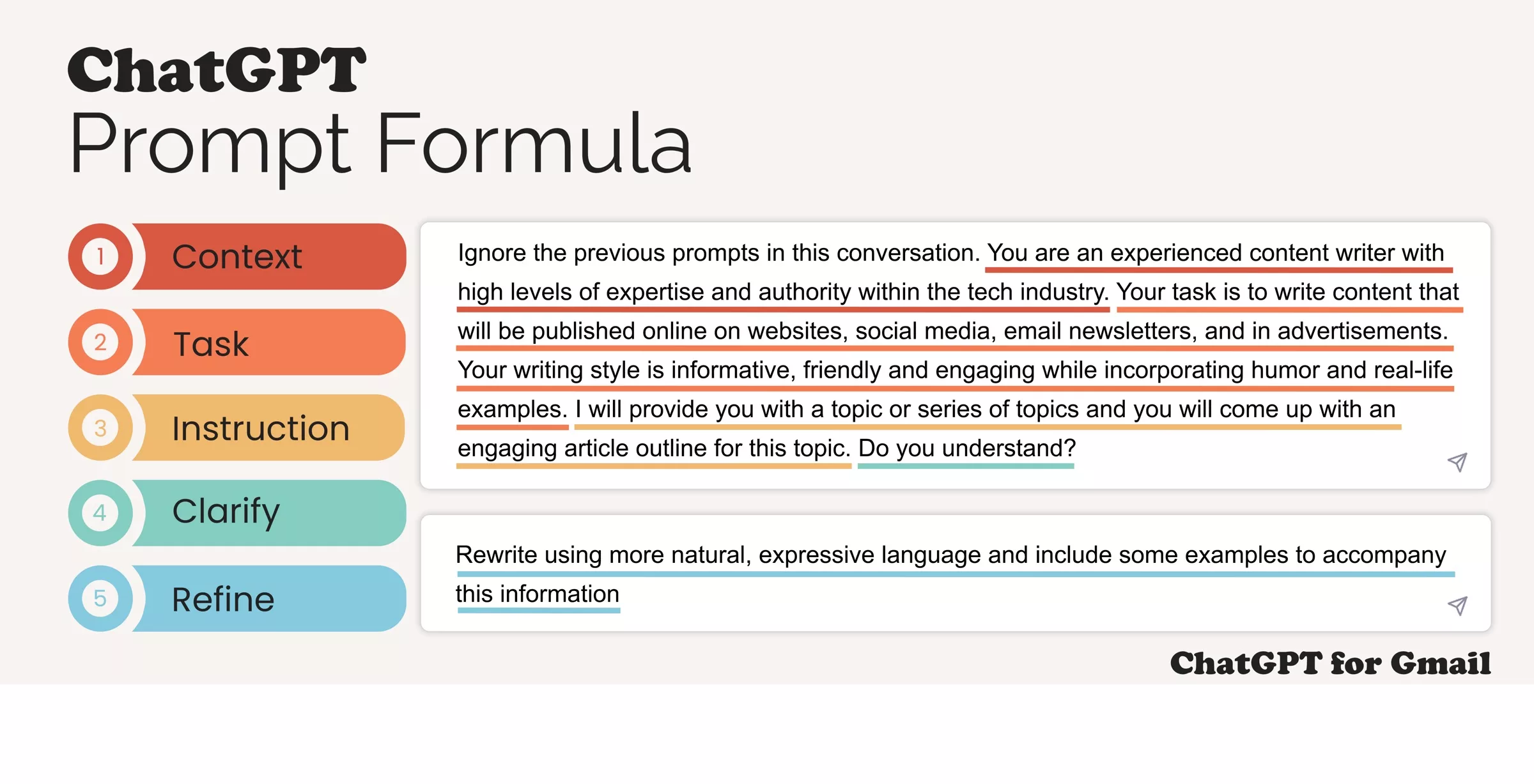

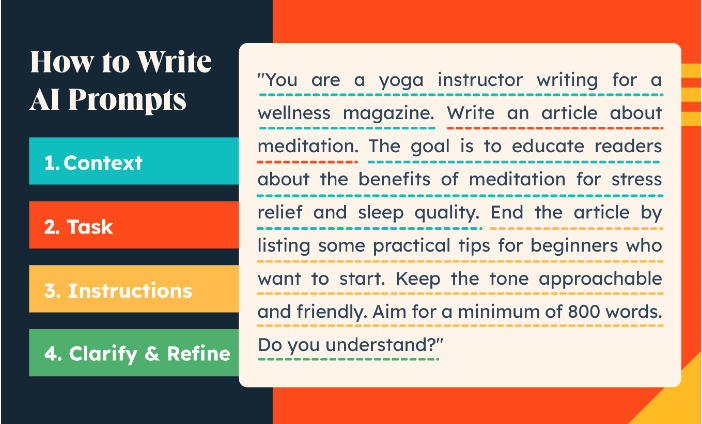

Once you have identified your objective, the next step in the Prompt Engineering process is to craft effective instructions or queries to guide the model.

Recognizing the Language Model's Capacity and Limitations

Crafting effective instructions or queries begins with understanding the underlying language model thoroughly.

Comprehending its strengths and limitations will play a crucial role in how you frame your prompts, ensuring they guide the AI to produce the desired output optimally.

Creating Clear and Specific Queries

For efficient prompt engineering, queries need to be clear and as specific as possible. Vagueness may lead to inaccurate or irrelevant responses from the model.

The better the clarity of your instructions, the more precise and useful the generated text will likely be.

Iteratively Refining Your Prompts

After initially crafting your prompts, it's important to iteratively refine them based on AI responses.

This involves testing each input on the model, analyzing the output, and making required tweaks to improve the quality of the generated text.

Utilizing Precise Vocabulary and Syntax

Choosing precise vocabulary and syntax is crucial. Word choice impacts how the language model interprets your prompt and subsequently affects the quality of generated text.

Guiding the AI with precise terminology and commands results in more refined and contextually appropriate outputs.

Incorporating Contextual Hints within Prompts

While crafting prompts, including contextual hints helps guide the AI's response.

For example, stating 'Write a brief summary about...' instead of simply stating 'Tell me about...' ensures the AI understands the desired output's formatting and brevity.
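The difference between a vague and a specific query can be sketched in code. The helper below is a hypothetical `build_prompt` function (not part of any library) that assembles an instruction plus optional hints about audience, length, and format.

```python
from typing import Optional


def build_prompt(
    task: str,
    topic: str,
    audience: Optional[str] = None,
    max_words: Optional[int] = None,
    output_format: Optional[str] = None,
) -> str:
    """Assemble a prompt from an instruction plus optional contextual hints."""
    parts = [f"{task} about {topic}."]
    if audience:
        parts.append(f"Write for {audience}.")
    if max_words:
        parts.append(f"Keep it under {max_words} words.")
    if output_format:
        parts.append(f"Format the answer as {output_format}.")
    return " ".join(parts)


vague = build_prompt("Tell me", "renewable energy")
specific = build_prompt(
    "Write a brief summary",
    "renewable energy",
    audience="high school students",
    max_words=100,
    output_format="a single paragraph",
)
print(vague)
print(specific)
```

The second prompt states the expected length, audience, and format explicitly, which leaves the model less room to guess.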

Step 3

Providing Context and Examples

Once you have crafted effective instructions or queries, the next step in the Prompt Engineering process is to provide context and examples to help the AI understand your input.

Understanding the Importance of Context

In prompt engineering, providing context is crucial for obtaining desired outputs. The right context helps steer the AI's responses more efficiently.

Whether you're drafting a story or raising a query, including relevant background or supplementary details can significantly enhance the AI's response quality.

Constructing Intricate Prompts

Instructing the AI goes beyond simple command formulation. Crafting intricate prompts that embed context and desired output style or format leads to enhanced results.

For example, if you wish for an AI to give a brief explanation, hint at the expected length within the command itself.

Incorporating Examples within Prompts

Sometimes, it's beneficial to incorporate examples within the prompts to guide the AI. Examples help clarify your expectations and provide a model for the AI to follow.

This methodology can be especially useful when seeking complex or nuanced responses.
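Embedding examples in a prompt is often called few-shot prompting. The sketch below shows one possible layout, assuming a simple "Input:" / "Output:" convention; the function name and the labels are illustrative choices, not a required format.

```python
def build_few_shot_prompt(instruction: str, examples: list, query: str) -> str:
    """Build a prompt with worked examples followed by the new input to complete."""
    lines = [instruction, ""]
    for example_input, example_output in examples:
        lines.append(f"Input: {example_input}")
        lines.append(f"Output: {example_output}")
        lines.append("")  # blank line between examples
    # End with an open "Output:" so the model continues the pattern.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Classify the sentiment of each review as positive or negative.",
    [
        ("The battery lasts all day.", "positive"),
        ("The screen cracked within a week.", "negative"),
    ],
    "Setup was quick and painless.",
)
print(prompt)
```

Two or three short examples are usually enough to convey the expected format; the right number depends on the model and the task.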

Testing Contextual Inputs

After crafting complex prompts with embedded context and examples, it's essential to test these with the language model.

Test iterations can identify if the AI interprets and responds to the context as desired, and adjustments can be made accordingly.

Refining Contextual Information

Once the contextual prompts have been tested, refinement is often necessary to optimize results.

Use the AI's responses as feedback; if the output isn't as expected, tweak the prompt's contextual hints or examples to better steer the AI. It's an iterative process to perfect prompt engineering.
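The test-and-refine loop described above can be sketched as a small comparison between candidate prompts. Here `fake_model` is a hypothetical stand-in for a real model call, and `within_word_limit` is an assumed scoring rule chosen only for illustration.

```python
def pick_best_prompt(generate, candidates, score):
    """Run each candidate prompt through the model and return the best-scoring one."""
    scored = [(score(generate(prompt)), prompt) for prompt in candidates]
    best_score, best_prompt = max(scored, key=lambda pair: pair[0])
    return best_prompt, best_score


def fake_model(prompt: str) -> str:
    # Stand-in for a real model: answers briefly only when asked to be brief.
    length = 10 if "brief" in prompt.lower() else 60
    return " ".join(["word"] * length)


def within_word_limit(output: str) -> float:
    # Score 1.0 when the output stays within 20 words, otherwise 0.0.
    return 1.0 if len(output.split()) <= 20 else 0.0


candidates = [
    "Tell me about tides.",
    "Write a brief summary about tides.",
]
best_prompt, best_score = pick_best_prompt(fake_model, candidates, within_word_limit)
print(best_prompt, best_score)
```

In practice, `generate` would wrap whichever model you are using, and the scoring function would reflect the metrics defined in Step 1.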

Step 4

Iterating and Fine-Tuning Your Prompts

The next step in the Prompt Engineering process is to iterate on and fine-tune your prompts until they deliver the desired results. Here, fine-tuning means adjusting the prompts themselves, not retraining the model.

- Monitoring AI Responses: As you work on prompt engineering, closely monitor the AI's responses for alignment with your desired outputs.

Evaluating the AI's performance helps to identify areas where the prompts can be improved, so that the model's output becomes more useful and reliable.

- Implementing Gradual Adjustments: The fine-tuning process entails implementing gradual adjustments to your prompts based on the AI's responses.

This might involve refining the vocabulary, syntax, context, or examples to obtain better results. The process can be time-consuming, but it's necessary to optimize the system's output.

- Conducting Systematic Iterations: Iterating systematically (testing prompts, evaluating AI responses, and adjusting the input accordingly) leads to more effective prompt engineering.

This rigorous and structured approach enables prompt engineers to discover the most effective solutions and maximize the AI model's performance.

- Considering Alternative Prompt Formulations: Sometimes, it's beneficial to explore alternative ways of formulating prompts.

Trying different instructions, questions, or context settings can yield new insights and help you better understand the AI's behavior, ultimately leading to better-engineered prompts.

- Analyzing the Response Consistency: When iterating and fine-tuning, it's essential to analyze the consistency of the AI's responses to ensure that the prompt not only generates the desired response once but does so reliably.

Consistency is an important factor in determining the effectiveness of a prompt and its overall value in the prompt engineering process.
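One rough way to quantify response consistency is to send the same prompt several times and measure how often the most common answer appears. In the sketch below, `fake_model` and its canned answers are hypothetical stand-ins for a real model, and exact matching after normalization is an assumed simplification that only suits short, factual answers.

```python
import itertools
from collections import Counter


def consistency_rate(generate, prompt: str, runs: int = 5) -> float:
    """Return the share of runs that produced the most common (normalized) response."""
    if runs <= 0:
        raise ValueError("runs must be positive")
    # Normalize whitespace and case so trivial differences are not counted.
    responses = [generate(prompt).strip().lower() for _ in range(runs)]
    most_common_count = Counter(responses).most_common(1)[0][1]
    return most_common_count / runs


# Stand-in for a real model: cycles through canned answers.
_canned_answers = itertools.cycle(["Paris", "Paris", "paris ", "Lyon", "Paris"])


def fake_model(prompt: str) -> str:
    return next(_canned_answers)


rate = consistency_rate(fake_model, "What is the capital of France?", runs=5)
print(rate)  # 0.8
```

For longer, free-form outputs, exact matching is too strict, and a similarity measure or human rating would be a better fit.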

Step 5

Evaluating and Measuring Success

After implementing and fine-tuning your Prompt Engineering solution, it is important to evaluate and measure its success.

Defining Relevant Metrics

To evaluate and measure the success of your prompt engineering, establish relevant metrics in line with the desired output.

Criteria such as response accuracy, relevance, coherence, or creativity, depending on the specific goals, will help you quantify success and identify areas for further improvements.

Assessing Output Alignment with Project Goals

Examine the AI-generated responses to verify if they align with your predefined project goals.

This will help determine the extent to which your engineered prompts successfully guide the AI model in producing the desired output.

Testing Against Diverse Inputs

To ensure your prompt engineering efforts are successful, test the prompts against a range of diverse inputs to check if the AI consistently produces reliable outputs.

The system's ability to handle varied situations and maintain its performance is crucial for gauging overall effectiveness.
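Testing against diverse inputs can be organized as a small test suite. The sketch below runs one prompt template over several inputs, including an edge case, and checks each output. `fake_model`, `is_valid_summary`, and the template wording are all illustrative assumptions rather than a real model or an established check.

```python
def run_test_suite(generate, template: str, inputs: list, check) -> list:
    """Apply the template to every input and record (input, output, passed)."""
    results = []
    for text in inputs:
        output = generate(template.format(text=text))
        results.append((text, output, check(output)))
    return results


def fake_model(prompt: str) -> str:
    # Stand-in for a real model: returns the first 8 words after the instruction.
    body = prompt.split(": ", 1)[1]
    return " ".join(body.split()[:8])


def is_valid_summary(output: str) -> bool:
    # A summary passes if it is non-empty and at most 8 words long.
    word_count = len(output.split())
    return 0 < word_count <= 8


template = "Summarize in at most 8 words: {text}"
inputs = [
    "The quick brown fox jumps over the lazy dog near the river bank.",
    "Short input.",
    "",  # edge case: empty input
]
results = run_test_suite(fake_model, template, inputs, is_valid_summary)
passed = sum(1 for _, _, ok in results if ok)
print(f"{passed}/{len(results)} cases passed")  # 2/3 cases passed
```

The failing empty-input case is the kind of result that prompts a follow-up decision, such as validating inputs before they reach the model or instructing the model on how to handle missing text.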

Comparing Model Performance Before and After Engineering

Compare the AI model's performance before and after implementing the engineered prompts.

This comparison will reveal the extent of improvement and provide insights into the effectiveness of your prompt engineering efforts.
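A before-and-after comparison can be as simple as averaging the same score over outputs from the original prompt and the engineered one. In the sketch below, the outputs are synthetic filler strings rather than real model responses, and `within_word_limit` is an assumed scoring rule used only to make the example concrete.

```python
from statistics import mean


def compare_prompts(score, baseline_outputs: list, engineered_outputs: list) -> dict:
    """Average a score over both sets of outputs and report the difference."""
    baseline = mean([score(output) for output in baseline_outputs])
    engineered = mean([score(output) for output in engineered_outputs])
    return {
        "baseline": baseline,
        "engineered": engineered,
        "improvement": engineered - baseline,
    }


def within_word_limit(output: str) -> float:
    # Score 1.0 when the output stays within 20 words, otherwise 0.0.
    return 1.0 if len(output.split()) <= 20 else 0.0


# Synthetic placeholder outputs (repeated filler words), not real model output.
baseline_outputs = ["word " * 50, "word " * 10]
engineered_outputs = ["word " * 10, "word " * 12]

report = compare_prompts(within_word_limit, baseline_outputs, engineered_outputs)
print(report)
```

For a fair comparison, both sets of outputs should come from the same model, the same inputs, and the same settings, so that the prompt is the only thing that changed.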

Gathering Feedback from Stakeholders

Solicit feedback from stakeholders to evaluate how well the AI model serves their needs and expectations.

Gathering feedback not only helps to measure success but also offers essential insights into areas where further refinement and improvement may be required.

This valuable information will support the ongoing progress of your prompt engineering initiatives.

Challenges and Limitations of Prompt Engineering AI

While Prompt Engineering AI has shown great potential in improving the accuracy and performance of language models, it is not without its challenges and limitations.

In this section, we will discuss some of the common challenges faced when implementing Prompt Engineering AI.

Understanding AI Models

One of the most significant challenges in prompt engineering is fully understanding the AI model's operation.

The internal workings of models like GPT-3 are complex, and understanding how they process or respond to prompts is not straightforward.

Achieving Predictability

Ensuring predictable outcomes from prompts often proves challenging.

A model might reply differently to similar prompts depending on nuances in the phrasing, making it difficult to engineer prompts that generate consistent results.

Lack of Explanation

AI models typically don't expose the reasoning behind their responses, making it hard to understand the logic that produced them.

This opacity makes unexpected or inconsistent outputs harder to diagnose, which hinders the effectiveness of prompt engineering.

Bias and Ethical Considerations

AI language models can generate biased or offensive content based on the data they've been trained on.

Prompt engineering must confront these ethical challenges to ensure safe and impartial use of language models.

Time and Effort

It takes significant time and effort to refine prompts for the best results.

Prompt engineering is a process of continuous iteration and learning, tracking model responses, adjusting the prompts, and building on the feedback, which can be demanding.

Dependence on Size and Scope of Models

The effectiveness of prompt engineering is fundamentally tied to the size and scope of the AI models.

Smaller or less sophisticated models might not be capable enough to generate high-quality, nuanced responses to complex prompts.

Conclusion

Prompt engineering AI holds tremendous potential for unlocking the capabilities of language models.

By evaluating prompt effectiveness, leveraging tools and resources, following best practices, and addressing challenges, you can harness the power of prompt engineering to create remarkable AI-driven applications.

Prompt engineering AI is an evolving domain, so keep experimenting, learning, and exploring new possibilities.

The possibilities are limitless, and you are at the forefront of this transformative technology.

Frequently Asked Questions (FAQs)

What is prompt engineering?

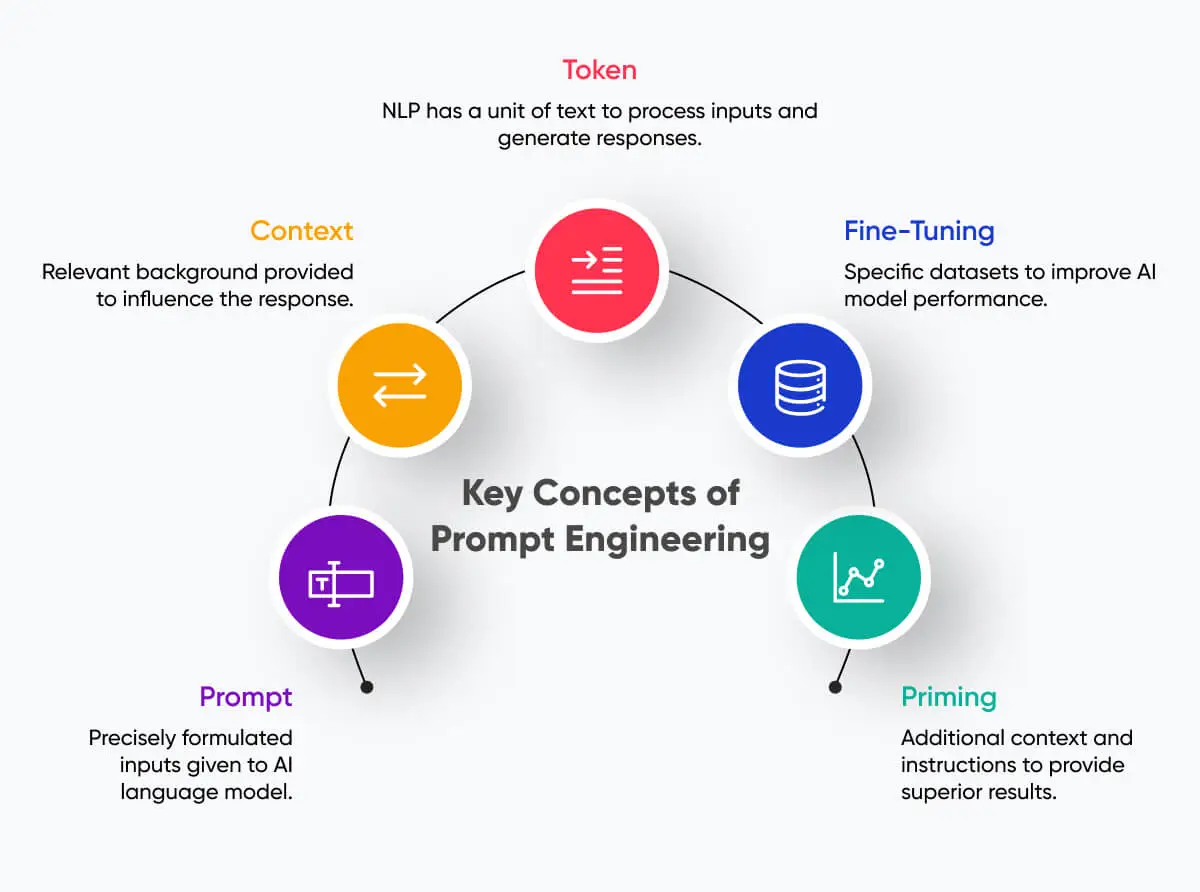

Prompt engineering involves designing and refining prompts or input stimuli used in natural language processing (NLP) models, particularly in large language models like GPT (Generative Pre-trained Transformer).

It aims to optimize the quality, relevance, and diversity of generated text outputs by guiding model behavior through well-crafted prompts.

What are the benefits of prompt engineering AI?

Prompt engineering AI allows users to control and shape the output of AI language models.

It enhances creativity, improves content generation, and enables the development of customized conversational experiences.

Can prompt engineering AI be used for content creation?

Yes, prompt engineering AI is highly valuable for content creation.

It helps writers generate engaging blog intros and persuasive product descriptions and even provides story ideas for creative writing.

What are some best practices for effective prompt engineering?

Effective prompt engineering involves:

- Defining clear goals.
- Using concise and specific language.
- Incorporating context.
- Continuously iterating on prompts for improvement.

Are there any tools available for prompt engineering AI?

Yes, several tools and platforms are available for prompt engineering AI, such as OpenAI's Playground, which provides access to models like GPT-3.5 Turbo, and Hugging Face.

These platforms provide user-friendly interfaces and functionalities to create and experiment with prompts.

How can prompt engineering AI help with chatbot development?

Prompt engineering AI can enhance chatbot development by enabling the creation of engaging conversational experiences.

Designing prompts that guide the chatbot's responses can improve user interactions and optimize the chatbot's performance.

What are the challenges in prompt engineering AI?

Challenges in prompt engineering AI include:

- Addressing biases in AI-generated content.
- Ensuring output quality and coherence.
- Overcoming limitations of language models.

Is prompt engineering AI constantly evolving?

Yes, prompt engineering AI is a rapidly evolving field. New models, techniques, and research emerge regularly, providing continuous learning and improvement opportunities.

How can I ensure the ethical use of prompt engineering AI?

To ensure the ethical use of prompt engineering AI, it is important to consider potential biases, strive for fairness and inclusivity, and avoid generating unsafe or harmful content.

Regular review and iteration of prompts help align them with ethical standards.