Language models like BERT are incredible feats of technology, capable of understanding and generating human-like text. But they're not perfect.

BERT has limitations that hold it back from true language mastery.

In this post, we'll explore what BERT can't do - from a lack of common sense reasoning to an inability to grasp context and nuance. We'll dive into BERT's struggles with creativity, bias, and the real world.

You'll gain insights into BERT's inner workings and learn where it falls short. Plus, we'll compare BERT to other language models and discuss alternatives to overcome its shortcomings.

If you're curious about the boundaries of language AI or want to understand BERT's weaknesses, keep reading. This post illuminates the limitations lurking behind BERT's impressive capabilities.

Limitations of BERT

BERT excels at generating fluent text but needs more true understanding.

This section will help you grasp nuance, context, and logical reasoning beyond the given information. You'll also explore BERT's limitations in common sense, creativity, and originality.

So, let us start with the first limitation.

True Understanding

BERT, or Bidirectional Encoder Representations from Transformers, is a powerful language model that has garnered significant attention for its ability to generate human-like text.

However, it is essential to note that BERT needs a proper understanding of language.

While it excels at pattern recognition and can produce coherent sentences, it does not possess a more profound comprehension of the meaning or intent behind words.

One of BERT's limitations is its inability to differentiate between homonyms or ambiguous statements. With context, Google BERT may be able to interpret words with multiple meanings accurately.

This can lead to misleading or incorrect interpretations, as BERT relies heavily on statistical patterns rather than proper comprehension.

Another aspect where Google BERT needs to improve in proper understanding is its inability to grasp inference, implication, or context beyond the information given.

While it performs well in structured tasks such as answering factual questions or translating language, it may need help to comprehend subtle nuances or illogical statements in open-ended dialogue.

BERT's lack of common-sense reasoning prevents it from fully comprehending the world in the way humans do.

Common Sense Reasoning

One of Google BERT's significant limitations is its difficulty handling common-sense reasoning. While BERT can provide accurate responses to factual questions or generate coherent text, it cannot grasp basic logical reasoning or infer knowledge not explicitly stated.

This limitation hinders BERT from engaging in more complex tasks that require human-like common-sense understanding.

For example, when asked, "What happens to water when it freezes?" BERT can correctly answer that water turns into ice.

However, if asked, "Can you eat a house?" BERT may need help identifying the statement's absurdity and providing an incorrect or nonsensical response.

This limitation arises because BERT is trained on large amounts of text data and needs more humans' extensive background knowledge and reasoning abilities.

Creativity and Originality

Although BERT can generate human-like text and effectively paraphrase existing information, it is incapable of true creativity or originality.

Google BERT combines and rearranges words and phrases from its training data to form coherent sentences. It does not possess the ability to generate truly novel ideas or concepts.

While BERT excels at tasks such as language translation or summarization, it is limited by its pre-training objective, which is to predict missing words in sentences based on the surrounding context.

As a result, BERT is inherently constrained to the patterns it has learned from its training data and cannot produce genuinely original thoughts.

Bias and Fairness

BERT, being a language model, can inherit biases from the data on which it is trained. This can result in potentially discriminatory or unfair outputs.

Addressing and mitigating these biases is essential to ensure fairness and inclusivity. Careful selection of training data and implementing debiasing techniques are crucial steps in reducing biases within BERT models.

Detecting and mitigating biases in BERT models requires thorough training data analysis, and examining potential biases in different aspects such as gender, race, or other sensitive attributes.

By understanding and actively working to address biases, we can aim for more equitable and unbiased language generation.

Physical World Interaction

While Google BERT has shown excellent capability in processing and understanding natural language, it cannot interact with the physical world. BERT models mainly focus on language comprehension and generation tasks but cannot perform actions or control objects in the real world.

For example, BERT may be able to process instructions on how to drive a car, but it cannot physically operate a vehicle or experience the challenges of driving. This limitation restricts BERT's practical applications in scenarios that require physical interaction.

Explainability and Transparency

Although BERT is a powerful language model, its decision-making process can be difficult to interpret and understand.

This lack of explainability and transparency brings challenges when debugging errors or ensuring responsible and accountable use of BERT models.

Due to the complexities of deep learning, it is often challenging to trace back how BERT arrives at its conclusions. This opacity can create concerns about bias, fairness, and the potential for unintended outputs.

The lack of transparency in BERT's decision-making process hinders trust and hampers effective troubleshooting when issues arise.

Limited Adaptability

BERT models are typically trained for specific tasks and require substantial retraining to be applied to new applications or domains. This limits their adaptability and flexibility when faced with new contexts or requirements.

Adapting a BERT model to a different task or domain often requires extensive retraining on new, domain-specific data. This resource-intensive process can hinder the practicality and efficiency of deploying BERT models in various applications.

Now, we will compare Google BERT with other language model and their limitations.

Comparison with other language models and their limitations

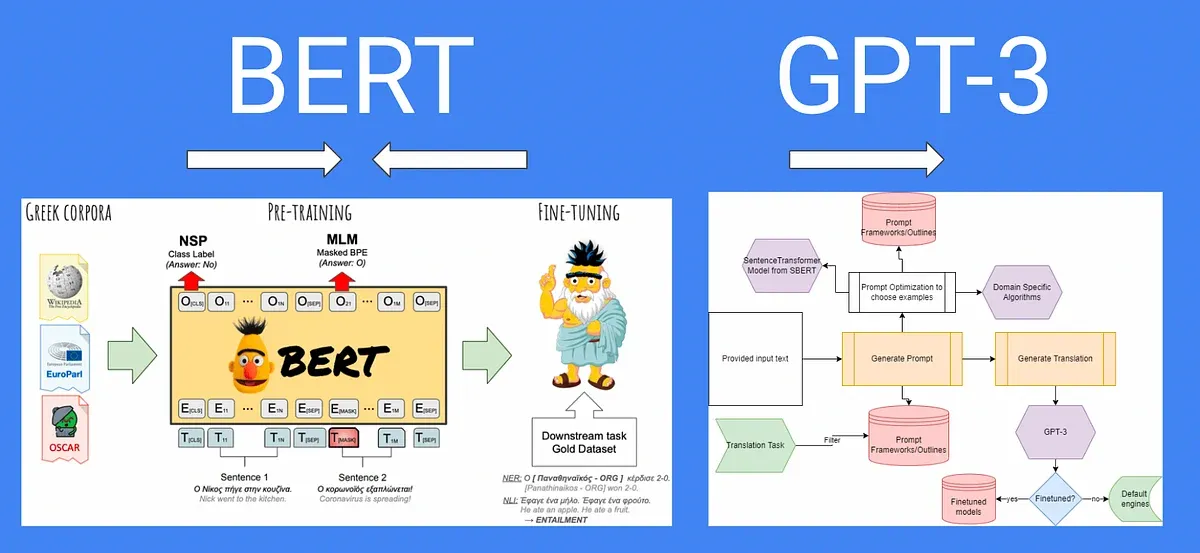

Compared to other language models, BERT LLM's limitations become more apparent. GPT-3, for example, excels at generating coherent and contextually accurate text but may struggle with factual accuracy.

On the other hand, Transformer-XL showcases better handling of long-range dependencies, making it more suitable for tasks involving longer texts.

These comparisons emphasize that no single language model is without its own set of limitations, and choosing the appropriate model depends on the specific requirements of the task at hand.

Lastly, we will look at some possible alternative models for Google BERT.

BotPenguin houses experienced ChatGPT developers with 5+ years of expertise who know their way around NLP and LLM bot development in different frameworks.

And that's not it! They can assist you in implementing some of the prominent language models like GPT 4, Google PaLM, and Claude into your chatbot to enhance its language understanding and generative capabilities, depending on your business needs.

Possible improvements or alternative approaches for BERT

To address the limitations of BERT LLM, researchers and developers have explored various improvements and alternative approaches.

Fine-tuning techniques, such as domain-specific fine-tuning or using additional training datasets, can help mitigate some of the vocabulary limitations by tailoring the model to specific contexts.

Implementing more robust tokenization strategies or adopting character-level embeddings can also aid in handling out-of-context words and improve performance on longer or historical texts.

Additionally, alternative models like XLNet or Roberta have been introduced, aiming to overcome some of BERT LLM's limitations.

These models incorporate different pretraining objectives or architectural modifications to enhance contextual understanding and address specific challenges.

Conclusion

BERT is an impressive language model, but it has limitations. As we've explored, it struggles with true understanding, common-sense reasoning, creativity, and real-world interaction.

While powerful, it lacks the depth of human comprehension.

However, researchers are actively working to address these shortcomings. By fine-tuning BERT, implementing new techniques, or exploring alternative models, we can push the boundaries of what language AI can achieve.

The quest for more human-like language understanding continues. While BERT showcases remarkable capabilities, there's room for growth and innovation in natural language processing.

Stay tuned as we uncover solutions to BERT's limitations and move towards brilliant language models.

Suggested Reading:

LLM Use-Cases: Top 10 Industries Using Large Language Models

Frequently Asked Questions (FAQs)

What are the limitations of BERT LLM in understanding context beyond a certain length?

BERT LLM struggles with long-range dependencies, often failing to capture intricate relationships between distant words in a text, which can hinder its comprehension of nuanced context in lengthy passages.

Can BERT LLM adequately handle rare or out-of-vocabulary words?

BERT LLM's vocabulary is limited to what it was trained on, making it less effective at processing rare or unseen words, potentially leading to misinterpretations or inaccuracies in understanding text containing such vocabulary.

How does BERT LLM perform in understanding context in languages it wasn't trained on?

BERT LLM's effectiveness significantly decreases when applied to languages it wasn't trained on, as it lacks the necessary linguistic knowledge and contextual understanding, often leading to poor performance and inaccurate interpretations.

Can BERT LLM effectively handle tasks involving structured or tabular data?

No, BERT LLM is primarily designed for processing natural language text and lacks the ability to effectively interpret structured or tabular data formats, limiting its applicability in tasks that require understanding such data representations.

How does BERT LLM handle tasks requiring commonsense reasoning or world knowledge?

BERT LLM's performance in tasks requiring commonsense reasoning or world knowledge is limited, as it lacks explicit mechanisms for incorporating such knowledge, often resulting in superficial or incorrect responses when faced with scenarios requiring deeper understanding of common knowledge.