Introduction

Ever wondered how to create a custom chatbot that engages in human-like conversations?

Look no further! In this blog, we'll guide you through the process of building a chatbot using LLama 2, a powerful language model.

LLama 2 is a game-changer in chatbot development. It's easy to use and packed with features that make your chatbot stand out. From understanding context to generating coherent responses, LLama 2 is your key to creating a chatbot that feels almost human.

But why choose LLama 2 over traditional methods? It offers advanced natural language processing, personalization, and scalability. This means your chatbot can understand and interpret user inputs better, provide tailored responses, and handle multiple users simultaneously.

This blog will explore various use cases for the LLama chatbot, from customer service to education and entertainment.

We'll also guide you through setting up your development environment, installing required libraries, and obtaining a LLama 2 model.

But that's not all! We'll also discuss designing your chatbot, integrating LLama 2, training and fine-tuning it, and deploying and managing it.

By the end of this blog, you'll have all the tools you need to create a custom chatbot that engages and delights users.

So, let's dive in and discover the power of LLama 2 in chatbot development!

Understanding LLama 2 and Chatbot Development

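LLama 2 is a family of large language models released by Meta that can serve as the engine behind a chatbot.

Because the model already understands and generates natural language, it makes developing chatbots easier and more efficient. LLama 2 stands out because it has critical features that empower chatbot creation.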

Key Features of LLama 2 for Chatbot Development

The key features include:

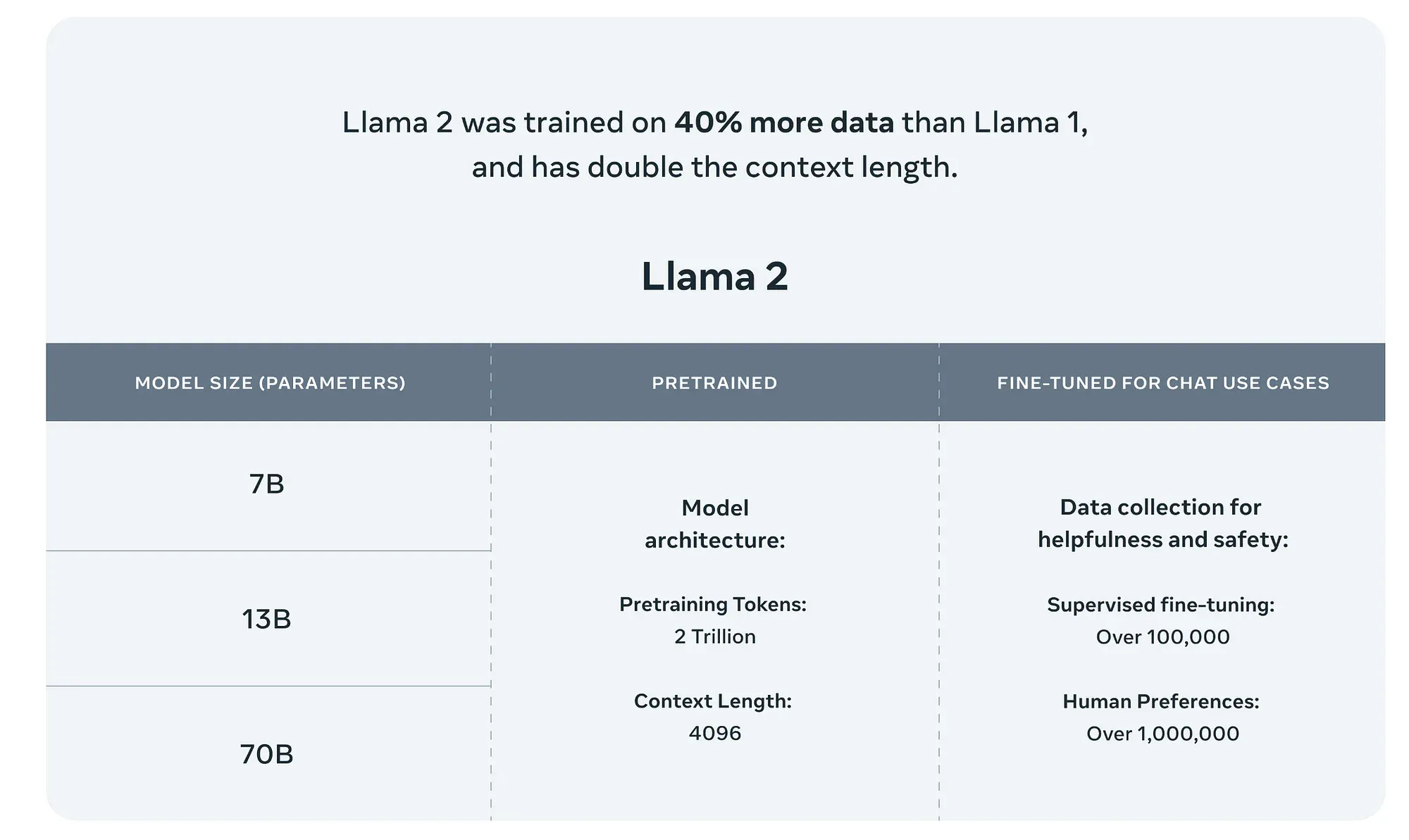

- Large Language Model Capabilities: LLama 2 leverages a powerful large language model that allows chatbots to understand and generate human-like text.

This model is trained on vast amounts of data, enabling chatbots to respond effectively to a wide range of user inputs.

- Text Generation: With LLama 2, chatbots can generate coherent and contextually relevant responses.

This makes conversations feel more natural and human-like, ensuring a smoother user experience.

- Conversational Context: LLama 2 can understand and track the context of a conversation.

This allows chatbots to maintain a coherent dialogue with users over multiple turns, resulting in more meaningful and personalized interactions.

Why Use LLama 2 for Chatbots?

There are several advantages to using LLama 2 over traditional chatbot methods. Let's take a look at some of them:

Advantages of LLama 2 over Traditional Chatbot Methods

The advantages include:

- Natural Language Processing: LLama 2 employs advanced natural language processing techniques.

Chatbots built with LLama 2 can more accurately understand and interpret user input and decipher the nuances of language, allowing for more effective communication.

- Personalization: LLama 2 enables chatbots to personalize their responses based on user preferences and previous interactions.

This creates a more tailored and engaging experience for users, increasing their satisfaction and encouraging them to continue using the chatbot.

- Scalability: LLama 2 allows for the creation of chatbots that can handle a large number of users simultaneously.

This scalability is essential, especially for businesses that anticipate high chatbot usage. LLama 2 ensures the chatbot remains responsive and performs well, even during peak times.

Examples of Use Cases for LLama 2 Chatbots

LLama 2 can be applied to various industries and purposes. Here are a few examples:

- Customer Service: LLama chatbots excel in customer service scenarios.

They can provide instant and helpful responses to customer queries, improving customer satisfaction and reducing the workload on customer support teams.

- Education: LLama 2 can be used to create chatbots that assist in educational settings.

These chatbots can provide personalized learning experiences, answer questions, and guide students through learning materials.

- Entertainment: LLama chatbots can be used in entertainment applications like gaming or interactive storytelling.

They can engage users in dynamic and immersive conversations, enhancing the overall experience.

Next, we will see how to set up the LLama chatbot development environment.

Setting Up Your LLama 2 Chatbot Development Environment

Before you develop your LLama chatbot, you'll need to decide which development platform suits your needs: local or cloud-based.

Let's explore the advantages and disadvantages of each option.

Advantages and Disadvantages of Local Development

Local development involves setting up LLama 2 on your computer. Here are some pros and cons to consider:

Advantages

The advantages include:

- You have full control over your development environment.

- You can work offline without relying on an internet connection.

- It may be more cost-effective if you already have the necessary hardware.

Disadvantages

The disadvantages include:

- It requires more technical expertise to set up and maintain.

- Limited processing power and memory may impact performance.

- Scaling your chatbot may be more challenging.

Benefits and Considerations for Cloud-Based Development

On the other hand, cloud-based development leverages online platforms for hosting and running LLama 2.

Here are some benefits and considerations:

Benefits

The benefits include:

- Easy setup and scalability, as cloud platforms handle infrastructure management.

- High processing power and memory resources are available.

- Collaboration with team members is simplified.

Considerations

The considerations include:

- Costs may increase depending on usage and platform pricing.

- Internet connectivity is required for development and deployment.

- Dependency on the cloud platform providers.

Installing Required Libraries

You'll need to install libraries to start building chatbots with LLama 2. Here's a step-by-step guide for library installation, along with code examples!

Step-by-Step Guide for Library Installation

The steps include:

Step 1

Install Streamlit for building interactive user interfaces:

pip install streamlit

Step 2

Install the llama-cpp-python library for LLama 2 integration:

pip install llama-cpp-python
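Once both commands finish, a quick check can confirm that the libraries are visible to your Python interpreter. The sketch below is a minimal example that assumes the import names are `streamlit` and `llama_cpp`; the helper name `missing_modules` is made up for illustration.

```python
import importlib.util

# Import names assumed for the two packages installed above.
REQUIRED_MODULES = ["streamlit", "llama_cpp"]


def missing_modules(names):
    """Return the module names that cannot be found in the current environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All required modules are importable.")
```

If a module is reported as missing, make sure you ran `pip` for the same interpreter or virtual environment that runs the script.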

Troubleshooting Common Installation Issues

Encountering issues during library installation is not uncommon. Here are some common problems and their possible solutions:

- If you encounter permission errors, try running the installation command with administrative privileges or using a virtual environment.

- If dependencies conflict, you can try specifying the library versions explicitly during installation.

- In case of network errors, check your internet connection or use a different mirror or repository for package downloads.

Obtaining a LLama 2 Model

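To develop chatbots with LLama 2, you'll need a language model. Meta distributes the LLama 2 weights free of charge under its community license, and paid options generally take the form of hosted services built on those models. There are both free and paid options to consider.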

Free and Open-Source LLama 2 Models

Free and open-source LLama 2 models are available, but they may have some performance limitations and considerations.

Keep in mind:

- The performance and capabilities of these models may not match those of paid models.

- The level of customization and fine-tuning options may be limited.

- They can still provide a good starting point for developing basic chatbots or experimenting with LLama 2.

Paid LLama 2 Models with Advanced Features

Paid LLama 2 models offer more advanced features and customization options. Here are some considerations when selecting a paid model:

- Compare the pricing plans to find the most suitable option for your budget and usage requirements.

- Evaluate the advanced features, such as increased response quality or additional conversational context capabilities.

- Consider the support and documentation provided by the model provider.
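Whichever model you pick, loading a local copy with llama-cpp-python might look like the sketch below. It assumes you have downloaded a model file in a format your installed version of the library supports (recent versions expect GGUF); the file path, the default system prompt, and the helper names `load_llama` and `ask` are placeholders for illustration.

```python
from pathlib import Path


def load_llama(model_path, n_ctx=2048):
    """Load a local model file with llama-cpp-python."""
    path = Path(model_path)
    if not path.is_file():
        raise FileNotFoundError(f"Model file not found: {path}")
    # Imported lazily so the rest of this module works without the library.
    from llama_cpp import Llama

    return Llama(model_path=str(path), n_ctx=n_ctx)


def ask(llm, question, system_prompt="You are a helpful assistant."):
    """Send one question to the model and return the reply text."""
    result = llm.create_chat_completion(
        messages=[
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": question},
        ],
        max_tokens=256,
    )
    return result["choices"][0]["message"]["content"]
```

A typical call would be `llm = load_llama("models/your-model.gguf")` followed by `print(ask(llm, "Hello!"))`, where the path points at the file you downloaded.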

Next, we will cover how to design your LLama chatbot.

Designing Your Chatbot Using LLama 2 Language Model

To design an effective chatbot, it's crucial to determine its purpose and target audience first.

Let's explore the process of identifying specific needs and goals for your chatbot and understanding user demographics and expectations.

Identifying Specific Needs and Goals for Your Chatbot

Start by clarifying the purpose of your chatbot. What problem or task should it help users with? Is it customer support, providing information, or assisting with a specific task?

Understanding the specific needs your chatbot is designed to address will shape its functionality and features.

Next, define your goals. What outcomes do you want to achieve with your chatbot? Are you aiming to increase customer satisfaction, reduce response time, or drive sales conversions? Clear goals will guide the design and development process.

Understanding User Demographics and Expectations

Knowing your target audience is crucial for creating a chatbot that resonates with users. Consider the demographics of your user base, such as age, gender, location, and language preferences.

Understanding your users' expectations and communication style will help you tailor the chatbot's tone and language accordingly.

Scripting Conversation Flows and User Journeys

Once you clearly understand your chatbot's purpose and audience, it's time to script the conversation flows and map out user journeys.

This involves designing how the chatbot will interact with users and guide them through the conversation.

Mapping Out Conversation Branches and Decision Trees

Creating conversation branches helps your chatbot navigate user queries and respond appropriately.

Visualize the different paths users can take and define decision points where the chatbot needs to make choices or ask clarifying questions.

By mapping out these branches and decision trees, you ensure that your chatbot can handle a variety of user inputs and provide helpful and relevant information at each step.
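A conversation tree like this can be written down as plain data before any model is involved. The following sketch uses a hypothetical support flow; the node names, prompts, and keyword matching are illustrative assumptions, not a prescribed design.

```python
# Hypothetical flow for a support bot; every node has a prompt and
# a mapping from keywords to the next node.
FLOW = {
    "start": {
        "prompt": "Do you need help with an order or with your account?",
        "branches": {"order": "order_help", "account": "account_help"},
    },
    "clarify": {
        "prompt": "Sorry, I didn't catch that. Is it about an order or your account?",
        "branches": {"order": "order_help", "account": "account_help"},
    },
    "order_help": {"prompt": "Please share your order number.", "branches": {}},
    "account_help": {"prompt": "Is this about login or billing?", "branches": {}},
}


def next_node(current, user_text):
    """Pick the next node by keyword; ask a clarifying question if nothing matches."""
    branches = FLOW[current]["branches"]
    text = user_text.lower()
    for keyword, target in branches.items():
        if keyword in text:
            return target
    # Leaf nodes have no branches, so the conversation stays where it is.
    return "clarify" if branches else current
```

Keeping the tree in a dictionary makes it easy to review with non-developers and to add branches later without touching the matching logic.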

Designing Engaging and Informative Dialogue Options

The dialogue options presented to users should be engaging and informative. Craft conversational prompts and questions that encourage users to continue the conversation and provide the necessary information to fulfill their requests.

Consider using natural language and avoiding jargon or complicated language that may confuse users. Provide clear instructions and options to guide users toward their desired outcome.

Designing the User Interface

The design of your chatbot's user interface plays a vital role in its overall user experience.

Consider whether a text-based or graphical interface best suits your chatbot's purpose and target audience.
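For a text-based interface, Streamlit's chat elements are one option. The sketch below assumes a recent Streamlit release that includes `st.chat_input` and `st.chat_message`; `append_turn`, `run_chat_ui`, and `echo_reply` are illustrative names, and the echo function is only a stand-in for a real model call.

```python
def append_turn(history, role, content):
    """Return a new history list with one more message appended."""
    return history + [{"role": role, "content": content}]


def echo_reply(history):
    """Placeholder reply function; swap in a call to your model."""
    return "You said: " + history[-1]["content"]


def run_chat_ui(reply_fn):
    """Render a simple chat page; reply_fn maps the history to a reply string."""
    import streamlit as st  # imported here so the helpers above have no dependencies

    st.title("LLama 2 Chatbot")
    if "history" not in st.session_state:
        st.session_state.history = []

    # Replay earlier turns so the conversation stays visible across reruns.
    for message in st.session_state.history:
        with st.chat_message(message["role"]):
            st.write(message["content"])

    user_text = st.chat_input("Type your message")
    if user_text:
        st.session_state.history = append_turn(st.session_state.history, "user", user_text)
        with st.chat_message("user"):
            st.write(user_text)
        reply = reply_fn(st.session_state.history)
        st.session_state.history = append_turn(st.session_state.history, "assistant", reply)
        with st.chat_message("assistant"):
            st.write(reply)
```

To try it, save the code as `app.py`, add a final line `run_chat_ui(echo_reply)`, and start it with `streamlit run app.py`.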

Next, we will cover how to integrate LLama 2 with the chatbot.

Integrating LLama 2 with Your Chatbot

Now that you have decided to integrate LLama 2 with your chatbot, let's explore how you can send user input to LLama 2 for processing.

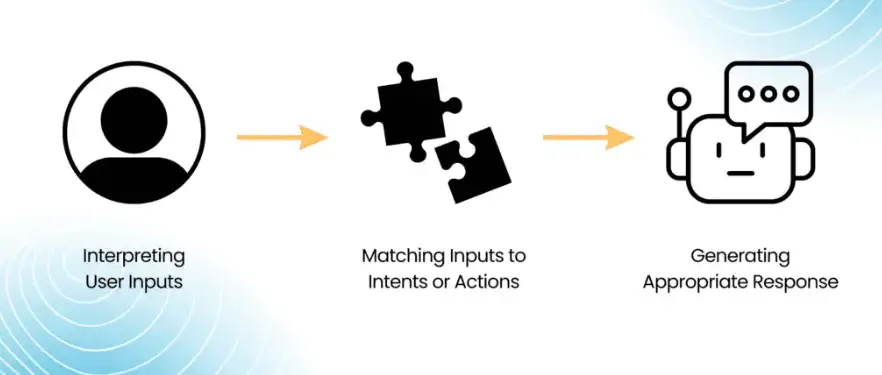

This involves pre-processing the user input for optimal results and formatting it to ensure compatibility with LLama 2.

Pre-Processing User Input for Optimal Results

Before sending user input to LLama 2, it's essential to clean the text data.

This means removing unnecessary characters, correcting spelling mistakes, and appropriately handling punctuation. Cleaning the user input can improve the accuracy of LLama 2's responses.

Additionally, it is crucial to identify the intent behind the user input. Is the user asking a question, making a statement, or requesting assistance? Understanding the intent helps LLama 2 generate more relevant and contextual responses.
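The cleaning and intent steps can start out very simple. The sketch below uses only the standard library; the keyword lists are rough assumptions for illustration, spelling correction is left out, and a production chatbot would likely use a trained classifier or the model itself to detect intent.

```python
import re

QUESTION_WORDS = {"what", "when", "where", "who", "why", "how", "which"}
REQUEST_PHRASES = ("help", "please", "can you", "i need")


def clean_text(text):
    """Remove control characters and collapse repeated punctuation and whitespace."""
    text = re.sub(r"[\x00-\x1f\x7f]", " ", text)  # control characters become spaces
    text = re.sub(r"([!?.,])\1+", r"\1", text)    # "!!!" becomes "!"
    text = re.sub(r"\s+", " ", text)              # runs of whitespace become one space
    return text.strip()


def guess_intent(text):
    """Classify input as 'question', 'request', or 'statement' with simple rules."""
    lowered = clean_text(text).lower()
    first_word = lowered.split(" ")[0]
    if lowered.endswith("?") or first_word in QUESTION_WORDS:
        return "question"
    if any(phrase in lowered for phrase in REQUEST_PHRASES):
        return "request"
    return "statement"
```

For example, `clean_text("  Hello!!!   world\n")` returns `"Hello! world"`, and `guess_intent("I need a refund")` returns `"request"`.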

Formatting User Input for LLama 2 Compatibility

To ensure compatibility with LLama 2, you must format the user input appropriately. This typically involves converting the user input into a specific format that LLama 2 can understand and process.

Check the documentation for your LLama 2 model and inference library to understand the required format, and follow the guidelines to prepare the user input accordingly.
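For the LLama 2 chat models, the published prompt template wraps each user turn in `[INST] ... [/INST]` and places an optional system prompt inside `<<SYS>>` markers in the first turn. The sketch below builds such a prompt by hand; the function name and the `(user, assistant)` pair structure are illustrative choices. Some libraries add the `<s>` token or apply the whole template for you, so verify what yours does before using a hand-built prompt.

```python
def build_llama2_prompt(system_prompt, turns):
    """Build a LLama 2 chat prompt.

    turns is a list of (user, assistant) pairs; use None as the assistant
    part of the last pair, which is the turn the model should answer.
    """
    parts = []
    for index, (user_msg, assistant_msg) in enumerate(turns):
        user_msg = user_msg.strip()
        if index == 0 and system_prompt:
            # The system prompt is embedded in the first user turn.
            user_msg = f"<<SYS>>\n{system_prompt}\n<</SYS>>\n\n{user_msg}"
        segment = f"<s>[INST] {user_msg} [/INST]"
        if assistant_msg is not None:
            segment += f" {assistant_msg.strip()} </s>"
        parts.append(segment)
    return "".join(parts)
```

Calling `build_llama2_prompt("Be brief.", [("Hi", None)])` produces a single `[INST]` block that contains the system prompt followed by the user message.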

Understanding LLama 2's Response Generation

LLama 2's response generation relies on various factors that influence the quality and relevance of the responses.

Let's explore these factors to help you better understand LLama 2's response generation process.

Factors Influencing LLama 2's Response

One crucial factor is context. LLama 2 considers the previous conversation history and the immediate context to generate coherent responses consistent with the ongoing conversation.

Additionally, LLama 2's response generation is influenced by its training data. The model is trained on a large corpus of text, which helps it understand language patterns and generate more contextually appropriate responses.
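Because the model only sees what fits in its context window, a chatbot usually has to decide how much history to send with each request. The sketch below keeps the most recent messages within a character budget. Characters are used here as a crude stand-in for tokens, and the `max_chars` default is an arbitrary assumption; a real implementation would count tokens with the model's tokenizer.

```python
def trim_history(history, max_chars=4000):
    """Keep the most recent messages whose combined length fits the budget.

    history is a list of {"role": ..., "content": ...} dictionaries.
    The latest message is always kept, even if it alone exceeds the budget.
    """
    kept = []
    used = 0
    for message in reversed(history):
        length = len(message["content"])
        if kept and used + length > max_chars:
            break
        kept.append(message)
        used += length
    kept.reverse()  # restore chronological order
    return kept
```

Dropping the oldest turns first is the simplest policy; summarizing older turns instead is a common alternative when early context matters.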

Interpreting and Utilizing Confidence Scores in Responses

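LLama 2 does not attach a ready-made confidence score to its responses. Many inference libraries can, however, return the log-probabilities of the generated tokens, and these can be turned into a rough confidence score that indicates how certain the model was about its output. Treat such a score as a heuristic: a fluent but wrong answer can still score highly.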

Confidence scores can be beneficial in scenarios where accuracy is crucial, such as when providing critical information or addressing legal and medical queries.

By setting a confidence threshold, you can filter out responses with lower confidence scores to ensure accuracy and reliability.
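One way to approximate a confidence score is to average the log-probabilities of the generated tokens, which some inference libraries can return on request. The sketch below assumes you already have that list of per-token log-probabilities; the function names, the 0.5 threshold, and the fallback message are illustrative assumptions that you would tune for your use case.

```python
import math


def mean_token_probability(token_logprobs):
    """Geometric-mean probability of the generated tokens (0.0 if none are given)."""
    values = [lp for lp in token_logprobs if lp is not None]
    if not values:
        return 0.0
    return math.exp(sum(values) / len(values))


def answer_or_fallback(text, token_logprobs, threshold=0.5,
                       fallback="I'm not sure about that. Let me connect you with a person."):
    """Return the model's text only if its score clears the threshold."""
    if mean_token_probability(token_logprobs) >= threshold:
        return text
    return fallback
```

A high score only means the model found its own wording likely, so for legal or medical topics this filter should complement human review rather than replace it.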

Handling Different Conversation Scenarios

Different conversation scenarios require different approaches in managing user interactions.

Let's explore strategies for handling open-ended questions and flexible conversations, as well as managing closed-ended questions and menu-driven interactions.

Strategies for Open-Ended Questions and Flexible Conversations

Open-ended questions and flexible conversations allow users to freely express their thoughts and provide detailed information.

Prompt users to elaborate on their queries and encourage them to share any relevant context. This approach enables LLama 2 to generate comprehensive and contextually appropriate responses.

Managing Closed-Ended Questions and Menu-Driven Interactions

In scenarios where users ask closed-ended questions or engage in menu-driven interactions, provide predefined options or a set of choices for users to select from.

This helps limit the scope of the conversation and allows LLama 2 to provide prompt and accurate responses based on the available options.
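Menu-driven turns often do not need the model at all: the chatbot can match the input against the predefined options and only fall back to LLama 2 when nothing matches. The menu entries and function names in this sketch are hypothetical.

```python
MENU = ["Track my order", "Return an item", "Talk to an agent"]  # hypothetical options


def format_menu(options):
    """Render the options as a numbered list for display."""
    return "\n".join(f"{i}. {option}" for i, option in enumerate(options, start=1))


def resolve_choice(user_text, options):
    """Return the chosen option, or None if the input is ambiguous or unknown."""
    text = user_text.strip().lower()
    if text.isdecimal():
        index = int(text) - 1
        return options[index] if 0 <= index < len(options) else None
    matches = [option for option in options if text and text in option.lower()]
    # Accept a text match only when it identifies exactly one option.
    return matches[0] if len(matches) == 1 else None
```

When `resolve_choice` returns `None`, the chatbot can either show the menu again or pass the free-text input to the model.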

By understanding these conversation scenarios and implementing the appropriate strategies, you can ensure smooth and effective interactions between your chatbot and LLama 2.

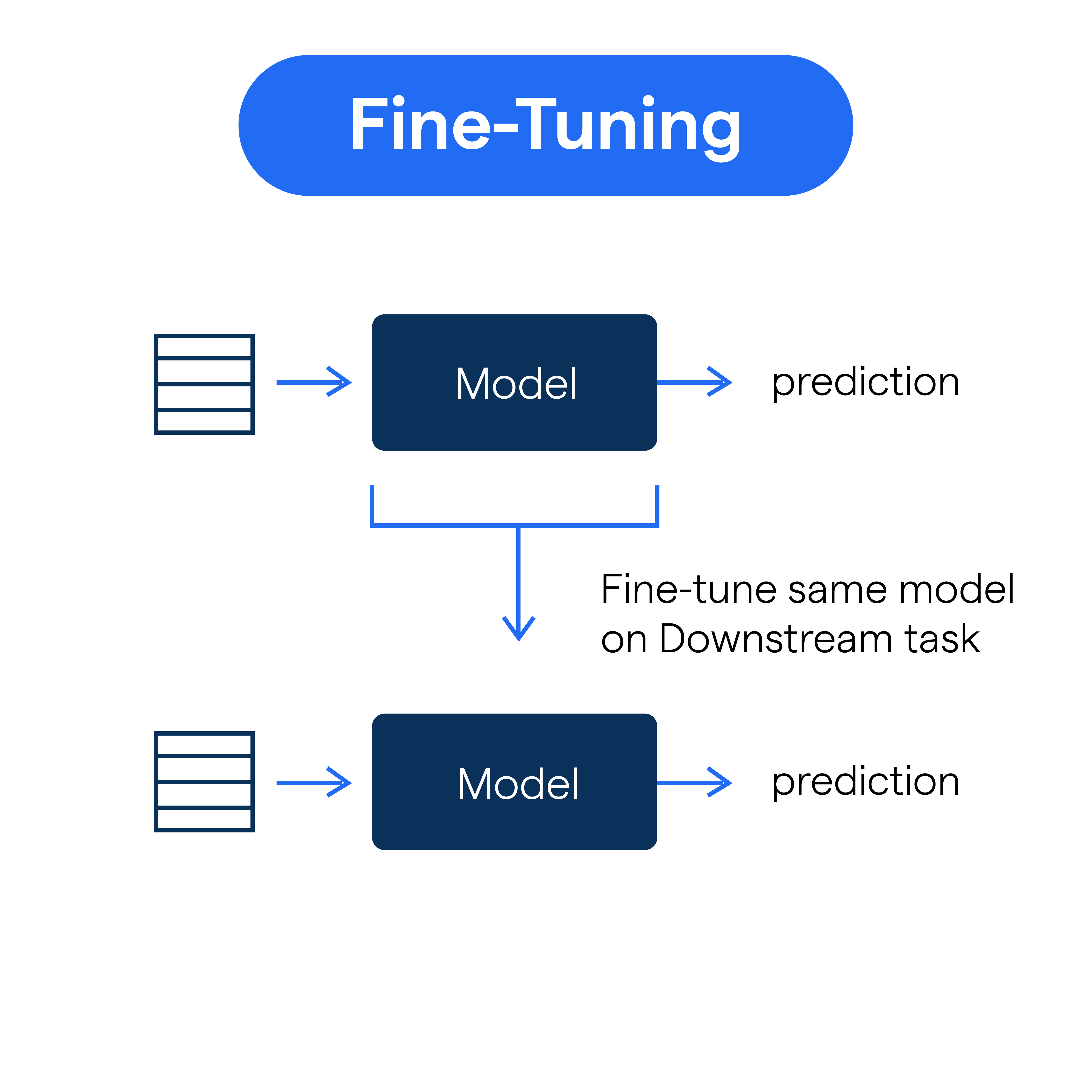

Training and Fine-Tuning Your Chatbot

To effectively train your chatbot with LLama 2, you'll need to provide sample data that consists of dialogue examples and user intents.

Types of Data Required for Effective Training

Dialogue examples are conversations between users and the chatbot. They should represent various scenarios and cover topics relevant to your chatbot's purpose.

These examples help LLama 2 understand how to generate responses based on different user inputs.

User intents refer to the intentions behind the user's input. They help LLama 2 grasp the context and generate responses that align with the user's needs.

By providing a variety of user intents, you enable LLama 2 to better understand and respond to different types of queries.
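Dialogue examples and intents are commonly stored one record per line in a JSON Lines file. The sketch below shows one possible layout; the field names `intent`, `user`, and `assistant` and the sample records are assumptions for illustration, and the fine-tuning tool you choose may expect a different schema.

```python
import json

# Hypothetical training examples, each labeled with the user's intent.
EXAMPLES = [
    {"intent": "order_status", "user": "Where is my package?",
     "assistant": "I can check that. What is your order number?"},
    {"intent": "opening_hours", "user": "When are you open?",
     "assistant": "We are open from 9am to 5pm, Monday to Friday."},
]

REQUIRED_FIELDS = {"intent", "user", "assistant"}


def to_jsonl(examples):
    """Validate the examples and serialize them as JSON Lines text."""
    lines = []
    for index, example in enumerate(examples):
        missing = sorted(REQUIRED_FIELDS - set(example))
        if missing:
            raise ValueError(f"Example {index} is missing fields: {missing}")
        lines.append(json.dumps(example, ensure_ascii=False))
    return "\n".join(lines) + "\n"
```

Writing the result to disk is then a single call, for example `open("train.jsonl", "w", encoding="utf-8").write(to_jsonl(EXAMPLES))`.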

Techniques for Fine-Tuning Chatbot Responses

After training your chatbot, you can enhance its performance by fine-tuning its responses using advanced techniques.

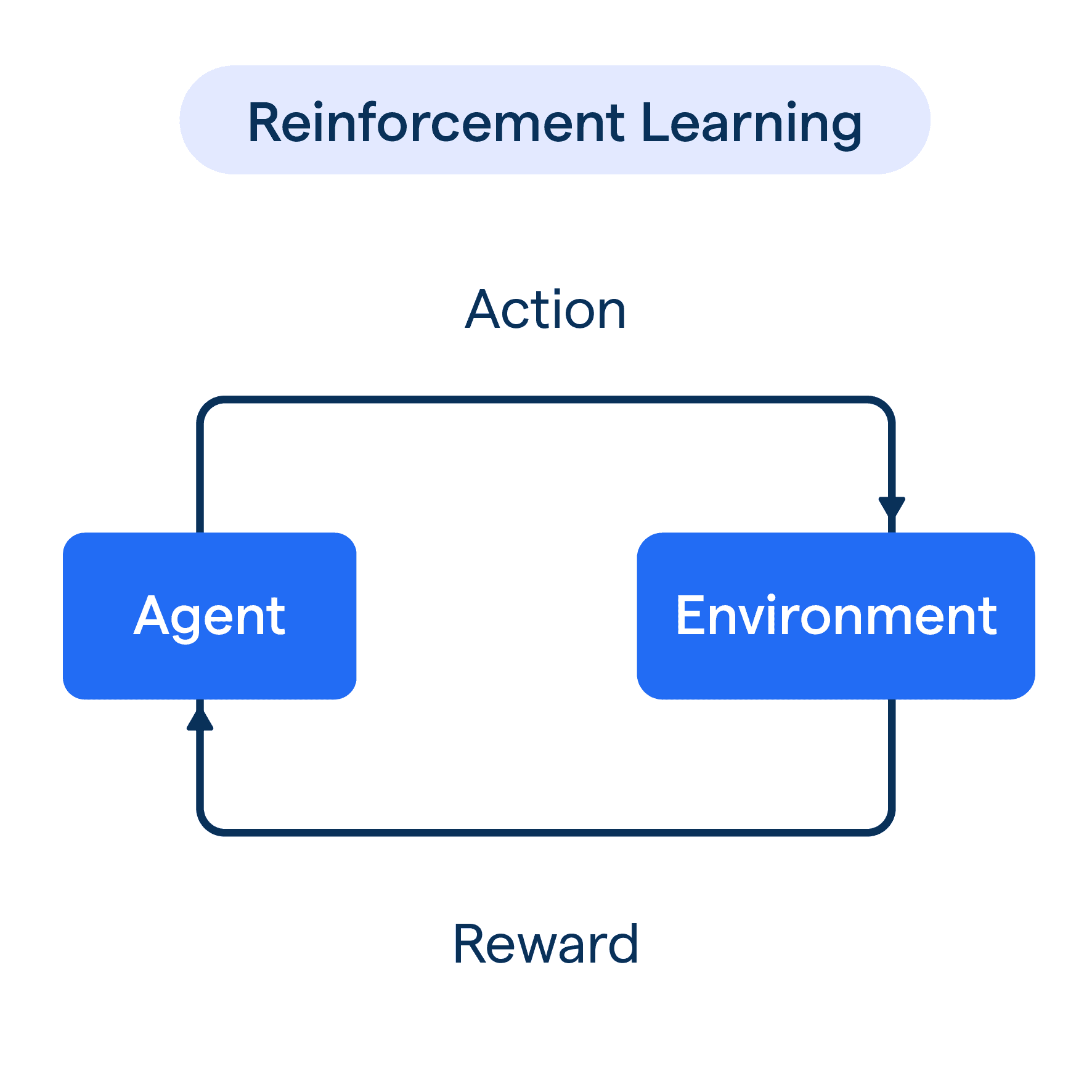

Utilizing Reinforcement Learning for Improved Performance

Reinforcement learning is a technique that involves training the chatbot through trial and error.

By providing rewards or penalties based on the quality of the generated responses, you can guide the chatbot in improving its performance over time.

This technique allows the chatbot to learn from user interactions and adapt its responses to maximize user satisfaction.

By continuously refining the chatbot's responses using reinforcement learning, you can enhance its performance and make it more effective in addressing user queries.

Refining Responses Based on User Feedback and A/B Testing

User feedback is a valuable resource for fine-tuning your chatbot. Encourage users to provide feedback on the chatbot's responses and use their input to improve.

This feedback can help you identify areas where the chatbot may generate inaccurate or unsatisfactory responses.

You can also conduct A/B testing by comparing different versions of the chatbot's responses to determine which version performs better.

This iterative process of refining and optimizing responses based on user feedback and testing allows you to enhance the chatbot's performance and improve user satisfaction continuously.
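An A/B test needs two pieces: a stable way to assign each user to a variant, and a way to compare feedback between variants. The sketch below hashes a user ID for assignment and computes the share of positive ratings per variant; the function names and the thumbs-up signal are illustrative assumptions.

```python
import hashlib
from collections import defaultdict


def assign_variant(user_id, variants=("A", "B")):
    """Deterministically map a user ID to a variant so users always see the same one."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return variants[int(digest, 16) % len(variants)]


def approval_rates(feedback):
    """Compute the share of positive ratings per variant.

    feedback is an iterable of (variant, thumbs_up) pairs, where thumbs_up is a bool.
    """
    totals = defaultdict(int)
    ups = defaultdict(int)
    for variant, thumbs_up in feedback:
        totals[variant] += 1
        ups[variant] += int(thumbs_up)
    return {variant: ups[variant] / totals[variant] for variant in totals}
```

With small samples the difference between two rates can easily be noise, so collect enough feedback or apply a significance test before switching variants.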

Evaluating and Testing Your Chatbot's Performance

Evaluating your chatbot's performance is crucial to ensuring its effectiveness and user satisfaction.

You can use various metrics to measure chatbot success, including accuracy, user satisfaction, and task completion.

Metrics for Measuring Chatbot Success

Accuracy measures how well the chatbot understands user intents and generates correct responses.

It is essential to regularly evaluate the accuracy of the chatbot's responses to identify areas for improvement.

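User satisfaction can be assessed through user surveys or feedback ratings. Monitoring user satisfaction helps you gauge how well the chatbot meets user expectations and identify any areas of dissatisfaction.

Task completion measures how often users finish what they set out to do, such as getting an answer or placing an order, without abandoning the conversation.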

Developing a Testing Plan for Thorough Evaluation

To thoroughly evaluate your chatbot's performance, develop a testing plan encompassing different scenarios and edge cases.

This plan should include a range of test inputs and expected outputs to ensure comprehensive testing.

Regularly test your chatbot against the defined scenarios and metrics and adjust based on the results. By consistently evaluating and testing your chatbot's performance, you can identify areas for improvement and continuously enhance its capabilities.
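A testing plan can be captured as a list of inputs with expected keywords and run automatically after every change. In the sketch below, the test cases and the keyword check are simplified assumptions; `reply_fn` stands for whatever function returns your chatbot's reply to a single input.

```python
# Hypothetical test cases: each reply should contain at least one expected keyword.
TEST_CASES = [
    {"input": "What are your opening hours?", "expect_any": ["open", "hours"]},
    {"input": "I want to return an item", "expect_any": ["return", "refund"]},
    {"input": "", "expect_any": ["sorry", "rephrase"]},  # edge case: empty input
]


def run_test_plan(reply_fn, cases):
    """Run every case and return (accuracy, list of (input, passed) pairs)."""
    results = []
    for case in cases:
        reply = reply_fn(case["input"]).lower()
        passed = any(keyword in reply for keyword in case["expect_any"])
        results.append((case["input"], passed))
    accuracy = sum(passed for _, passed in results) / len(results) if results else 0.0
    return accuracy, results
```

Keyword matching is a coarse check, since generated wording varies between runs; it is best combined with periodic human review of sampled conversations.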

Next, we will cover how to deploy and manage chatbots.

Deploying and Managing Your Chatbot

When deploying your chatbot, you have a few options: integrate it into an existing website or messaging app, or deploy it as a standalone application.

Integrating Your Chatbot into Existing Platforms

If you already have a website or a messaging app, integrating your chatbot there can be a seamless option.

This allows users to access the chatbot through familiar channels, making it convenient to interact with your chatbot while engaging with your existing platform.

Monitoring and Maintaining Chatbot Performance

After deploying your chatbot, monitoring and maintaining its performance is crucial to ensuring it continues to serve its purpose effectively.

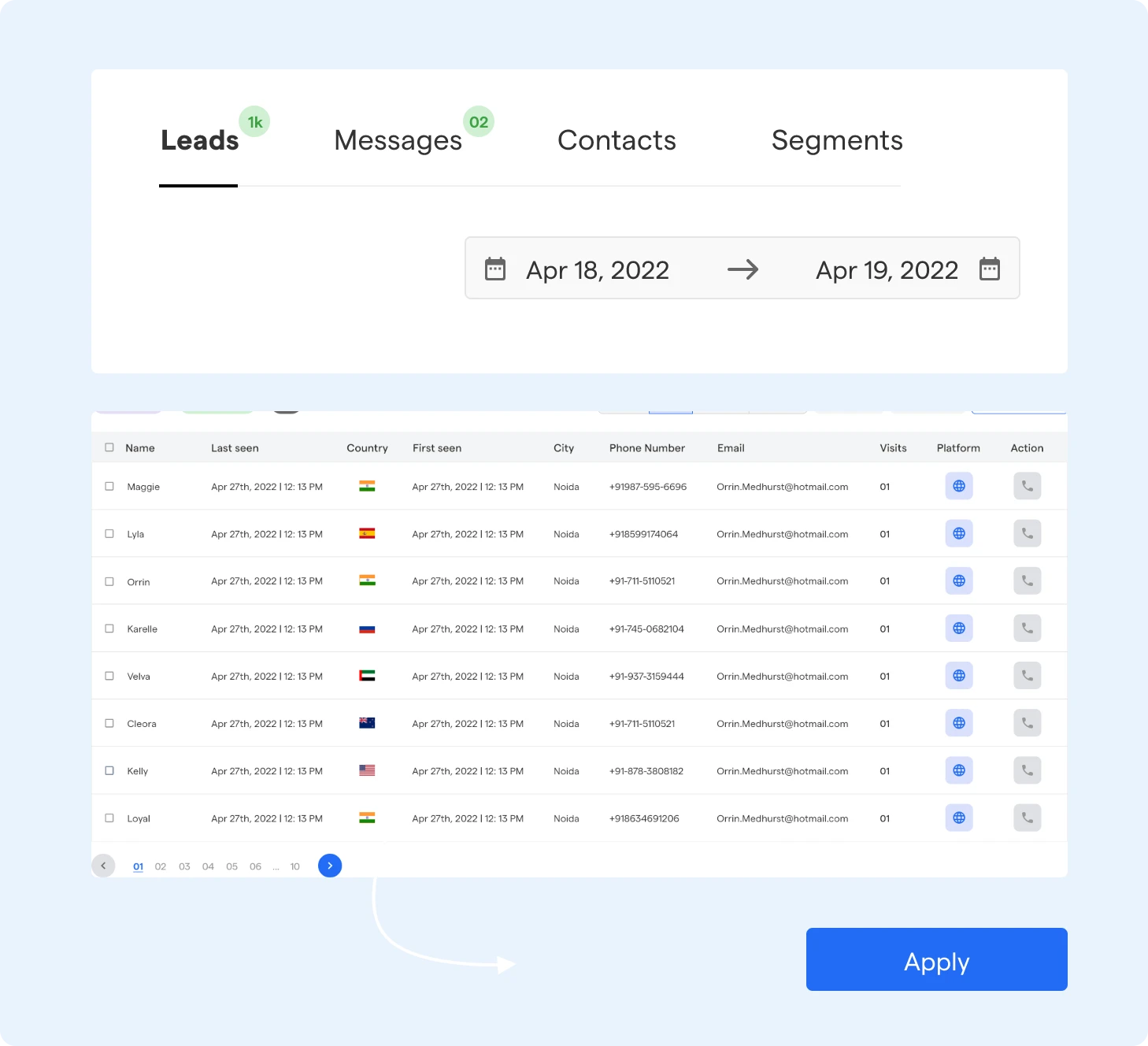

Tracking Key Metrics and User Interactions

Monitoring key metrics such as user engagement, response times, and conversion rates can provide valuable insights into your chatbot's performance.

By monitoring these metrics, you can identify areas that need improvement or refinement.

It's also important to track user interactions with your chatbot. Analyzing user inputs and responses can help you understand how users are interacting with your chatbot and if there are any recurring patterns or issues that need to be addressed.
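Response times and recurring inputs can be captured with a thin wrapper around the function that produces replies. The class and method names in this sketch are illustrative, and it keeps records in memory only; a deployed chatbot would normally write them to a database or logging service.

```python
import time
from collections import Counter


class InteractionLog:
    """Record each exchange with its response time for later analysis."""

    def __init__(self):
        self.records = []

    def timed_reply(self, reply_fn, user_text):
        """Call reply_fn, store the exchange and its duration, and return the reply."""
        start = time.perf_counter()
        reply = reply_fn(user_text)
        elapsed = time.perf_counter() - start
        self.records.append({"user": user_text, "reply": reply, "seconds": elapsed})
        return reply

    def average_seconds(self):
        """Average response time across all recorded exchanges."""
        if not self.records:
            return 0.0
        return sum(r["seconds"] for r in self.records) / len(self.records)

    def most_common_inputs(self, n=3):
        """Most frequent user inputs, normalized to lowercase, with their counts."""
        counts = Counter(r["user"].strip().lower() for r in self.records)
        return counts.most_common(n)
```

Reviewing the most common inputs regularly is a simple way to spot recurring questions that deserve a dedicated conversation branch.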

Conclusion

In conclusion, building a custom chatbot using LLama 2 offers numerous benefits, from advanced natural language processing to personalization and scalability.

With its powerful language model, text generation, and conversational context capabilities, LLama 2 empowers you to create chatbots that engage users in meaningful, human-like conversations.

Whether you want to improve customer service, education, or entertainment, LLama 2 is the perfect tool for your chatbot development needs.

Looking to take your chatbot to the next level? Look no further than BotPenguin, the premier chatbot service. With BotPenguin, you can quickly develop, deploy, and manage your chatbot, ensuring optimal performance and user satisfaction.

Try BotPenguin today and see the difference for yourself!

Frequently Asked Questions (FAQs)

What is LLama 2 Language Model?

LLama 2 Language Model is a powerful natural language processing tool that enables developers to build custom chatbots with advanced conversational capabilities.

How can LLama 2 Language Model be used to build chatbots?

LLama 2 Language Model can be used to build chatbots by training the model with relevant data, defining conversational flows, and integrating it into chatbot platforms like Facebook Messenger or Slack.

Can LLama 2 Language Model handle multilingual chatbots?

Partly. LLama 2 was trained mostly on English text, so it can understand and respond in some other languages but tends to perform best in English. Test it thoroughly in each target language before relying on it for a multilingual chatbot.

How accurate is LLama 2 Language Model in understanding user queries?

LLama 2 Language Model has been trained on vast amounts of data, making it highly accurate in understanding user queries and providing relevant responses, improving the overall chatbot interaction.

Does LLama 2 Language Model have deployment options for different platforms?

Yes. Because LLama 2 is a model rather than a hosted product, you can deploy it behind web applications, mobile apps, and messaging platforms, either on your own hardware or through a cloud provider, which makes it flexible for different project requirements.