Whether you're a language enthusiast, coder, or creative writer, LlaMA 2 is set to be a game-changer.

This cutting-edge large language model can generate text, translate languages, and even write code. But how does it work, and why should you care?

In this beginner's guide, we'll peek behind the curtain to reveal LlaMA 2's inner workings. You'll discover how artificial neurons and deep learning give LlaMA 2 its uncanny linguistic abilities.

We'll break down key technologies like autoregressive transformers and RMSNorm that enable efficient, stable performance.

We provide actionable tips on setting up your environment, fine-tuning for custom tasks, and tapping into the Hugging Face community.

Whether you're new to large language models or looking to level up your skills, this blog will guide you from installation to real-world application.

What is LlaMA 2?

LlaMA 2 can generate text, translate languages, write creative content, and answer your questions informatively.

It's like having a personal research assistant and wordsmith rolled into one, powered by billions of parameters (think of them as the connections between brain cells) that have been trained on a massive dataset of text and code.

Compared to other popular LLMs like GPT-3, LlaMA 2 boasts some unique features.

Its weights are openly available under Meta's community license, so anyone can download and experiment with it, unlike closed models that are reachable only through an API.

Its smaller versions are also comparatively light to run, making it more accessible for those without beefy machines.

Why Should You Learn About LlaMA 2?

So, why should you, as a curious human, care about LLMs like LlaMA 2? The possibilities are as vast as language itself. Imagine:

Personalized education: LLMs could tailor learning experiences to individual needs, making education more effective and engaging.

Enhanced creativity: Writers, artists, and musicians could use LLMs as brainstorming partners or even co-creators.

Revolutionized communication: Imagine breaking down language barriers in real-time or having AI assistants that truly understand your intent.

There is one more interesting use case for language models: automating customer service with AI chatbots.

With the advent of AI, businesses are increasingly looking to automate routine tasks, and customer service is one of them.

If you want to get started with chatbots but have no clue how to use language models to power one, check out BotPenguin, a no-code chatbot platform.

With all the heavy work of chatbot development already done for you, BotPenguin lets you integrate prominent language models such as GPT-4, Google PaLM, and Anthropic Claude to create AI-powered chatbots for platforms like:

- WhatsApp Chatbot

- Facebook Chatbot

- Wordpress Chatbot

- Telegram Chatbot

- Website Chatbot

- Squarespace Chatbot

- Woocommerce Chatbot

- Instagram Chatbot

These are just a few glimpses into the future that LLMs like LlaMA 2 are shaping. But before we get lost in the daydreams, let's peek under the hood and understand how this linguistic marvel works.

Inside LlaMA 2

LLMs like LlaMA 2 are built on a complex foundation of interconnected units called artificial neurons.

These neurons, just like their biological counterparts, process information and learn from patterns.

By analyzing massive amounts of text and code, these neurons become adept at understanding and generating language.

But how does this learning happen? Through a process called training. Imagine showing LlaMA 2 countless words and sentences in different languages and writing styles, and asking it each time to predict what comes next.

Each wrong guess nudges the connections between its artificial neurons, and over time these adjustments let it grasp the intricacies of language.

Key Technologies

While the basic structure of LLMs might seem similar, each one has its own unique flavor. LlaMA 2 relies on several key technologies:

Autoregressive transformers: The transformer is the neural network design behind LlaMA 2, and "autoregressive" means it works like a prediction engine that guesses the next word in a sequence based on what came before, one word at a time. By constantly refining these predictions during training, the model learns to generate coherent and grammatically correct text.

RMSNorm: Imagine information flowing through a river. RMSNorm acts like a dam, rescaling the values that pass through each layer by their root mean square so they stay in a steady range, which keeps learning stable and efficient.

SwiGLU: This is the activation function used in the model's feed-forward layers. It lets one part of a layer act as a gate on another part, which tends to give better results for the same amount of computing power.
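
The three ideas above can be sketched in a few lines of plain Python. This is a simplified illustration under stated assumptions, not LlaMA 2's actual implementation: the function names, the tiny weight matrices, and the toy next-word table are all invented for the example, and the real SwiGLU feed-forward layer adds a further output projection that is left out here.

```python
import math


def rms_norm(x, gain, eps=1e-6):
    """Rescale a vector so its root mean square is about 1, then apply a gain."""
    mean_square = sum(v * v for v in x) / len(x)
    scale = 1.0 / math.sqrt(mean_square + eps)
    return [g * v * scale for g, v in zip(gain, x)]


def silu(z):
    """SiLU (also called Swish): z * sigmoid(z)."""
    return z / (1.0 + math.exp(-z))


def matvec(matrix, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, x)) for row in matrix]


def swiglu(x, w_gate, w_up):
    """Gated unit: SiLU(w_gate @ x) multiplied elementwise by (w_up @ x)."""
    gate = [silu(z) for z in matvec(w_gate, x)]
    up = matvec(w_up, x)
    return [g * u for g, u in zip(gate, up)]


def greedy_generate(next_token_scores, prompt, max_new_tokens):
    """Autoregressive loop: keep appending the highest-scoring next token."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        scores = next_token_scores(tokens)
        if not scores:  # nothing left to predict
            break
        tokens.append(max(scores, key=scores.get))
    return tokens


# Hypothetical stand-in for a trained model: scores depend only on the last word.
TOY_TABLE = {
    "the": {"cat": 0.7, "dog": 0.3},
    "cat": {"sat": 0.9, "ran": 0.1},
    "sat": {},
}


def toy_scores(tokens):
    return TOY_TABLE.get(tokens[-1], {})


normed = rms_norm([3.0, 4.0], gain=[1.0, 1.0])
story = greedy_generate(toy_scores, ["the"], max_new_tokens=5)
print(story)  # prints ['the', 'cat', 'sat']
```

In the real model the scores come from the transformer itself and are computed from the whole sequence so far, not just the last word, but the loop has the same shape: predict, append, repeat.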

Different Sizes, Different Strengths: Choosing the Right Tool

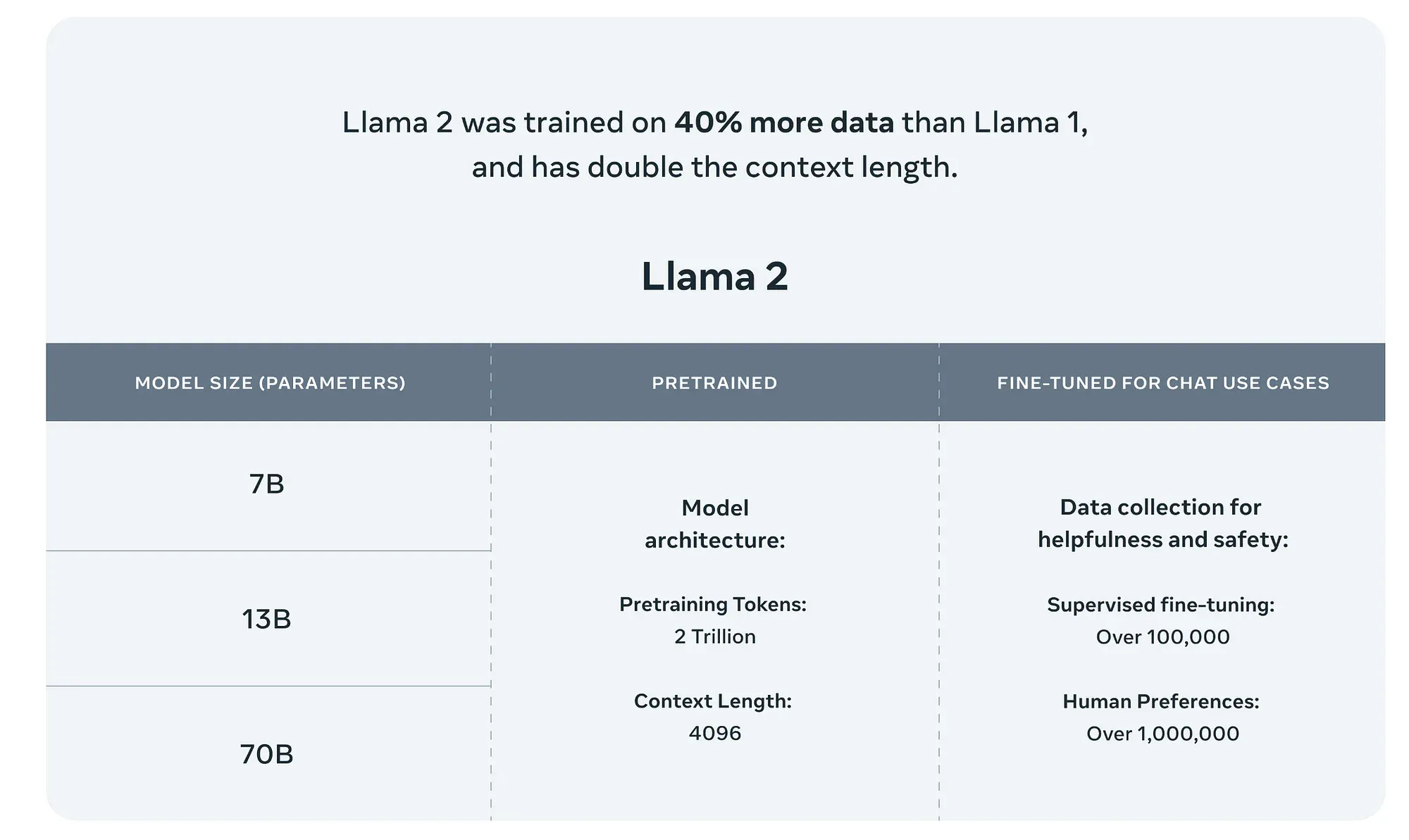

Just like humans come in all shapes and sizes, LLMs have different scales. LlaMA 2 has three versions: 7B, 13B, and 70B parameters.

More parameters usually mean better performance, but also require more powerful machines to run. So, the choice depends on your needs.

The 7B version is lightweight and perfect for experimenting or running on smaller devices.

The 13B is a good balance between power and efficiency, while the 70B beast tackles complex tasks like translation and creative writing with impressive accuracy.
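
A quick back-of-the-envelope calculation shows why size matters for your hardware. The sketch below estimates the memory needed just to hold the weights: number of parameters times bits per parameter. The function name is made up for this example, and the estimate deliberately ignores everything else a running model needs (activations, caches, framework overhead), so treat the numbers as a lower bound.

```python
def weight_memory_gb(num_params_billion, bits_per_param):
    """Rough memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    total_bytes = num_params_billion * 1e9 * bits_per_param / 8
    return total_bytes / 1e9


for size in (7, 13):
    for bits in (16, 4):
        print(f"{size}B model at {bits}-bit: about {weight_memory_gb(size, bits):.1f} GB")
# 7B model at 16-bit: about 14.0 GB
# 7B model at 4-bit: about 3.5 GB
# 13B model at 16-bit: about 26.0 GB
# 13B model at 4-bit: about 6.5 GB
```

This is why lower-precision (quantized) weights come up so often in guides for running these models on consumer hardware.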

Next, we will cover setting up your LlaMA 2 environment.

Setting Up Your LlaMA 2 Environment

Imagine LlaMA 2 as a superpowered friend for text and code. To hang out with this pal, you'll need some tools:

Hardware: A decent computer with some muscle (RAM and processing power) is ideal. Think of it as a spacious playground for your ideas.

Software: Python is the key here. It's like a special language your friend understands.

Libraries: The Hugging Face transformers and tokenizers packages are like handy toolkits to work with text and code in a way LlaMA 2 loves.

Where to use LlaMA 2?

You can choose from:

Hugging Face: Imagine a bustling online community where everyone's experimenting with language models. You can tap into their work and share your own discoveries.

Google Colab: Think of it as a temporary workspace in the cloud, perfect for quick experiments without installing anything on your own machine.

Setting Up Your Toolkit

Remember those Transformers and tokenizers? It's time to bring them on board. With commands like "pip install transformers" in your terminal (a command window), you'll have them ready to play.
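
Once the install finishes, a short script can confirm that your toolkit is in place. This is a minimal sketch using only Python's standard library; the function name and the package list are assumptions for this example, so adjust the list to whatever you actually plan to use.

```python
import importlib.util
import sys


def missing_packages(names):
    """Return the names that cannot be found in the current Python environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


# Assumed package list for this guide; edit as needed.
required = ["transformers", "tokenizers"]
missing = missing_packages(required)

print("Python version:", sys.version.split()[0])
if missing:
    print("Missing:", ", ".join(missing))
    print("Try: pip install " + " ".join(missing))
else:
    print("All set:", ", ".join(required))
```

Run it in the same environment (terminal, Colab notebook, or Conda environment) where you plan to use LlaMA 2, since each environment has its own set of installed packages.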

Explore LlaMA 2: What Can It Do?

Hold on tight, the fun begins now!

Text Generation: Give LlaMA 2 a starting sentence, and watch it weave a story, write a poem, or even craft a funny tweet. You can even guide it with specific styles or formats.

Question Answering: Got a question? Ask LlaMA 2! It can handle open-ended inquiries, factual searches, and even answer in different formats like bullet points or summaries.

Translation: Need to break language barriers? LlaMA 2 can translate between many languages, though it was trained mostly on English text, so quality varies by language. With some fine-tuning, you can even improve its accuracy for specific domains.

Code Generation: Stuck on a coding problem? Let LlaMA 2 suggest solutions or even generate entire code snippets. Remember, it's still under development, so use it as a helpful assistant, not a magic coding machine.
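
All four tasks above come down to the same thing: send the model a prompt and read back the text it generates. The sketch below shows one way this might look with the Hugging Face transformers pipeline. Several things here are assumptions rather than requirements: the model id is the community copy of the 7B chat model on Hugging Face, the helper names are invented, and the prompt layout follows the [INST] and <<SYS>> markers that the LlaMA 2 chat models were trained with. Loading the model downloads several gigabytes and realistically needs a GPU, so the generate function is defined but not called here.

```python
def build_chat_prompt(user_message, system_prompt="You are a helpful assistant."):
    """Wrap a message in the LlaMA 2 chat layout (the tokenizer adds the start token)."""
    return f"[INST] <<SYS>>\n{system_prompt}\n<</SYS>>\n\n{user_message} [/INST]"


def generate(prompt, model_id="NousResearch/Llama-2-7b-chat-hf", max_new_tokens=128):
    """Generate a completion; imports are local so the helper above works without them."""
    from transformers import pipeline  # requires: pip install transformers torch

    generator = pipeline("text-generation", model=model_id)
    outputs = generator(prompt, max_new_tokens=max_new_tokens, do_sample=False)
    return outputs[0]["generated_text"]


# The same helper covers each task; only the request changes.
prompts = {
    "text generation": build_chat_prompt("Write a two-line poem about autumn."),
    "question answering": build_chat_prompt("Why is the sky blue? Answer in bullet points."),
    "translation": build_chat_prompt("Translate to French: Good morning, friends."),
    "code generation": build_chat_prompt("Write a Python function that reverses a string."),
}
print(prompts["translation"])
```

To try it for real, call generate(prompts["translation"]) on a machine or Colab session with enough memory, and always review what comes back before relying on it.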

Next, we will cover how to customize and fine-tune the model.

Fine-Tuning LlaMA 2

Prepare the Environment: Start by installing necessary Python libraries in your Google Colab environment, such as accelerate, peft, bitsandbytes, transformers, and trl. Then, load and import the required modules from these libraries.

Configure the Model and Dataset: Choose a readily accessible model (like the NousResearch/Llama-2-7b-chat-hf from Hugging Face) and a compatible dataset (for instance, the mlabonne/guanaco-llama2-1k).

Load the Dataset: Load your chosen dataset directly from its source, in this case the Hugging Face Hub.

Apply Quantization Techniques: Implement 4-bit precision fine-tuning using the QLoRA technique. QLoRA keeps the base model's weights in 4-bit form and trains small adapter layers on top, which cuts memory use enough for the model to run on consumer-grade hardware.

Initialize the Model and Tokenizer: Load the LlaMA 2 model and corresponding tokenizer from the source (e.g., Hugging Face). Set the pad token to the end-of-sequence (EOS) token, since the tokenizer does not define a pad token of its own. Turn off the model's caching feature during training; the cache only speeds up text generation and is not needed while fine-tuning.

Set Up PEFT Parameters: Configure Parameter-Efficient Fine-Tuning (PEFT) via a suitable configuration, such as LoraConfig. This step reduces the number of parameters that need to be updated during the training process.

Define Training Parameters: Specify your training settings and hyperparameters. Make sure to set appropriate values for parameters like the learning rate, weight decay, number of training epochs, batch size, and more, based on your specific needs and resources.

Perform Fine-Tuning: Implement Supervised Fine-Tuning (SFT) to fine-tune the model on your dataset. This process uses the TRL (Transformer Reinforcement Learning) library and should be executed using the training parameters specified in the previous step.
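
Put together, the steps above might look roughly like the sketch below. Treat it as a starting point under stated assumptions, not a definitive recipe: the hyperparameter values are illustrative placeholders, the function name is invented, and the SFTConfig and processing_class names assume a recent TRL release (older releases take these settings through different arguments, so check the TRL documentation for your installed version). The heavy imports sit inside the function so that nothing is downloaded until you call it.

```python
# Model and dataset named in the steps above.
MODEL_NAME = "NousResearch/Llama-2-7b-chat-hf"
DATASET_NAME = "mlabonne/guanaco-llama2-1k"

# Illustrative LoRA settings (assumed values; tune for your task).
LORA_SETTINGS = dict(r=64, lora_alpha=16, lora_dropout=0.1,
                     bias="none", task_type="CAUSAL_LM")

# Illustrative training settings (assumed values; tune for your hardware).
TRAINING_SETTINGS = dict(output_dir="./results", num_train_epochs=1,
                         per_device_train_batch_size=4, learning_rate=2e-4,
                         weight_decay=0.001, logging_steps=25)


def build_trainer():
    """Assemble dataset, 4-bit model, tokenizer, and LoRA config into a trainer."""
    import torch
    from datasets import load_dataset
    from peft import LoraConfig
    from transformers import (AutoModelForCausalLM, AutoTokenizer,
                              BitsAndBytesConfig)
    from trl import SFTConfig, SFTTrainer  # assumes a recent TRL release

    dataset = load_dataset(DATASET_NAME, split="train")

    # Load the base model with 4-bit quantized weights (the "Q" in QLoRA).
    bnb_config = BitsAndBytesConfig(load_in_4bit=True,
                                    bnb_4bit_quant_type="nf4",
                                    bnb_4bit_compute_dtype=torch.float16)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_NAME, quantization_config=bnb_config, device_map="auto")
    model.config.use_cache = False  # the cache only helps during generation

    tokenizer = AutoTokenizer.from_pretrained(MODEL_NAME)
    tokenizer.pad_token = tokenizer.eos_token  # no pad token is defined by default
    tokenizer.padding_side = "right"

    return SFTTrainer(model=model,
                      args=SFTConfig(**TRAINING_SETTINGS),
                      train_dataset=dataset,
                      peft_config=LoraConfig(**LORA_SETTINGS),
                      processing_class=tokenizer)


# Usage, on a machine with a GPU (for example a Google Colab GPU runtime):
#   trainer = build_trainer()
#   trainer.train()
#   trainer.model.save_pretrained("llama-2-7b-finetuned")  # hypothetical folder name
```

Because only the small LoRA adapter layers are trained, the saved output is a few hundred megabytes at most rather than a full copy of the model.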

Resources and Community

Hugging Face offers comprehensive documentation and tutorials to guide you through the fine-tuning process.

These resources provide step-by-step instructions, code snippets, and explanations to help you understand and apply the techniques effectively.

Active Community Forums and Discussions

Engage with the active Hugging Face community through forums and discussions.

Pose your questions, share your experiences, and learn from others who have successfully fine-tuned models.

The community is supportive and eager to help you overcome any challenges you may encounter.

Additional Learning Materials and Courses

In addition to the official documentation, you can find supplementary learning materials and courses to deepen your understanding of fine-tuning and customization.

These resources cover various aspects, from beginner-level introductions to advanced techniques, allowing you to expand your knowledge and skills.

Remember, fine-tuning and customization can greatly enhance the performance of pre-trained models, making them more valuable and tailored to your specific needs.

Conclusion

The future is here - LlaMA 2 puts groundbreaking linguistic AI in your hands today.

With this guide, you now have the knowledge to install, customize, and apply LlaMA 2 for unlimited possibilities.

Whether you're a coder, writer, student, or language enthusiast, LlaMA 2 is your portal to generate content, answer questions, translate text, and more.

Now is the time to explore, experiment, and engage with the welcoming Hugging Face community to shape the future of language AI.

Don't just observe this revolution from the sidelines - step up and put LlaMA 2 to work for you. The potential is limited only by your imagination - get started with LlaMA 2 today!

Frequently Asked Questions (FAQs)

What is LlaMA 2 and how can it benefit beginners?

LlaMA 2 is a large language model from Meta. Beginners can use it to get explanations, draft text, and work through problems step by step, all of which can speed up the learning process.

How to get started with LlaMA 2?

To start with LlaMA 2, install Python and the necessary libraries, download the model weights from the GitHub repo, convert them to the Hugging Face format, write a Python script that loads and runs the model, and finally, run your script in a Conda environment to perform tasks with LlaMA 2.

Can LlaMA 2 be customized to suit any specific learning preferences?

Yes, to a degree. You can steer LlaMA 2 by stating your preferred topics, level, and goals in the prompt, or adapt it more deeply by fine-tuning it on your own data so that its answers are tailored to your needs.

Is LlaMA 2 suitable for all types of learners?

LlaMA 2 works through text, so it best suits learners who are comfortable reading and writing. It can explain a topic in different ways on request, but it does not produce audio or images on its own.

How is progress tracked on LlaMA 2?

LlaMA 2 itself does not track completed courses, quizzes, or assessment scores; it is a language model, not a learning platform. Any progress tracking comes from the application built around the model.

How to export a project in LlaMA 2?

LlaMA 2 has no "File" menu or project format; it is a model you run from code. To keep a fine-tuned model, save it from your script, for example with the save_pretrained method in the Hugging Face transformers library, and the files are written to the folder you choose.