Introduction

Generative AI adoption is accelerating across enterprises, many of which are fine-tuning open large language models (LLMs) such as Falcon, developed by the Technology Innovation Institute (TII). According to McKinsey, AI has the potential to create over $13 trillion in additional global economic value by 2030.

Recent research by Capgemini also found that 82% of businesses using AI achieve increases of more than 10% in sales and conversion rates. However, realizing that ROI requires customizing models to each organization's unique needs.

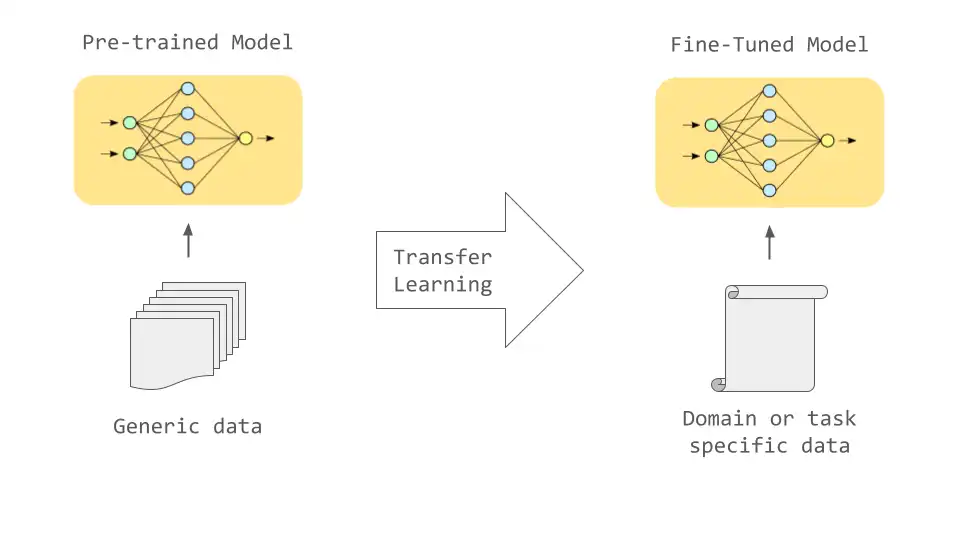

That's where fine-tuning comes in: taking a pre-trained foundation model like Falcon and further training it on company-specific data so that it performs better in specialized domains.

By training on curated examples of the desired output over repeated iterations, the model picks up company style guides, industry terminology, branding guidelines, and the issues that matter most to the business.

Techniques such as prompt-based learning, preference ranking, and reinforcement learning give stakeholders practical levers for shaping models to their workflows. These power everything from tailored customer conversations to automated report generation and analysis.

Read on to learn how to fine-tune Falcon LLM for your business use cases.

Benefits of Using Falcon LLM for Businesses

Using Falcon LLM can bring several benefits to businesses.

With its advanced language processing capabilities, Falcon LLM can significantly improve information retrieval by returning relevant, context-aware search results. This saves users time and improves their overall satisfaction.

Falcon LLM also excels at natural language understanding. Its grasp of language allows it to engage in meaningful conversations, accurately comprehend user queries, and provide insightful responses.

This can greatly improve user experiences in various applications, including chatbots, virtual assistants, and customer support systems.

It can understand user intent, tailor responses, and provide relevant information in a conversational manner. This enhances user satisfaction, streamlines processes, and creates more efficient and engaging interactions.

Defining Your Business Use Case

Before utilizing Falcon LLM, it's important to identify specific tasks and goals that align with your business needs.

Identifying Specific Tasks and Goals for the LLM

Consider the areas where language processing can greatly enhance your operations, such as information retrieval, customer support, or content generation.

By defining clear use cases, you can ensure that you're using Falcon LLM in a targeted and effective manner.

Considering Data Availability and Quality for Fine-Tuning

To optimize the performance of Falcon LLM, you'll need to fine-tune it using your own dataset. When preparing your dataset, consider the availability and quality of the data.

Make sure that the data is aligned with your specific use case and encompasses the language patterns and nuances relevant to your business domain.

High-quality and well-curated data is crucial for training a powerful and accurate language model.

Preparing Your Dataset for Fine-Tuning

To prepare your dataset for fine-tuning Falcon LLM, start by collecting relevant text data that aligns with your use case.

Gathering Relevant Text Data Aligned with your Use Case

Look for publicly available data, documents, or texts that are specific to your industry or domain.

It's important to ensure that the dataset covers a wide range of language elements and scenarios to enhance the model's understanding and generalization capabilities.
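A minimal sketch of how collected examples might be turned into training text while checking that each scenario is covered. The record fields (`category`, `prompt`, `response`), the `### Instruction:` / `### Response:` template, and the `min_per_category` threshold are hypothetical choices for illustration; Falcon does not require this format.

```python
from collections import Counter

# Hypothetical prompt template; Falcon does not require this exact layout.
TEMPLATE = "### Instruction:\n{prompt}\n\n### Response:\n{response}"


def to_training_text(record: dict) -> str:
    """Render one prompt/response record as a single training string."""
    return TEMPLATE.format(prompt=record["prompt"].strip(),
                           response=record["response"].strip())


def coverage_report(records: list, min_per_category: int = 2) -> dict:
    """Count examples per scenario category and list categories with too few examples."""
    counts = Counter(r["category"] for r in records)
    sparse = sorted(c for c, n in counts.items() if n < min_per_category)
    return {"counts": dict(counts), "under_covered": sparse}


if __name__ == "__main__":
    # Toy records for illustration only.
    data = [
        {"category": "billing", "prompt": "How do I update my card?",
         "response": "Open Settings, then Billing, and choose Update card."},
        {"category": "billing", "prompt": "Can I get an invoice copy?",
         "response": "Yes. Invoices are listed under Billing history."},
        {"category": "shipping", "prompt": "Where is my order?",
         "response": "You can track it from the Orders page."},
    ]
    print(to_training_text(data[0]))
    print(coverage_report(data))  # shipping is flagged: it has only one example
```

Categories that show up as under-covered are good candidates for collecting more examples before training.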

Cleaning and Pre-Processing the Data for Optimal Training

Once you have gathered your dataset, it's essential to clean and pre-process the data to optimize the training process.

This involves removing any noise, inconsistencies, or irrelevant information that could hinder the model's learning.

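Additionally, normalization techniques (such as consistent Unicode and whitespace handling) help ensure standardized representations of words and sentences. Tokenization should use Falcon's own tokenizer so that text is split exactly as the model expects.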
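The cleaning steps described above can be sketched in plain Python. This assumes raw documents may contain simple HTML markup and duplicates; the regex-based tag stripping and the `min_chars` cutoff are illustrative assumptions, not a complete cleaning pipeline. Tokenization is left to Falcon's own tokenizer (for example, loaded with `AutoTokenizer.from_pretrained("tiiuae/falcon-7b")` in Transformers), so the sketch stops at text normalization.

```python
import html
import re
import unicodedata

TAG_RE = re.compile(r"<[^>]+>")  # crude HTML tag matcher, fine for simple markup
WS_RE = re.compile(r"\s+")


def clean_text(text: str) -> str:
    """Strip tags, decode HTML entities, apply NFKC Unicode normalization, collapse whitespace."""
    text = TAG_RE.sub(" ", text)
    text = html.unescape(text)
    text = unicodedata.normalize("NFKC", text)
    return WS_RE.sub(" ", text).strip()


def clean_corpus(docs: list, min_chars: int = 20) -> list:
    """Clean each document, then drop very short ones and exact duplicates, keeping order."""
    seen = set()
    kept = []
    for doc in docs:
        cleaned = clean_text(doc)
        if len(cleaned) < min_chars or cleaned in seen:
            continue
        seen.add(cleaned)
        kept.append(cleaned)
    return kept


if __name__ == "__main__":
    raw = [
        "<p>Refunds are accepted within 30 days&nbsp;of purchase.</p>",
        "Refunds are accepted within 30 days of purchase.",
        "ok",
    ]
    print(clean_corpus(raw))
```

Tags are stripped before entities are decoded so that escaped text such as `&lt;b&gt;` survives as literal characters rather than being removed as markup.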

By carefully preparing the dataset, you can ensure the quality and effectiveness of the fine-tuning process.

Choosing the Right Fine-Tuning Approach

When fine-tuning Falcon LLM, you have different approaches to consider. Two common options are full fine-tuning and adapter-based methods.

Full Fine-Tuning vs. Adapter-Based Methods

Full fine-tuning starts from the pre-trained Falcon weights and updates all of the model's parameters on your specific dataset. This can be time-consuming and resource-intensive, but it allows for the greatest degree of customization and optimization.

Adapter-based methods are a more streamlined approach. They keep the pre-trained Falcon weights frozen and train only a small number of added, task-specific parameters (adapters) on top of them. This is faster and requires far fewer resources, but it may be less customizable and may not perform as well as full fine-tuning in certain scenarios.
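To make the resource trade-off concrete, here is back-of-the-envelope arithmetic for LoRA (low-rank adaptation), one widely used adapter-style method, applied to a single square linear layer. The 4096 hidden size is a hypothetical value chosen for illustration, not a claim about a specific Falcon variant.

```python
def full_params(d_in: int, d_out: int) -> int:
    """Trainable weights when a d_in x d_out linear layer is fully fine-tuned (bias ignored)."""
    return d_in * d_out


def lora_params(d_in: int, d_out: int, r: int) -> int:
    """Trainable weights for a rank-r LoRA adapter on the same layer: A is r x d_in, B is d_out x r."""
    return r * (d_in + d_out)


if __name__ == "__main__":
    d = 4096  # hypothetical hidden size, chosen only for illustration
    for r in (8, 16):
        share = lora_params(d, d, r) / full_params(d, d)
        print(f"rank {r}: {lora_params(d, d, r):,} trainable vs {full_params(d, d):,} ({share:.2%})")
```

For that 4096 x 4096 layer, rank 8 trains 65,536 weights instead of 16,777,216, under 0.4% of the layer, which is why adapter methods need far less memory for gradients and optimizer state.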

Selecting the Appropriate Library and Tools (Hugging Face, PEFT)

There are several libraries and tools available to assist with fine-tuning Falcon LLM. Two popular choices come from Hugging Face: the Transformers library and the PEFT library.

Hugging Face Transformers is a comprehensive library that offers a wide range of pre-trained LLMs, including Falcon, along with training utilities and fine-tuning scripts.

It also provides access to a large community of developers and researchers who contribute to the ongoing development of the library. This makes it an excellent choice for those looking for a robust and well-supported fine-tuning solution.

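PEFT, short for Parameter-Efficient Fine-Tuning, is a Hugging Face library that implements adapter-style methods such as LoRA, prefix tuning, and prompt tuning. Rather than updating every weight, this approach trains a small set of additional parameters on top of the frozen pre-trained model.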

This method is efficient and effective for many common NLP tasks, making it a great choice for those looking for a quick and flexible solution.
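The core idea behind LoRA, one of the methods PEFT implements, fits in a few lines. This pure-Python sketch illustrates the technique and is not PEFT's code: following the LoRA paper, the frozen weight `W` is left untouched, the low-rank matrix `A` starts random, and `B` starts at zero, so the adapted layer initially behaves exactly like the pre-trained one. In PEFT itself, the same setup is expressed with a `LoraConfig` (for example its `r`, `lora_alpha`, and `target_modules` settings) and applied with `get_peft_model`.

```python
import random


def matvec(m: list, v: list) -> list:
    """Multiply matrix m (rows x cols) by vector v (length cols)."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class LoRALinear:
    """Frozen weight W plus a trainable low-rank update: y = W x + (alpha / r) * B (A x)."""

    def __init__(self, weight: list, r: int = 2, alpha: float = 4.0, seed: int = 0):
        d_out, d_in = len(weight), len(weight[0])
        rng = random.Random(seed)
        self.weight = weight  # pre-trained weights, never updated
        # Trainable adapter matrices: A is r x d_in (random), B is d_out x r (zeros).
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(r)]
        self.B = [[0.0] * r for _ in range(d_out)]
        self.scale = alpha / r

    def forward(self, x: list) -> list:
        base = matvec(self.weight, x)
        update = matvec(self.B, matvec(self.A, x))
        return [b + self.scale * u for b, u in zip(base, update)]


if __name__ == "__main__":
    layer = LoRALinear([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]])
    print(layer.forward([1.0, 1.0]))  # identical to W x because B is still zero
```

During training only `A` and `B` would receive gradient updates; the large `W` stays frozen, which is where the savings come from.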

Setting Up Your Development Environment

Before you can begin fine-tuning Falcon LLM, you need to set up your development environment.

Start by installing the necessary libraries and frameworks: Python, PyTorch, and the Hugging Face Transformers library.

Installing Necessary Libraries and Frameworks (Transformers, PyTorch)

Transformers is a library built by Hugging Face that provides a range of pre-trained language models and fine-tuning tools.

PyTorch is a popular deep learning framework used for building and training neural networks. Both of these tools are essential for fine-tuning Falcon LLM.
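Before training, it can help to confirm that the interpreter actually sees the required packages. A minimal sketch, assuming the PyPI package names `torch`, `transformers`, and `peft` (installable with `pip install torch transformers peft`); it only checks that each package is importable and does not verify versions or GPU availability.

```python
import importlib.util
import sys

# PEFT is only needed for adapter-based fine-tuning.
REQUIRED = ("torch", "transformers", "peft")


def check_environment(packages: tuple = REQUIRED) -> dict:
    """Report which packages are importable in the current interpreter."""
    return {name: importlib.util.find_spec(name) is not None for name in packages}


if __name__ == "__main__":
    print("Python", sys.version.split()[0])
    for name, ok in check_environment().items():
        status = "found" if ok else f"missing (pip install {name})"
        print(f"{name:<13} {status}")
```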

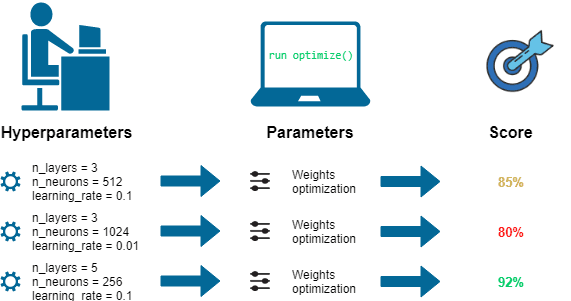

Configuring Training Parameters and Hyperparameters

Once you have your development environment set up, it's time to configure the training parameters and hyperparameters.

This includes setting core hyperparameters such as the learning rate, batch size, and number of epochs, and choosing values that suit your specific use case and hardware.

Hyperparameters can greatly affect the accuracy and performance of the fine-tuned model, so it's important to carefully tune these values to optimize the training process.

This may involve performing experiments and analyzing performance metrics to identify the optimal configuration.
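The experiments described above can be organized as a small grid of candidate configurations, each scored on validation data. In this sketch the candidate values are placeholders rather than recommended Falcon settings, `evaluate` is a hypothetical callable you would supply (for example, one that fine-tunes with a config and returns validation loss), and `total_steps` is an approximate count of optimizer updates when gradient accumulation is used.

```python
import itertools
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    learning_rate: float
    batch_size: int   # examples per forward/backward pass
    grad_accum: int   # passes accumulated before each optimizer update
    epochs: int

    def total_steps(self, n_examples: int) -> int:
        """Approximate optimizer updates over the run (effective batch = batch_size * grad_accum)."""
        per_epoch = math.ceil(n_examples / (self.batch_size * self.grad_accum))
        return per_epoch * self.epochs


def grid(learning_rates, batch_sizes, epochs, grad_accum=1):
    """Enumerate every combination of the candidate values."""
    return [TrainConfig(lr, bs, grad_accum, ep)
            for lr, bs, ep in itertools.product(learning_rates, batch_sizes, epochs)]


def best_config(configs, evaluate):
    """Return the config with the lowest score from the user-supplied `evaluate` callable."""
    return min(configs, key=evaluate)


if __name__ == "__main__":
    configs = grid(learning_rates=[1e-4, 2e-4], batch_sizes=[4, 8], epochs=[1, 3], grad_accum=4)
    print(len(configs), "candidate runs")
    print(configs[0], "->", configs[0].total_steps(n_examples=5000), "optimizer steps")
```

A full grid grows multiplicatively with each hyperparameter, so in practice it is usually kept small or replaced by random search.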

Fine-Tuning the Falcon LLM

Now it's time to dive into the fine-tuning process itself. Fine-tuning Falcon LLM involves training the pre-trained model on your dataset, adapting it to your specific use case.

Launching the Training Process and Monitoring Progress

With your development environment set up and your training parameters configured, it's time to launch the training process and monitor progress. This involves running the fine-tuning script and tracking metrics such as loss, accuracy, and perplexity.
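Perplexity is usually derived from the same cross-entropy loss the trainer already reports: it is the exponential of the mean per-token loss, assuming the loss is measured in nats (natural log), as PyTorch's cross-entropy loss is. A minimal sketch, with a hypothetical `log_step` helper for progress lines:

```python
import math


def perplexity(token_losses: list) -> float:
    """Perplexity = exp(mean per-token cross-entropy), with losses in nats."""
    if not token_losses:
        raise ValueError("need at least one token loss")
    return math.exp(sum(token_losses) / len(token_losses))


def log_step(step: int, mean_loss: float) -> str:
    """Format one progress line showing loss and the perplexity implied by it."""
    return f"step {step:>5}  loss {mean_loss:.4f}  ppl {math.exp(mean_loss):.2f}"


if __name__ == "__main__":
    for step, loss in [(100, 2.31), (200, 1.87), (300, 1.62)]:  # toy values
        print(log_step(step, loss))
```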

During the fine-tuning process, you may encounter issues such as overfitting or slow convergence. Monitoring the training progress can help you identify and troubleshoot these issues.
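A common sign of overfitting is validation loss that stops improving while training loss keeps falling. The sketch below implements simple patience-based early stopping; `patience` and `min_delta` are illustrative defaults. Transformers also provides an `EarlyStoppingCallback` for use with its `Trainer`, which serves a similar purpose.

```python
class EarlyStopping:
    """Signal a stop when validation loss fails to improve by min_delta for `patience` evaluations."""

    def __init__(self, patience: int = 2, min_delta: float = 0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.bad_evals = 0

    def step(self, val_loss: float) -> bool:
        """Record one evaluation; return True if training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_evals = 0
        else:
            self.bad_evals += 1
        return self.bad_evals >= self.patience


if __name__ == "__main__":
    stopper = EarlyStopping(patience=2)
    for epoch, loss in enumerate([2.0, 1.5, 1.6, 1.7], start=1):  # toy validation losses
        if stopper.step(loss):
            print(f"stopping after epoch {epoch}; best validation loss {stopper.best}")
            break
```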

Tracking Performance Metrics and Adjusting Hyperparameters

Once the fine-tuning process is complete, you'll need to evaluate the performance of the fine-tuned model. This involves testing the model on a holdout dataset and calculating task-appropriate metrics, such as F1 score or AUC for classification-style tasks.
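Metrics like AUC apply when the fine-tuned model is used for a classification-style task and you can extract a score for each holdout example (for instance, the probability it assigns to the positive label; how you obtain that score is an assumption left to your setup). This sketch computes ROC AUC directly from its definition: the probability that a randomly chosen positive example is scored above a randomly chosen negative one, with ties counted as half. It compares every pair, which is fine for small holdout sets; scikit-learn's `roc_auc_score` is the usual choice at scale.

```python
def roc_auc(labels: list, scores: list) -> float:
    """ROC AUC as P(score of random positive > score of random negative); ties count 0.5."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


if __name__ == "__main__":
    # Toy holdout labels (1 = positive) and model scores.
    print(roc_auc([1, 1, 0, 0], [0.9, 0.2, 0.3, 0.1]))
```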

If the performance of the model is not satisfactory, you may need to adjust the hyperparameters and run additional experiments to further optimize the model.

By following these steps and using the appropriate libraries and tools, you can fine-tune Falcon LLM to perform specific NLP tasks that are aligned with your business needs.

And if you want to start with chatbots but aren't sure how to use language models to power one, check out BotPenguin, a no-code chatbot platform.

BotPenguin takes care of the heavy lifting of chatbot development and lets users integrate prominent language models such as GPT-4, Google PaLM, and Anthropic Claude to create AI-powered chatbots for platforms like:

- WhatsApp Chatbot

- Facebook Chatbot

- WordPress Chatbot

- Telegram Chatbot

- Website Chatbot

- Squarespace Chatbot

- WooCommerce Chatbot

- Instagram Chatbot

Evaluating and Deploying the Fine-Tuned Model

Once you have fine-tuned the Falcon LLM, it's important to rigorously test and analyze its performance.

Use a separate test set or holdout dataset to evaluate how well the model performs on unseen data.

Conducting Rigorous Testing and Error Analysis

By measuring metrics such as accuracy, precision, recall, and F1 score, you can assess the model's ability to make accurate predictions.
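When the model's generated outputs are mapped to labels (the intent names in the usage example are hypothetical), these metrics are computed exactly as for any classifier. A minimal sketch for one positive label:

```python
def classification_metrics(y_true: list, y_pred: list, positive: str) -> dict:
    """Accuracy plus precision, recall and F1 for one positive label."""
    pairs = list(zip(y_true, y_pred))
    if not pairs:
        raise ValueError("need at least one example")
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


if __name__ == "__main__":
    expected = ["refund", "refund", "other", "other"]
    predicted = ["refund", "other", "refund", "other"]
    print(classification_metrics(expected, predicted, positive="refund"))
```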

It is also important to dig deeper and conduct error analysis to understand the types of mistakes the model is making. This analysis can help identify areas for improvement and guide further fine-tuning iterations.
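One lightweight way to start error analysis is to group mistakes by (expected, predicted) pair and read a few examples from the most frequent confusions. The `input`, `expected`, and `predicted` field names are assumptions for this sketch.

```python
from collections import Counter, defaultdict


def error_report(examples: list, top_k: int = 3) -> list:
    """Group misclassified examples by (expected, predicted) and return the most frequent confusions."""
    counts = Counter()
    samples = defaultdict(list)
    for ex in examples:
        if ex["expected"] != ex["predicted"]:
            key = (ex["expected"], ex["predicted"])
            counts[key] += 1
            if len(samples[key]) < 2:  # keep a couple of inputs to read by hand
                samples[key].append(ex["input"])
    return [(key, n, samples[key]) for key, n in counts.most_common(top_k)]


if __name__ == "__main__":
    results = [
        {"input": "I want my money back", "expected": "refund", "predicted": "billing"},
        {"input": "Where is my parcel?", "expected": "shipping", "predicted": "shipping"},
    ]
    for (expected, predicted), n, inputs in error_report(results):
        print(f"{expected} -> {predicted}: {n} errors, e.g. {inputs}")
```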

Integrating the Model into your Business Application

After evaluating the performance of the fine-tuned model, the next step is to integrate it into your business application. This could involve developing an API or embedding the model within your existing software infrastructure.

Considerations for integration include ensuring compatibility with the programming languages and frameworks your application relies on. It is also important to have a clear understanding of the input and output formats the model expects, and to pre-process and post-process data accordingly.
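A sketch of the pre- and post-processing layer around a model call. `generate` stands in for whatever function calls your fine-tuned model or serving endpoint (a hypothetical callable here), and the prompt template, stop marker, and `MAX_INPUT_CHARS` limit are illustrative assumptions that should match how the model was actually fine-tuned.

```python
from typing import Callable

MAX_INPUT_CHARS = 2000  # hypothetical limit; size it to your model's context window


def build_prompt(user_text: str) -> str:
    """Pre-process: collapse whitespace, truncate, and apply the training-time template."""
    text = " ".join(user_text.split())[:MAX_INPUT_CHARS]
    return f"### Instruction:\n{text}\n\n### Response:\n"


def postprocess(raw: str, stop: str = "### Instruction:") -> str:
    """Post-process: drop anything after a stop marker and strip whitespace."""
    return raw.split(stop, 1)[0].strip()


def answer(user_text: str, generate: Callable[[str], str]) -> dict:
    """Wrap a text-generation callable with input validation and pre/post-processing."""
    if not user_text.strip():
        return {"ok": False, "error": "empty input"}
    reply = postprocess(generate(build_prompt(user_text)))
    return {"ok": True, "reply": reply}


if __name__ == "__main__":
    # Stub model for illustration; replace with a call to your deployed model.
    def fake_generate(prompt: str) -> str:
        return "Open Settings and choose Reset password.\n### Instruction:\n(extra text)"

    print(answer("How do I   reset my password?", fake_generate))
```

Keeping this layer separate from the model call makes it easy to unit-test and to reuse behind an API endpoint.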

By seamlessly integrating the fine-tuned model into your business application, you can leverage its powerful language processing capabilities to enhance user experiences and drive business value.

Best Practices and Important Considerations

Maintaining data privacy and security is crucial during the fine-tuning process.

Anonymize or use synthetic data to mitigate privacy risks, and comply with legal requirements.

Data Privacy and Security Concerns during Fine-Tuning

When fine-tuning Falcon LLM, it is crucial to prioritize data privacy and security.

Be mindful of the sensitive nature of the data you use for fine-tuning and ensure that it is handled securely.

Consider implementing techniques such as data anonymization or using synthetic data to mitigate privacy risks.
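As a minimal illustration of anonymization, the sketch below replaces email addresses and phone-number-like strings with placeholders before text enters a training set. The patterns are hypothetical and deliberately simple: they will miss many kinds of personal data and can over-match (the phone pattern also catches some dates), so treat this as a starting point alongside dedicated PII-detection tooling and human review.

```python
import re

# Hypothetical, deliberately simple patterns; real PII detection needs broader coverage.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(text: str) -> str:
    """Replace each match with a placeholder such as [EMAIL] or [PHONE]."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    print(redact("Contact jane.doe@example.com or +1 (555) 010-2345."))
```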

It is also important to adhere to any legal or regulatory requirements related to data privacy during the fine-tuning process.

Avoiding Bias and Ensuring Ethical Use of the LLM

Bias is a critical concern when working with language models.

Fine-tuning Falcon LLM requires careful consideration of potential biases present in both the pre-training data and the fine-tuning data.

To mitigate biases, it is crucial to carefully curate and analyze the training data, ensuring it represents a diverse range of perspectives.
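A simple first check on data diversity is to measure how training records are distributed across an attribute you care about. This sketch assumes records carry a field such as `region` (a hypothetical name) and flags values below an illustrative `min_share`. Balanced counts alone do not prove the data is unbiased, but skewed counts are a useful warning.

```python
from collections import Counter


def representation_audit(records: list, field: str, min_share: float = 0.1) -> dict:
    """Share of training records per value of `field`; flag values below min_share."""
    counts = Counter(r.get(field, "unknown") for r in records)
    total = sum(counts.values())
    shares = {value: n / total for value, n in counts.items()}
    flagged = sorted(v for v, s in shares.items() if s < min_share)
    return {"shares": shares, "under_represented": flagged}


if __name__ == "__main__":
    toy = [{"region": "EU"}] * 6 + [{"region": "US"}] * 3 + [{"region": "APAC"}]
    print(representation_audit(toy, "region", min_share=0.2))
```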

Additionally, continuous monitoring and iterative fine-tuning can help identify and rectify biases that arise during the fine-tuning process.

Ethical use of the LLM entails ensuring that its predictions and outputs are not harmful or discriminatory. Regularly auditing and evaluating the model's performance on real-world data can help identify any unintended negative consequences and inform adjustments to mitigate harm.

Going Beyond the Basics

Explore advanced techniques like multi-task learning, where the model trains on multiple related tasks simultaneously, leveraging shared knowledge.

Exploring Advanced Fine-Tuning Techniques (Multi-Task Learning)

Once you've mastered the basics of fine-tuning Falcon LLM, you may want to explore more advanced techniques, such as multi-task learning.

Multi-task learning involves training the language model to perform multiple tasks simultaneously. This can be beneficial if you have multiple related tasks that can leverage shared knowledge.

For example, you could fine-tune the model on both sentiment analysis and topic classification, allowing it to learn representations that capture both sentiment and topic information.
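One common multi-task recipe is to tag each example with its task name and mix the tasks into a single training stream, using temperature-scaled sampling so that a large task does not drown out a small one. The `[task] text => label` format and the task names are hypothetical. With `temperature=1` sampling is proportional to dataset size; larger values move it toward uniform.

```python
import random


def sampling_weights(sizes: dict, temperature: float = 1.0) -> dict:
    """Temperature-scaled mixing: p(task) is proportional to size ** (1 / temperature)."""
    scaled = {task: n ** (1.0 / temperature) for task, n in sizes.items()}
    total = sum(scaled.values())
    return {task: s / total for task, s in scaled.items()}


def mix_tasks(datasets: dict, n_samples: int, temperature: float = 1.0, seed: int = 0) -> list:
    """Draw a mixed training stream, prefixing each example with its task name."""
    weights = sampling_weights({t: len(exs) for t, exs in datasets.items()}, temperature)
    rng = random.Random(seed)
    tasks = rng.choices(list(weights), weights=list(weights.values()), k=n_samples)
    stream = []
    for task in tasks:
        ex = rng.choice(datasets[task])
        stream.append(f"[{task}] {ex['text']} => {ex['label']}")
    return stream


if __name__ == "__main__":
    print(sampling_weights({"sentiment": 900, "topic": 100}, temperature=2.0))
```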

By leveraging multi-task learning, you can enhance the model's performance across different NLP tasks and achieve greater efficiency in training by sharing parameters and pre-trained knowledge.

Continuing Evaluation and Optimization for Long-Term Success

Fine-tuning Falcon LLM is not a one-time, set-and-forget process. For long-term success, it is important to continuously evaluate and optimize the model's performance.

Monitor the model's performance in production and gather user feedback to identify areas for improvement. This feedback can help inform future fine-tuning iterations.
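Production feedback can be turned into a concrete to-do list for the next fine-tuning round. This sketch assumes feedback arrives as hypothetical `(intent, thumbs_up)` pairs and flags intents whose approval rate falls below an illustrative threshold once they have enough votes to judge.

```python
from collections import defaultdict


def flag_weak_intents(feedback: list, min_votes: int = 20, threshold: float = 0.7) -> list:
    """Return intents whose thumbs-up rate is below threshold, given at least min_votes votes."""
    votes = defaultdict(lambda: [0, 0])  # intent -> [thumbs_up_count, total_votes]
    for intent, thumbs_up in feedback:
        votes[intent][0] += int(thumbs_up)
        votes[intent][1] += 1
    return sorted(
        intent for intent, (ups, total) in votes.items()
        if total >= min_votes and ups / total < threshold
    )


if __name__ == "__main__":
    log = [("billing", True)] * 18 + [("billing", False)] * 2 + [("returns", False)] * 20
    print(flag_weak_intents(log))
```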

By embracing an iterative approach and continuously optimizing the fine-tuned model, you can ensure its ongoing effectiveness and adaptability.

Conclusion

Fine-tuning Falcon LLM opens up exciting possibilities for leveraging cutting-edge language processing capabilities.

Full fine-tuning and adapter-based methods offer different trade-offs in terms of customization and resource requirements. Libraries such as Hugging Face Transformers and PEFT provide valuable tools for fine-tuning Falcon LLM effectively.

Configuring training parameters and hyperparameters is crucial for achieving optimal performance. Evaluating and monitoring the fine-tuned model's performance ensures its suitability for real-world applications.

Considerations such as data privacy, bias, and ethical use are vital throughout the fine-tuning process.

By following best practices and remaining mindful of important considerations, you can harness the power of Falcon LLM to create applications that elevate user experiences and drive meaningful impact.

Frequently asked questions (FAQs)

Can Falcon LLM be fine-tuned to cater to specific business use cases?

Yes. Because Falcon's model weights are openly released, you can fine-tune it on your own data to meet the unique requirements and goals of your business.

What are the benefits of fine-tuning Falcon LLM for my business?

Fine-tuning adapts Falcon LLM to your domain's terminology, style, and tasks, which typically makes its outputs more accurate and relevant for your use cases than those of the general-purpose base model.

How to identify the specific areas in which Falcon LLM needs fine-tuning?

By analyzing historical data and understanding your business operations, you can identify patterns, recurring gaps in the base model's outputs, and areas where Falcon LLM needs adjustment to perform well.

What steps should I follow when fine-tuning Falcon LLM?

Start by defining your objectives, then collect and clean relevant data, choose a fine-tuning approach (full or adapter-based), and train, evaluate, refine, and iterate on the model.

How long does it typically take to fine-tune Falcon LLM for a business?

The time required for fine-tuning Falcon LLM can vary depending on factors such as data availability, complexity of use case, and resources allocated. A timeframe can be estimated after assessing these factors.