Creating robust and efficient deep learning models is a major challenge. Keras is one of the most popular and user-friendly libraries for building them.

According to the 2023 Kaggle Machine Learning Survey, over 50% of machine learning practitioners use Keras. Keras is an open-source Python framework that provides a high-level interface for designing and training neural networks with minimal boilerplate.

One of Keras's key features is the Sequential model, a fundamental architecture for building a wide range of neural networks.

Sequential Models in Keras offer a structured and intuitive way to stack layers of neurons. The MDPI 2023 survey of machine learning practitioners found that 75% of respondents use Keras, and 60% of those users use sequential models.

Building sequential models with Keras is a common way to create neural networks in deep learning. But how do you build one? This practical guide walks you through the process.

So, continue reading to learn more about building sequential models with Keras.

What is Keras?

Keras is an open-source deep learning library that makes neural network creation easier. It provides an interface for quickly developing, training, and deploying machine learning models.

Keras began as an independent neural network library and is now part of the TensorFlow ecosystem. It provides a user-friendly, high-level API suited to both beginners and seasoned professionals.

Its modular architecture offers a variety of pre-built layers, optimizers, and loss functions, so users can build sophisticated models by stacking layers.

Keras is frequently used in research and industry for problems such as image recognition and natural language processing. Through its backend, it supports GPU acceleration for faster training.

How Keras Works: Simplifying Deep Learning Development

In this section, we'll take a closer look at how Keras works.

- The High-Level API of Keras

Keras provides a high-level API that allows users to build and train deep learning models efficiently. It acts as a user-friendly interface, abstracting away the complexities of lower-level frameworks like TensorFlow or Theano.

- Modular Design

In Keras, neural networks are built by stacking customizable layers, which makes model creation and experimentation straightforward.

- Pre-built Components

Keras provides a library of pre-defined layers, optimizers, and loss functions, simplifying model design.

- Integration with TensorFlow

Originally an independent library, Keras is now tightly integrated with TensorFlow, so you can use TensorFlow's capabilities directly from Keras code.

- Cross-Platform

Keras runs on both CPUs and GPUs, and GPU acceleration improves training speed and scalability.

- Accessibility

Keras's simplicity makes it accessible to both beginners and experts, enabling rapid development of deep learning models.

- Widespread Use

Keras is widely adopted in research and industry for various applications, from image recognition to natural language processing.

- Community Support

It benefits from an active community, ensuring continuous updates and improvements.

Benefits of Using Keras

So, why should you choose Keras for your deep learning projects? Keras is like having a personal assistant for your deep learning needs. It offers several benefits that make it a popular choice among developers:

- Easy to Use

Keras is beginner-friendly, so you can start developing and training models right away. Even if you're new to deep learning, its excellent documentation and straightforward syntax make it easy to work with.

- Flexibility

Keras offers a large selection of pre-built layers, activation functions, and loss functions, giving you the freedom to tailor your models to your requirements.

Keras has you covered whether you're working on time series analysis, natural language processing, or image classification.

- Fast Prototyping

With Keras, you can rapidly prototype your deep learning models. Its modular architecture allows you to add or remove layers easily, experiment with different architectures, and iterate on your models until you find the perfect fit.

- Seamless Integration

Keras seamlessly integrates with other popular deep learning frameworks like TensorFlow. It allows you to leverage these frameworks' power while enjoying Keras's simplicity.

Understanding Sequential Models

In this section, we'll explore the key components of sequential models and how they work.

What is a Sequential Model?

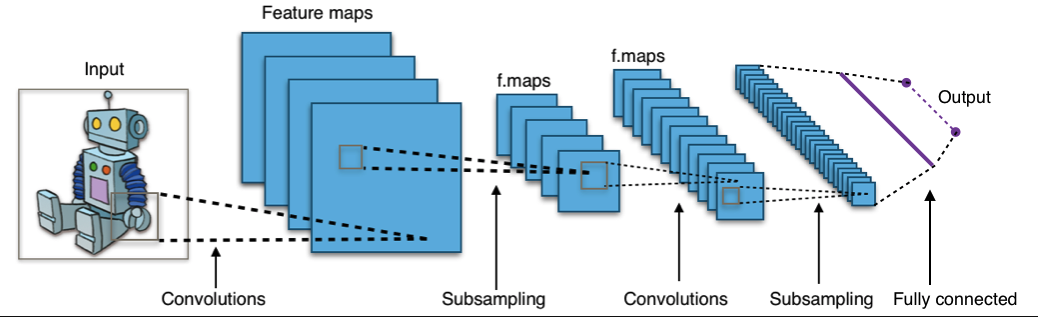

A sequential model is a linear stack of layers in which each layer's output feeds into the next layer. Because of this simple structure, it is easy to define the model and to follow how information flows through it.

Each layer applies a specific operation to its input, progressively transforming the input data into the desired output.
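To make this layer-by-layer flow concrete, here is a minimal sketch that passes the layers to Keras's `Sequential` constructor as a list. The 8 input features and the layer widths are arbitrary assumptions chosen for illustration.

```python
import numpy as np
import keras
from keras import layers

# A linear stack: each layer's output is the next layer's input.
model = keras.Sequential([
    keras.Input(shape=(8,)),                # assumption: 8 input features
    layers.Dense(16, activation="relu"),    # transforms 8 values -> 16
    layers.Dense(4, activation="relu"),     # transforms 16 values -> 4
    layers.Dense(1, activation="sigmoid"),  # final output between 0 and 1
])

# Push a batch of 3 dummy samples through the whole stack.
x = np.random.default_rng(0).random((3, 8)).astype("float32")
y = model.predict(x, verbose=0)
print(y.shape)  # (3, 1)
```

Each `Dense` layer receives exactly the output of the layer before it, which is the defining property of a sequential model.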

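The sequential model is a fundamental class of deep learning model. Note that "sequential" describes how the layers are stacked, not the kind of data: a Sequential model works for image or tabular data too, and, combined with recurrent or other sequence-processing layers, it can handle tasks like sequence prediction, time series analysis, and natural language processing.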

A 2021 analysis of GitHub code repositories found that sequential models are the most commonly used type of Keras model, accounting for over 50% of all Keras models.

Key Components of Sequential Models

To understand sequential models better, let's closely examine their key components.

- Input Layer

In a sequential model, the input layer is the first layer and the point where data enters. It defines the shape and type of the input data so that the model can process it correctly.

The input layer passes the input data on to the following layers for further processing.

- Hidden Layers

Hidden layers are the intermediate layers between the input and output layers. They perform computations on the data to extract useful features and representations.

The number and size of hidden layers can vary depending on the complexity of the problem and the amount of data available.

- Output Layer

In a sequential model, the output layer is the last layer and creates the desired output.

Depending on the kind of problem you're solving, the output layer's node count will vary.

For example, in a binary classification task, the output layer may have a single node expressing the probability of the positive class.

The output layer of a multi-class classification task could contain several nodes, each representing a different class's probability.
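The following sketch shows how the three kinds of layers map to Keras code, and how only the output layer changes between binary and multi-class classification. The feature count, class count, layer sizes, and the helper name `build_model` are illustrative assumptions, not part of any Keras API.

```python
import numpy as np
import keras
from keras import layers

NUM_FEATURES = 20  # assumption: 20 input features
NUM_CLASSES = 5    # assumption: 5 classes for the multi-class case

def build_model(num_output_nodes, output_activation):
    """Hypothetical helper: input layer -> two hidden layers -> output layer."""
    return keras.Sequential([
        keras.Input(shape=(NUM_FEATURES,)),    # input layer: defines the data shape
        layers.Dense(32, activation="relu"),   # hidden layer 1
        layers.Dense(16, activation="relu"),   # hidden layer 2
        layers.Dense(num_output_nodes, activation=output_activation),  # output layer
    ])

# Binary classification: one node giving the probability of the positive class.
binary_model = build_model(1, "sigmoid")

# Multi-class classification: one node per class; softmax makes them sum to 1.
multiclass_model = build_model(NUM_CLASSES, "softmax")

probs = multiclass_model.predict(np.zeros((2, NUM_FEATURES), dtype="float32"), verbose=0)
print(probs.sum(axis=1))  # each row sums to (approximately) 1
```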

Getting Started with Keras

In this section, we'll see how to get started with Keras.

Installing Keras and its Dependencies

Before building models with Keras, make sure it is properly installed on your system. Here's a step-by-step guide to get you up and running:

Step 1

Install Python

Keras is a Python library, so first install Python on your machine. Download a Python version supported by Keras and your chosen backend from the official website, then follow the installation instructions.

Step 2

Install Keras

Once Python is installed, open your command prompt or terminal and run `pip install keras`. pip downloads and installs Keras automatically, along with its dependencies.

Step 3

Install Backend Engine

Keras runs on top of a backend engine. Keras 3 supports TensorFlow, JAX, and PyTorch; older multi-backend releases of Keras also supported Theano, which is no longer maintained. Choose the backend engine that suits your needs and install it separately.

For example, to install TensorFlow, run `pip install tensorflow`.
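If you use Keras 3, one way to pick the backend is the `KERAS_BACKEND` environment variable, which must be set before `keras` is first imported (the `~/.keras/keras.json` configuration file is an alternative). This is a minimal sketch assuming TensorFlow is installed; swap in `"jax"` or `"torch"` if that is the backend you installed.

```python
import os

# Keras 3 reads the backend name from KERAS_BACKEND, so it must be set
# before keras is imported. setdefault keeps any value already set in
# your environment. (Assumption: TensorFlow is installed.)
os.environ.setdefault("KERAS_BACKEND", "tensorflow")  # or "jax" / "torch"

import keras

print(keras.backend.backend())  # name of the active backend
```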

Importing the Necessary Libraries

With Keras installed, it's time to import the necessary libraries and get on with some code! Open your favorite Python IDE or Jupyter Notebook and create a new Python script.

At the beginning of your script, import the following libraries:

```python
import keras
from keras.models import Sequential
from keras.layers import Dense
```

These imports give you the tools to build sequential models: the `Sequential` class holds the stack of layers, and `Dense` is a fully connected layer. Keras is designed to be user-friendly, and these building blocks make it even easier to work with.

Loading the Dataset for Model Training

Now that Keras is set up and the libraries are imported, it's time to load a dataset to train the sequential model.

The dataset you choose will depend on the problem you want to solve. Many datasets for image classification, sentiment analysis, and time series forecasting are available online.

You can import a dataset from the `keras.datasets` module, which offers a selection of popular datasets.

For instance, you can use the following code to load the MNIST dataset of handwritten digits:

```python
from keras.datasets import mnist

# Load the MNIST dataset
(x_train, y_train), (x_test, y_test) = mnist.load_data()
```

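`mnist.load_data()` returns the data already split into training and test sets: the input images (`x_train` and `x_test`) and the corresponding labels (`y_train` and `y_test`). `x_train` holds 60,000 grayscale images of 28 by 28 pixels and `x_test` holds 10,000. Explore the dataset and understand its structure before proceeding with model training.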

Preparing Data for Sequential Models

Here is how you prepare data for sequential models.

Data Normalization

Data normalization scales the values of your input data to a defined range or distribution. This keeps features with large numeric ranges from dominating the training process.

Common normalization methods include z-score normalization (standardization) and min-max scaling. The right technique depends on your data's characteristics and your model's requirements.
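As a small illustration of the two methods, the sketch below applies min-max scaling and z-score normalization column by column with NumPy. The feature matrix is made-up data.

```python
import numpy as np

# Hypothetical feature matrix: 4 samples, 2 features on very different scales.
X = np.array([[1.0, 200.0],
              [2.0, 400.0],
              [3.0, 600.0],
              [4.0, 800.0]])

# Min-max scaling: map each feature (column) to the range [0, 1].
X_min, X_max = X.min(axis=0), X.max(axis=0)
X_minmax = (X - X_min) / (X_max - X_min)

# Z-score normalization: zero mean and unit standard deviation per feature.
X_zscore = (X - X.mean(axis=0)) / X.std(axis=0)

print(X_minmax)
print(X_zscore)
```

In practice, compute the statistics (minimum, maximum, mean, standard deviation) on the training set only and reuse them for validation and test data. Keras also offers a `keras.layers.Normalization` preprocessing layer that learns the mean and variance through its `adapt()` method.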

One-Hot Encoding

One-hot encoding is a data preparation technique that represents categorical variables as binary vectors. Real-world datasets frequently contain categorical variables that cannot be used directly as model inputs.

One-hot encoding gives each category its own position in a vector: the position of the observed category is set to 1 and all other positions to 0. This lets the model learn from categorical data without implying any order between the categories.
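Keras includes a helper for this, `keras.utils.to_categorical`, which converts integer class IDs into one-hot vectors. The color feature below is a hypothetical example that has already been mapped to integers.

```python
import numpy as np
import keras

# Hypothetical categorical feature, already mapped to integer IDs:
# 0 = "red", 1 = "green", 2 = "blue"
colors = np.array([0, 2, 1, 2])

# Row i gets a 1 in column colors[i] and 0 everywhere else.
one_hot = keras.utils.to_categorical(colors, num_classes=3)
print(one_hot)
```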

Handling Missing Values

Real-world datasets are often plagued with missing values, which can cause issues during model training. To preserve the data's integrity, handling missing values is critical.

Depending on how much data is missing and why, you can either remove the affected samples or impute the missing values, using techniques like mean imputation or regression imputation.

The technique choice depends on the specific dataset and the impact of missing values on the overall analysis.
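Here is a minimal NumPy sketch of both options, dropping incomplete rows and mean imputation, on made-up data where missing values are stored as `NaN`.

```python
import numpy as np

# Hypothetical data with missing entries encoded as NaN.
X = np.array([[1.0, np.nan],
              [2.0, 4.0],
              [np.nan, 6.0],
              [4.0, 8.0]])

# Option 1: drop every sample (row) that contains a missing value.
X_dropped = X[~np.isnan(X).any(axis=1)]

# Option 2: mean imputation, replacing each NaN with its column's mean
# computed over the observed values only.
col_means = np.nanmean(X, axis=0)
X_imputed = np.where(np.isnan(X), col_means, X)

print(X_dropped)
print(X_imputed)
```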

Building a Simple Sequential Model

In this section, we'll walk you through building a simple sequential model.

Step 1

Choosing the Right Architecture

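Before writing any code, it's important to understand the sequential model's architecture.

Sequential models consist of a stack of layers connected one after another. This architecture suits many common deep learning tasks, as long as each layer has exactly one input tensor and one output tensor. Models with multiple inputs or outputs, shared layers, or branching topologies need Keras's Functional API instead.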

Step 2

Importing the Necessary Libraries

To get started, import the required libraries. Because Keras is a Python-based high-level neural network API, you import it like any other Python library.

Import additional libraries, such as Matplotlib for data visualization and NumPy for numerical computations.

Step 3

Defining the Model

Now, let's define the sequential model. Use the `Sequential` class from Keras to create an empty model.

Then, add layers to the model using the `add` method. For a simple sequential model, you can start with a few dense layers.
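A minimal sketch of this step, assuming MNIST images flattened to 784 values (28 by 28 pixels) and a 10-class softmax output; the hidden-layer sizes are arbitrary choices.

```python
import keras
from keras import layers

# Start from an empty Sequential model...
model = keras.Sequential()

# ...then add layers one at a time with add().
model.add(keras.Input(shape=(784,)))               # assumption: flattened 28x28 images
model.add(layers.Dense(128, activation="relu"))    # hidden layer
model.add(layers.Dense(64, activation="relu"))     # hidden layer
model.add(layers.Dense(10, activation="softmax"))  # one output node per digit class

model.summary()  # prints each layer with its output shape and parameter count
```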

Step 4

Compiling the Model

Once you have defined your model, you need to compile it before training. Compiling the model involves specifying the loss function, optimizer, and metrics.

The loss function measures how far the model's predictions are from the targets on the training data, and the optimizer determines how the model's weights are updated to reduce that loss.

Metrics, such as accuracy, are used to monitor the model's performance; unlike the loss, they are not used to update the weights.
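The sketch below compiles a small model with the Adam optimizer, sparse categorical cross-entropy (suited to integer labels with a softmax output), and accuracy as a metric. The architecture and the dummy evaluation data are assumptions for illustration.

```python
import numpy as np
import keras
from keras import layers

# Illustrative architecture (assumed sizes): 784 inputs, 10 classes.
model = keras.Sequential([
    keras.Input(shape=(784,)),
    layers.Dense(128, activation="relu"),
    layers.Dense(10, activation="softmax"),
])

model.compile(
    optimizer=keras.optimizers.Adam(learning_rate=1e-3),  # how weights are updated
    loss="sparse_categorical_crossentropy",  # integer labels 0-9, softmax outputs
    metrics=["accuracy"],                    # monitored, not optimized
)

# Quick check on dummy data: evaluate() reports the loss and metric values.
x = np.zeros((4, 784), dtype="float32")
y = np.array([0, 1, 2, 3])
results = model.evaluate(x, y, verbose=0, return_dict=True)
print(results)
```

Because Keras initializes `Dense` biases to zero by default, an all-zero input produces uniform probabilities over the 10 classes here, so the reported loss is about ln(10) ≈ 2.30.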

Step 5

Training the Model

With the model compiled, it's time to train it on the data. First, split the data into training and validation sets.

The training set is used to update the model's weights, while the validation set evaluates the model's performance during training. Use the `fit` method to train the model for a chosen number of epochs.
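The following sketch trains a small model with `fit`, using the `validation_split` argument to hold out part of the data for validation. The dataset is random synthetic data (an assumption standing in for a real dataset), so do not expect meaningful accuracy.

```python
import numpy as np
import keras
from keras import layers

# Hypothetical synthetic dataset: 500 samples, 20 features, 3 classes.
rng = np.random.default_rng(42)
x = rng.normal(size=(500, 20)).astype("float32")
y = rng.integers(0, 3, size=500)

model = keras.Sequential([
    keras.Input(shape=(20,)),
    layers.Dense(32, activation="relu"),
    layers.Dense(3, activation="softmax"),
])
model.compile(optimizer="adam",
              loss="sparse_categorical_crossentropy",
              metrics=["accuracy"])

# validation_split holds out the last 20% of the samples as a validation
# set; the model's weights are updated using only the remaining 80%.
history = model.fit(x, y, epochs=5, batch_size=32,
                    validation_split=0.2, verbose=0)

# history.history maps each metric name to a list with one value per epoch.
print(history.history["loss"])
print(history.history["val_loss"])
```

If you already have a separate validation set, you can pass it as `validation_data=(x_val, y_val)` instead of using `validation_split`.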

Improving Sequential Models

Now that you have a basic understanding of building sequential models, let's explore some techniques to improve their performance.

Regularization Techniques

Regularization techniques help prevent overfitting, where a model performs well on the training data but fails to generalize to new, unseen data. Two popular regularization techniques are dropout and L2 regularization.

Dropout randomly sets a fraction of input units to 0 during training. L2 regularization adds a penalty term proportional to the sum of the squared weights to the loss function, which discourages large weights.
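Both techniques are available as standard Keras building blocks: the `Dropout` layer and the `kernel_regularizer` argument of `Dense`. The layer sizes, the dropout rate of 0.5, and the L2 factor of 1e-4 below are illustrative assumptions, not recommended values.

```python
import numpy as np
import keras
from keras import layers, regularizers

model = keras.Sequential([
    keras.Input(shape=(20,)),  # assumption: 20 input features
    # L2 penalty: adds 1e-4 * sum(kernel ** 2) to the training loss.
    layers.Dense(64, activation="relu",
                 kernel_regularizer=regularizers.l2(1e-4)),
    # Dropout: during training, randomly zeroes 50% of this layer's inputs
    # and scales the kept ones by 1 / (1 - rate).
    layers.Dropout(0.5),
    layers.Dense(3, activation="softmax"),
])
model.compile(optimizer="adam", loss="sparse_categorical_crossentropy")

# Dropout is only active in training mode; at inference it passes inputs through.
x = np.ones((1, 10), dtype="float32")
dropout = layers.Dropout(0.5)
print(np.asarray(dropout(x, training=False)))  # unchanged: all ones
```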

Hyperparameter Tuning

Hyperparameters are variables set by the user rather than learned by the model. Examples include the batch size, the number of layers, the learning rate, and the number of neurons per layer.

Tuning these hyperparameters can significantly affect your model's performance. Techniques like grid search and random search help find a good combination of hyperparameters.
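A plain grid search can be written as a loop over every combination of candidate values, keeping the one with the lowest validation loss. This is a minimal sketch on synthetic data; the grid values and the `build_model` helper are hypothetical. Dedicated tools such as the KerasTuner library automate grid, random, and other search strategies.

```python
import itertools
import numpy as np
import keras
from keras import layers

# Hypothetical synthetic data, split into training and validation sets.
rng = np.random.default_rng(0)
x = rng.normal(size=(300, 10)).astype("float32")
y = (x[:, 0] + x[:, 1] > 0).astype("int32")  # simple learnable rule
x_train, y_train, x_val, y_val = x[:240], y[:240], x[240:], y[240:]

def build_model(units, learning_rate):
    """Hypothetical helper: builds and compiles one candidate model."""
    model = keras.Sequential([
        keras.Input(shape=(10,)),
        layers.Dense(units, activation="relu"),
        layers.Dense(1, activation="sigmoid"),
    ])
    model.compile(optimizer=keras.optimizers.Adam(learning_rate=learning_rate),
                  loss="binary_crossentropy", metrics=["accuracy"])
    return model

# Grid search: train one model per combination, score it on validation data.
grid = {"units": [8, 32], "learning_rate": [1e-3, 1e-2]}
results = {}
for units, lr in itertools.product(grid["units"], grid["learning_rate"]):
    model = build_model(units, lr)
    model.fit(x_train, y_train, epochs=5, batch_size=32, verbose=0)
    scores = model.evaluate(x_val, y_val, verbose=0, return_dict=True)
    results[(units, lr)] = scores["loss"]

best = min(results, key=results.get)  # combination with the lowest val loss
print("best (units, learning_rate):", best)
```

For random search, you would sample combinations at random from the candidate ranges instead of iterating over all of them with `itertools.product`.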

Transfer Learning

Transfer learning uses a previously trained model as the starting point for a new task.

By reusing what the model learned from a large dataset, transfer learning can improve performance, especially when you have limited training data.
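The usual Keras pattern is to freeze a pre-trained base (set `trainable = False`) and train a new output layer on top of it. The sketch below shows that pattern in miniature: the "pre-trained" base is a tiny network trained on random synthetic data, an assumption made so the example runs without downloads. In a real project, the base would typically be one of the `keras.applications` models loaded with `weights="imagenet"`.

```python
import numpy as np
import keras
from keras import layers

rng = np.random.default_rng(0)

# 1) "Pre-train" a base network on a larger source task
#    (hypothetical synthetic data stands in for a real large dataset).
base = keras.Sequential([
    keras.Input(shape=(20,)),
    layers.Dense(32, activation="relu"),
    layers.Dense(16, activation="relu"),
], name="base")
source_model = keras.Sequential([
    keras.Input(shape=(20,)),
    base,
    layers.Dense(5, activation="softmax"),
])
source_model.compile(optimizer="adam", loss="sparse_categorical_crossentropy")
x_src = rng.normal(size=(1000, 20)).astype("float32")
y_src = rng.integers(0, 5, size=1000)
source_model.fit(x_src, y_src, epochs=2, verbose=0)

# 2) Freeze the pre-trained base so its weights are no longer updated.
base.trainable = False

# 3) Stack a new head for the smaller target task (2 classes here).
target_model = keras.Sequential([
    keras.Input(shape=(20,)),
    base,
    layers.Dense(2, activation="softmax"),
])
target_model.compile(optimizer="adam", loss="sparse_categorical_crossentropy")
x_tgt = rng.normal(size=(100, 20)).astype("float32")
y_tgt = rng.integers(0, 2, size=100)
target_model.fit(x_tgt, y_tgt, epochs=2, verbose=0)

print(len(target_model.trainable_weights))  # 2: only the new head's kernel and bias
```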

Model Evaluation and Interpretability

Once you have trained your model, it's essential to evaluate its performance using metrics such as accuracy, precision, recall, and F1 score.
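Precision, recall, and F1 score can be computed directly from the counts of true positives, false positives, and false negatives. The labels and predictions below are made-up values for a binary task; Keras also provides metric classes such as `keras.metrics.Precision` and `keras.metrics.Recall` that can be passed to `compile`.

```python
import numpy as np

# Hypothetical binary labels and model predictions (after thresholding
# predicted probabilities at 0.5).
y_true = np.array([1, 0, 1, 1, 0, 1])
y_pred = np.array([1, 0, 0, 1, 1, 1])

tp = np.sum((y_pred == 1) & (y_true == 1))  # true positives
fp = np.sum((y_pred == 1) & (y_true == 0))  # false positives
fn = np.sum((y_pred == 0) & (y_true == 1))  # false negatives

accuracy = np.mean(y_pred == y_true)
precision = tp / (tp + fp)  # of predicted positives, how many are correct
recall = tp / (tp + fn)     # of actual positives, how many were found
f1 = 2 * precision * recall / (precision + recall)

print(f"accuracy={accuracy:.3f} precision={precision:.3f} "
      f"recall={recall:.3f} f1={f1:.3f}")
# accuracy=0.667 precision=0.750 recall=0.750 f1=0.750
```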

Additionally, explore techniques to interpret the predictions made by your model, such as feature importance and visualization of activation maps.

Handling Sequential Data

When working with sequential data, such as time series or natural language data, it's crucial to understand how to handle and preprocess this type of data before building your sequential models. So, let's see how to handle sequential data.

Data Preprocessing

Before feeding the sequential data into our models, we need to preprocess it to ensure it is in a suitable format. It may involve steps such as tokenization, padding, and encoding.

Tokenization involves splitting the text (data) into individual words or characters. Meanwhile, padding ensures that all sequences have the same length. Encoding converts the text into numerical representations that the model can understand.
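The three steps can be sketched on a toy corpus as follows. The whitespace tokenizer and the hand-built vocabulary are simplifying assumptions; Keras's `TextVectorization` layer can handle tokenization and integer encoding for you. Padding uses `keras.utils.pad_sequences`.

```python
import keras

texts = ["the cat sat", "the dog"]  # hypothetical tiny corpus

# Tokenization: split each text into words.
tokenized = [text.split() for text in texts]

# Encoding: map each word to an integer ID (0 is reserved for padding).
vocab = {}
for tokens in tokenized:
    for token in tokens:
        vocab.setdefault(token, len(vocab) + 1)
sequences = [[vocab[token] for token in tokens] for tokens in tokenized]

# Padding: make every sequence the same length by appending zeros.
padded = keras.utils.pad_sequences(sequences, maxlen=4, padding="post")
print(padded)
# [[1 2 3 0]
#  [1 4 0 0]]
```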

Feature Engineering

In addition to preprocessing, feature engineering is crucial in extracting meaningful information from sequential data. It can involve techniques such as creating lag features for time series data or using word embeddings for natural language data.

Feature engineering helps the model capture essential patterns and relationships within the data.
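For time series, a lag feature is simply an earlier value of the series used as an input. The helper `make_lag_features` below is a hypothetical function written for this sketch and is applied to made-up data.

```python
import numpy as np

def make_lag_features(series, n_lags):
    """Hypothetical helper: turn a 1-D series into (X, y), where each row
    of X holds n_lags consecutive values and y holds the value after them."""
    X = np.stack([series[i:len(series) - n_lags + i] for i in range(n_lags)], axis=1)
    y = series[n_lags:]
    return X, y

series = np.array([10, 20, 30, 40, 50, 60])  # hypothetical time series
X, y = make_lag_features(series, n_lags=2)
print(X)  # rows: [10 20], [20 30], [30 40], [40 50]
print(y)  # [30 40 50 60]
```

The resulting `X` has shape `(samples, n_lags)`; to feed it to a recurrent layer, you would typically reshape it to `(samples, n_lags, 1)`.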

Handling Imbalanced Data

In some cases, sequential data may be imbalanced, meaning that certain classes or categories are underrepresented compared to others. It can lead to biased models that perform poorly on minority classes.

Explore techniques such as oversampling, undersampling, and class weighting to address this issue and improve the model's performance on imbalanced data.
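Class weighting is supported directly by `fit` through its `class_weight` argument, a dictionary mapping each class index to a weight. This sketch uses a made-up imbalanced dataset and the common "balanced" weighting formula; other weightings are equally valid.

```python
import numpy as np
import keras
from keras import layers

# Hypothetical imbalanced dataset: 90 samples of class 0, 10 of class 1.
rng = np.random.default_rng(0)
x = rng.normal(size=(100, 8)).astype("float32")
y = np.array([0] * 90 + [1] * 10)

# "Balanced" weights: n_samples / (n_classes * count_of_class),
# so the rare class gets a proportionally larger weight in the loss.
classes, counts = np.unique(y, return_counts=True)
class_weight = {int(c): float(len(y) / (len(classes) * n))
                for c, n in zip(classes, counts)}
print(class_weight)  # {0: 0.5555555555555556, 1: 5.0}

model = keras.Sequential([
    keras.Input(shape=(8,)),
    layers.Dense(16, activation="relu"),
    layers.Dense(1, activation="sigmoid"),
])
model.compile(optimizer="adam", loss="binary_crossentropy")

# class_weight scales each sample's loss by the weight of its class.
model.fit(x, y, epochs=3, batch_size=16, class_weight=class_weight, verbose=0)
```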

Advanced Techniques for Sequential Models

The advanced techniques for sequential models are as follows:

Transfer Learning with Sequential Models

Transfer learning is a powerful technique that lets users take models pre-trained on large datasets and apply them to specific sequential tasks.

Users can use pre-trained models, such as those trained on image or text data, and adapt them to their sequential data. It can save time and computational resources while improving the model's performance.

Implementing Attention Mechanisms

Attention mechanisms have gained popularity in sequential models, especially in natural language processing tasks. Attention allows the model to focus on relevant parts of the input sequence, giving more weight to important information.

You can also explore different attention mechanisms, such as self-attention and hierarchical attention, to see how they improve the model's performance.
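To show what self-attention computes, here is a NumPy sketch of scaled dot-product self-attention, the building block behind Keras's `MultiHeadAttention` layer. The random projection matrices stand in for weights a model would learn, and the sizes are arbitrary assumptions.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(x, w_q, w_k, w_v):
    """Scaled dot-product self-attention over one sequence.

    x: (seq_len, d_model); w_q, w_k, w_v: (d_model, d_k) projections.
    Returns the attended output (seq_len, d_k) and the attention weights
    (seq_len, seq_len); row i shows how much position i attends to each position.
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v          # queries, keys, values
    scores = q @ k.T / np.sqrt(k.shape[-1])      # similarity of every pair of positions
    weights = softmax(scores, axis=-1)           # each row sums to 1
    return weights @ v, weights

rng = np.random.default_rng(0)
seq_len, d_model, d_k = 5, 16, 8  # assumed toy sizes
x = rng.normal(size=(seq_len, d_model))
w_q, w_k, w_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))

output, weights = self_attention(x, w_q, w_k, w_v)
print(output.shape, weights.shape)  # (5, 8) (5, 5)
```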

Using Pre-trained Word Embeddings

Word embeddings, such as Word2Vec or GloVe, capture semantic relationships between words. They can enhance the representation of words in our sequential models.

Load pre-trained word embeddings and integrate them into your models. This helps the model understand the context and meaning of words, which can lead to better performance on natural language tasks.
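A common way to use pre-trained embeddings in Keras is to copy the vectors into a frozen `Embedding` layer. This sketch uses a tiny made-up vocabulary and 3-dimensional toy vectors in place of real GloVe or Word2Vec files, which you would need to download and parse yourself.

```python
import numpy as np
import keras
from keras import layers

# Hypothetical pre-trained vectors. In practice these would be parsed from
# a GloVe or Word2Vec file; 3-dimensional toy vectors stand in for them here.
pretrained = {
    "good":  [0.9, 0.1, 0.0],
    "great": [0.8, 0.2, 0.1],
    "bad":   [-0.7, 0.3, 0.0],
}
vocab = {"good": 1, "great": 2, "bad": 3, "unknown_word": 4}  # 0 = padding
embedding_dim = 3

# Build the embedding matrix: row i holds the vector for word ID i.
# Padding and words without a pre-trained vector keep all-zero rows.
embedding_matrix = np.zeros((len(vocab) + 1, embedding_dim), dtype="float32")
for word, idx in vocab.items():
    if word in pretrained:
        embedding_matrix[idx] = pretrained[word]

# Load the matrix into a frozen Embedding layer.
embedding_layer = layers.Embedding(len(vocab) + 1, embedding_dim, trainable=False)
embedding_layer.build((1,))
embedding_layer.set_weights([embedding_matrix])

model = keras.Sequential([
    keras.Input(shape=(4,), dtype="int32"),  # sequences of 4 word IDs
    embedding_layer,
    layers.GlobalAveragePooling1D(),         # average the word vectors
    layers.Dense(1, activation="sigmoid"),   # e.g. positive vs. negative sentiment
])
model.compile(optimizer="adam", loss="binary_crossentropy")
```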

Conclusion

In this blog post, we have explored the process of building sequential models with Keras. We started by understanding how Keras works and the concept of sequential models. We then delved into the practical aspects of building and improving sequential models.

We discussed the necessary libraries to import and how to define a sequential model using Keras's `Sequential` class. We also covered the steps of compiling the model, training it on data, and evaluating its performance, as well as improving it with dropout and L2 regularization.

Furthermore, we explored the importance of hyperparameter tuning and discussed techniques like grid search and random search for finding a good combination of hyperparameters for our models. We also touched upon the concept of transfer learning and how it can apply to sequential models.

Handling sequential data was another crucial aspect we covered. We discussed techniques like data preprocessing, feature engineering, and handling imbalanced data to ensure our models perform well on sequential datasets.

Lastly, we explored advanced techniques for sequential models, including attention mechanisms and pre-trained word embeddings. These techniques can significantly enhance the performance of our models in tasks like natural language processing.

So, follow the guidelines in this article to build robust and accurate sequential models using Keras. Remember to experiment, iterate, and fine-tune your models to achieve the best results.

Frequently Asked Questions (FAQs)

What is Keras, and why is it popular?

Keras is a high-level API for building deep learning models. It is popular for its user-friendly interface and seamless integration with frameworks like TensorFlow.

When should I use sequential models?

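Use a sequential model whenever your network is a plain stack of layers with one input and one output. That includes image and tabular classifiers as well as models for sequence prediction, time series analysis, and natural language processing.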

What are the key components of sequential models?

The key components include the input, hidden, and output layers.

How do I prepare data for sequential models?

Common preparation techniques include data normalization, one-hot encoding, and handling missing values. For sequence data such as text, tokenization and padding are also typical.

How can I improve the performance of sequential models?

Techniques like dropout, L2 regularization, and hyperparameter tuning can enhance model performance.