Introduction

PyTorch has emerged as a powerful framework for deep learning, offering flexibility, speed, and ease of use.

With its intuitive interface and dynamic computation graph, PyTorch has become a popular choice for researchers and practitioners alike. Many developers want to adopt PyTorch for their deep learning models but get lost along the way.

Not to worry! In this blog post, we will explore the best ways to leverage PyTorch to improve your deep learning models.

From getting started with PyTorch and understanding its core concepts to preprocessing data and building models, this article covers it all. You will also find information on advanced techniques such as regularization, optimization algorithms, and model visualization, as well as a discussion of transfer learning, handling large datasets, and deploying models in production.

By the end of this blog post, you will have a comprehensive understanding of how to harness the full potential of PyTorch to enhance your deep learning models. So let's dive in and unlock the true power of PyTorch!

Getting Started with PyTorch

PyTorch is easy to get started with and offers a user-friendly interface. In this section, you will learn about the installation process and how to set up your environment for PyTorch development.

Installation and Setup

To start with PyTorch, first make sure you have a Python 3 version supported by the current PyTorch release (the installation page on pytorch.org lists the supported versions). Then install PyTorch using pip or conda. For example, with pip you can run: pip install torch torchvision torchaudio.

For GPU support, install a CUDA-enabled PyTorch build (the install selector on pytorch.org generates the matching command) and make sure a compatible NVIDIA driver is installed. You can verify the installation by importing torch in a Python environment.
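A quick sanity check after installing is to print the version and ask PyTorch whether it can see a GPU. The snippet below is a minimal sketch; the device-selection line is a common idiom, not a required step.

```python
import torch

# Print the installed version and whether a CUDA-capable GPU is usable.
print("PyTorch version:", torch.__version__)
print("CUDA available:", torch.cuda.is_available())

# Pick the GPU when present, otherwise fall back to the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
x = torch.rand(2, 3, device=device)
print("Sample tensor is on:", x.device)
```

If "CUDA available" prints False on a machine with an NVIDIA GPU, the usual suspects are a CPU-only PyTorch build or an outdated driver.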

Finally, set up your development environment with your preferred text editor or IDE. PyTorch offers extensive documentation and tutorials to help you get started with deep learning projects efficiently.

Introduction to PyTorch's Autograd

Autograd is PyTorch's automatic differentiation system: it records the operations performed on tensors and computes gradients from that record. This functionality is crucial for training and optimizing deep learning models.
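To make this concrete, here is a small sketch that differentiates a simple function by hand-checkable arithmetic. For y = x² + 3x, the derivative is 2x + 3, which equals 7 at x = 2; autograd should reproduce that value.

```python
import torch

# requires_grad=True tells autograd to record operations involving x.
x = torch.tensor(2.0, requires_grad=True)

# y = x^2 + 3x, so dy/dx = 2x + 3, which is 7 at x = 2.
y = x ** 2 + 3 * x

# backward() walks the recorded graph and stores dy/dx in x.grad.
y.backward()
print(x.grad)  # tensor(7.)
```

In a real model, the same mechanism computes the gradient of the loss with respect to every parameter, which the optimizer then uses to update the weights.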

Preparing Data for Deep Learning Models

To train deep learning models, you need to prepare your data appropriately. In this section, you’ll find various techniques for loading and preprocessing data using PyTorch's DataLoader.

Data Loading with PyTorch's DataLoader

The DataLoader class in PyTorch wraps a Dataset and loads it efficiently in batches. Combined with a built-in or custom Dataset, it lets you load data from different sources, such as CSV files, image folders, and your own formats. Common preprocessing tasks, such as normalization and augmentation, are typically applied inside the Dataset through transforms.
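The division of labor between Dataset and DataLoader can be sketched as follows. The ToyTabularDataset class and the synthetic features are hypothetical stand-ins for data you would normally read from disk (for example, rows of a CSV file); the normalization is applied as a per-sample transform.

```python
import torch
from torch.utils.data import DataLoader, Dataset


class ToyTabularDataset(Dataset):
    """Hypothetical in-memory dataset; a real one might read rows from a CSV file."""

    def __init__(self, features: torch.Tensor, labels: torch.Tensor, transform=None):
        self.features = features
        self.labels = labels
        self.transform = transform  # optional per-sample preprocessing

    def __len__(self) -> int:
        return len(self.labels)

    def __getitem__(self, idx: int):
        x = self.features[idx]
        if self.transform is not None:
            x = self.transform(x)
        return x, self.labels[idx]


torch.manual_seed(0)
features = torch.randn(100, 4) * 5 + 10  # synthetic, un-normalized features
labels = torch.randint(0, 2, (100,))

# Normalize with statistics computed once over the dataset.
mean, std = features.mean(dim=0), features.std(dim=0)


def normalize(x: torch.Tensor) -> torch.Tensor:
    return (x - mean) / std


dataset = ToyTabularDataset(features, labels, transform=normalize)

# DataLoader handles batching and shuffling; num_workers > 0 loads batches in parallel.
loader = DataLoader(dataset, batch_size=16, shuffle=True, num_workers=0)

for batch_x, batch_y in loader:
    print(batch_x.shape, batch_y.shape)  # torch.Size([16, 4]) torch.Size([16])
    break
```

Keeping preprocessing in the Dataset means the DataLoader stays generic: the same loader code works whether samples come from memory, CSV files, or image folders.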

Handling Different Data Formats in PyTorch

Deep learning models often deal with diverse data types, such as images, text, and audio.

For structured data, use libraries like pandas to read CSV files, then convert them into PyTorch tensors. For images, torchvision offers transforms to preprocess and load them easily. NumPy arrays can be converted directly to tensors with torch.from_numpy. Text data can be tokenized and encoded using libraries like NLTK or spaCy before conversion.

PyTorch's DataLoader class helps with batching, shuffling, and parallelizing data loading. Keep data preprocessing consistent across the training, validation, and testing sets for reliable model performance.
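One way to keep preprocessing consistent is to fit normalization statistics on the training split only and apply the exact same function to every split. The sketch below assumes the data is already a NumPy array (as you would get from pandas via DataFrame.to_numpy()); the random data and the 160/40 split are illustrative.

```python
import numpy as np
import torch
from torch.utils.data import DataLoader, TensorDataset

rng = np.random.default_rng(0)
# Stand-in for data read from a CSV (e.g. via pandas' DataFrame.to_numpy()).
X = rng.normal(loc=50.0, scale=10.0, size=(200, 3)).astype(np.float32)
y = rng.integers(0, 2, size=200)

# Split first, then compute normalization statistics on the training split only.
X_train, X_val = X[:160], X[160:]
y_train, y_val = y[:160], y[160:]
mean, std = X_train.mean(axis=0), X_train.std(axis=0)


def preprocess(arr: np.ndarray) -> torch.Tensor:
    # The same transform, with training statistics, is applied to every split.
    return torch.from_numpy((arr - mean) / std)


train_ds = TensorDataset(preprocess(X_train), torch.from_numpy(y_train))
val_ds = TensorDataset(preprocess(X_val), torch.from_numpy(y_val))

train_loader = DataLoader(train_ds, batch_size=32, shuffle=True)
val_loader = DataLoader(val_ds, batch_size=32, shuffle=False)  # no need to shuffle evaluation data
```

Computing the statistics on the full dataset instead would leak information from the validation set into training.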

Building Deep Learning Models with PyTorch

In this section, you’ll learn how to build deep learning models with PyTorch's neural network module, torch.nn.

Overview of PyTorch's nn.Module and nn.Sequential

PyTorch provides the nn.Module and nn.Sequential classes as building blocks for constructing neural networks.

PyTorch's nn.Module serves as the base class for all neural network modules, enabling the creation of custom models by defining layers and operations.

nn.Sequential chains layers in the order they are passed in, which simplifies model creation and keeps the code for straightforward feed-forward architectures concise and readable.
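The two styles can express the same network. The sketch below builds a small multilayer perceptron both ways; the layer sizes are arbitrary choices for illustration.

```python
import torch
import torch.nn as nn


class MLP(nn.Module):
    """Subclassing nn.Module: declare layers in __init__, wire them in forward()."""

    def __init__(self, in_features: int, hidden: int, out_features: int):
        super().__init__()
        self.fc1 = nn.Linear(in_features, hidden)
        self.act = nn.ReLU()
        self.fc2 = nn.Linear(hidden, out_features)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.fc2(self.act(self.fc1(x)))


# The same architecture with nn.Sequential: layers run in the order given.
seq_mlp = nn.Sequential(
    nn.Linear(10, 32),
    nn.ReLU(),
    nn.Linear(32, 2),
)

x = torch.randn(4, 10)
print(MLP(10, 32, 2)(x).shape)  # torch.Size([4, 2])
print(seq_mlp(x).shape)         # torch.Size([4, 2])
```

nn.Sequential is convenient when data flows straight through; subclassing nn.Module becomes necessary once forward() needs branches, skip connections, or multiple inputs.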

Implementing Convolutional Neural Networks (CNNs) with PyTorch

Convolutional neural networks (CNNs) are widely used for image classification and computer vision tasks.

Implementing a CNN with PyTorch involves defining an nn.Sequential model or subclassing nn.Module and configuring layers such as Conv2d, MaxPool2d, and Linear. Specify activation functions like ReLU and apply dropout for regularization.

Finally, define the forward propagation logic in the module's forward() method. PyTorch provides flexibility and efficiency for building and training CNNs.
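Putting those pieces together, a minimal CNN might look like the sketch below. It assumes single-channel 28x28 inputs (MNIST-style) and 10 classes; both are illustrative assumptions, and the comments track the tensor shape after each layer.

```python
import torch
import torch.nn as nn


class SmallCNN(nn.Module):
    """Minimal CNN sketch, assuming 1-channel 28x28 inputs and 10 classes."""

    def __init__(self, num_classes: int = 10):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(1, 16, kernel_size=3, padding=1),   # -> 16 x 28 x 28
            nn.ReLU(),
            nn.MaxPool2d(2),                              # -> 16 x 14 x 14
            nn.Conv2d(16, 32, kernel_size=3, padding=1),  # -> 32 x 14 x 14
            nn.ReLU(),
            nn.MaxPool2d(2),                              # -> 32 x 7 x 7
        )
        self.classifier = nn.Sequential(
            nn.Flatten(),
            nn.Dropout(p=0.5),  # regularization, active only in training mode
            nn.Linear(32 * 7 * 7, num_classes),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # Forward propagation: feature extraction, then classification.
        return self.classifier(self.features(x))


model = SmallCNN()
logits = model(torch.randn(8, 1, 28, 28))
print(logits.shape)  # torch.Size([8, 10])
```

The output is raw logits; nn.CrossEntropyLoss applies the softmax internally during training.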

Enhancing Deep Learning Models

PyTorch provides a flexible framework for developing and enhancing deep neural networks with capabilities like dynamic computation graphs, modular components, and Pythonic syntax.

PyTorch enables building custom loss functions, activation layers, regularization techniques, and other components tailored to model goals.

The ability to tweak every aspect of model architecture gives fine-grained control over model behavior and performance. PyTorch also supports rapid iteration for testing enhancements through seamless integration with Python and gradient tracking for easy debugging.

With strong community support and pre-built modules for vision, text, reinforcement learning, and more, PyTorch empowers developers to move beyond out-of-the-box networks and enhance deep learning capabilities.


Handling Large Datasets with PyTorch

PyTorch offers multi-GPU and distributed training capabilities that speed up model development on large datasets. Through parallelized data loading and by splitting work across GPUs (replicating the model on each device or partitioning a large model between devices), PyTorch lets you use multiple devices for faster batch training and inference.

The distributed communication package torch.distributed further scales training across clusters and cloud-based resources by synchronizing gradients and parameters between processes. These capabilities let developers speed up deep learning pipelines and improve model accuracy by training on more data.
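The core pattern for data-parallel training is DistributedDataParallel (DDP) plus a DistributedSampler. The sketch below is a single-process demonstration under several assumptions: the CPU-friendly "gloo" backend, a file-based rendezvous, world_size=1, and a synthetic regression dataset. In a real multi-GPU job, a launcher such as torchrun starts one process per GPU and you would typically use the "nccl" backend.

```python
import os
import tempfile

import torch
import torch.distributed as dist
import torch.nn as nn
from torch.nn.parallel import DistributedDataParallel as DDP
from torch.utils.data import DataLoader, TensorDataset
from torch.utils.data.distributed import DistributedSampler


def train_one_epoch(rank: int, world_size: int, init_file: str) -> float:
    # Every process joins the same group; "gloo" works on CPU, "nccl" is typical for GPUs.
    dist.init_process_group(
        backend="gloo",
        init_method=f"file://{init_file}",
        rank=rank,
        world_size=world_size,
    )
    torch.manual_seed(0)
    dataset = TensorDataset(torch.randn(64, 8), torch.randn(64, 1))  # synthetic data
    # DistributedSampler hands each process a disjoint shard of the dataset.
    sampler = DistributedSampler(dataset, num_replicas=world_size, rank=rank, shuffle=True)
    loader = DataLoader(dataset, batch_size=16, sampler=sampler)

    # DDP averages gradients across processes during backward().
    model = DDP(nn.Linear(8, 1))
    optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
    loss_fn = nn.MSELoss()

    sampler.set_epoch(0)  # call with the epoch number so shuffling differs per epoch
    total = 0.0
    for inputs, targets in loader:
        optimizer.zero_grad()
        loss = loss_fn(model(inputs), targets)
        loss.backward()
        optimizer.step()
        total += loss.item()

    dist.destroy_process_group()
    return total / len(loader)


if __name__ == "__main__":
    # Single-process demo; real jobs run this function once per process/GPU.
    with tempfile.TemporaryDirectory() as tmp:
        avg_loss = train_one_epoch(rank=0, world_size=1, init_file=os.path.join(tmp, "init"))
        print(f"average loss: {avg_loss:.4f}")
```

Because each process only sees its shard, adding processes increases throughput while DDP keeps the model replicas in sync.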

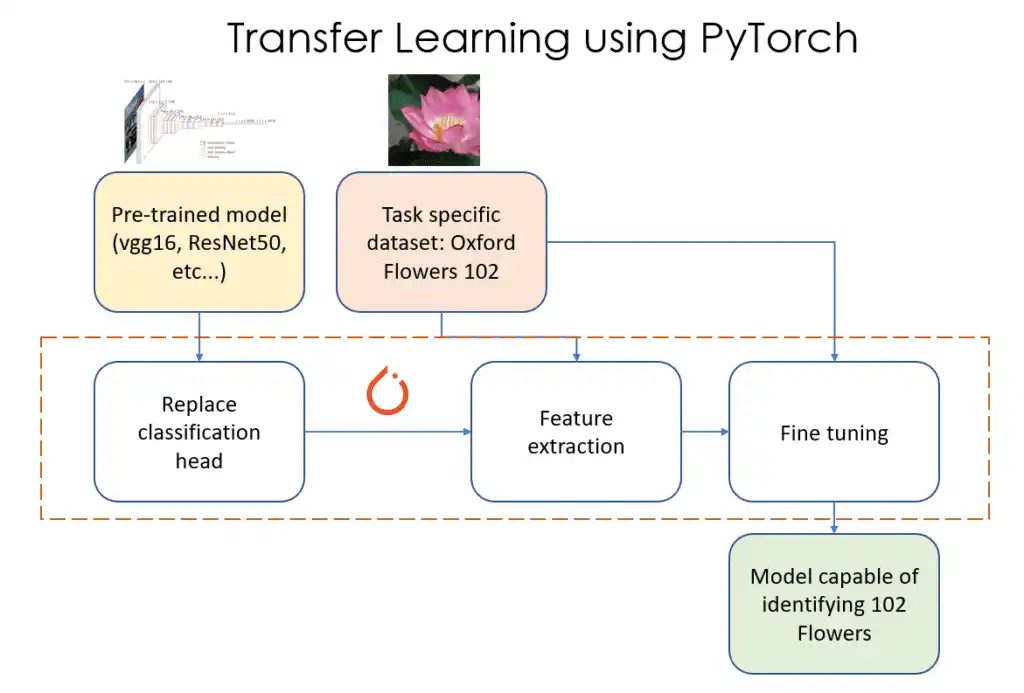

Transfer Learning with PyTorch

PyTorch simplifies transfer learning, which reuses parameters learned by pretrained models to give new networks a head start. By loading weights from models pretrained on large datasets and then fine-tuning them on new tasks, you can speed up optimization and improve convergence.

Just a few lines of code load pretrained weights before re-training top layers for custom datasets. PyTorch’s modularity makes swapping component layers straightforward to enhance domain-specific performance. Transfer learning with PyTorch provides an efficient method to boost deep learning models through knowledge transfer from related tasks.

Optimizing Deep Learning Models with PyTorch

PyTorch provides a flexible framework for optimizing deep neural networks to speed up training, reduce inference latency, and improve model accuracy. Developers can tap into multiple levels of optimization and customization in PyTorch for incremental, iterative model improvements.

Rapid Iteration for Optimization

PyTorch's define-by-run model building style based on dynamic computational graphs enables rapid optimization iteration compared to static graph frameworks.

Because you adjust model architecture, loss functions, and parameters in native Python without a separate graph compilation step, PyTorch lets you test incremental enhancements quickly. Autograd records operations as they run, so you can inspect tensors and gradients with ordinary Python debugging tools. This agile approach makes it painless to tweak an individual layer or algorithm while searching for the best configuration.

Custom Loss Functions

PyTorch's flexibility lets developers implement custom loss functions that match the model's needs rather than being constrained to predefined losses like MSE or binary cross-entropy. Custom losses provide more fine-grained control over what the optimizer prioritizes.

For example, a multi-class classifier could apply higher penalties to classes that are frequently misclassified, such as minority classes, to improve their recall. Anomaly detection models may benefit from an asymmetric loss that weights outliers more heavily.
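A simple version of the idea of penalizing some classes more heavily is class-weighted cross-entropy. The sketch below writes it as a custom nn.Module so the weighting logic is visible and easy to modify; the weights themselves are hypothetical and fixed, whereas a truly adaptive loss would update them during training (for example, from per-class error rates). For this static case, the built-in nn.CrossEntropyLoss(weight=...) computes the same value.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class ClassWeightedCrossEntropy(nn.Module):
    """Cross-entropy where each sample's loss is scaled by the weight of its true class."""

    def __init__(self, class_weights: torch.Tensor):
        super().__init__()
        # A buffer moves with the module on .to(device) but is not a trainable parameter.
        self.register_buffer("class_weights", class_weights)

    def forward(self, logits: torch.Tensor, targets: torch.Tensor) -> torch.Tensor:
        log_probs = F.log_softmax(logits, dim=1)                     # (N, C)
        nll = -log_probs.gather(1, targets.unsqueeze(1)).squeeze(1)  # (N,)
        w = self.class_weights[targets]                              # (N,)
        # Weighted mean, the same normalization F.cross_entropy uses with weight=.
        return (w * nll).sum() / w.sum()


# Hypothetical weights: class 2 is a rare, often-missed class, so its errors cost 3x.
weights = torch.tensor([1.0, 1.0, 3.0])
loss_fn = ClassWeightedCrossEntropy(weights)

torch.manual_seed(0)
logits = torch.randn(6, 3, requires_grad=True)
targets = torch.tensor([0, 1, 2, 2, 1, 0])
loss = loss_fn(logits, targets)
loss.backward()  # gradients flow through the custom loss like any built-in one
print(loss.item())
```

Because the loss is an ordinary module, swapping in per-sample weighting or an asymmetric penalty only means changing forward().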


Regularization for Generalization

Regularization techniques reduce overfitting on training data and improve generalization to new, unseen data. PyTorch natively supports common regularizers: L2 weight decay through the optimizers' weight_decay argument, and dropout through nn.Dropout. An L1 penalty can be added to the loss by hand.

Developers can also create custom regularizers tailored to their architectures, such as penalties that encourage sparse activations; layers like batch normalization can also have a regularizing effect. Combined with a suitable learning rate schedule, regularization is a powerful optimization strategy.
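The sketch below combines the three regularizers in one training step: dropout as a layer, L2 via weight_decay, and a manual L1 penalty. The data is synthetic and the strengths (p=0.3, weight_decay=1e-4, l1_lambda=1e-5) are illustrative values, not recommendations.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
model = nn.Sequential(
    nn.Linear(20, 64),
    nn.ReLU(),
    nn.Dropout(p=0.3),  # randomly zeroes 30% of activations, in training mode only
    nn.Linear(64, 1),
)

# L2: for SGD, weight_decay adds weight_decay * param to each gradient (an L2 penalty).
optimizer = torch.optim.SGD(model.parameters(), lr=0.01, weight_decay=1e-4)
loss_fn = nn.MSELoss()
l1_lambda = 1e-5  # hypothetical L1 strength; tune for your model

x, y = torch.randn(32, 20), torch.randn(32, 1)  # synthetic batch

model.train()  # enables dropout
optimizer.zero_grad()
data_loss = loss_fn(model(x), y)
# L1 penalty on the weight matrices (biases are usually left unpenalized).
l1_penalty = sum(p.abs().sum() for name, p in model.named_parameters() if name.endswith("weight"))
loss = data_loss + l1_lambda * l1_penalty
loss.backward()
optimizer.step()

model.eval()  # disables dropout for validation and inference
```

Forgetting model.eval() before evaluation is a common bug: dropout would stay active and make predictions noisy.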

Gradient Clipping

PyTorch supports gradient clipping to address exploding gradients, which hurt model stability and convergence. By capping gradients, either by their overall norm (torch.nn.utils.clip_grad_norm_) or element-wise by value (torch.nn.utils.clip_grad_value_), clipping prevents the large, unstable updates that derail training.

This can make higher learning rates usable and helps train models that otherwise suffer frequent gradient explosions. Keeping gradients within a viable range is an important tool for optimizing deep networks.
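In a training loop, clipping goes between backward() and optimizer.step(). The sketch below deliberately scales the inputs up to provoke large gradients; max_norm=1.0 is an illustrative threshold.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
model = nn.Linear(10, 1)
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

x = torch.randn(16, 10) * 100  # large inputs to provoke large gradients
y = torch.randn(16, 1)

optimizer.zero_grad()
loss = nn.functional.mse_loss(model(x), y)
loss.backward()

# Rescale all gradients together so their combined L2 norm is at most max_norm.
# The return value is the total norm *before* clipping, which is useful to log.
total_norm = torch.nn.utils.clip_grad_norm_(model.parameters(), max_norm=1.0)
optimizer.step()
print(f"gradient norm before clipping: {total_norm:.1f}")
```

Norm-based clipping preserves the direction of the overall gradient; clip_grad_value_ clamps each element independently, which can change that direction.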

Learning Rate Scheduling

Systematically adjusting the learning rate over training epochs improves convergence and accuracy by moving from large exploratory steps to finer ones. PyTorch's torch.optim.lr_scheduler module makes implementing learning rate schedules straightforward.

Beyond step and exponential decay, schedules can adapt to metrics (for example, reducing the rate when validation loss plateaus) or follow non-monotonic patterns, such as cyclical rates or cosine annealing with warm restarts, that periodically raise the learning rate to help escape poor local minima.
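Here is a minimal sketch of a step-decay schedule, recording the learning rate used in each epoch. The model is a placeholder and the training batches are elided; the point is where scheduler.step() goes relative to optimizer.step().

```python
import torch
import torch.nn as nn

model = nn.Linear(4, 1)
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
# Halve the learning rate every 2 epochs.
scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=2, gamma=0.5)

lrs = []
for epoch in range(6):
    # ... training batches would run here, each ending in optimizer.step() ...
    optimizer.step()
    lrs.append(scheduler.get_last_lr()[0])
    scheduler.step()  # advance the schedule once per epoch, after the optimizer

print(lrs)
```

The same module also provides ReduceLROnPlateau (metric-driven), CyclicLR, and CosineAnnealingWarmRestarts for the non-monotonic patterns mentioned above; ReduceLROnPlateau is the exception in that its step() takes the monitored metric as an argument.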

Weight Initialization

Strategic weight initialization provides better starting conditions for network optimization. PyTorch's torch.nn.init module offers multiple initialization schemes, such as Xavier (Glorot) normal or uniform initialization, with tunable parameters like the gain.

Developers can also build custom init schemes adapted to model size, activation functions, and data distribution statistics to align initial weights with ideal convergence ranges.
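A custom init scheme is usually a function applied to every submodule with model.apply(). The sketch below picks Xavier uniform for Linear layers (with the gain PyTorch recommends for tanh) and Kaiming (He) normal for convolutions followed by ReLU; the network itself is a made-up example for 3x32x32 inputs.

```python
import torch.nn as nn


def init_weights(module: nn.Module) -> None:
    # Xavier (Glorot) uniform suits tanh/sigmoid layers; the gain is tunable.
    if isinstance(module, nn.Linear):
        nn.init.xavier_uniform_(module.weight, gain=nn.init.calculate_gain("tanh"))
        nn.init.zeros_(module.bias)
    # Kaiming (He) normal is the usual choice before ReLU activations.
    elif isinstance(module, nn.Conv2d):
        nn.init.kaiming_normal_(module.weight, mode="fan_out", nonlinearity="relu")
        if module.bias is not None:
            nn.init.zeros_(module.bias)


# Example network, assuming 3x32x32 inputs (the 3x3 conv leaves 30x30 feature maps).
model = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3), nn.ReLU(), nn.Flatten(),
    nn.Linear(8 * 30 * 30, 16), nn.Tanh(), nn.Linear(16, 2),
)
model.apply(init_weights)  # apply() visits every submodule recursively
```

Matching the initializer to the activation keeps activation variance roughly stable across layers at the start of training.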

Activation Functions

PyTorch makes it easy to swap standard activation functions like ReLU and Tanh for customized ones. New activations can improve a model's representational power and trainability through properties like bounded output ranges.

For vision models, learnable per-channel activations can help during fine-tuning. Dynamic activations, which switch between functions depending on the epoch or training phase, can target different needs at different stages of training.
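A custom activation is just an nn.Module with a forward() method, and learnable parameters make it trainable. The sketch below defines a hypothetical Swish-style activation, x · sigmoid(β·x), with one learnable β per channel, and places it next to PyTorch's built-in per-channel learnable activation, nn.PReLU.

```python
import torch
import torch.nn as nn


class LearnableSwish(nn.Module):
    """Hypothetical activation x * sigmoid(beta * x) with a learnable beta per channel.

    With beta = 1 it matches PyTorch's built-in nn.SiLU.
    """

    def __init__(self, num_channels: int):
        super().__init__()
        # Shape (1, C, 1, 1) broadcasts over batch, height, and width.
        self.beta = nn.Parameter(torch.ones(1, num_channels, 1, 1))

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return x * torch.sigmoid(self.beta * x)


block = nn.Sequential(
    nn.Conv2d(3, 16, kernel_size=3, padding=1),
    LearnableSwish(16),           # custom, one learnable beta per channel
    nn.Conv2d(16, 16, kernel_size=3, padding=1),
    nn.PReLU(num_parameters=16),  # built-in learnable per-channel activation
)

out = block(torch.randn(2, 3, 8, 8))
out.sum().backward()
print(block[1].beta.grad.shape)  # torch.Size([1, 16, 1, 1])
```

Because beta is an nn.Parameter, the optimizer updates it along with the convolution weights.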

Profiling for Optimization

PyTorch Profiler traces operators, kernels, and calls during model execution to pinpoint bottlenecks. Optimization efforts can then concentrate on heavy operations or underutilized GPUs identified through profiling. Common targets include reducing I/O, leveraging asynchronous execution, optimizing data pipelines, and strategic batching. Profiling ensures that optimization effort goes to the actual bottlenecks in your workflow.
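A minimal profiling session with torch.profiler looks like the sketch below. The model and input sizes are arbitrary; record_function labels a region so it shows up as its own row in the results.

```python
import torch
import torch.nn as nn
from torch.profiler import ProfilerActivity, profile, record_function

model = nn.Sequential(nn.Linear(256, 512), nn.ReLU(), nn.Linear(512, 10))
inputs = torch.randn(64, 256)

# Add ProfilerActivity.CUDA to the list when profiling GPU kernels.
with profile(activities=[ProfilerActivity.CPU], record_shapes=True) as prof:
    with record_function("model_inference"):  # label a region of interest
        model(inputs)

# Operators sorted by total CPU time show where optimization effort should go.
print(prof.key_averages().table(sort_by="cpu_time_total", row_limit=10))
```

For longer training runs, profile exports traces (for example, via prof.export_chrome_trace) that can be inspected on a timeline, which helps spot gaps where the GPU waits on data loading.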

Conclusion

In closing, deep learning stands poised to revolutionize every industry by unlocking smarter products, predictive analytics, computer vision breakthroughs and more. As these complex neural networks become integral across global tech stacks, versatile yet specialized frameworks like PyTorch provide the bedrock for development and deployment.

PyTorch’s Python-first design with dynamic computational graphs simplifies the construction, debugging and optimization of neural network architectures.

Rapid iteration cycles also accelerate AI innovation and commercialization, enabling a new wave of cutting-edge deep learning applications. With venture capital investment in AI topping $93.5 billion worldwide in 2021 according to Statista, the need for agile deep learning frameworks continues to grow.

Yet PyTorch offers more than just strong developmental foundations. Seamless interoperability with Python data science stacks and frictionless exporting to production environments ensure models can launch reliably at scale.

This potent combination of research and applied strengths has made PyTorch the framework of choice for over half of AI developers according to a 2022 Entrepreneur Handbook survey.

PyTorch provides expansive options for optimizing deep learning models through customized loss calculations, robust regularization techniques, strategic learning rate manipulation, tailored weight initialization, flexible activations, and rigorous profiling. PyTorch allows both high-level and low-level control over optimization workflows to enhance model performance.

So whether you are looking to sharpen computer vision, process natural language or pioneer novel algorithms, let PyTorch accelerate your deep learning today.


Frequently Asked Questions (FAQs)

How can I load image data in PyTorch?

You can use the ImageFolder class in torchvision.datasets to load image data. It expects one subdirectory per class and assigns each image a label based on the folder it sits in, making it easy to train image classification models.

What is data augmentation, and how can I apply it in PyTorch?

Data augmentation is a technique for artificially increasing the size and diversity of your training dataset by applying random transformations to the data. In PyTorch, you can use the torchvision.transforms module to apply augmentation techniques such as random rotation, scaling, and cropping.

How can I handle sequential data, such as text, in PyTorch?

You can handle sequential data like text by tokenizing it, mapping each token to an integer id, and converting those ids into dense vectors with word embeddings. PyTorch's nn.Embedding layer learns these vectors during training, and the resulting tensors can be fed into sequence models.
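The text-to-tensor path can be sketched in a few lines. The tiny vocabulary and whitespace tokenizer below are hypothetical simplifications; real pipelines build the vocabulary from a corpus with a proper tokenizer such as spaCy or NLTK.

```python
import torch
import torch.nn as nn

# Toy vocabulary (hypothetical); id 0 is reserved for padding, 1 for unknown words.
vocab = {"<pad>": 0, "<unk>": 1, "pytorch": 2, "makes": 3, "deep": 4, "learning": 5, "fun": 6}


def encode(sentence: str, max_len: int = 6) -> torch.Tensor:
    # Whitespace tokenization, map tokens to ids, then pad/truncate to a fixed length.
    ids = [vocab.get(tok, vocab["<unk>"]) for tok in sentence.lower().split()]
    ids = ids[:max_len] + [vocab["<pad>"]] * (max_len - len(ids))
    return torch.tensor(ids, dtype=torch.long)


batch = torch.stack([encode("PyTorch makes deep learning fun"), encode("learning PyTorch")])

# nn.Embedding maps each token id to a trainable dense vector.
# padding_idx keeps the padding vector at zero and excludes it from gradient updates.
embedding = nn.Embedding(num_embeddings=len(vocab), embedding_dim=8, padding_idx=vocab["<pad>"])
vectors = embedding(batch)
print(batch)
print(vectors.shape)  # torch.Size([2, 6, 8])
```

The resulting (batch, sequence, features) tensor is the shape expected by layers such as nn.LSTM(batch_first=True) or nn.TransformerEncoder(batch_first=True).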

Can I preprocess audio data using PyTorch?

Yes. You can preprocess audio data in PyTorch by converting it into spectrograms, which capture how frequency content changes over time. Core PyTorch provides torch.stft, and the torchaudio library adds transforms such as Spectrogram and MelSpectrogram for turning audio signals into spectrograms that can be used as input for deep learning models.
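The sketch below computes a power spectrogram with core torch.stft, so it runs without torchaudio. It uses a synthetic one-second 440 Hz tone at an assumed 16 kHz sample rate; n_fft=512 and hop_length=128 are illustrative settings, giving a frequency resolution of 16000 / 512 = 31.25 Hz per bin.

```python
import math

import torch

sample_rate = 16_000
t = torch.arange(sample_rate) / sample_rate    # 1 second of timestamps
waveform = torch.sin(2 * math.pi * 440.0 * t)  # synthetic 440 Hz tone

n_fft, hop_length = 512, 128
stft = torch.stft(
    waveform,
    n_fft=n_fft,
    hop_length=hop_length,
    window=torch.hann_window(n_fft),
    return_complex=True,  # complex output; required in recent PyTorch versions
)
spectrogram = stft.abs() ** 2              # power spectrogram: (freq_bins, frames)
log_spec = torch.log(spectrogram + 1e-10)  # log scale is a common model input

print(spectrogram.shape)  # (n_fft // 2 + 1, frames) = torch.Size([257, 126])
peak_bin = spectrogram.mean(dim=1).argmax().item()
print(peak_bin * sample_rate / n_fft)  # close to 440 Hz, within one 31.25 Hz bin
```

For mel-scaled features, torchaudio.transforms.MelSpectrogram wraps the same STFT step and adds a mel filter bank.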