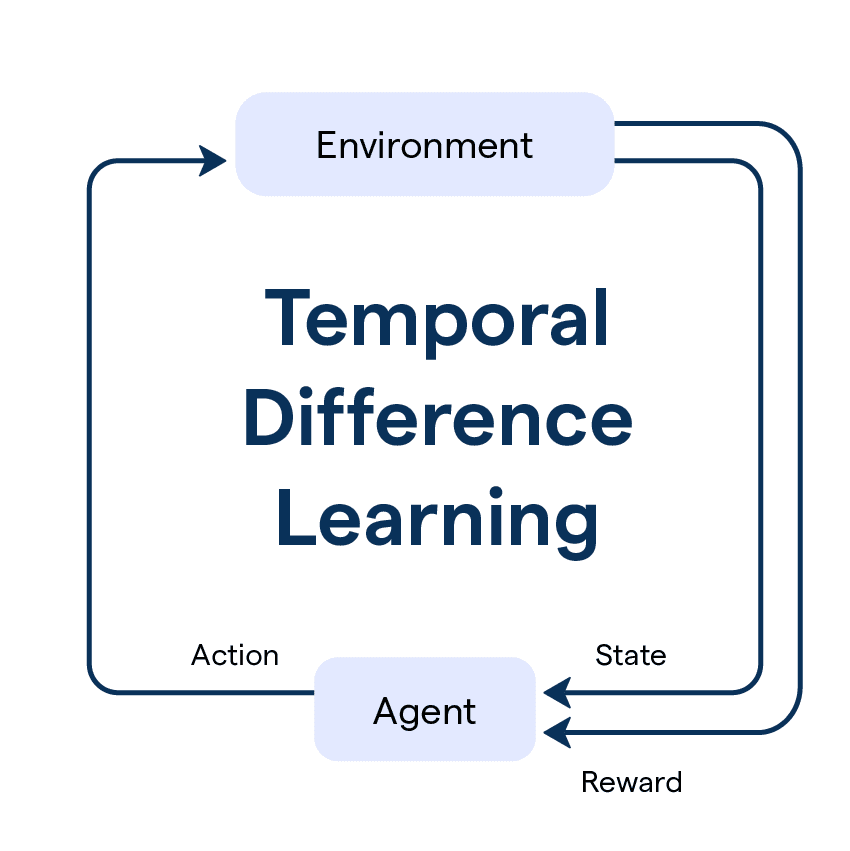

What is Temporal Difference Learning (TD Learning)?

Temporal difference (TD) learning is a method in reinforcement learning for training agents to make optimal decisions. It works by learning from the difference between temporally successive predictions.

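For example, a robot may predict that taking an action in a particular state will produce +5 reward. On taking that action, it receives +10 reward. Setting aside any value it expects from later states, the gap of +5 between outcome and prediction is the TD error, and it prompts the robot to update its predictions.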

Over time, by minimizing TD errors, the agent learns to make increasingly accurate predictions about long-term rewards. This lets it determine the best actions to take in different situations.

TD learning has key advantages over other reinforcement learning techniques:

- It learns from experience without detailed environment models.

- It can learn from incomplete sequences, before the final outcome is known.

- It is computationally simple to implement.

TD learning has enabled breakthroughs in game AI, robotics, and complex automation. The ability to make far-sighted decisions from ongoing experience makes TD learning powerful and broadly applicable.

How Does Temporal Difference Learning Work?

Temporal Difference Learning relies on bootstrapping: it improves one prediction by using the next one.

After each step, the agent compares its prediction for the current state with a better-informed target, namely the reward it just received plus its discounted prediction for the next state. It then nudges the current prediction toward that target. Repeated over many steps, these small corrections gradually bring expectations in line with reality, which is key to the effectiveness of TD Learning.
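To make this concrete, here is a minimal sketch of the simplest TD method, usually called TD(0), on a made-up three-state chain. The chain, its rewards, the function name `td0_chain`, and the settings `alpha` (step size) and `gamma` (discount) are all illustrative assumptions, not something prescribed by TD learning itself.

```python
def td0_chain(num_episodes=200, alpha=0.5, gamma=1.0):
    """Tabular TD(0) on a toy chain: s0 -> s1 -> s2 -> terminal."""
    rewards = [0.0, 0.0, 1.0]       # reward received when leaving each state
    values = [0.0, 0.0, 0.0, 0.0]   # last entry is the terminal state, kept at 0

    for _ in range(num_episodes):
        for state in range(3):
            next_state = state + 1
            # Target: reward just received plus discounted prediction for the next state.
            target = rewards[state] + gamma * values[next_state]
            td_error = target - values[state]
            # Nudge the current prediction toward the target.
            values[state] += alpha * td_error

    return values[:3]


print(td0_chain())
```

Because the only reward (+1) sits at the end of the chain and nothing is discounted here, the predictions for all three states should drift toward 1 as episodes accumulate.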

Applications of Temporal Difference Learning

Temporal Difference Learning finds wide applications in various domains, including:

- Understanding Conditions like Schizophrenia: TD Learning is used to study and understand conditions such as schizophrenia, which involve impairments in learning and decision-making processes.

- Pharmacological Manipulations of Dopamine: It helps explore the effects of pharmacological interventions on learning by manipulating the dopamine system.

- Machine Learning: Temporal Difference Learning is widely used in machine learning algorithms, particularly in reinforcement learning tasks, due to its effectiveness and efficiency.

Parameters in Temporal Difference Learning

In this section, we'll explore the specific parameters that come into play when we talk about Temporal Difference Learning.

State-Value Function

Ever heard of 'state' in machine learning? A state-value function estimates the expected long-term return when starting from state s and following policy π.

It's like a grading system for how good or bad states are in a day-long adventure.

Action-Value Function

This one's a bit different. An action-value function measures the expected long-term reward when one executes a certain action in a particular state and follows the policy afterwards.

Imagine it like checking what benefit you'll get if you make a specific move in your favorite board game!

Discount Factor

In TD learning, the discount factor is that cool dude that keeps the sum of rewards finite, even over very long horizons. It’s a number between 0 and 1, usually written γ, that sets the weight of future rewards.

The larger the discount factor, the more attention we give to future rewards. It's like choosing between having a small piece of cake right now or a bigger one in a bit!
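The cake trade-off can be sketched in a few lines. The reward sequence below (a small reward now, a bigger one three steps later) and the function name `discounted_return` are made up for illustration.

```python
def discounted_return(rewards, gamma):
    """Sum the rewards, weighting the reward at step k by gamma ** k."""
    total = 0.0
    for step, reward in enumerate(rewards):
        total += (gamma ** step) * reward
    return total


# A small piece of cake now (1.0) and a bigger one three steps later (10.0).
rewards = [1.0, 0.0, 0.0, 10.0]
for gamma in (0.0, 0.5, 0.9, 1.0):
    print(gamma, discounted_return(rewards, gamma))
```

With a discount factor of 0 only the immediate reward counts, and as the factor moves toward 1 the later, bigger reward contributes more and more to the total.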

Learning Rate

Then there's the 'learning rate'. Generally denoted by α, the learning rate is the degree to which newly acquired information overrides old information. An analogy?

Think of it like balancing between lending your ear to a fresh piece of gossip versus sticking to the old ones.
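In code, that balancing act is a one-line blend. This is only a sketch; the name `blend` and the numbers are assumptions chosen for illustration.

```python
def blend(old_estimate, new_information, alpha):
    """Move the old estimate a fraction alpha of the way toward the new information."""
    return old_estimate + alpha * (new_information - old_estimate)


print(blend(2.0, 10.0, alpha=0.0))   # ignores the new gossip entirely
print(blend(2.0, 10.0, alpha=0.5))   # meets it halfway
print(blend(2.0, 10.0, alpha=1.0))   # forgets the old story completely
```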

TD Error

To make the learning process more precise, we have the TD error. It measures the difference between the current estimate for a state (or state-action pair) and a fresher target: the observed reward plus the discounted estimate for the next state.

If the old gossip turns out to match the new info exactly, the TD error is zero!
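In its standard one-step form, the TD error compares the current estimate with the reward plus the discounted estimate of the next state. Here is a small sketch of that calculation; the function name `td_error`, the default discount of 0.9, and the example numbers are assumptions.

```python
def td_error(reward, next_value, current_value, gamma=0.9):
    """One-step TD error: (reward + discounted next estimate) - current estimate."""
    return reward + gamma * next_value - current_value


# The current estimate was 2.5; we then saw a reward of 1.0
# and estimate the next state to be worth 2.0.
print(td_error(reward=1.0, next_value=2.0, current_value=2.5))

# When the estimate already matches the target, the error is zero.
print(td_error(reward=1.0, next_value=0.0, current_value=1.0))
```

A positive error says things went better than predicted, a negative one says worse, and zero means there is nothing to correct.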

Temporal Difference Learning in Neuroscience

In this section, we'll delve into how Temporal Difference Learning has fascinating implications in the world of neuroscience.

Reward Prediction Error Theory

Temporal Difference Learning models are an inspiration for the Reward Prediction Error Theory.

This theory suggests that the release of the neurotransmitter dopamine encodes a prediction error signal, informing animals (including humans) how to adjust their behaviors for maximizing future rewards.

Biological Plausibility

Temporal Difference learning algorithms are biologically plausible, with neural circuits in the brain exhibiting TD-like learning.

Observations of the midbrain dopamine system show similarities in its error training signals and the signals produced by Temporal Difference Learning algorithms.

Neural Basis of Learning

Modeling the neural basis of learning with the use of Temporal Difference Learning has significantly impacted our understanding of the brain.

The algorithms shed light on how the brain computes reward prediction errors and updates representations of stimulus-value associations.

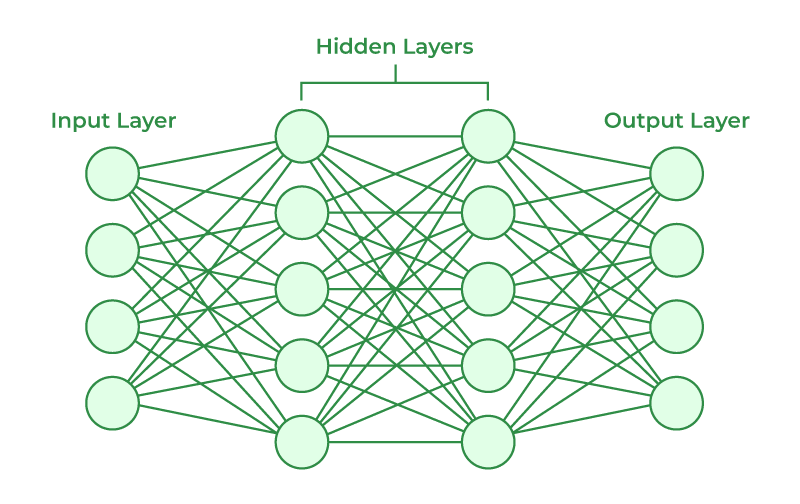

Artificial Neural Networks

Temporal Difference Learning is effectively employed in artificial neural networks.

In neuroscience, this translates to better understanding of neural plasticity mechanisms, synaptic changes, and the integration of artificial learning systems with biological counterparts.

Behavioral and Cognitive Processes

The application of Temporal Difference Learning has enhanced research on behavioral and cognitive processes in psychology and cognitive neuroscience, enriching our awareness of decision-making, goal-directed behaviors, and learning.

Peering into the intersection between Temporal Difference Learning and neuroscience, we find remarkable connections that serve as keystones in unlocking the intricate wonders of the brain and its learning processes.

Benefits of Temporal Difference Learning

In this section, we'll explore the various advantages of Temporal Difference Learning, revealing why it's an integral part of reinforcement learning.

Efficient Learning

By updating value estimates at every time step, using the difference between successive predictions, Temporal Difference Learning can start improving before an episode has finished. The result? Learning that makes use of each piece of experience as soon as it arrives.

Increased Adaptability

Temporal Difference Learning is an adaptive dynamo.

It enables agents to learn and adapt to changing environments due to its online learning ability, which yields better responsiveness to changing rewards and states.

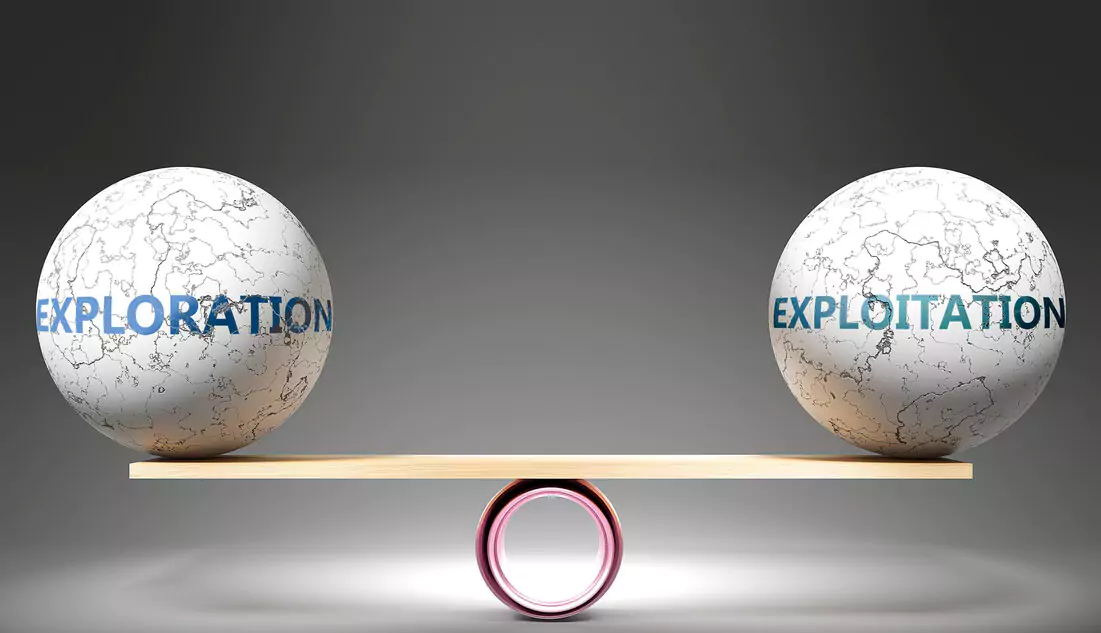

Balancing Exploration and Exploitation

A well-honed equilibrium between exploration and exploitation is essential in reinforcement learning.

Temporal Difference Learning techniques, like SARSA, learn and fine-tune policies that harmonize this delicate balance.
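One common way to strike this balance in practice is epsilon-greedy action selection, which is often paired with SARSA. The sketch below is an illustration under that assumption; the function name `epsilon_greedy` and the example values are made up.

```python
import random


def epsilon_greedy(action_values, epsilon, rng=random):
    """Pick a random action with probability epsilon, otherwise the best-looking one."""
    if rng.random() < epsilon:
        return rng.randrange(len(action_values))    # explore
    return max(range(len(action_values)), key=lambda a: action_values[a])  # exploit


estimates = [0.1, 0.9, 0.3]   # hypothetical value estimates for three actions
print(epsilon_greedy(estimates, epsilon=0.1))
```

With `epsilon=0` the agent always exploits its current estimates, and with `epsilon=1` it explores at random on every step.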

Online Learning Capabilities

One of the significant strengths of Temporal Difference Learning is its ability to learn online in real time.

It doesn't require the full details of an episode to be known in advance, unlike Monte Carlo methods, granting it added versatility in practical applications.

Applicability in Various Domains

The sheer range of Temporal Difference Learning's applicability across numerous domains is truly impressive.

From robotics and control systems to artificial intelligence and game playing, the benefits of this learning method reach far and wide.

Limitations of Temporal Difference Learning

While Temporal Difference Learning offers many benefits, it has certain limitations, including:

Dependence on Initial Estimates

TD learning heavily banks on initial estimates. It might resemble a game of darts where we're trying to hit the bullseye, but our first throw decides a lot. If the initial estimate is not even close, we might unknowingly be practicing our aim in the wrong direction.

Slow Convergence

Remember the childhood game where you blindfolded your friends and directed them towards a target?

Well, that's what convergence feels like in TD learning. Commonly, TD learning tends to converge slowly over iterations; it's like giving instructions to a blindfolded, wandering friend where you only make minuscule corrections with each step.

Overfitting

Overfitting is like that annoying dinner guest that most machine learning models can't seem to escape.

TD learning is also susceptible to overfitting, especially when the model complexity increases. It’s like memorizing responses to trivia questions – great for those exact questions, not so much for similar but slightly different ones.

Sensitivity to Learning Rates

TD Learning is like Goldilocks trying to find the perfect porridge – it’s sensitive to the learning rates. Too high or too low, and we run into problems like unstable learning and slow convergence.

Striking that perfect learning rate balance is a bit of a dance, and missing a step may lead to not-so-great results.
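To see the Goldilocks problem in miniature, the sketch below tracks a noisy signal with two different learning rates. The signal (alternating between 11 and 9 around a true mean of 10), the function name `track`, and the two rates are assumptions picked for illustration.

```python
def track(targets, alpha, start=0.0):
    """Repeatedly move an estimate toward each new target with step size alpha."""
    estimate = start
    history = []
    for target in targets:
        estimate += alpha * (target - estimate)
        history.append(estimate)
    return history


noisy_targets = [11.0, 9.0] * 25            # 50 noisy samples, true mean of 10

too_high = track(noisy_targets, alpha=0.9)
too_low = track(noisy_targets, alpha=0.01)

print("alpha=0.9  last two estimates:", too_high[-2:])
print("alpha=0.01 last estimate:", too_low[-1])
```

The high rate keeps chasing the latest sample and never settles, while the low rate is steady but is still far from 10 after 50 updates.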

Difficulty in Understanding

Last, but certainly not least, is the fact that TD Learning can be a bit of a tough nut to crack.

It requires an understanding of technical concepts like Markov Decision Processes and reward structures. It’s kind of like learning a new language with its own rules, grammar, and idioms.

Temporal Difference Error

The temporal difference (TD) error is a key concept in reinforcement learning that drives learning in temporal difference methods. It refers to the difference between two successive predictions made by the agent as it interacts with the environment.

Here's an example to illustrate the TD error:

Imagine a robot learning to play chess. It makes the following predictions:

- In state S1, the robot predicts making move M1 will give +2 reward

- It makes move M1, reaches state S2, and receives an actual reward of +5

- In S2, it predicts its reward is +10 for this state

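- So, assuming a discount factor of 1, the TD error is (+5 + +10) - +2 = +13

This TD error of +13 indicates the prediction for S1 was too low by 13 points: the reward received plus the value predicted for S2 adds up to more than the robot expected.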

The key properties of the TD error are:

- It is the difference between successive predictions by the agent.

- The agent uses the TD error to incrementally update its predictions to be more accurate.

- Minimizing the TD error over time leads to optimal predictions.

- TD error provides a computationally simple training signal for temporal difference learning.

In summary, the TD error is a key mechanism for an agent to learn predictions about rewards. By repeatedly minimizing the TD error, the agent becomes progressively better at making decisions to maximize cumulative future reward.

Difference Between Q-Learning and Temporal Difference Learning

Q-Learning is a specific algorithm within the category of Temporal Difference Learning. It aims to learn the Q-function, which represents the expected cumulative (discounted) reward for taking a specific action in a given state and acting optimally afterwards.

Temporal Difference Learning, on the other hand, encompasses a broader range of algorithms that can learn both the V-function and the Q-function.

Here’s a detailed comparison between the two.

Basic Framework

Temporal Difference Learning is a framework that uses the difference between estimated values of two successive states to update value estimates.

On the other hand, Q-learning is an algorithm that operates within this Temporal Difference framework – it specifically learns the quality of actions to decide which action to take.

Learning Method

When it comes to learning, Temporal Difference Learning learns from consecutive states in an environment to forecast future rewards.

Q-Learning, however, is a touch more specific. It uses updates in the action-value function to maximize expected rewards.

Policy

Another key point of divergence is their approach to policy. Temporal Difference Learning allows both on-policy learning like SARSA as well as off-policy learning like Q-Learning.

But Q-Learning itself is a type of off-policy learning that estimates the optimal policy, regardless of the policy followed by the agent.

Bias and Variance

In terms of bias and variance, Temporal Difference Learning tries to strike a balance between the two across its different algorithms.

Q-Learning, on the other hand, can be prone to overestimating future rewards due to its max operation, which introduces a bias into its action-value estimates.
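The effect of the max operation is easy to reproduce. In the sketch below every action is truly worth 0, but each estimate carries some random noise; the setup, the function name `average_max_of_noisy_estimates`, and the noise level are assumptions made for illustration.

```python
import random


def average_max_of_noisy_estimates(num_actions=5, trials=2000, noise=1.0, seed=0):
    """Average the max over noisy estimates of actions whose true value is 0."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        # Every action is truly worth 0; each estimate is just noise around 0.
        estimates = [rng.gauss(0.0, noise) for _ in range(num_actions)]
        total += max(estimates)
    return total / trials


print(average_max_of_noisy_estimates())
```

Even though no action is actually better than 0, the average of the maximum comes out clearly positive, because taking the max tends to pick whichever estimate happens to be too high.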

Use Case

Lastly, Temporal Difference Learning generally serves as a foundation for many learning algorithms like Q-Learning and SARSA, to mention a couple.

Q-Learning, specific in its design, is widely used when the agent needs to learn an optimal policy while exploring the environment.

Different Algorithms in Temporal Difference Learning

In this section, we'll cover the key algorithms used in temporal difference learning.

Sarsa Algorithm

The Sarsa algorithm works by estimating the value of the current state-action pair based on the reward and the estimate of the next state-action pair.

This allows it to continually update its estimates and adjust toward a better policy.
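A minimal sketch of a single tabular Sarsa update might look like this. The dictionary-based table, the function name `sarsa_update`, the state and action labels, and the default `alpha` and `gamma` values are all illustrative assumptions.

```python
from collections import defaultdict


def sarsa_update(q, state, action, reward, next_state, next_action,
                 alpha=0.1, gamma=0.9):
    """Update Q(state, action) toward reward + gamma * Q(next_state, next_action)."""
    # The target uses the action the agent will actually take next.
    target = reward + gamma * q[(next_state, next_action)]
    q[(state, action)] += alpha * (target - q[(state, action)])
    return q[(state, action)]


q = defaultdict(float)            # unseen state-action pairs start at 0
q[("s2", "right")] = 1.0          # hypothetical existing estimate
sarsa_update(q, "s1", "left", reward=0.5, next_state="s2", next_action="right")
print(q[("s1", "left")])
```

The name comes from the five pieces each update needs: state, action, reward, next state, next action.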

Q-Learning

Unlike Sarsa, Q-Learning directly estimates the value of the optimal policy, even if actions are taken according to a more exploratory or random policy.

Q-Learning works by updating the value of an action in a state using the highest estimated value available in the next state, regardless of which action the agent actually takes next.
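Here is a minimal sketch of one tabular Q-Learning update. The dictionary-based table, the function name `q_learning_update`, the labels, and the default `alpha` and `gamma` values are illustrative assumptions.

```python
from collections import defaultdict


def q_learning_update(q, state, action, reward, next_state, actions,
                      alpha=0.1, gamma=0.9):
    """Update Q(state, action) toward reward + gamma * max over next actions."""
    # The target uses the best-looking next action, whatever the agent does next.
    best_next = max(q[(next_state, a)] for a in actions)
    target = reward + gamma * best_next
    q[(state, action)] += alpha * (target - q[(state, action)])
    return q[(state, action)]


q = defaultdict(float)
q[("s2", "left")] = 0.2           # hypothetical existing estimates
q[("s2", "right")] = 1.0
q_learning_update(q, "s1", "left", reward=0.5, next_state="s2",
                  actions=["left", "right"])
print(q[("s1", "left")])
```

Even if the agent goes on to explore with "left" in `s2`, the update above still uses the larger "right" estimate.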

TD-Lambda

TD-Lambda joins the benefits of Monte Carlo and TD Learning, leveraging a parameter 𝜆 to balance the two.

It uses eligibility traces to keep a record of recently visited states, so that each new TD error also passes a fading share of credit back to them.
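The sketch below shows one common variant, tabular TD-Lambda with accumulating traces, run over a single made-up episode. The state names, the function name `td_lambda_episode`, and the parameter defaults are assumptions for illustration.

```python
def td_lambda_episode(values, transitions, alpha=0.1, gamma=0.9, lam=0.8):
    """Apply TD(lambda) with accumulating eligibility traces over one episode."""
    traces = {state: 0.0 for state in values}
    for state, reward, next_state in transitions:
        # States missing from the table (such as the terminal one) count as 0.
        td_error = reward + gamma * values.get(next_state, 0.0) - values[state]
        traces[state] += 1.0                    # mark the state just visited
        for s in values:
            values[s] += alpha * td_error * traces[s]
            traces[s] *= gamma * lam            # older visits fade away
    return values


values = {"A": 0.0, "B": 0.0, "C": 0.0}
episode = [("A", 0.0, "B"), ("B", 0.0, "C"), ("C", 1.0, "END")]
print(td_lambda_episode(values, episode))
```

With `lam=0` only the most recent state is updated, as in plain one-step TD, while values closer to 1 push credit further back along the path, closer to what Monte Carlo would do.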

Sarsa-Lambda

Sarsa-Lambda is an extension of the Sarsa algorithm, adding eligibility traces for a more effective 'look back' at previous state-action pairs.

This makes it more efficient by spreading each update back over recently visited state-action pairs, with older pairs receiving a smaller share.

Double Q-Learning

Double Q-learning addresses the overestimation bias of traditional Q-learning by maintaining two independent Q value estimates.

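On each step, the choice of which Q value to update is random: the estimate being updated picks the best next action, and the other estimate supplies that action's value, which gives a less biased approximation.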
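A single Double Q-learning update can be sketched as follows. The two dictionary-based tables, the function name `double_q_update`, the labels, and the parameter defaults are assumptions for illustration.

```python
import random
from collections import defaultdict


def double_q_update(q_a, q_b, state, action, reward, next_state, actions,
                    alpha=0.1, gamma=0.9, rng=random):
    """Update one of two Q tables, using the other to value the chosen next action."""
    # Flip a coin to decide which table learns on this step.
    if rng.random() < 0.5:
        update, evaluate = q_a, q_b
    else:
        update, evaluate = q_b, q_a
    # The table being updated chooses the action...
    best_action = max(actions, key=lambda a: update[(next_state, a)])
    # ...and the other table says what that action is worth.
    target = reward + gamma * evaluate[(next_state, best_action)]
    update[(state, action)] += alpha * (target - update[(state, action)])


q_a, q_b = defaultdict(float), defaultdict(float)
q_a[("s2", "right")] = 1.0        # hypothetical existing estimates
q_b[("s2", "right")] = 0.5
double_q_update(q_a, q_b, "s1", "left", reward=0.5, next_state="s2",
                actions=["left", "right"])
print(q_a[("s1", "left")], q_b[("s1", "left")])
```

Separating the choice of action from its valuation means an estimate that is too high in one table is less likely to be both selected and trusted.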

Frequently Asked Questions (FAQs)

What is Temporal Difference Learning?

Temporal Difference Learning is a technique in reinforcement learning that predicts future values by gradually adjusting predictions based on incoming information.

It combines elements of Monte Carlo and dynamic programming approaches.

How does Temporal Difference Learning work?

Temporal Difference Learning makes predictions more accurate over time by utilizing the difference between the predicted value and the actual value of a subsequent state. It gradually matches expectations with reality.

What are the parameters in Temporal Difference Learning?

The parameters in Temporal Difference Learning include the learning rate (α), which determines how strongly new information influences existing estimates; the discount rate (γ), which sets how much future rewards count; and the exploration vs. exploitation parameter (ε), which balances trying new actions against using known ones.

What are the applications of Temporal Difference Learning?

Temporal Difference Learning finds applications in neuroscience for understanding dopamine neurons, in studying conditions like schizophrenia, and in machine learning algorithms for reinforcement learning tasks.

What are the benefits of Temporal Difference Learning?

Temporal Difference Learning offers benefits such as online and offline learning, handling incomplete sequences, adapting to non-terminating environments, less variance and higher efficiency compared to Monte Carlo methods, and leveraging the Markov property for accurate predictions.