What is Syntax Analysis?

Syntax analysis, also known as parsing, is the process of analyzing a string of symbols in a programming language to determine its grammatical structure and ensure it conforms to the rules of that language.

In syntax analysis, the input string is broken down into its component parts including words, phrases, clauses, and sentences to uncover the hierarchy and relationships between them.

The analyzer checks whether the symbols and structures conform to the formal grammar rules defined for that language.

Key steps in syntax analysis typically include:

- Tokenizing the input into logical pieces such as identifiers, operators, and delimiters (often handled by a separate lexical analysis phase).

- Forming a parse tree to represent the syntactic structure in a hierarchical form.

- Checking for correct syntax, validating things like proper statement termination and balanced parentheses. Data type checks are usually left to the later semantic analysis phase.

- Detecting and reporting errors if syntax rules are violated.

The parser works its way through the input in a systematic manner, applying the syntax rules at each step to ensure formal language conventions are followed. Proper syntax is required for a program to be successfully compiled or interpreted.
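One of the checks mentioned above, balanced delimiters, can be sketched with a simple stack. This is a minimal illustration rather than how any particular compiler does it; the function name `check_balanced` and the error messages are assumptions made for this example.

```python
def check_balanced(source):
    """Return None if delimiters balance, else (index, message) for the first problem."""
    closers = {")": "(", "]": "[", "}": "{"}
    stack = []  # holds (opening character, index) pairs still waiting to be closed
    for index, char in enumerate(source):
        if char in "([{":
            stack.append((char, index))
        elif char in closers:
            # A closer must match the most recently opened delimiter.
            if not stack or stack[-1][0] != closers[char]:
                return (index, f"unexpected '{char}'")
            stack.pop()
    if stack:
        char, index = stack[-1]
        return (index, f"unclosed '{char}'")
    return None


print(check_balanced("f(a[1], {b})"))  # None
print(check_balanced("f(a]"))          # (3, "unexpected ']'")
```

A real parser performs this check implicitly while applying grammar rules, but the stack discipline is the same idea.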

Syntax analysis output is often fed into subsequent phases of the compiler like semantic analysis and code generation. It is an essential step in processing source code and deriving meaning from programming language input.

Why is Syntax Analysis Important in NLP?

One critical aspect of NLP is syntax analysis, but what makes it so important? Syntax analysis refers to the process of studying the arrangement of words in sentences to grasp their meaning.

It is akin to the role of grammar in language learning. Let’s shed light on its significance in the realm of NLP.

Syntax Analysis Deciphers Meaning

Syntax analysis, or parsing, helps decipher the meaning of sentences by breaking them down into their syntactical components, such as verbs, nouns, adjectives, etc.

This structure gives machines a basis for working out how the words in a sentence relate to one another, and from there the intent of the sentence.

Powers Advanced Language-Based Applications

Syntax analysis is crucial for advanced language-based applications, such as language translation, sentiment analysis, and information extraction.

These applications rely heavily on accurately recognizing the structure of sentences to operate effectively.

Facilitates Efficient Communication With Machines

By leveraging syntax analysis, NLP can make human-machine communication more intuitive and efficient.

This feature is especially important in voice-based AI systems like virtual assistants and chatbots.

Enables Understanding of Complex Language Structure

Syntax analysis is critical in understanding the structure of complex languages.

It supports sentence disambiguation, which is essential when one sentence can have multiple meanings based on its syntax.

Enhances Machine Learning of Language

Lastly, syntax analysis enhances machine learning in language models as it provides structure to the training data, which is essential for machines to learn the nuances of human language effectively.

In essence, syntax analysis acts as the bedrock of NLP. It plays a vital role in making human language understandable to machines, enabling them to interact with us in a more sophisticated, meaningful, and human-like way.

How Does Syntax Analysis Work?

In this section, we'll look at how syntax analysis, also known as parsing, functions as a vital part of the compilation process in computer programming.

Meaning of Syntax Analysis

Syntax analysis is a significant stage in a compiler's process. Its job is to examine the source code and ensure it adheres to the grammatical rules of the programming language, essentially checking for any syntax errors.

Role of Tokens

In the lexical analysis stage preceding syntax analysis, the source code is broken down into 'tokens,' the smallest meaningful units of a program, such as keywords, identifiers, and operators. Syntax analysis uses these tokens to create a parse tree.
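As a rough sketch of the tokenizing step, the following uses Python's standard `re` module with named groups. The token names (`NUMBER`, `IDENT`, and so on) and the tiny token set are assumptions chosen for illustration, not the lexical rules of any real language.

```python
import re

# Each pair is (token kind, regular expression). Order matters: earlier entries win.
TOKEN_SPEC = [
    ("NUMBER", r"\d+(?:\.\d+)?"),
    ("IDENT", r"[A-Za-z_]\w*"),
    ("OP", r"[+\-*/=]"),
    ("LPAREN", r"\("),
    ("RPAREN", r"\)"),
    ("SKIP", r"\s+"),      # whitespace is matched but discarded
    ("MISMATCH", r"."),    # anything else is a lexical error
]
TOKEN_RE = re.compile("|".join(f"(?P<{kind}>{pattern})" for kind, pattern in TOKEN_SPEC))


def tokenize(source):
    """Turn a source string into a list of (kind, text) tokens."""
    tokens = []
    for match in TOKEN_RE.finditer(source):
        kind = match.lastgroup
        if kind == "SKIP":
            continue
        if kind == "MISMATCH":
            raise SyntaxError(f"unexpected character {match.group()!r} at offset {match.start()}")
        tokens.append((kind, match.group()))
    return tokens


print(tokenize("x = 3"))  # [('IDENT', 'x'), ('OP', '='), ('NUMBER', '3')]
```

The parser then works on this token list instead of raw characters, which keeps the grammar rules free of whitespace and spelling details.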

Formation of Parse Trees

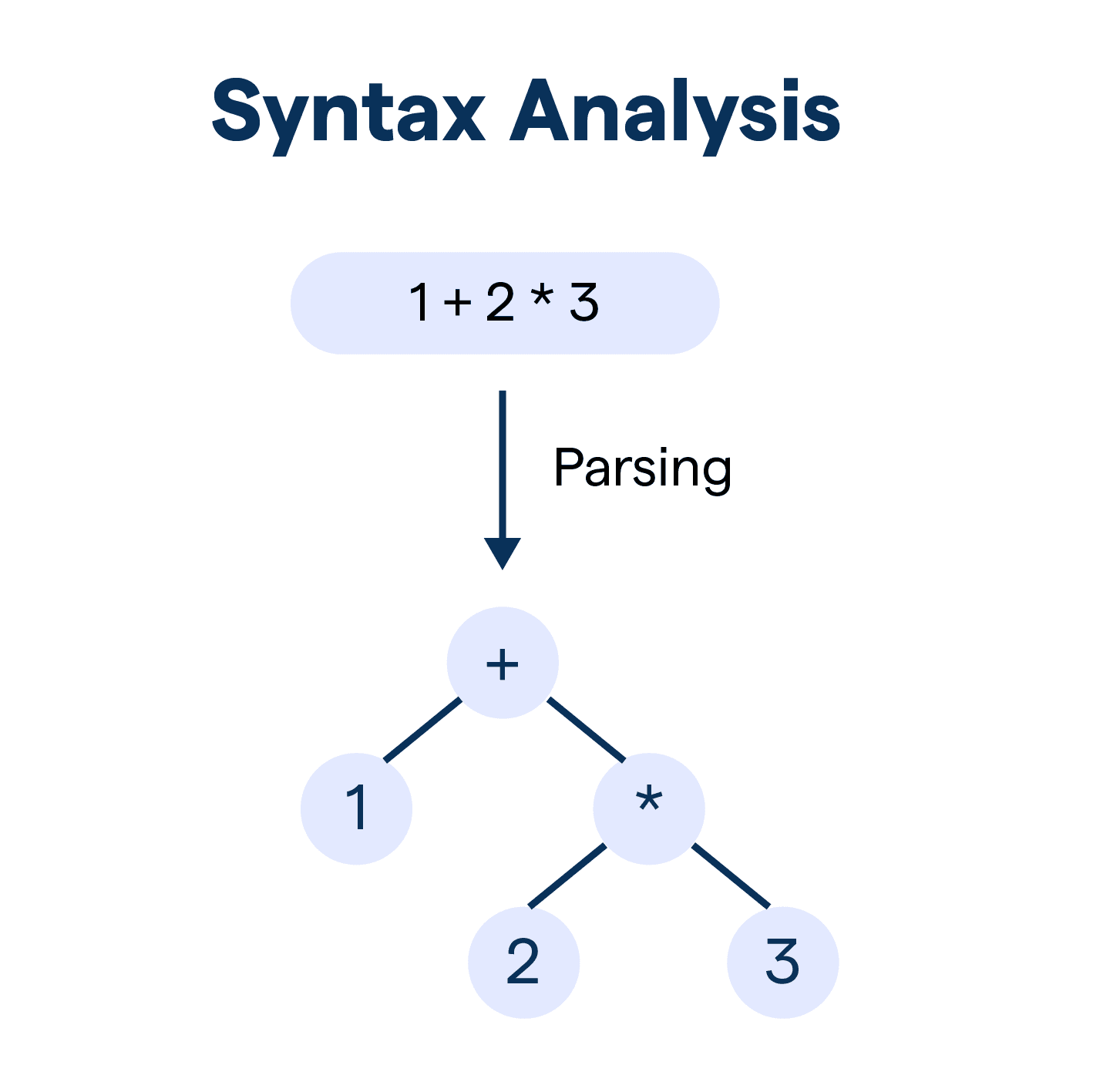

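The parse tree, sometimes loosely called a syntax tree, shows which grammar rules were applied to derive the program text. Compilers often simplify it into an abstract syntax tree that drops purely structural detail.

Each interior node corresponds to a grammar rule applied to a nonterminal, its children are the symbols on that rule's right-hand side, and the leaves are the tokens of the input.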
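To make the shape of a parse tree concrete, here is a hand-built tree for `2 + 3 * 4`, stored as nested tuples. The grammar (`expr`, `term`, `factor`) and the tuple layout are assumptions for this sketch; real compilers use their own node types.

```python
# Assumed grammar:
#   expr   -> expr '+' term | term
#   term   -> term '*' factor | factor
#   factor -> NUMBER
# A node is (label, child, child, ...); a leaf is a plain string token.
tree = (
    "expr",
    ("expr", ("term", ("factor", "2"))),
    "+",
    ("term",
     ("term", ("factor", "3")),
     "*",
     ("factor", "4")),
)


def leaves(node):
    """Collect the tokens at the leaves, left to right."""
    if isinstance(node, str):
        return [node]
    _label, *children = node
    result = []
    for child in children:
        result.extend(leaves(child))
    return result


def render(node, indent=0):
    """Return an indented, one-symbol-per-line view of the tree."""
    if isinstance(node, str):
        return " " * indent + repr(node)
    label, *children = node
    lines = [" " * indent + label]
    lines += [render(child, indent + 2) for child in children]
    return "\n".join(lines)


print(leaves(tree))  # ['2', '+', '3', '*', '4']
print(render(tree))
```

Reading the leaves from left to right gives back the original token sequence, while the nesting records that `3 * 4` is grouped before the addition.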

Types of Parsers

There are two main types of parsers in syntax analysis: top-down and bottom-up.

Top-down parsers start at the highest level of the parse tree and work their way down, while bottom-up parsers begin at the bottom and work upwards.

Error Reporting and Recovery

One of the critical functions of syntax analysis is to report errors to the user.

If a syntax error is detected, the compiler should not only point it out but also recover well enough to keep parsing, so that it can report further errors in the same pass.
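One common recovery strategy is panic mode: after an error, skip tokens until a synchronizing token such as `;` and resume from there. The sketch below assumes a made-up mini-language whose statements all have the form `name = number;`. The function name `check_statements` and the message wording are illustrative only.

```python
import re


def check_statements(source):
    """Check statements of the assumed form `name = number;` and collect every error."""
    tokens = re.findall(r"[A-Za-z_]\w*|\d+|\S", source)
    # The expected shape of one statement: (description, predicate) per position.
    shape = [
        ("a name", str.isidentifier),
        ("'='", lambda token: token == "="),
        ("a number", str.isdigit),
        ("';'", lambda token: token == ";"),
    ]
    errors, pos, statement = [], 0, 1
    while pos < len(tokens):
        for description, matches in shape:
            token = tokens[pos] if pos < len(tokens) else None
            if token is None or not matches(token):
                found = "end of input" if token is None else repr(token)
                errors.append(f"statement {statement}: expected {description}, found {found}")
                # Panic-mode recovery: skip to just past the next ';' and carry on.
                while pos < len(tokens) and tokens[pos] != ";":
                    pos += 1
                pos += 1
                break
            pos += 1
        statement += 1
    return errors


for message in check_statements("x = 1; y 2; z = ; w = 4;"):
    print(message)
# statement 2: expected '=', found '2'
# statement 3: expected a number, found ';'
```

Because the checker resynchronizes at each `;`, both faulty statements are reported in one run instead of stopping at the first.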

Production of Intermediate Code

Once the source code has been parsed without syntax errors, and typically after semantic checks as well, the compiler produces intermediate code.

This code is a more abstract representation of the original source code, taking us one step closer to machine language.

Understanding the workings of syntax analysis can provide valuable insight into the compilation process, aiding us in writing syntax-error-free code and understanding the root of syntax errors when they occur.

Types of Syntax Analysis Models

In this section, we'll look at the various models used in syntax analysis.

Parsing or syntax analysis models define the strategy used to check input against a grammar, and they are commonly grouped into two main categories: top-down and bottom-up parsing.

Top-Down Parsing

Top-down parsing starts from the root node, also called the start symbol, and tries to derive the input string by transforming the start symbol.

There are several variants that fall under this type:

- Recursive Descent Parsing: This is a form of top-down parsing where each grammar rule is translated into a function. These functions mutually call each other, hence the "recursive" name.

- Predictive Parsing: This parsing model is similar to the recursive descent model but eliminates backtracking. Table-driven predictive parsers also replace the recursive function calls with an explicit stack.

In turn, this parsing model requires the grammar to be a predictive grammar such as LL(1), which means the rule to apply can be determined by looking at only the next input symbol.
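The top-down approach can be sketched as a small recursive descent parser for arithmetic expressions. The grammar below is an assumption chosen for the example. It is written without left recursion so that one token of lookahead is enough to pick a rule, and the parser returns a simplified (abstract) tree of nested tuples rather than a full parse tree.

```python
import re


class Parser:
    """Predictive recursive descent parser for an assumed expression grammar:

    expr   -> term (('+' | '-') term)*
    term   -> factor (('*' | '/') factor)*
    factor -> NUMBER | '(' expr ')'
    """

    def __init__(self, text):
        # Simplification: characters outside this pattern are silently ignored.
        self.tokens = re.findall(r"\d+|[-+*/()]", text)
        self.pos = 0

    def peek(self):
        return self.tokens[self.pos] if self.pos < len(self.tokens) else None

    def advance(self):
        token = self.peek()
        self.pos += 1
        return token

    def parse(self):
        tree = self.expr()
        if self.peek() is not None:
            raise SyntaxError(f"unexpected token {self.peek()!r}")
        return tree

    def expr(self):
        node = self.term()
        while self.peek() in ("+", "-"):  # one-token lookahead picks the rule
            operator = self.advance()
            node = (operator, node, self.term())
        return node

    def term(self):
        node = self.factor()
        while self.peek() in ("*", "/"):
            operator = self.advance()
            node = (operator, node, self.factor())
        return node

    def factor(self):
        token = self.advance()
        if token is None:
            raise SyntaxError("unexpected end of input")
        if token.isdigit():
            return int(token)
        if token == "(":
            node = self.expr()
            if self.advance() != ")":
                raise SyntaxError("expected ')'")
            return node
        raise SyntaxError(f"unexpected token {token!r}")


print(Parser("2 + 3 * 4").parse())    # ('+', 2, ('*', 3, 4))
print(Parser("(2 + 3) * 4").parse())  # ('*', ('+', 2, 3), 4)
```

Each grammar rule maps to one method, and operator precedence falls out of which method calls which.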

Bottom-Up Parsing

Bottom-Up parsing starts from the input string and attempts to construct the parse tree to eventually reach the start symbol. Here are some types of bottom-up parsing:

- Operator-Precedence Parsing: A specific, less general type of bottom-up parsing in which precedence relations between operators (the grammar's terminal symbols) decide when to shift and when to reduce.

- Shift-Reduce Parsing: In this model, the parse tree is built from the leaves up to the root by repeatedly shifting input symbols onto a stack and applying reductions, which are grammar productions applied in reverse.

- LR Parsing: LR parsing is a table-driven family of shift-reduce parsers. The name stands for Left-to-right scan of the input and Rightmost derivation, which the parser constructs in reverse.

This parsing model can handle almost all programming language constructs and is used in many modern compilers.
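The shift and reduce actions can be illustrated with a deliberately naive sketch. It assumes a tiny grammar and always reduces when the top of the stack matches a rule, trying longer rules first. That greedy policy happens to work for this grammar only; a real LR parser consults a precomputed table and lookahead to choose between shifting and reducing.

```python
# Assumed toy grammar, listed so that longer right-hand sides are tried first:
#   E -> E + T | T
#   T -> ( E ) | id
GRAMMAR = [
    ("E", ("E", "+", "T")),
    ("T", ("(", "E", ")")),
    ("T", ("id",)),
    ("E", ("T",)),
]


def shift_reduce(tokens):
    """Return (accepted, trace) for a list of tokens such as ['id', '+', 'id']."""
    stack, trace = [], []
    remaining = list(tokens)
    while True:
        for head, body in GRAMMAR:
            # Reduce: the top of the stack matches a rule's right-hand side.
            if tuple(stack[-len(body):]) == body:
                del stack[-len(body):]
                stack.append(head)
                trace.append(f"reduce {head} -> {' '.join(body)}")
                break
        else:
            # No reduction applies: shift the next token, or stop at end of input.
            if not remaining:
                break
            stack.append(remaining.pop(0))
            trace.append(f"shift {stack[-1]}")
    return stack == ["E"], trace


accepted, trace = shift_reduce(["id", "+", "id"])
print(accepted)  # True
for step in trace:
    print(step)
```

The trace lists the reductions in the order a bottom-up parser discovers them, which is a rightmost derivation read backwards.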

Each of these parsing models serves a unique purpose in the process of syntax analysis. The choice of which parsing model to use often depends on the complexity of the grammar and the specific requirements of the programming language.

Common Terms and Concepts in Syntax Analysis

To understand syntax analysis in depth, it's essential to be familiar with the following key terms and concepts:

Parse Tree

A parse tree is a graphical representation of the syntactic structure of a sentence. It shows how words are combined into phrases and how phrases are combined into larger constituents.

Dependency Tree

A dependency tree represents the grammatical dependencies between words in a sentence. It illustrates how the words are connected and the syntactic relationship between them.
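A dependency tree is often stored as one head index per word. The sketch below uses a hand-annotated example sentence. The annotation and the helper names (`is_tree`, `dependents`) are assumptions for illustration, with relation labels borrowed from the Universal Dependencies naming scheme.

```python
words = ["The", "cat", "chased", "a", "mouse"]
# heads[i] is the 1-based position of the head of word i + 1; 0 marks the root.
heads = [2, 3, 0, 5, 3]
labels = ["det", "nsubj", "root", "det", "obj"]


def is_tree(heads):
    """Check for exactly one root and no cycles when following head links."""
    if heads.count(0) != 1:
        return False
    for start in range(1, len(heads) + 1):
        seen = set()
        node = start
        while node != 0:
            if node in seen:
                return False  # we came back to a word already visited
            seen.add(node)
            node = heads[node - 1]
    return True


def dependents(index, heads):
    """Return the 1-based positions of the words whose head is `index`."""
    return [position + 1 for position, head in enumerate(heads) if head == index]


print(is_tree(heads))  # True
for position in dependents(3, heads):
    print(words[position - 1], labels[position - 1])
# cat nsubj
# mouse obj
```

Unlike a constituency parse tree, there are no phrase nodes here: every node is a word, and every edge is a labeled relation between two words.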

Part-of-Speech (POS) Tagging

Part-of-speech tagging involves assigning a specific tag to each word in a sentence to classify its grammatical category, such as noun, verb, adjective, etc.

Head Word

The head word in a phrase is the primary word that governs the grammatical and semantic properties of the entire phrase.

Phrase Structure Rules

Phrase structure rules define the permissible structures and combinations of words in a language. They specify how words can be grouped together to form phrases.

Constituent

A constituent is a group of words that functions as a single unit within a sentence. It can be a phrase or a complete sentence.

Phrase Structure Grammar

Phrase structure grammar is a formal system that describes the hierarchical structure of a language by specifying the rules for combining words into phrases and phrases into larger constituents.

Context-Free Grammar (CFG)

Context-free grammar is a type of phrase structure grammar that defines the syntactic structure of a language using a set of production rules, each of which rewrites a single nonterminal symbol regardless of the context around it.

Lexical Ambiguity

Lexical ambiguity refers to the situation when a word has multiple possible meanings. Resolving lexical ambiguity is important in syntax analysis to accurately interpret the intended meaning of a sentence.

Syntactic Ambiguity

Syntactic ambiguity occurs when a sentence has multiple possible interpretations due to the ambiguous arrangement of words and phrases. Syntax analysis helps in resolving syntactic ambiguity and identifying the intended structure of the sentence.
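Syntactic ambiguity can be made concrete by counting how many parse trees a grammar allows for a sentence. The sketch below uses a CKY-style chart over a small context-free grammar in Chomsky normal form. The grammar, lexicon, and function name `count_parses` are assumptions invented for this example.

```python
from collections import defaultdict

# Assumed toy grammar: every rule is head -> left right.
BINARY_RULES = [
    ("S", "NP", "VP"),
    ("VP", "V", "NP"),
    ("VP", "VP", "PP"),   # the PP modifies the verb phrase
    ("NP", "Det", "N"),
    ("NP", "NP", "PP"),   # the PP modifies the noun phrase
    ("PP", "P", "NP"),
]
LEXICON = {
    "I": ["NP"], "saw": ["V"], "the": ["Det"], "a": ["Det"],
    "man": ["N"], "telescope": ["N"], "with": ["P"],
}


def count_parses(words, start="S"):
    """Count the distinct parse trees for `words` under the toy grammar."""
    n = len(words)
    chart = defaultdict(int)  # (i, j, symbol) -> number of trees over words[i:j]
    for i, word in enumerate(words):
        for symbol in LEXICON.get(word, ()):
            chart[i, i + 1, symbol] += 1
    for width in range(2, n + 1):          # span length
        for i in range(n - width + 1):     # span start
            j = i + width
            for k in range(i + 1, j):      # split point
                for head, left, right in BINARY_RULES:
                    chart[i, j, head] += chart[i, k, left] * chart[k, j, right]
    return chart[0, n, start]


print(count_parses("I saw the man".split()))                       # 1
print(count_parses("I saw the man with a telescope".split()))      # 2
```

The two parses of the second sentence correspond to the two readings: the seeing was done with a telescope, or the man had the telescope. The three nested loops over span, start, and split point are also why chart parsing takes time cubic in sentence length.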

Syntax Analysis Evaluation Metrics

To assess the performance of syntax analysis models, several evaluation metrics are commonly used:

Accuracy

Accuracy measures the percentage of correctly parsed sentences out of the total number of sentences evaluated. It indicates how well the model is able to predict the correct syntactic structure.

Recall

Recall measures the proportion of the relevant elements in the reference (gold-standard) analysis, such as phrases or dependency links, that the syntax analysis model correctly identifies.

Precision

Precision measures the proportion of correctly identified elements to the total number of elements identified by the model. It indicates how precise the model is in capturing the correct syntactic structure.

F1 Score

The F1 score is the harmonic mean of precision and recall, F1 = 2PR / (P + R). It provides a balanced evaluation metric by considering both the correctness and completeness of the syntax analysis.
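As a worked example, precision, recall, and F1 can be computed over labeled spans, where each span is a (label, start, end) triple. The gold and predicted span sets below are hypothetical data made up for this sketch, and `bracket_scores` is an illustrative name.

```python
def bracket_scores(gold, predicted):
    """Return (precision, recall, f1) for two collections of (label, start, end) spans."""
    gold, predicted = set(gold), set(predicted)
    correct = len(gold & predicted)
    precision = correct / len(predicted) if predicted else 0.0
    recall = correct / len(gold) if gold else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical reference analysis and model output for a seven-word sentence.
gold = {("S", 0, 7), ("VP", 1, 7), ("VP", 1, 4), ("NP", 2, 4), ("PP", 4, 7), ("NP", 5, 7)}
predicted = {("S", 0, 7), ("VP", 1, 7), ("NP", 2, 7), ("PP", 4, 7)}

precision, recall, f1 = bracket_scores(gold, predicted)
print(f"precision={precision:.2f} recall={recall:.2f} f1={f1:.2f}")
# precision=0.75 recall=0.50 f1=0.60
```

Here three of the four predicted spans are correct (precision 0.75), but only three of the six gold spans were found (recall 0.50), and F1 sits between the two.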

Applications of Syntax Analysis in NLP

In this section, we'll discuss the diverse applications of Syntax Analysis, a crucial component of Natural Language Processing (NLP).

Translation Services

Syntax Analysis is key to transforming phrases from one language to another while retaining their intended meanings, thus enhancing the quality of automatic translations.

Speech Recognition

Navigating speech variations requires Syntax Analysis to correctly interpret and transcribe spoken language, greatly improving speech-to-text services.

Search Engines

Search engines benefit from Syntax Analysis to understand search queries and provide accurate, relevant results, bringing refinement to user search experience.

Text Summarization

Syntax Analysis aids in extracting key information from large volumes of text, assisting in creating concise, coherent summaries.

Sentiment Analysis

Using Syntax Analysis in sentiment analysis helps systems grasp context, sarcasm, and nuance in text, which can improve the accuracy of sentiment predictions.

Syntax Analysis thus plays a pivotal role in several NLP domains where understanding language structure and semantics is key.

Challenges in Syntax Analysis

In this section, we'll look at some of the difficulties encountered in Syntax Analysis and at possible future directions for the field.

Handling Ambiguity

Sometimes, sentences carry multiple interpretations, posing a serious challenge to Syntax Analysis. Future research is required to address this ambiguity in NLP tasks.

Computational Complexity

Parsing often entails high computational costs. Chart-based algorithms such as CKY, for example, take time cubic in the length of the sentence. Optimized algorithms are needed to reduce resource consumption.

Language Diversity

Syntax differs widely across languages, implying the need for versatile models. Future efforts could focus on cross-lingual Syntax Analysis systems.

Lack of Labeled Data

Data annotation is resource-intensive, which impedes the training of supervised models. Semi-supervised and unsupervised learning methods are promising alternatives.

Integrating Syntax with Semantics

There's a need for models merging syntactic structure with semantic interpretation to capture language nuances better. The future of Syntax Analysis could see significant developments in this direction.

Frequently Asked Questions (FAQs)

What is the difference between dependency parsing and constituency parsing?

Dependency parsing focuses on the relationships between words in a sentence, while constituency parsing aims to identify the structure and constituents of a sentence.

How is part-of-speech tagging related to syntax analysis?

Part-of-speech tagging assigns grammatical tags (e.g., noun, verb) to words in a sentence, which is an important step in syntax analysis as it provides information about the role each word plays in the sentence's structure.

What are parse trees and dependency trees?

Parse trees are graphical representations of the hierarchical structure of a sentence, showing how words form phrases and phrases combine into larger constituents. Dependency trees illustrate the grammatical relationships between words.

What are some evaluation metrics used in syntax analysis?

Common evaluation metrics include accuracy, recall, precision, and F1 score. These metrics measure the model's ability to accurately predict the syntactic structure and identify relevant elements in a sentence.

How can syntax analysis improve machine translation?

Syntax analysis helps align the sentence structures between different languages, enabling more accurate and context-aware translation. It ensures that the translated sentences maintain the correct syntactic relationships and coherence.