What is Lazy Learning?

Lazy learning is an approach to machine learning where the algorithms postpone the processing of examples until it is necessary.

It is also known as instance-based learning, as it generalizes training data by matching new instances to the instances stored in the training data.

Lazy learning algorithms do not construct an explicit target function during training. Instead, they defer generalization until a prediction is requested. Because there is no fitted model to retrain, lazy learners can reflect changes in the dataset as soon as the stored instances change.

When is Lazy Learning Used?

Lazy classifiers are particularly useful when training cost is the main concern: they do almost no work up front and process instances only when a query arrives. The trade-off is that this work moves to consultation (prediction) time. Lazy learning can also be attractive for large datasets that are updated often or queried rarely, as it avoids the expensive upfront computation required by eager learning algorithms.

Why is Lazy Learning Important?

Lazy learning, also known as instance-based learning or memory-based learning, is an important machine learning approach with several advantages over other methods. Here are four key reasons why lazy learning is important:

Flexibility and Adaptability

Lazy learning algorithms make predictions based on the similarities between new examples and instances in the training dataset. Because each prediction depends only on nearby instances, lazy learning can approximate complex and non-linear relationships without assuming a single global functional form.

As a result, lazy learning is often effective for problems with changing or dynamic environments.

Efficiency and Scalability

Lazy learning avoids the need for costly training or model building phases. Instead, it focuses on storing and indexing the training dataset so that nearest neighbors can be retrieved efficiently when making predictions. This keeps training cheap even for large datasets. Distance-based retrieval tends to become less reliable as the number of features grows, however, so high-dimensional data may still benefit from preprocessing.
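As a rough illustration of what "indexing" can mean here, the sketch below assumes one-dimensional numeric features and uses a sorted list with binary search. The class name `SortedIndex1D` is hypothetical; multi-dimensional data typically calls for structures such as KD-trees or ball trees instead.

```python
import bisect

class SortedIndex1D:
    """Hypothetical 1-D index: sort once, then find the nearest value by binary search."""

    def __init__(self, values):
        self.values = sorted(values)
        if not self.values:
            raise ValueError("the index needs at least one value")

    def nearest(self, query):
        pos = bisect.bisect_left(self.values, query)
        # Only the values on either side of the insertion point can be nearest.
        candidates = self.values[max(pos - 1, 0):pos + 1]
        return min(candidates, key=lambda v: abs(v - query))

index = SortedIndex1D([7.0, 1.0, 4.0, 10.0])
print(index.nearest(5.0))  # 4.0
```

Building the index is a one-time sort, and each lookup then inspects at most two candidates instead of every stored value.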

Incremental Learning

Lazy learning allows for easy integration of new training examples without rebuilding the model from scratch. This incremental learning capability makes it suitable for scenarios where data arrives gradually or continuously over time. Unlike many eager learning approaches, which must be retrained to absorb new data, lazy learning algorithms can incorporate new information without discarding previously stored knowledge.

Interpretability and Explainability

Lazy learning algorithms can be more transparent than their eager counterparts. Because each prediction is based on known instances from the training data, the neighbors that produced it can be shown as supporting evidence, which makes the reasoning behind a particular prediction easier to understand. This interpretability makes lazy learning more desirable in domains where explainability is crucial, such as healthcare or finance.

How Does Lazy Learning Work?

Lazy learning works by using the training dataset directly when making predictions. The process consists of the following steps:

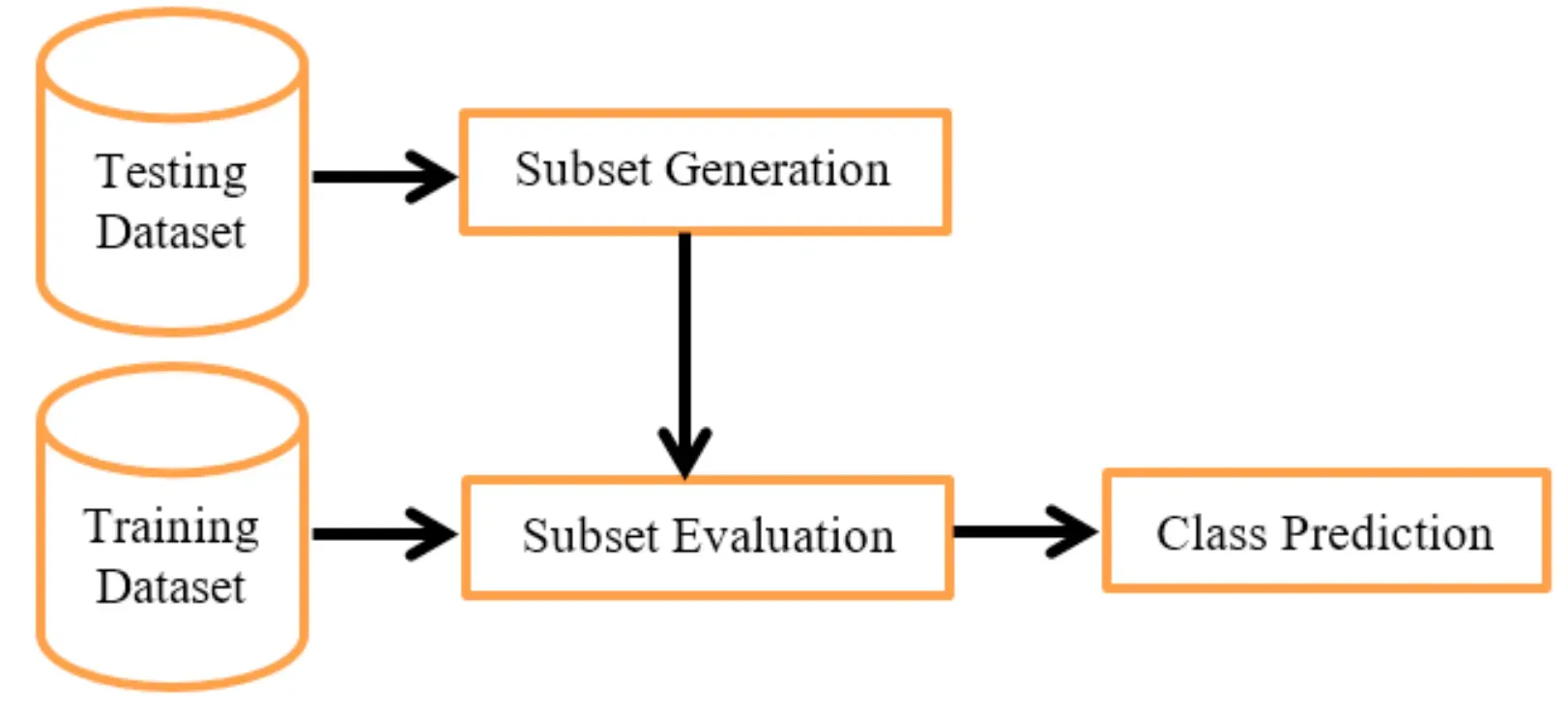

Instance Storage

During the training phase, the lazy learning algorithm stores the entire training dataset in memory without performing any explicit model building or parameter estimation. The stored instances are then retrieved as needed during the prediction phase.
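A minimal sketch of this step might look like the following. The class name `LazyStore` and its `fit` method are hypothetical and chosen only for illustration; the point is that "training" amounts to copying the data.

```python
class LazyStore:
    """Hypothetical minimal lazy learner: 'training' only memorizes the data."""

    def __init__(self):
        self.instances = []  # feature vectors
        self.targets = []    # labels or numeric targets

    def fit(self, instances, targets):
        # No parameters are estimated; the examples are copied and kept as-is.
        if len(instances) != len(targets):
            raise ValueError("instances and targets must have the same length")
        self.instances = [tuple(x) for x in instances]
        self.targets = list(targets)
        return self


store = LazyStore().fit([(1.0, 2.0), (3.0, 4.0)], ["a", "b"])
print(len(store.instances))  # number of stored examples
```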

Similarity Measurement

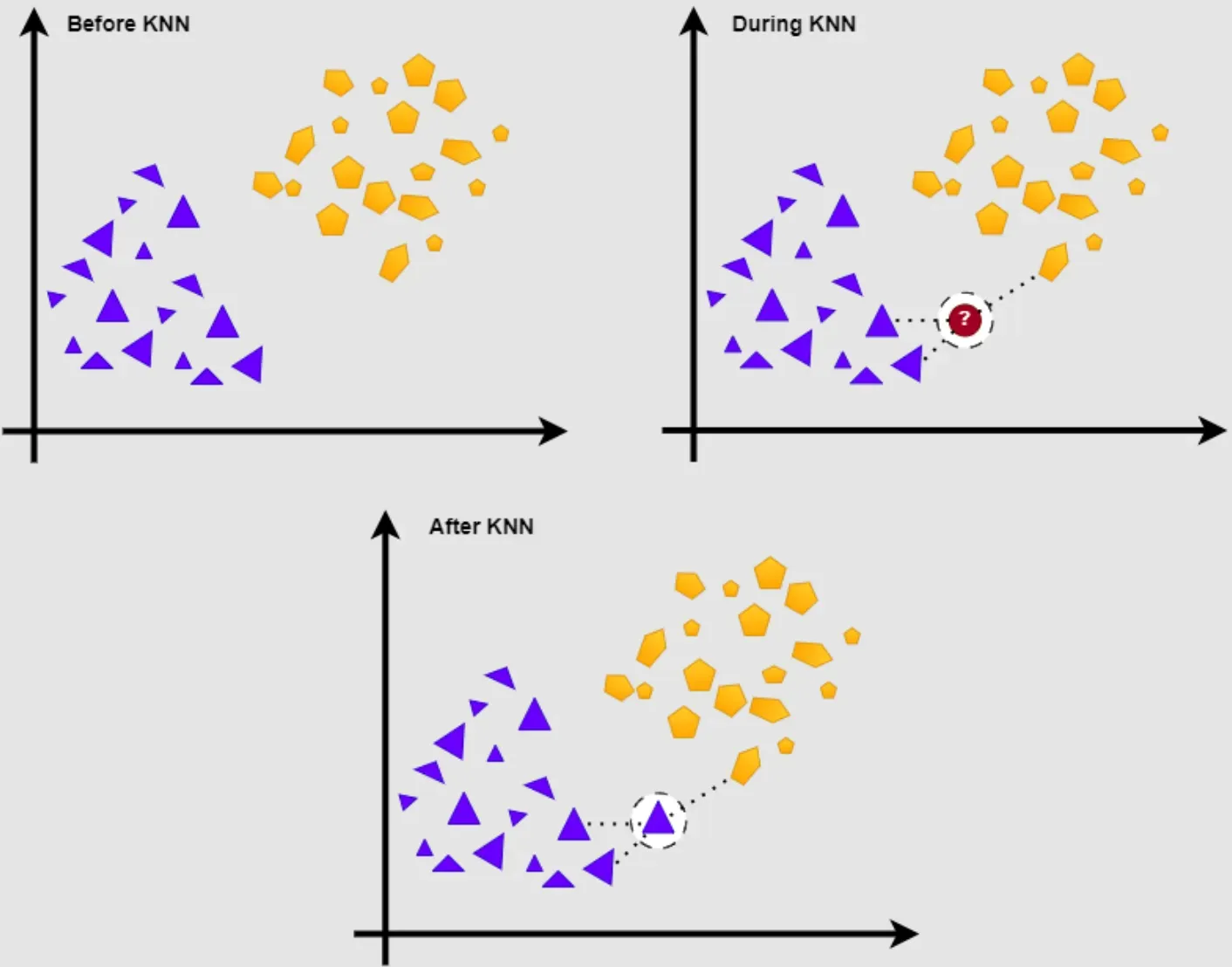

When a new instance or query is provided, lazy learning calculates the similarity between the query and each instance in the training dataset. The choice of similarity measure depends on the specific algorithm and can be based on metrics such as Euclidean distance or cosine similarity.

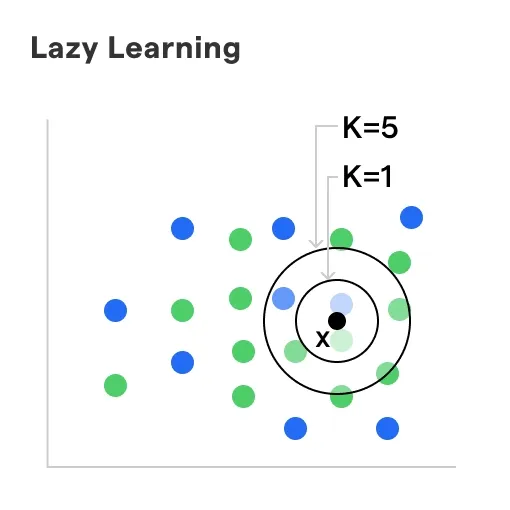

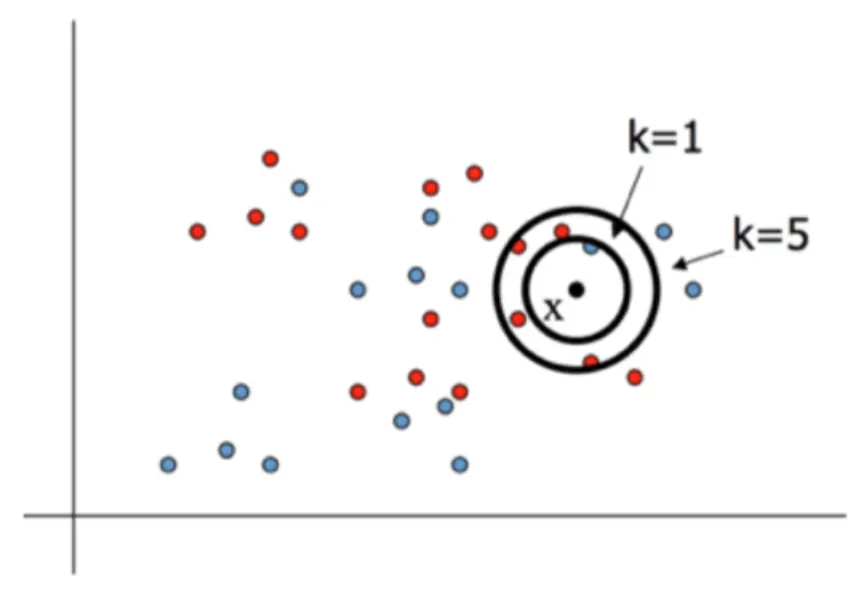
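The two measures mentioned above can be sketched in plain Python as follows. The function names are illustrative rather than taken from any particular library. Note that Euclidean distance is a dissimilarity (smaller is closer), while cosine similarity is larger for more similar vectors.

```python
import math

def euclidean_distance(a, b):
    # Straight-line distance; smaller means more similar.
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def cosine_similarity(a, b):
    # Cosine of the angle between the vectors; larger means more similar.
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)

print(euclidean_distance((0, 0), (3, 4)))   # 5.0
print(cosine_similarity((1, 0), (0, 1)))    # 0.0
```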

Neighbor Selection

The lazy learning algorithm identifies the k nearest neighbors to the query based on the calculated similarity measure. The number of neighbors (k) is a user-defined parameter that determines the level of local generalization. A larger k draws on a wider neighborhood, which smooths the prediction but can blur local detail, while a smaller k follows the closest instances more tightly.
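Neighbor selection can be sketched as below, assuming Euclidean distance and a brute-force scan over all stored instances. The helper name `k_nearest` is hypothetical.

```python
import heapq
import math

def k_nearest(query, instances, k):
    """Return the indices of the k stored instances closest to the query."""
    if not 1 <= k <= len(instances):
        raise ValueError("k must be between 1 and the number of instances")
    distances = [(math.dist(query, x), i) for i, x in enumerate(instances)]
    # nsmallest avoids fully sorting the list when k is small.
    return [i for _, i in heapq.nsmallest(k, distances)]

points = [(0.0, 0.0), (1.0, 1.0), (5.0, 5.0), (6.0, 5.0)]
print(k_nearest((0.9, 0.8), points, k=2))  # [1, 0]
```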

Prediction

Finally, the lazy learning algorithm combines the target values of the k nearest neighbors to make a prediction for the query. This prediction can be obtained through various methods, such as majority voting for classification problems or averaging for regression problems.
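The two combination rules named above could be written as follows. This is a simplified sketch with illustrative function names, and it takes the neighbors' targets as already selected.

```python
from collections import Counter
from statistics import mean

def predict_class(neighbor_labels):
    # Majority vote; ties go to the label that appears first among the neighbors.
    return Counter(neighbor_labels).most_common(1)[0][0]

def predict_value(neighbor_targets):
    # Regression: plain average of the neighbors' numeric targets.
    return mean(neighbor_targets)

print(predict_class(["spam", "ham", "spam"]))  # spam
print(predict_value([2.0, 4.0, 6.0]))          # 4.0
```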

Lazy Updating

Unlike many eager learning approaches, lazy learning allows for incremental learning. This means that the algorithm can easily incorporate new instances without rebuilding anything. The new instances are stored alongside the existing instances, and the similarity calculation and neighbor selection steps automatically take them into account at the next prediction.
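The effect can be seen in a toy 1-nearest-neighbor example. The data and the helper `predict_1nn` are made up for illustration; the update itself is nothing more than an append.

```python
import math

instances = [(0.0,), (10.0,)]
labels = ["low", "high"]

def predict_1nn(query):
    # Every prediction scans whatever is stored at that moment.
    nearest = min(range(len(instances)), key=lambda i: math.dist(query, instances[i]))
    return labels[nearest]

before = predict_1nn((4.0,))   # nearest stored point is 0.0

# "Updating" is just an append; nothing is refit.
instances.append((5.0,))
labels.append("mid")

after = predict_1nn((4.0,))    # nearest stored point is now 5.0
print(before, after)  # low mid
```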

Examples of Lazy Learning

K-Nearest Neighbors (K-NN), local regression, and lazy Bayesian rules are examples of lazy (instance-based) learning algorithms. These algorithms use the stored training instances to make predictions or classifications based on their similarity to new instances.
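To give a flavor of local regression, the sketch below computes a Gaussian-kernel weighted average around the query, which is the simplest (locally constant) form of the idea. The function name, the `bandwidth` parameter, and the data are assumptions made for illustration. Full local regression would fit a small weighted line or polynomial instead of an average.

```python
import math

def local_average(query_x, xs, ys, bandwidth=1.0):
    """Gaussian-kernel weighted average of ys, centered on query_x."""
    weights = [math.exp(-((x - query_x) ** 2) / (2 * bandwidth ** 2)) for x in xs]
    total = sum(weights)
    if total == 0.0:
        raise ValueError("all weights underflowed; try a larger bandwidth")
    return sum(w * y for w, y in zip(weights, ys)) / total

xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [0.0, 1.0, 4.0, 9.0, 16.0]   # y = x^2, stored as raw examples
print(local_average(2.0, xs, ys, bandwidth=0.5))
```

Nothing is fitted ahead of time: the weights are recomputed for every query, so nearby examples dominate each individual prediction.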

Advantages of Lazy Learning Algorithms

Incremental Learning

Lazy learning algorithms can easily integrate new training examples into the existing model without the need to rebuild the model from scratch. This incremental learning capability makes it suitable for scenarios where the data arrives gradually or continuously over time.

Bias-Variance Trade-off

Lazy learning has a different bias-variance trade-off than eager learning algorithms. With a small k, a lazy learner such as k-NN has low bias and high variance, which means it tends to overfit the training data. Increasing k reduces this overfitting by aggregating over more neighbors, at the cost of higher bias.
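The toy example below, using made-up one-dimensional data with one deliberately mislabeled point, hints at how k changes the behavior. It is an illustration under these assumptions, not a general result.

```python
import math
from collections import Counter

# 1-D toy data: one mislabeled "B" sits inside a cluster of "A" points.
train = [((1.0,), "A"), ((1.2,), "A"), ((1.4,), "B"), ((1.6,), "A"), ((1.8,), "A"),
         ((5.0,), "B"), ((5.2,), "B")]

def knn_predict(query, k):
    ranked = sorted(train, key=lambda pair: math.dist(query, pair[0]))
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]

query = (1.45,)
print(knn_predict(query, k=1))  # B: follows the single noisy neighbor
print(knn_predict(query, k=5))  # A: the surrounding cluster outvotes it
```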

Robustness to Outliers and Noise

Lazy learning algorithms can be fairly robust to outliers and label noise in the training data, provided that predictions aggregate several neighbors. This is because they rely on the local neighborhood of instances to make predictions, rather than fitting a global model. An outlier or noisy instance only affects queries that fall near it, and even there it can be outvoted by its neighbors.

Feature Relevance Discovery

Lazy learning algorithms can help in discovering the relevance of different features in the dataset. By analyzing the neighbors and their contributions to the prediction, it is possible to determine which features are more informative for the prediction task. This feature relevance discovery can be valuable in feature selection and interpretability of the model.

Cost-Effective Learning

Lazy learning algorithms can be more cost-effective in terms of training time. Training a lazy learning model is faster than training eager learning models, which need to build and optimize complex models. The price is storage, since the training instances themselves must be kept.

Additionally, lazy learning allows for efficient use of computational resources by only calculating predictions when needed.

Disadvantages of Lazy Learning Algorithms

While lazy learning algorithms offer several advantages, they also have some disadvantages. Here are five key disadvantages of lazy learning algorithms:

Computational Cost

Lazy learning algorithms can be computationally expensive during the prediction phase. This is because they require calculating the similarity between the query instance and all training instances. As the dataset size increases, the computational cost grows significantly, impacting the runtime performance of the algorithm.
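A brute-force search makes this cost visible. The sketch below uses synthetic data and a hypothetical helper name, and counts the distance evaluations needed for a single query as the dataset grows.

```python
import math

def brute_force_nearest(query, instances):
    """Return (index of nearest instance, number of distance evaluations)."""
    evaluations = 0
    best_index, best_distance = None, math.inf
    for i, x in enumerate(instances):
        d = math.dist(query, x)
        evaluations += 1
        if d < best_distance:
            best_index, best_distance = i, d
    return best_index, evaluations

for n in (10, 100, 1000):
    data = [(float(i), float(i)) for i in range(n)]
    _, evaluations = brute_force_nearest((0.5, 0.5), data)
    print(n, evaluations)  # evaluations equals n for every query
```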

Storage Requirements

Lazy learning algorithms need to store the entire training dataset in memory, which can be challenging for large datasets with high-dimensional features. The memory requirements can limit the scalability of lazy learning algorithms when dealing with big data scenarios or constrained computing environments.

Sensitivity to Noise and Irrelevant Features

Lazy learning algorithms can be sensitive to noisy or irrelevant features in the dataset. Since the prediction is based on the neighbors' information, noisy or irrelevant features can lead to incorrect predictions or dilute the contribution of informative features. Therefore, preprocessing and feature selection techniques are crucial to mitigate this sensitivity.
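A related effect shows up when features sit on very different scales. In the sketch below, the columns (age and income) and all values are hypothetical. For brevity the scaling ranges are computed over all three rows, including the query; in practice they would normally come from the training data only.

```python
import math

def min_max_scale(rows):
    """Rescale each column to [0, 1]; constant columns map to 0.0."""
    columns = list(zip(*rows))
    lows = [min(c) for c in columns]
    spans = [max(c) - min(c) for c in columns]
    return [
        tuple((v - lo) / span if span else 0.0 for v, lo, span in zip(row, lows, spans))
        for row in rows
    ]

def nearest_index(query, instances):
    return min(range(len(instances)), key=lambda i: math.dist(query, instances[i]))

# Hypothetical columns: (age in years, income in dollars). The last row is the query.
rows = [(25, 50_000), (60, 50_500), (27, 52_000)]
train, query = rows[:2], rows[2]
print(nearest_index(query, train))  # 1: income differences dominate the raw distance

scaled = min_max_scale(rows)
print(nearest_index(scaled[2], scaled[:2]))  # 0: after scaling, age counts too
```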

Lack of Generalization

Lazy learning algorithms focus on local generalization rather than capturing global patterns in the data. This can lead to difficulties in handling instances that are dissimilar to any training instance within the local neighborhood. In such cases, lazy learning algorithms may struggle to generalize well and produce accurate predictions.

Decision Boundary Interpretability

Lazy learning algorithms typically produce non-linear and irregular decision boundaries. While this allows them to capture complex relationships, it also makes it challenging to interpret and explain the decision boundaries. Eager learning algorithms with explicit models often provide more intuitive decision boundaries.

Comparison with Eager Learning Algorithms

Eager learning algorithms perform upfront computation during the training phase, constructing a specific target function.

In contrast, lazy learning algorithms defer the computation until classification is requested.

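As a result, the two approaches distribute their cost differently between training and consultation: eager learners pay most of it during training, while lazy learners pay most of it at consultation time.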

Eager learners have shorter consultation times, as they don't need to process the instances on-demand.
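The contrast can be sketched with two small classifiers on made-up data. The nearest-centroid classifier is used here only as a convenient stand-in for an eager learner; it is an assumption of this example, not something the comparison above prescribes.

```python
import math

train = [((1.0, 1.0), "A"), ((1.5, 2.0), "A"), ((8.0, 8.0), "B"), ((9.0, 7.5), "B")]

# Eager: summarize the data once into one centroid per class, then drop the data.
def fit_centroids(data):
    sums, counts = {}, {}
    for x, label in data:
        acc = sums.setdefault(label, [0.0] * len(x))
        for j, value in enumerate(x):
            acc[j] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: tuple(v / counts[label] for v in acc) for label, acc in sums.items()}

centroids = fit_centroids(train)          # all the work happens here

def eager_predict(query):
    # Cost depends on the number of classes, not the number of training rows.
    return min(centroids, key=lambda label: math.dist(query, centroids[label]))

def lazy_predict(query):
    # Cost depends on the number of stored training rows.
    return min(train, key=lambda pair: math.dist(query, pair[0]))[1]

print(eager_predict((2.0, 2.0)), lazy_predict((2.0, 2.0)))  # A A
```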

Frequently Asked Questions (FAQs)

What are some popular lazy learning algorithms?

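Popular lazy learning algorithms include k-nearest neighbors (KNN) and neighborhood-based collaborative filtering. Locality-sensitive hashing (LSH) is often mentioned alongside them, although it is better described as a technique for speeding up approximate neighbor search than as a learning algorithm in its own right.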

How do you determine the optimal number of neighbors in KNN?

The optimal number of neighbors in KNN depends on the dataset, and there is no fixed rule on how to select this value. A common approach is to use cross-validation to evaluate performance metrics such as accuracy, F1 score, or AUC for different k values, and then pick the k that performs best.
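A minimal sketch of this procedure is shown below, assuming leave-one-out cross-validation, accuracy as the metric, and a small made-up dataset. The helper names are hypothetical, and the candidate k values are arbitrary.

```python
import math
from collections import Counter

def knn_predict(query, train, k):
    ranked = sorted(train, key=lambda pair: math.dist(query, pair[0]))
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]

def loo_accuracy(data, k):
    """Leave-one-out accuracy: predict each point from all the others."""
    correct = 0
    for i, (x, y) in enumerate(data):
        rest = data[:i] + data[i + 1:]
        correct += knn_predict(x, rest, k) == y
    return correct / len(data)

data = [((1.0,), "A"), ((1.3,), "A"), ((1.5,), "B"), ((1.7,), "A"), ((2.2,), "A"),
        ((5.0,), "B"), ((5.3,), "B"), ((5.7,), "B"), ((6.2,), "B")]

scores = {k: loo_accuracy(data, k) for k in (1, 3, 5)}
best_k = max(scores, key=scores.get)
print(scores, best_k)
```

On larger datasets, k-fold cross-validation is usually a cheaper substitute for leave-one-out.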

What are some preprocessing techniques used in lazy learning?

Preprocessing techniques such as feature scaling, missing value imputation, and noise reduction can improve the performance of lazy learning algorithms. Feature selection and dimensionality reduction techniques can also reduce the computational cost and improve generalization capabilities.

How can lazy learning algorithms handle multi-class classification?

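Lazy learning algorithms such as k-NN handle multi-class classification naturally, because majority voting among the neighbors works for any number of classes. Variants such as fuzzy k-NN and weighted k-NN refine how the votes are counted, and multi-label k-NN extends the idea to instances that carry several labels at once. Ensemble techniques like random forest or gradient boosting are another option, although these are eager rather than lazy learners.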
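As an illustration of the weighted k-NN idea with three classes, the sketch below lets each neighbor vote with a weight of 1 / (distance + eps). This inverse-distance weighting, the `eps` constant, and the data are assumptions of the example; other weighting schemes are possible.

```python
import math
from collections import defaultdict

def weighted_knn_predict(query, train, k, eps=1e-9):
    """Each of the k nearest neighbors votes with weight 1 / (distance + eps)."""
    ranked = sorted(train, key=lambda pair: math.dist(query, pair[0]))[:k]
    scores = defaultdict(float)
    for x, label in ranked:
        scores[label] += 1.0 / (math.dist(query, x) + eps)
    return max(scores, key=scores.get)

train = [((0.0, 0.0), "red"), ((4.0, 0.0), "green"), ((4.2, 0.0), "green"),
         ((0.0, 4.0), "blue")]
print(weighted_knn_predict((1.0, 0.0), train, k=3))  # red
```

With an unweighted vote, the two more distant "green" neighbors would outnumber the single close "red" one; weighting by distance lets the closest instance carry more influence.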

Can lazy learning be used for online learning?

Yes, lazy learning can be used for online learning by incrementally adding new instances to the stored dataset as they arrive. Because instance-based learners need no retraining, this allows the algorithm to adapt to non-stationary data distributions in real-time scenarios, particularly if outdated instances are also removed over time.