What is Latent Semantic Analysis (LSA)?

Latent Semantic Analysis (LSA) is a technique used in natural language processing and information retrieval to analyze the relationships between words and documents. It is based on the idea, known as the distributional hypothesis, that words appearing in similar contexts tend to have similar meanings, even when their surface forms differ.

Why is Latent Semantic Analysis (LSA) important?

LSA uncovers the hidden (latent) semantic structure in a collection of documents. This lets us compare words and documents by meaning rather than only by exact word overlap. It has various applications in information retrieval, text classification, document clustering, automatic summarization, and question answering.

How does Latent Semantic Analysis (LSA) work?

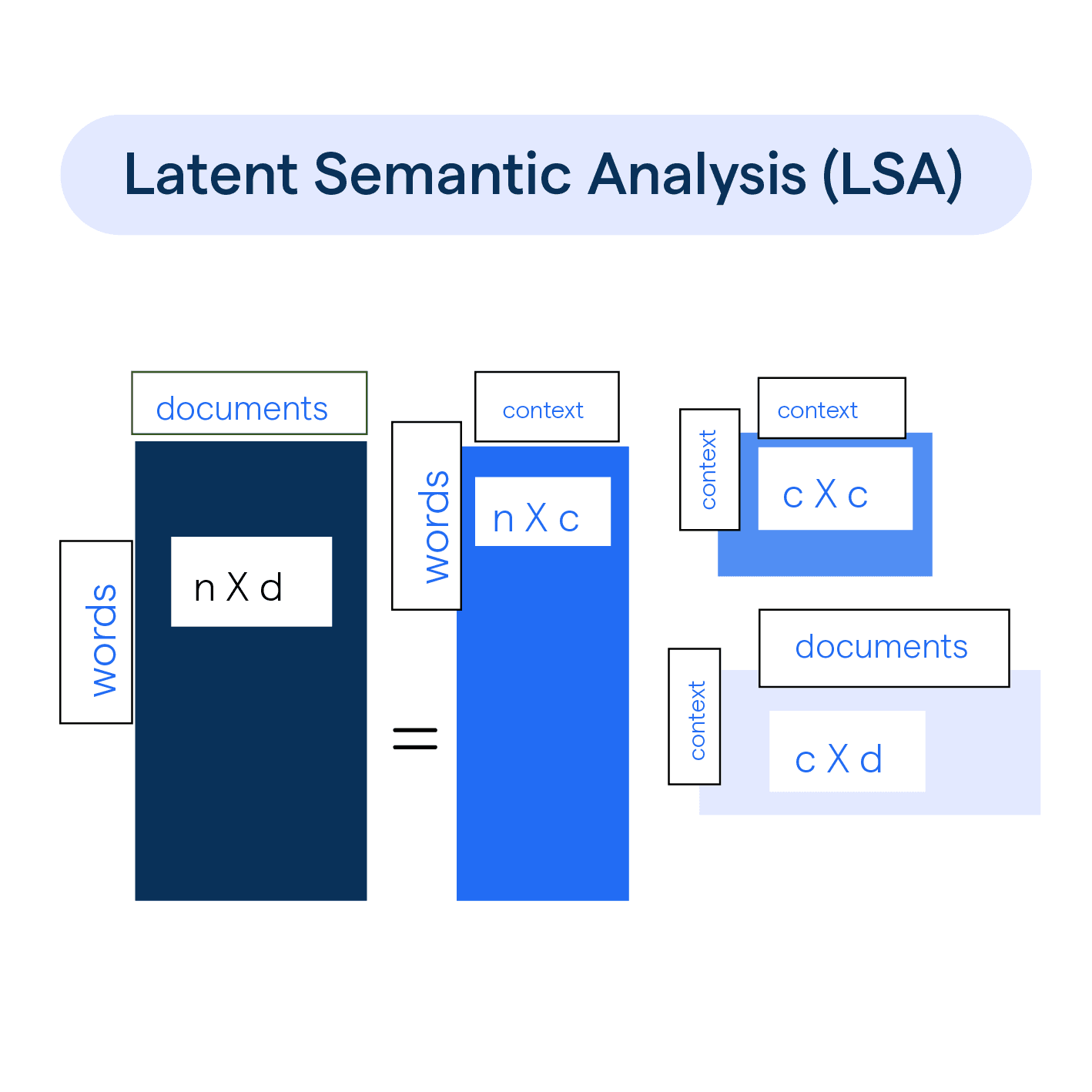

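LSA first represents the corpus as a term-document matrix. This is a high-dimensional, sparse representation in which each row is a term and each column is a document. LSA then factors this matrix with Singular Value Decomposition (SVD) and keeps only the k largest singular values, which projects terms and documents into a much lower-dimensional "latent semantic" space. Relationships between words and documents are analyzed by measuring similarity in this reduced space, typically with cosine similarity. The reduction merges terms that occur in similar contexts, so documents can be compared and classified by shared meaning rather than by exact word overlap.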
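The following minimal sketch runs the pipeline end to end. It assumes NumPy is available and uses a hypothetical four-document toy corpus. The choice k = 2 is arbitrary for this example, and raw counts are used without any weighting. The first two documents share only the word "engine", yet they become nearly identical after the reduction. The vehicle and cooking documents stay unrelated.

```python
import numpy as np

# Hypothetical toy corpus: two "vehicle" documents and two "cooking" documents.
docs = [
    "car engine repair",
    "automobile engine maintenance",
    "bake bread recipe",
    "bread oven recipe",
]

# 1. Term-document count matrix A (rows = terms, columns = documents).
vocab = sorted({w for d in docs for w in d.split()})
index = {w: i for i, w in enumerate(vocab)}
A = np.zeros((len(vocab), len(docs)))
for j, d in enumerate(docs):
    for w in d.split():
        A[index[w], j] += 1

# 2. SVD: A = U @ diag(s) @ Vt, with singular values s in descending order.
U, s, Vt = np.linalg.svd(A, full_matrices=False)

# 3. Keep the k largest singular values; each document becomes a k-dim row of V_k * s_k.
k = 2  # arbitrary choice for this toy example
doc_vecs = Vt[:k].T * s[:k]

def cosine(x, y):
    """Cosine similarity of two non-zero vectors."""
    return float(x @ y / (np.linalg.norm(x) * np.linalg.norm(y)))

raw_sim = cosine(A[:, 0], A[:, 1])               # 1/3: the documents share only "engine"
latent_sim = cosine(doc_vecs[0], doc_vecs[1])    # close to 1 in the latent space
cross_sim = cosine(doc_vecs[0], doc_vecs[2])     # vehicle vs. cooking: close to 0
```

On real corpora k is usually tuned empirically, and similarities are far less clean than in this block-structured toy example.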

Key Concepts in Latent Semantic Analysis (LSA)

Corpus

In Latent Semantic Analysis (LSA), a corpus refers to a collection of text documents that LSA analyzes. The corpus can range from a small dataset of documents to a large collection of books, articles, or web pages.

Term

A term in Latent Semantic Analysis (LSA) refers to a word or a phrase that appears in the corpus. Terms are the building blocks of the analysis and are represented as vectors in the vector space model.

Document

A document in Latent Semantic Analysis (LSA) refers to a unit of text that LSA analyzes. It can be a paragraph, an article, a book, or any other linguistic unit. Each document is represented as a vector in the vector space model.

Vector Space Model

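The vector space model is the mathematical representation of the relationship between terms and documents in Latent Semantic Analysis (LSA). It maps each term and each document to a vector. In the raw term-document matrix, a document vector has one dimension per term and a term vector has one dimension per document. After LSA's dimensionality reduction, both live in a shared low-dimensional space whose dimensions correspond to latent concepts.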
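The sketch below makes the two roles of the matrix concrete. It assumes NumPy and uses a hypothetical toy corpus. A row of the count matrix is a term vector, with one coordinate per document. A column is a document vector, with one coordinate per term. In this raw space, "car" and "automobile" never co-occur, so their cosine similarity is exactly zero.

```python
import numpy as np

# Hypothetical toy corpus.
docs = [
    "car engine repair",
    "automobile engine maintenance",
    "bake bread recipe",
    "bread oven recipe",
]
vocab = sorted({w for d in docs for w in d.split()})
index = {w: i for i, w in enumerate(vocab)}

# Raw vector space model: rows are term vectors, columns are document vectors.
A = np.zeros((len(vocab), len(docs)))
for j, d in enumerate(docs):
    for w in d.split():
        A[index[w], j] += 1

def cosine(x, y):
    """Cosine similarity of two non-zero vectors."""
    return float(x @ y / (np.linalg.norm(x) * np.linalg.norm(y)))

engine_vec = A[index["engine"]]  # term vector: one count per document
doc0_vec = A[:, 0]               # document vector: one count per term

doc_sim = cosine(A[:, 0], A[:, 1])                                # 1/3 (shared "engine")
term_sim = cosine(A[index["car"]], A[index["automobile"]])        # 0.0 (never co-occur)
```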

Singular Value Decomposition (SVD)

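Singular Value Decomposition (SVD) is the mathematical technique used in Latent Semantic Analysis (LSA) to decompose the term-document matrix into three matrices. The first is a matrix of left singular vectors (one row per term). The second is a diagonal matrix of singular values. The third is a matrix of right singular vectors (one row per document). Keeping only the k largest singular values and their singular vectors gives a low-rank approximation of the original matrix. This reduces the dimensionality of the data and captures its dominant latent semantic structure.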
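In matrix notation, let the term-document matrix A have m rows (terms), n columns (documents), and rank r. The decomposition and its rank-k truncation are written below. This is the standard statement of SVD, and the symbols m, n, r, and k are introduced here for illustration.

$$
A = U \Sigma V^{\top}, \qquad \Sigma = \operatorname{diag}(\sigma_1, \ldots, \sigma_r), \qquad \sigma_1 \ge \sigma_2 \ge \cdots \ge \sigma_r > 0
$$

$$
A_k = U_k \Sigma_k V_k^{\top}, \qquad \lVert A - A_k \rVert_F = \sqrt{\sum_{i=k+1}^{r} \sigma_i^2}
$$

Here U is m × r and V is n × r, both with orthonormal columns. U_k, Σ_k, and V_k keep only the first k columns (or entries). By the Eckart-Young theorem, A_k is the closest rank-k matrix to A in the Frobenius norm. Rows of U_k Σ_k are commonly used as term vectors and rows of V_k Σ_k as document vectors, although some presentations use U_k and V_k without the scaling.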

Applications of Latent Semantic Analysis (LSA)

Information retrieval

Latent Semantic Analysis (LSA) has been widely used in information retrieval systems to improve the accuracy of search results. By analyzing the semantic relationship between words and documents, LSA can retrieve relevant documents even when the terms used in the query don't exactly match the terms in the documents.
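A common way to use LSA for retrieval is to "fold in" the query. The query is treated as a pseudo-document, projected into the latent space, and documents are ranked by cosine similarity. The sketch below assumes NumPy, a hypothetical toy corpus, and illustrative helper names (`to_vector`, `search`). Projecting with `U_k.T` maps any document column exactly onto its row of V_k Σ_k, so queries and documents end up in the same space.

```python
import numpy as np

# Hypothetical toy corpus.
docs = [
    "car engine repair",
    "automobile engine maintenance",
    "bake bread recipe",
    "bread oven recipe",
]
vocab = sorted({w for d in docs for w in d.split()})
index = {w: i for i, w in enumerate(vocab)}

def to_vector(text):
    """Term-count vector over the corpus vocabulary; unknown words are ignored."""
    v = np.zeros(len(vocab))
    for w in text.split():
        if w in index:
            v[index[w]] += 1
    return v

def cosine(x, y):
    """Cosine similarity, defined as 0 when either vector is all zeros."""
    nx, ny = np.linalg.norm(x), np.linalg.norm(y)
    return 0.0 if nx == 0 or ny == 0 else float(x @ y / (nx * ny))

A = np.column_stack([to_vector(d) for d in docs])  # terms x documents
U, s, Vt = np.linalg.svd(A, full_matrices=False)
k = 2  # arbitrary for this example
U_k = U[:, :k]
doc_vecs = Vt[:k].T * s[:k]  # rows of V_k Sigma_k

def search(query):
    """Rank documents by cosine similarity to the folded-in query."""
    q = U_k.T @ to_vector(query)  # same space as the rows of doc_vecs
    scores = [cosine(q, d) for d in doc_vecs]
    ranking = sorted(range(len(docs)), key=lambda j: -scores[j])
    return ranking, scores

ranking, scores = search("automobile")
```

In this toy case, the query "automobile" gives "car engine repair" a high score even though that document does not contain the word.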

Text classification

LSA can be used for automatic text classification, where documents are categorized into predefined classes or topics based on their semantic similarity. Because LSA represents the underlying meaning of documents rather than their exact wording, classification can be more robust to differences in word choice than classification based on raw keyword counts.
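One simple way to classify with LSA is nearest-centroid classification, shown here as a sketch rather than a recommended method. The latent vectors of each class's training documents are averaged, and a new document is assigned to the class whose centroid is most cosine-similar. The corpus, labels, and helper names are hypothetical, and NumPy is assumed. In practice, any standard classifier can be trained on the LSA vectors instead.

```python
import numpy as np

# Hypothetical labelled training corpus.
train_docs = [
    "car engine repair",
    "automobile engine maintenance",
    "bake bread recipe",
    "bread oven recipe",
]
train_labels = np.array(["vehicles", "vehicles", "cooking", "cooking"])

vocab = sorted({w for d in train_docs for w in d.split()})
index = {w: i for i, w in enumerate(vocab)}

def to_vector(text):
    """Term-count vector over the training vocabulary; unknown words are ignored."""
    v = np.zeros(len(vocab))
    for w in text.split():
        if w in index:
            v[index[w]] += 1
    return v

def cosine(x, y):
    """Cosine similarity, defined as 0 when either vector is all zeros."""
    nx, ny = np.linalg.norm(x), np.linalg.norm(y)
    return 0.0 if nx == 0 or ny == 0 else float(x @ y / (nx * ny))

A = np.column_stack([to_vector(d) for d in train_docs])
U, s, Vt = np.linalg.svd(A, full_matrices=False)
U_k = U[:, :2]  # k = 2, arbitrary for this example

def project(text):
    """Fold a text into the latent space (same projection as for queries)."""
    return U_k.T @ to_vector(text)

train_vecs = np.array([project(d) for d in train_docs])
classes = sorted(set(train_labels))
centroids = {c: train_vecs[train_labels == c].mean(axis=0) for c in classes}

def classify(text):
    """Return the class whose centroid is most cosine-similar to the text."""
    x = project(text)
    return max(classes, key=lambda c: cosine(x, centroids[c]))
```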

Document clustering

LSA can cluster similar documents together based on their semantic content. This allows for organizing large collections of documents into meaningful groups, which can be helpful for tasks such as organizing news articles, grouping research papers, or identifying common themes in a large dataset.
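Clustering follows the same pattern: a standard clustering algorithm is run on the reduced document vectors. The sketch below implements a small k-means in NumPy on length-normalized LSA vectors, so Euclidean distance tracks cosine similarity. The deterministic farthest-point initialization, the toy corpus, and the function names are simplifications for illustration. A production system would more likely use a library implementation such as scikit-learn's `KMeans`.

```python
import numpy as np

# Hypothetical toy corpus.
docs = [
    "car engine repair",
    "automobile engine maintenance",
    "bake bread recipe",
    "bread oven recipe",
]
vocab = sorted({w for d in docs for w in d.split()})
index = {w: i for i, w in enumerate(vocab)}
A = np.zeros((len(vocab), len(docs)))
for j, d in enumerate(docs):
    for w in d.split():
        A[index[w], j] += 1

U, s, Vt = np.linalg.svd(A, full_matrices=False)
doc_vecs = Vt[:2].T * s[:2]  # k = 2 LSA document vectors
X = doc_vecs / np.linalg.norm(doc_vecs, axis=1, keepdims=True)  # unit length

def kmeans(X, n_clusters, n_iter=50):
    """Plain k-means with a deterministic farthest-point initialization."""
    centers = [X[0]]
    while len(centers) < n_clusters:
        # Next center: the point farthest from all centers chosen so far.
        dist = np.min([np.linalg.norm(X - c, axis=1) for c in centers], axis=0)
        centers.append(X[np.argmax(dist)])
    centers = np.array(centers)
    labels = np.zeros(len(X), dtype=int)
    for _ in range(n_iter):
        # Assign each point to its nearest center, then recompute the centers.
        dists = np.linalg.norm(X[:, None, :] - centers[None, :, :], axis=2)
        labels = np.argmin(dists, axis=1)
        new_centers = np.array([
            X[labels == c].mean(axis=0) if np.any(labels == c) else centers[c]
            for c in range(n_clusters)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels

labels = kmeans(X, 2)
```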

Automatic summarization

LSA can be used to automatically generate summaries of long texts by identifying the most important and relevant sentences or passages. By analyzing the semantic relationships between sentences, LSA can extract the key information and generate concise summaries.
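One simple LSA-based summarization heuristic builds a term-by-sentence matrix instead of a term-by-document matrix and decomposes it. Then, for each of the strongest latent concepts in turn, it picks the sentence with the largest absolute weight in that concept's right singular vector. The sketch assumes NumPy and sentences that have already been preprocessed into space-separated terms. The example sentences and the `summarize` name are hypothetical.

```python
import numpy as np

def summarize(sentences, n_sentences):
    """Pick one representative sentence per top latent concept, in original order."""
    vocab = sorted({w for s in sentences for w in s.split()})
    index = {w: i for i, w in enumerate(vocab)}
    # Term-by-sentence count matrix.
    A = np.zeros((len(vocab), len(sentences)))
    for j, s in enumerate(sentences):
        for w in s.split():
            A[index[w], j] += 1
    _, _, Vt = np.linalg.svd(A, full_matrices=False)
    chosen = []
    # Rows of Vt are ordered by decreasing singular value (strongest concept first).
    for concept in Vt[:n_sentences]:
        for j in np.argsort(-np.abs(concept)):  # signs are arbitrary, so use |weight|
            if int(j) not in chosen:
                chosen.append(int(j))
                break
    return [sentences[j] for j in sorted(chosen)]

sentences = [
    "car engine repair",
    "engine repair",
    "car repair",
    "bread oven recipe",
]
summary = summarize(sentences, 2)
```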

Question answering

LSA can be used in question answering systems to match the query with the relevant information in documents. By analyzing the semantic similarity between questions and answers, LSA helps in finding the most suitable answers to user queries.

Advantages of Latent Semantic Analysis (LSA)

Improved accuracy in information retrieval

LSA improves the accuracy of information retrieval systems by considering the underlying semantic meaning of words and documents. It can retrieve relevant documents even when the exact terms used in the query don't match the documents.

Efficient processing of large volumes of text

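Once the Singular Value Decomposition (SVD) has been computed, each term and document is represented by only k numbers (often a few hundred) instead of one entry per vocabulary word or per document. Similarity comparisons and retrieval over large collections therefore become faster and need less memory. Truncated SVD algorithms that compute only the top k components of a sparse matrix also help keep the decomposition itself tractable.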

Flexibility in language and domain

LSA relies only on co-occurrence statistics rather than on hand-built dictionaries or grammars, so it is not restricted to a specific language or domain. Only the preprocessing (tokenization, stop words, stemming) needs to be adapted. This makes it a versatile method for studying semantic relationships in textual data across languages and domains.

Limitations of Latent Semantic Analysis (LSA)

Sensitivity to term variability

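LSA relies on statistical patterns of term co-occurrence in the corpus. Different word forms (for example, "repair" and "repairing") are treated as separate terms unless preprocessing normalizes them. Synonyms are grouped only to the extent that they occur in similar contexts, so rare words or words with few shared contexts may not end up near their synonyms.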

Inability to handle ambiguous terms

LSA struggles to disambiguate words with multiple meanings. It assigns a single vector to each term regardless of context. As a result, the different senses of a polysemous word (for example, "bank" as a riverbank or as a financial institution) are blended into one representation, which can lead to inaccuracies.

Computationally demanding for large-scale applications

Performing Latent Semantic Analysis (LSA) on large-scale datasets can be computationally demanding due to the need for matrix operations and Singular Value Decomposition (SVD). This could require significant computational resources and time.

Implementing Latent Semantic Analysis (LSA)

Data preprocessing

Before applying Latent Semantic Analysis (LSA), the text data is usually preprocessed. This involves removing stop words and punctuation and applying stemming or lemmatization to normalize the terms.
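A minimal preprocessing sketch in plain Python follows. The stop-word list and the `crude_stem` suffix stripper are hypothetical stand-ins written for illustration. They over- and under-stem (for example, "running" becomes "runn"). Real pipelines typically use an established stemmer such as Porter's, or a lemmatizer from an NLP library.

```python
import re

# Hypothetical, deliberately tiny stop-word list.
STOPWORDS = {"the", "a", "an", "and", "of", "is", "are", "to", "in", "for", "was", "were"}

def crude_stem(word):
    """Strip one common suffix, keeping at least three characters (illustration only)."""
    for suffix in ("ing", "ed", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word

def preprocess(text):
    """Lowercase, drop punctuation and stop words, then stem each remaining token."""
    tokens = re.findall(r"[a-z]+", text.lower())  # keeps only letter runs
    return [crude_stem(t) for t in tokens if t not in STOPWORDS]
```

With this normalization, "The engine was repaired." and "Repairing engines!" both reduce to the terms "engine" and "repair", so they share term dimensions in the matrix.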

Creating the term-document matrix

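The term-document matrix records how often each term occurs in each document, with rows for terms and columns for documents. Building it is a crucial step in preparing the data for Latent Semantic Analysis (LSA) and involves counting the occurrences of every term in every document. The raw counts are often reweighted, for example with TF-IDF, before the decomposition.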
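Given tokenized documents, the matrix can be built as shown below. NumPy is assumed, and the function names are illustrative. The optional `tfidf` helper applies one common weighting variant, idf = log(N / df), where N is the number of documents and df is the number of documents containing the term. This is an assumption, since weighting schemes vary between systems.

```python
import numpy as np

def term_document_matrix(tokenized_docs):
    """Return (A, vocab): A[i, j] counts term vocab[i] in document j."""
    vocab = sorted({t for doc in tokenized_docs for t in doc})
    index = {t: i for i, t in enumerate(vocab)}
    A = np.zeros((len(vocab), len(tokenized_docs)))
    for j, doc in enumerate(tokenized_docs):
        for t in doc:
            A[index[t], j] += 1
    return A, vocab

def tfidf(A):
    """Scale each term's counts by log(N / df); a term in every document gets weight 0."""
    n_docs = A.shape[1]
    df = np.count_nonzero(A, axis=1)  # number of documents containing each term
    idf = np.log(n_docs / df)
    return A * idf[:, None]

A, vocab = term_document_matrix([["engine", "repair", "engine"], ["engine", "oven"]])
W = tfidf(A)
```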

Performing Singular Value Decomposition (SVD)

Singular Value Decomposition (SVD) is applied to the term-document matrix to decompose it into three matrices. Keeping only the k largest singular values and their corresponding singular vectors reduces the dimensionality of the data while retaining its dominant latent semantic structure.
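The sketch below performs the decomposition with NumPy's `np.linalg.svd` and keeps the top k components. The input is a random stand-in for a count matrix, not real data, and `truncated_svd` is an illustrative name. The sketch also checks a standard property of truncated SVD: the Frobenius-norm reconstruction error equals the square root of the sum of the squared discarded singular values.

```python
import numpy as np

def truncated_svd(A, k):
    """Return U_k, s_k, Vt_k and the full vector of singular values."""
    U, s, Vt = np.linalg.svd(A, full_matrices=False)  # s is sorted in descending order
    return U[:, :k], s[:k], Vt[:k, :], s

rng = np.random.default_rng(0)
A = rng.poisson(1.0, size=(8, 5)).astype(float)  # stand-in count matrix, not real data

k = 2
U_k, s_k, Vt_k, s = truncated_svd(A, k)
A_k = U_k @ np.diag(s_k) @ Vt_k  # best rank-k approximation of A

error = np.linalg.norm(A - A_k, "fro")
predicted = np.sqrt(np.sum(s[k:] ** 2))  # contribution of the discarded components
```

In real LSA applications the matrix is usually large and sparse. Implementations therefore typically use a sparse truncated SVD that computes only the top k components instead of a full dense decomposition.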

Interpreting the results

After performing Latent Semantic Analysis (LSA), the results can be interpreted by examining the relationships between terms and documents in the reduced vector space. Terms and documents with similar meanings have nearby vector representations, usually measured with cosine similarity.
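The sketch below shows two common ways to inspect the results. It assumes NumPy and a hypothetical toy corpus. The first compares term vectors (rows of U_k Σ_k) with cosine similarity. The second lists the terms with the largest weights in each latent concept. Singular vectors are determined only up to sign, so the weights are compared by absolute value. The helper `top_terms` is an illustrative name.

```python
import numpy as np

# Hypothetical toy corpus.
docs = [
    "car engine repair",
    "automobile engine maintenance",
    "bake bread recipe",
    "bread oven recipe",
]
vocab = sorted({w for d in docs for w in d.split()})
index = {w: i for i, w in enumerate(vocab)}
A = np.zeros((len(vocab), len(docs)))
for j, d in enumerate(docs):
    for w in d.split():
        A[index[w], j] += 1

U, s, Vt = np.linalg.svd(A, full_matrices=False)
k = 2  # arbitrary for this example
term_vecs = U[:, :k] * s[:k]  # rows of U_k Sigma_k

def cosine(x, y):
    """Cosine similarity of two non-zero vectors."""
    return float(x @ y / (np.linalg.norm(x) * np.linalg.norm(y)))

raw = cosine(A[index["car"]], A[index["automobile"]])                  # raw count space
latent = cosine(term_vecs[index["car"]], term_vecs[index["automobile"]])  # latent space

def top_terms(concept, n=3):
    """Terms with the largest absolute weight in one latent concept (column of U)."""
    weights = np.abs(U[:, concept])
    return [vocab[i] for i in np.argsort(-weights)[:n]]
```

In this toy corpus, "car" and "automobile" never appear together, so their cosine similarity is 0 in the raw matrix. In the two-dimensional latent space it is close to 1, because both words occur only in the vehicle documents, which are linked by the shared term "engine".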

TL;DR

Latent Semantic Analysis (LSA) is a powerful technique for analyzing the relationships between words and documents, uncovering the hidden semantic structure in textual data. It has various applications in information retrieval, text classification, document clustering, automatic summarization, and question answering. By understanding the concepts, advantages, limitations, and the implementation process of LSA, you can leverage this method to gain deeper insights from your text data.

Frequently Asked Questions (FAQs)

What is Latent Semantic Analysis (LSA)?

Latent Semantic Analysis (LSA) is a technique in natural language processing that identifies patterns in relationships between terms and concepts in unstructured text.

What is LSI?

LSI stands for Latent Semantic Indexing. It is the name usually given to LSA when it is applied to indexing and information retrieval, and the underlying technique is the same. It analyzes the relationships between a set of documents and the terms they contain. This helps identify the context and meaning of words within a document collection, which supports more accurate information retrieval and text analysis.

How does LSA work?

LSA works by constructing a term-document matrix and applying a mathematical technique called singular value decomposition (SVD) to reduce the matrix's dimensionality and extract underlying concepts.

What is LSA used for?

LSA is primarily used for information retrieval, document classification, text clustering, and similar tasks where understanding the semantic similarity between texts is important.

Does LSA understand the meaning of words?

No, LSA doesn't understand the meaning of words the way a human does. It identifies statistical patterns and relationships between terms and documents, which allows it to approximate aspects of their underlying semantics.

What are the limitations of LSA?

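The main limitations of LSA are its inability to distinguish the senses of polysemous words (words with multiple meanings) and its only partial handling of synonymy: different words with similar meanings are grouped only when they occur in similar contexts. LSA also has high computational costs for large datasets.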