Introduction

Looking to take your machine learning models to the next level? Fine-tuning is the key.

In this post, we’ll explore the top 10 tools to help you optimize your models’ performance.

We cover everything from popular libraries like Scikit-Learn and TensorFlow to specialized tools like Optuna and Talos.

We’ll break down the key features of each solution to fine-tune hyperparameters, enable transfer learning, speed up training, and more.

Whether you’re just starting in ML or are a seasoned pro, you will surely find tips to boost your models.

Let’s dive in and unlock the full potential of your machine-learning workflows. You’ll be amazed by how these tools can take your ML projects to new heights!

Scikit-Learn

Scikit-Learn is a popular Python library for machine learning that provides various algorithms and functionality for modeling data and performing analysis.

It contains numerous techniques for tasks such as data preparation, model training, evaluation, and visualization.

The library builds on other Python packages such as NumPy, SciPy, and Matplotlib, making it easy to incorporate into existing data science and machine learning workflows.

Main Features for Fine-Tuning in Scikit-Learn

Scikit-Learn provides a rich set of features to fine-tune machine learning models. Some of the key features include:

Cross-Validation: Scikit-Learn allows you to perform cross-validation to estimate your model's performance. This helps you to select the best hyperparameters and assess the model's generalization ability.

Hyperparameter Tuning: Scikit-Learn offers tools like grid search and randomized search that try different combinations of hyperparameters and score each one with cross-validation. This lets you find the configuration that gives the best cross-validated performance within the ranges you choose to search.
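
As a minimal sketch of these two features, the snippet below first cross-validates a baseline classifier and then runs a randomized search over its hyperparameters. The Iris dataset, the `SVC` model, and the search ranges are illustrative assumptions, not recommendations.

```python
from scipy.stats import loguniform
from sklearn.datasets import load_iris
from sklearn.model_selection import RandomizedSearchCV, cross_val_score
from sklearn.svm import SVC

X, y = load_iris(return_X_y=True)

# 5-fold cross-validation of a baseline model with default hyperparameters.
baseline_scores = cross_val_score(SVC(), X, y, cv=5)
print(f"baseline accuracy: {baseline_scores.mean():.3f}")

# Randomized search: sample 20 configurations from the distributions below
# and score each one with 5-fold cross-validation.
search = RandomizedSearchCV(
    SVC(),
    param_distributions={
        "C": loguniform(1e-2, 1e2),
        "gamma": loguniform(1e-3, 1e1),
        "kernel": ["rbf", "linear"],
    },
    n_iter=20,
    cv=5,
    random_state=0,
)
search.fit(X, y)
print("best params:", search.best_params_)
print(f"best CV accuracy: {search.best_score_:.3f}")
```

Randomized search is usually the cheaper choice when the search space is large, because the number of configurations tried is fixed by `n_iter` rather than by the size of the grid.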

TensorFlow

TensorFlow is an open-source library for machine learning and deep learning.

It offers high-level interfaces for creating and training models, as well as low-level operations for fine-grained control over the model architecture.

TensorFlow runs on both CPUs and GPUs, making it suitable for everything from small experiments to large training jobs.

Main Features for Fine-Tuning in TensorFlow

TensorFlow offers several features for fine-tuning machine learning models:

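Transfer Learning: With transfer learning, you can take a model that was pre-trained on a large dataset and adapt it to your specific task, typically by reusing its learned layers and training a new output layer. TensorFlow provides pre-trained models for popular architectures such as Inception and ResNet, for example through `tf.keras.applications`.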

Model Deployment: TensorFlow allows you to deploy your models to various platforms, including mobile devices and the web. This makes it easy to put your fine-tuned models into production and serve real-time predictions.
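
To make the transfer-learning idea concrete, here is a library-free sketch rather than TensorFlow code: a "pre-trained" base is kept frozen while only a small new head is trained on the new task. The base weights, the toy dataset, and all function names are hypothetical. In TensorFlow, the freezing step is usually done by setting `trainable = False` on the base model.

```python
import math

# Hypothetical "pre-trained" base: two feature detectors with fixed weights.
# In a real project these would come from a model trained on a large dataset.
PRETRAINED_BASE = ((0.5, -0.2), (0.1, 0.8))


def extract_features(x, base=PRETRAINED_BASE):
    """Run the frozen base; its weights are read but never updated."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in base]


def predict(x, head, bias):
    """Probability of class 1 from the new task-specific head."""
    logit = sum(h * f for h, f in zip(head, extract_features(x))) + bias
    return 1.0 / (1.0 + math.exp(-logit))


def train_head(data, epochs=200, learning_rate=0.5):
    """Fit only the head with stochastic gradient descent on log loss."""
    head, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, label in data:
            features = extract_features(x)
            error = predict(x, head, bias) - label  # d(log loss)/d(logit)
            head = [h - learning_rate * error * f for h, f in zip(head, features)]
            bias -= learning_rate * error
    return head, bias


# Small new task: the label is 1 when the first input dominates.
new_task = [((2.0, 0.0), 1), ((1.0, 0.0), 1), ((0.0, 1.0), 0), ((0.0, 2.0), 0)]
head, bias = train_head(new_task)
predictions = [round(predict(x, head, bias)) for x, _ in new_task]
print(predictions)
```

Because only the head's few parameters are updated, training needs far less data and compute than training the whole network from scratch, which is the main appeal of transfer learning.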

Keras

Keras is a high-level neural networks API that runs on top of TensorFlow.

It provides a user-friendly and intuitive interface for building and training deep learning models.

Keras simplifies developing complex models by offering a high-level abstraction of TensorFlow's operations.

Main Features for Fine-Tuning in Keras

Keras offers several features for fine-tuning machine learning models:

Early Stopping: Keras allows you to stop the training process early if the model's performance on a validation set stops improving. This helps limit overfitting and saves time by avoiding unnecessary training.

Model Checkpoints: Keras allows you to save the best model during training based on specific criteria, such as the validation loss. This ensures that you always have the best version of your model saved for later use.
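
The logic behind both features can be sketched in a few lines of plain Python. This is not Keras code: the function name and the loss values are hypothetical, and the sketch only mirrors what the `EarlyStopping` and `ModelCheckpoint` callbacks do when they monitor the validation loss.

```python
def train_with_early_stopping(validation_losses, patience=2):
    """Replay per-epoch validation losses and apply patience-based stopping.

    Returns the checkpoint kept (best epoch and its loss) and the epoch at
    which training stopped, or None if it ran through every epoch.
    """
    checkpoint = {"epoch": None, "val_loss": float("inf")}
    epochs_without_improvement = 0
    for epoch, val_loss in enumerate(validation_losses):
        if val_loss < checkpoint["val_loss"]:
            # New best model: overwrite the saved checkpoint.
            checkpoint = {"epoch": epoch, "val_loss": val_loss}
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return checkpoint, epoch
    return checkpoint, None


# Hypothetical validation losses, one per epoch.
losses = [0.90, 0.70, 0.60, 0.62, 0.61, 0.65, 0.50]
checkpoint, stopped_epoch = train_with_early_stopping(losses, patience=2)
print(checkpoint, stopped_epoch)
# → {'epoch': 2, 'val_loss': 0.6} 4
```

Note that training stops at epoch 4 and never reaches the lower loss at the final epoch. A larger `patience` trades extra training time for a lower risk of stopping too soon.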

PyTorch

PyTorch is a popular open-source library for machine learning and deep learning, known for its dynamic computation graph and flexible design.

It provides a powerful platform for building and fine-tuning machine-learning models.

With PyTorch, you have fine-grained control over the model architecture and can easily define custom operations.

Main Features for Fine-Tuning in PyTorch

PyTorch offers several features for fine-tuning machine learning models:

Dynamic Computation: PyTorch builds its computation graph on the fly as your code runs, so you can use ordinary Python control flow and change the model between iterations. This makes models easier to debug and modify, and it suits tasks such as neural architecture search where the architecture itself changes during experimentation.

Autograd: PyTorch's autograd feature automatically computes gradients for the model parameters, enabling efficient gradient-based optimization algorithms. This simplifies the process of fine-tuning models and makes it easy to incorporate custom loss functions and optimizations.
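
To show what automatic differentiation over a dynamically built graph means, here is a tiny scalar version in plain Python. It is a teaching sketch, not PyTorch's implementation: the `Value` class and its methods are hypothetical. In PyTorch, the equivalent steps are creating tensors with `requires_grad=True` and calling `loss.backward()`.

```python
class Value:
    """A scalar that remembers how it was computed so gradients can flow back."""

    def __init__(self, data, parents=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents
        self._backward_fn = None  # pushes this node's gradient to its parents

    def __add__(self, other):
        out = Value(self.data + other.data, (self, other))

        def backward_fn():
            self.grad += out.grad
            other.grad += out.grad

        out._backward_fn = backward_fn
        return out

    def __mul__(self, other):
        out = Value(self.data * other.data, (self, other))

        def backward_fn():
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad

        out._backward_fn = backward_fn
        return out

    def backward(self):
        # Order the graph so each node is processed after everything that uses it.
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            if node._backward_fn is not None:
                node._backward_fn()


# The graph is built while this ordinary Python code runs.
w, x, b = Value(3.0), Value(2.0), Value(1.0)
y = w * x + b
loss = y * y  # a custom loss: the square of the output
loss.backward()
print(loss.data, w.grad, x.grad, b.grad)
# → 49.0 28.0 42.0 14.0
```

The gradients match the chain rule by hand: with y = 7, d(loss)/dy = 14, so d(loss)/dw = 14 · x = 28 and d(loss)/db = 14.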

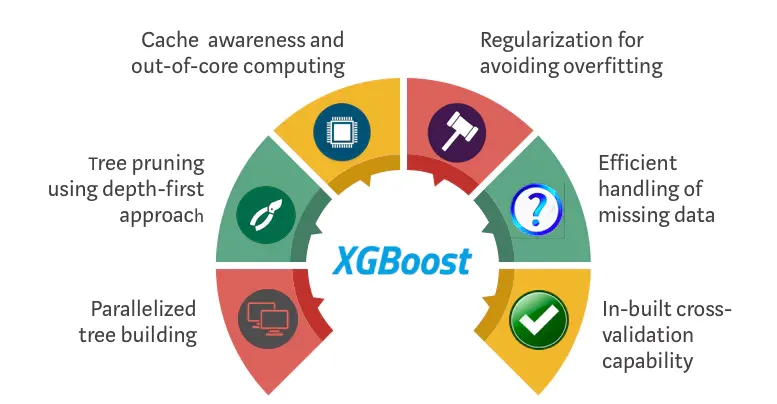

XGBoost

XGBoost is a popular gradient-boosting library known for its strong performance and its ability to handle a wide range of machine-learning problems.

It provides an efficient implementation of the gradient boosting framework, a technique for combining many weak learners into a strong predictive model.

Main Features for Fine-Tuning in XGBoost

XGBoost offers several features for fine-tuning machine learning models:

Gradient Boosting: XGBoost employs the gradient boosting framework, which trains models iteratively and combines them into a final ensemble. Each new model corrects the errors of the ensemble built so far, which mainly reduces bias, while XGBoost's built-in regularization helps keep variance in check.

Early Stopping: XGBoost can stop training early when a user-specified evaluation metric on a validation set stops improving. This helps prevent overfitting and lets you find a good number of boosting rounds automatically.
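
Both ideas can be illustrated with a deliberately small, library-free sketch: boosting one-split trees ("stumps") on the residuals of a 1-D regression problem, and stopping once the validation error has not improved for a few rounds. This is not XGBoost's algorithm, which uses second-order gradients and regularized trees. The data and function names are hypothetical, and `early_stopping_rounds` simply borrows the name of the corresponding XGBoost setting.

```python
def fit_stump(xs, residuals):
    """Find the single-threshold split that best fits the residuals."""
    best = None
    for threshold in sorted(set(xs))[:-1]:  # keep at least one point on the right
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean, right_mean = sum(left) / len(left), sum(right) / len(right)
        error = sum((r - left_mean) ** 2 for r in left)
        error += sum((r - right_mean) ** 2 for r in right)
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best
    return lambda x: left_mean if x <= threshold else right_mean


def mean_squared_error(predictions, targets):
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)


def boost(train, valid, learning_rate=0.5, max_rounds=50, early_stopping_rounds=3):
    (train_x, train_y), (valid_x, valid_y) = train, valid
    train_pred = [0.0] * len(train_x)
    valid_pred = [0.0] * len(valid_x)
    stumps, best_round, best_error = [], 0, float("inf")
    for round_number in range(1, max_rounds + 1):
        # For squared loss, the negative gradient is simply the residual.
        residuals = [y - p for y, p in zip(train_y, train_pred)]
        stump = fit_stump(train_x, residuals)
        stumps.append(stump)
        train_pred = [p + learning_rate * stump(x) for p, x in zip(train_pred, train_x)]
        valid_pred = [p + learning_rate * stump(x) for p, x in zip(valid_pred, valid_x)]
        error = mean_squared_error(valid_pred, valid_y)
        if error < best_error:
            best_round, best_error = round_number, error
        elif round_number - best_round >= early_stopping_rounds:
            break  # no improvement for `early_stopping_rounds` rounds
    return stumps[:best_round], best_round, best_error


# Hypothetical data: noisy training targets, clean validation targets.
noise = [0.4, -0.3, 0.2, -0.4, 0.3, -0.2, 0.4, -0.3, 0.2, -0.4]
train = ([float(i) for i in range(10)], [i + n for i, n in enumerate(noise)])
valid = ([i + 0.5 for i in range(9)], [i + 0.5 for i in range(9)])
ensemble, best_round, best_error = boost(train, valid)
print(f"kept {best_round} rounds, validation MSE {best_error:.3f}")
```

Only the stumps up to the best round are kept, so the returned ensemble is the one that scored best on the validation data rather than the last one trained.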

LightGBM

LightGBM is a fast and efficient gradient-boosting library developed by Microsoft.

It is designed to be memory-efficient and handle large-scale datasets.

LightGBM uses a histogram-based approach to gradient boosting, which allows for faster training and lower memory usage than implementations that evaluate every distinct feature value as a split point.

Main Features for Fine-Tuning in LightGBM

LightGBM offers several features for fine-tuning machine learning models:

Histogram-based Gradient Boosting: LightGBM uses a histogram-based approach for gradient boosting, which discretizes the continuous features into bins. This allows for faster training and reduced memory usage, especially for datasets with many features.

GPU Acceleration: LightGBM supports GPU acceleration, which can make training even faster. By leveraging the parallel processing capabilities of GPUs, LightGBM can significantly reduce training time on large datasets.
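
The histogram idea can be sketched without any library: bucket a feature into a few equal-width bins, accumulate the target sum and count per bin, and evaluate splits only at bin edges. This is a simplified illustration, not LightGBM's implementation. The function name and data are hypothetical, and in LightGBM the number of bins is controlled by the `max_bin` parameter.

```python
import bisect


def best_histogram_split(values, targets, n_bins=4):
    """Pick the bin edge that most reduces squared error, using only bin statistics."""
    low, high = min(values), max(values)
    width = (high - low) / n_bins
    edges = [low + width * i for i in range(1, n_bins)]  # interior bin edges

    # One pass over the data builds the histogram.
    sums, counts = [0.0] * n_bins, [0] * n_bins
    for value, target in zip(values, targets):
        bin_index = bisect.bisect_right(edges, value)
        sums[bin_index] += target
        counts[bin_index] += 1

    # Candidate splits are the bin edges, not every distinct feature value.
    total_sum, total_count = sum(sums), sum(counts)
    best_edge, best_gain = None, 0.0
    left_sum, left_count = 0.0, 0
    for bin_index, edge in enumerate(edges):
        left_sum += sums[bin_index]
        left_count += counts[bin_index]
        right_sum, right_count = total_sum - left_sum, total_count - left_count
        if left_count == 0 or right_count == 0:
            continue
        gain = (left_sum ** 2 / left_count + right_sum ** 2 / right_count
                - total_sum ** 2 / total_count)
        if gain > best_gain:
            best_edge, best_gain = edge, gain
    return best_edge, best_gain


values = [0.0, 1.0, 2.0, 3.0, 5.0, 6.0, 7.0, 8.0]
targets = [1.0, 1.0, 1.0, 1.0, 5.0, 5.0, 5.0, 5.0]
best_edge, best_gain = best_histogram_split(values, targets, n_bins=4)
print(best_edge, best_gain)
# → 4.0 32.0
```

With 4 bins there are only 3 candidate splits to score, no matter how many rows or distinct values the feature has, which is where the speed and memory savings come from.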

GridSearchCV

GridSearchCV is a tool provided by Scikit-Learn that allows for systematic hyperparameter tuning using cross-validation.

It is a widely used way to find the best combination of hyperparameters for a machine-learning model among a set of candidate values you specify.

GridSearchCV exhaustively searches through a predefined parameter grid and evaluates the performance of each combination using cross-validation.

Main Features of GridSearchCV for Fine-Tuning Hyperparameters

GridSearchCV offers several features for fine-tuning hyperparameters:

Cross-Validation: GridSearchCV performs cross-validation to estimate the performance of each parameter combination. This helps to select the hyperparameters that generalize well to unseen data and avoid overfitting.

Scoring Metrics: GridSearchCV allows you to select scoring metrics to evaluate the model's performance. You can choose metrics such as accuracy, precision, recall, or custom evaluation functions, depending on the specific problem.
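
Here is a short sketch of a grid search that tracks two metrics and selects the winner by recall. The dataset, the decision-tree model, and the grid values are illustrative assumptions.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import GridSearchCV
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)

search = GridSearchCV(
    DecisionTreeClassifier(random_state=0),
    param_grid={"max_depth": [2, 4, 8], "min_samples_leaf": [1, 5, 20]},
    scoring=["accuracy", "recall"],  # metrics computed for every candidate
    refit="recall",                  # metric used to pick the best candidate
    cv=5,
)
search.fit(X, y)

# 3 x 3 grid values give 9 candidates, each evaluated on 5 folds.
print("candidates evaluated:", len(search.cv_results_["params"]))
print("best params:", search.best_params_)
print(f"best mean recall: {search.best_score_:.3f}")
best_accuracy = search.cv_results_["mean_test_accuracy"][search.best_index_]
print(f"accuracy of that candidate: {best_accuracy:.3f}")
```

Because the search is exhaustive, its cost grows with the product of the grid sizes, so it is best suited to small grids.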

Optuna

Optuna is an open-source hyperparameter optimization library for machine learning.

It provides an easy-to-use and efficient framework for automating the search for optimal hyperparameter configurations.

By default, Optuna uses the Tree-structured Parzen Estimator (TPE), a Bayesian optimization algorithm that chooses the next hyperparameters to try based on the results of past trials.

Main Features of Optuna for Fine-Tuning Hyperparameters

Optuna offers several features for fine-tuning machine-learning hyperparameters:

Multi-objective Optimization: Optuna supports multi-objective optimization, allowing you to optimize multiple objectives simultaneously. This is useful when you have multiple metrics to consider, such as accuracy and computational cost, and want to find a balance between them.

Pruning Algorithms: Optuna supports pruning algorithms, which can stop unpromising trials early to speed up the hyperparameter optimization process. By monitoring the intermediate results during trial training, Optuna can determine if it's worth continuing or if the trial should be pruned.
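
The pruning idea can be sketched without Optuna itself. The code below is a simplified median-stopping rule with random sampling standing in for Optuna's sampler. The loss function, the names, and the search range are all hypothetical. In Optuna, the corresponding pieces are `trial.report(...)`, `trial.should_prune()`, and a pruner such as `MedianPruner`.

```python
import random
import statistics


def simulated_loss(learning_rate, step):
    """Stand-in for a validation loss; it is lowest near learning_rate = 0.1."""
    return 100 * (learning_rate - 0.1) ** 2 + 1.0 / (step + 1)


def run_study(n_trials=20, n_steps=5, seed=0):
    rng = random.Random(seed)
    completed = []  # (learning_rate, [loss at each step]) for finished trials
    n_pruned = 0
    for _ in range(n_trials):
        learning_rate = rng.uniform(0.0, 0.3)
        losses, pruned = [], False
        for step in range(n_steps):
            losses.append(simulated_loss(learning_rate, step))
            peers = [history[step] for _, history in completed]
            # Median rule: stop a trial that is worse than the median of
            # finished trials at the same step.
            if peers and losses[-1] > statistics.median(peers):
                pruned = True
                break
        if pruned:
            n_pruned += 1
        else:
            completed.append((learning_rate, losses))
    best_rate, best_losses = min(completed, key=lambda trial: trial[1][-1])
    return best_rate, best_losses[-1], len(completed), n_pruned


best_rate, best_loss, n_completed, n_pruned = run_study()
print(f"best learning rate: {best_rate:.3f} (loss {best_loss:.3f})")
print(f"completed: {n_completed}, pruned: {n_pruned}")
```

Every pruned trial skips its remaining training steps, which is where the time savings come from. Real pruners typically wait for a few startup trials before pruning anything, a refinement this sketch leaves out.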

AutoKeras

AutoKeras is an open-source library for automated machine learning.

It aims to simplify the process of model selection, hyperparameter tuning, and architecture search by automating these tasks.

AutoKeras uses neural architecture search algorithms to automatically search for the best neural network architecture for a given dataset and prediction task.

Main Features of AutoKeras for Automated Model Selection

AutoKeras offers several features for automated model selection:

Neural Architecture Search: AutoKeras uses search strategies such as Bayesian optimization, Hyperband, and greedy search to look for a well-performing model architecture automatically. It explores various candidate architectures and selects the one that performs best on the given dataset.

Hyperparameter Tuning: AutoKeras performs automatic hyperparameter tuning to optimize the model's hyperparameters. It searches the hyperparameter space to find the best combination of values that maximizes the model's performance. This includes tuning parameters such as learning rate, batch size, and regularization strength.

Talos

Talos is a hyperparameter optimization library for Keras, specifically designed for deep learning models.

It provides a simple and flexible interface for fine-tuning machine-learning models, allowing for efficient hyperparameter tuning, model evaluation, and GPU acceleration.

Talos uses a combination of grid search and random search techniques to explore the hyperparameter space.

Main Features of Talos for Hyperparameter Tuning

Talos offers several features for hyperparameter tuning:

Hyperparameter Search: Talos performs efficient hyperparameter search by systematically exploring the hyperparameter space. It supports grid and random search methods, allowing you to select the one that suits your needs. Talos evaluates multiple hyperparameter combinations in parallel, speeding up the search process.

Model Evaluation: Talos provides comprehensive model evaluation capabilities, including performance metrics, learning curves, and visualizations. You can easily compare the performance of different models and select the best one based on your evaluation criteria.
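
The difference between the two search methods is easy to see in plain Python. The sketch below is not Talos code: it takes a parameter dictionary of candidate lists, enumerates the full grid, and then draws a random subset of it. The parameter names, values, and helper functions are hypothetical.

```python
import itertools
import random

# Hypothetical search space: each hyperparameter maps to its candidate values.
param_space = {
    "learning_rate": [0.001, 0.01, 0.1],
    "batch_size": [16, 32, 64],
    "dropout": [0.0, 0.25, 0.5],
}


def grid_search_candidates(space):
    """Every combination of the candidate values (exhaustive grid)."""
    names = list(space)
    value_lists = [space[name] for name in names]
    return [dict(zip(names, combo)) for combo in itertools.product(*value_lists)]


def random_search_candidates(space, fraction, seed=0):
    """A random subset of the grid, sized as a fraction of all combinations."""
    combinations = grid_search_candidates(space)
    sample_size = max(1, round(len(combinations) * fraction))
    return random.Random(seed).sample(combinations, sample_size)


all_combinations = grid_search_candidates(param_space)
sampled = random_search_candidates(param_space, fraction=0.25)
print(len(all_combinations), len(sampled))
# → 27 7
```

Each candidate dictionary would then be used to build and train one model. Random search trades completeness for speed: here it trains 7 models instead of 27.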

Conclusion

Want to unlock your models' full potential? These top 10 tools provide everything you need to take your machine learning to the next level.

With capabilities to optimize hyperparameters, enable transfer learning, speed up training, and more, you're sure to find the perfect solution.

Don't settle for subpar model performance. Arm yourself with these fine-tuning tools instead.

Your models will thank you - and your projects will reach new heights.

What are you waiting for? Start fine-tuning today and watch your machine learning workflows excel.

Frequently Asked Questions (FAQs)

What is fine-tuning in machine learning?

Fine-tuning refers to adjusting pre-trained models to fit a specific task or dataset better. It involves training the model on new data while keeping the knowledge learned from the original training.

Which are the best tools for fine-tuning machine learning models?

Some popular tools for fine-tuning machine learning models include TensorFlow, Keras, PyTorch, scikit-learn, Hugging Face Transformers, and Ludwig. These tools provide essential functionalities and resources for model optimization.

How does TensorFlow help in fine-tuning models?

TensorFlow provides a robust framework for fine-tuning machine learning models. With its flexible architecture and extensive libraries, TensorFlow enables efficient and effective fine-tuning, empowering researchers and developers to optimize models for specific tasks.

What is the role of Keras in fine-tuning machine learning models?

Keras, a high-level neural network API, simplifies the process of fine-tuning models. It offers a user-friendly interface, making it easier to modify pre-trained models, retrain them, and achieve better performance in different tasks or domains.

Can scikit-learn be used for fine-tuning machine learning models?

Scikit-learn primarily focuses on classical supervised and unsupervised learning, but it offers utilities for model selection and hyperparameter tuning, which can be useful for fine-tuning and optimizing machine learning models.

How does Hugging Face Transformers assist in fine-tuning natural language processing models?

Hugging Face Transformers is a powerful library for fine-tuning NLP models. It provides pre-trained models and easy-to-use APIs, allowing developers to fine-tune models for various NLP tasks such as text classification, question-answering, and language generation.