Understanding Explainable AI

Let’s start with what Explainable AI is and then look at it in more detail.

What is Explainable AI?

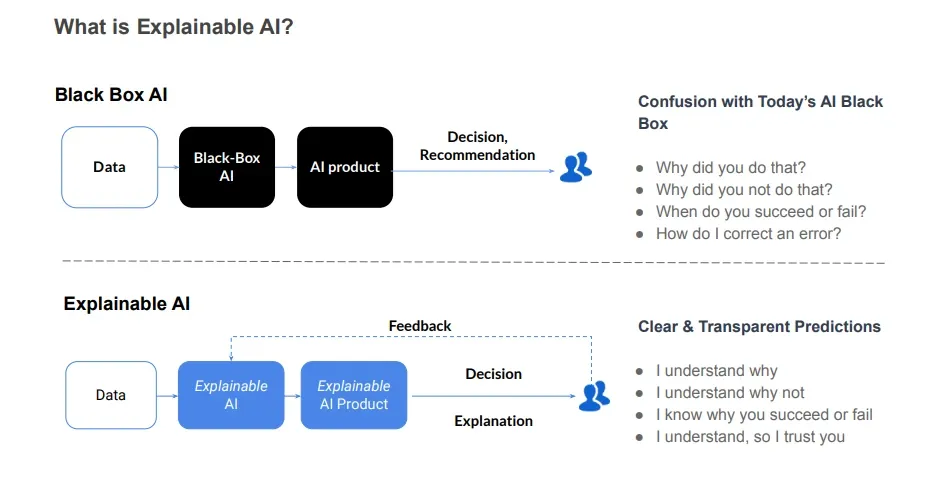

Explainable AI refers to AI systems designed to make their decisions and actions understandable to humans. Unlike traditional AI, where the decision-making process is often a "black box," Explainable AI provides clear explanations of how conclusions are reached. This transparency is key, as it allows users to see the reasoning behind AI outputs.

Traditional AI vs. Explainable AI design

Traditional AI systems focus on achieving high accuracy, often at the cost of transparency. In contrast, Explainable AI design balances accuracy with explainability. It doesn’t just provide an answer but also shows how the answer was derived.

Importance of Transparency

In AI systems, transparency builds trust. When users understand how an AI decision is made, they are more likely to trust and rely on it. Transparency also helps in identifying and fixing errors, making the AI more reliable over time.

Benefits of Explainable AI

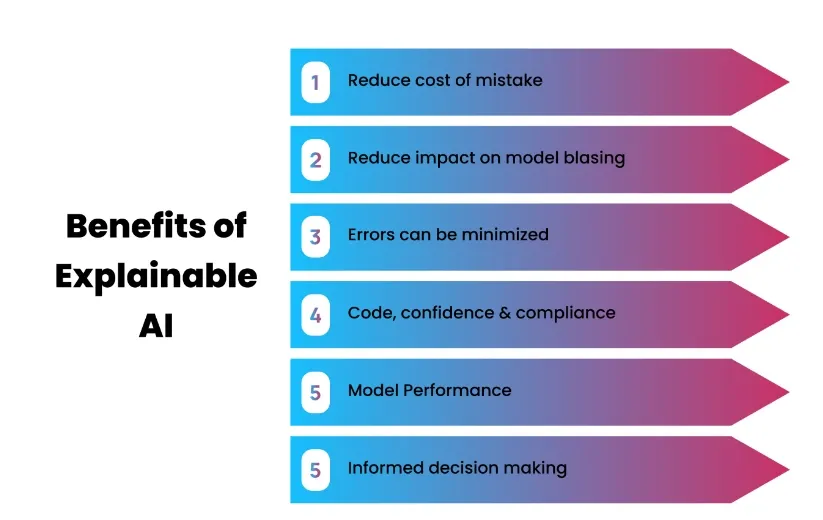

The benefits of Explainable AI design are the following:

Trust Building

When AI systems explain their decisions, users gain confidence in their accuracy and fairness.

For example, in healthcare, doctors are more likely to trust an AI that not only predicts a patient’s condition but also explains the reasoning, like identifying key symptoms from patient data. This trust is essential for broader adoption of AI in sensitive fields.

Improved Decision-Making

Explainable AI enhances decision-making by allowing users to understand and validate the AI’s output. In financial services, for instance, an AI might approve a loan application based on various factors.

With Explainable AI design, the system explains which factors were most influential, helping bank managers confirm that the decision aligns with their policies.

Regulatory Compliance

As laws around AI transparency tighten, Explainable AI helps organizations meet legal requirements. For example, the EU's General Data Protection Regulation (GDPR) gives individuals rights around solely automated decisions, including the right to contest them and to receive meaningful information about the logic involved.

Explainable AI design makes it easier for companies to comply with such regulations by providing clear, understandable explanations.

Error Detection

Explainable AI design allows users to identify and correct mistakes in AI systems. For example, in autonomous driving, if an AI system incorrectly identifies a pedestrian as a traffic sign, Explainable AI design can help engineers trace the error back to its cause, making the system safer and more accurate over time.

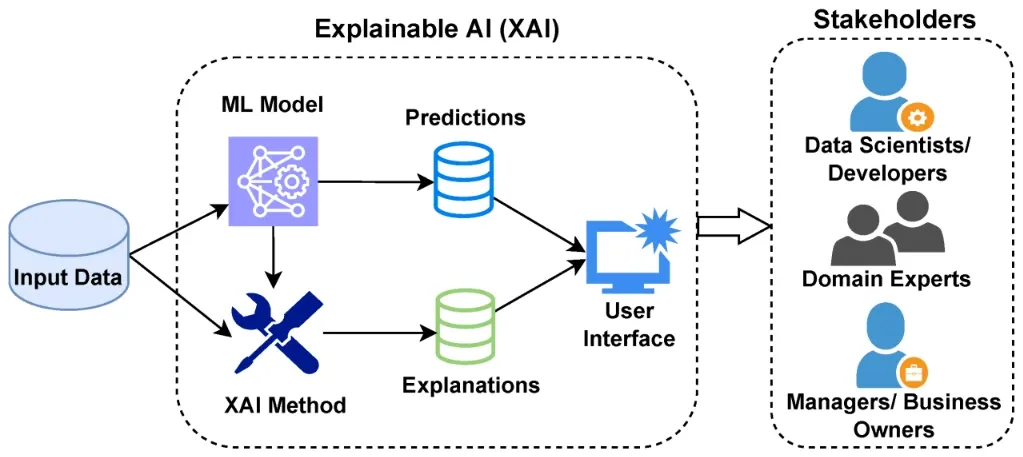

How Explainable AI Works

This section covers the main techniques behind Explainable AI and the step-by-step process they fit into.

LIME (Local Interpretable Model-agnostic Explanations)

LIME explains an individual prediction of any AI model by approximating the model's behavior near that input with a simpler, interpretable model, such as a linear one. For instance, it can highlight the regions of an image that most influenced an AI's decision to identify it as a cat.
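The idea of a local approximation can be illustrated with a minimal sketch. This is not the `lime` library's API. It is a simplified, LIME-style procedure written with the standard library only, and `black_box`, `lime_style_explanation`, the sampling scale, and the kernel width are all assumptions made for illustration. The sketch perturbs an input, queries the opaque model, weights the samples by how close they are to the original input, and fits a weighted linear model whose slopes act as the explanation.

```python
import math
import random


def black_box(x):
    """Hypothetical opaque model: a nonlinear score over two features."""
    return 1.0 / (1.0 + math.exp(-(3.0 * x[0] - 0.5 * x[1] ** 2)))


def solve_linear_system(a, b):
    """Solve a @ w = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [v - factor * p for v, p in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def lime_style_explanation(model, instance, n_samples=500, scale=0.3,
                           kernel_width=0.5, seed=0):
    """Fit a proximity-weighted linear surrogate around `instance`."""
    rng = random.Random(seed)
    rows, targets, weights = [], [], []
    for _ in range(n_samples):
        # Perturb the input and ask the black box for its prediction.
        sample = [v + rng.gauss(0.0, scale) for v in instance]
        dist_sq = sum((s - v) ** 2 for s, v in zip(sample, instance))
        # Samples closer to the original input get a larger weight.
        weights.append(math.exp(-dist_sq / kernel_width ** 2))
        # Intercept column plus offsets from the original input.
        rows.append([1.0] + [s - v for s, v in zip(sample, instance)])
        targets.append(model(sample))
    d = len(instance) + 1
    # Weighted normal equations: (X^T W X) coef = X^T W y
    xtwx = [[sum(w * r[i] * r[j] for w, r in zip(weights, rows))
             for j in range(d)] for i in range(d)]
    xtwy = [sum(w * r[i] * t for w, r, t in zip(weights, rows, targets))
            for i in range(d)]
    coef = solve_linear_system(xtwx, xtwy)
    return coef[0], coef[1:]


instance = [0.0, 1.0]
intercept, slopes = lime_style_explanation(black_box, instance)
for name, slope in zip(["feature_0", "feature_1"], slopes):
    print(f"{name}: local slope {slope:+.3f}")
```

The sign and size of each slope indicate how the prediction moves when that feature changes slightly around this one input. The slopes describe the model only near the chosen input, not its global behavior.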

SHAP (SHapley Additive exPlanations)

SHAP assigns each feature a contribution to a prediction, based on Shapley values from cooperative game theory, which gives a unified measure of feature importance. If an AI predicts high risk in loan applications, SHAP can show which specific factors (e.g., income, credit history) influenced the decision.
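To make the Shapley idea concrete, here is a minimal sketch that computes exact Shapley values by enumerating every feature subset. It does not use the `shap` library. The `loan_risk` model, its coefficients, the feature names, and the baseline applicant are hypothetical, and "absent" features are simply replaced by their baseline values, which is one common simplification.

```python
from itertools import combinations
from math import factorial


def loan_risk(f):
    """Hypothetical risk score with an interaction between two features."""
    return (0.8
            - 0.005 * f["income_k"]
            + 0.1 * f["missed_payments"]
            + 0.5 * f["debt_ratio"] * (1 + f["missed_payments"]))


def shapley_values(model, instance, baseline):
    """Exact Shapley values; absent features take their baseline value."""
    names = list(instance)
    n = len(names)

    def value(coalition):
        mixed = {k: (instance[k] if k in coalition else baseline[k])
                 for k in names}
        return model(mixed)

    phi = {}
    for name in names:
        others = [k for k in names if k != name]
        total = 0.0
        for size in range(n):
            for subset in combinations(others, size):
                # Shapley weight for a coalition of this size.
                weight = (factorial(size) * factorial(n - size - 1)
                          / factorial(n))
                with_feature = value(set(subset) | {name})
                without_feature = value(set(subset))
                total += weight * (with_feature - without_feature)
        phi[name] = total
    return phi


applicant = {"income_k": 30, "missed_payments": 3, "debt_ratio": 0.6}
baseline = {"income_k": 60, "missed_payments": 0, "debt_ratio": 0.3}
phi = shapley_values(loan_risk, applicant, baseline)
for name, contribution in phi.items():
    print(f"{name}: {contribution:+.3f}")
```

The contributions add up to the difference between the applicant's score and the baseline score, which is the additive property the name SHAP refers to.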

Counterfactual Explanations

This method explains how altering input data could change the AI's decision. For example, in hiring algorithms, counterfactuals might show how changing certain qualifications would have led to a different hiring decision.
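A minimal sketch of the idea, assuming a toy scoring rule: `hiring_model`, its weights, its threshold, and the search ranges are hypothetical, and the search only changes one feature at a time. For each feature, it looks for the closest value that would flip the decision.

```python
def hiring_model(candidate):
    """Hypothetical hiring rule: weighted score against a fixed threshold."""
    score = (2 * candidate["years_experience"]
             + 3 * candidate["certifications"]
             + 5 * candidate["has_degree"])
    return score >= 20


def single_feature_counterfactuals(model, candidate, search_space):
    """For each feature, find the nearest value that flips the decision."""
    original = model(candidate)
    results = {}
    for feature, values in search_space.items():
        # Try values closest to the current one first.
        ordered = sorted(values, key=lambda v: abs(v - candidate[feature]))
        for value in ordered:
            if value == candidate[feature]:
                continue
            changed = {**candidate, feature: value}
            if model(changed) != original:
                results[feature] = value
                break
    return results


candidate = {"years_experience": 6, "certifications": 1, "has_degree": 0}
search_space = {
    "years_experience": range(0, 16),
    "certifications": range(0, 6),
    "has_degree": [0, 1],
}
counterfactuals = single_feature_counterfactuals(
    hiring_model, candidate, search_space)
for feature, value in counterfactuals.items():
    print(f"Decision flips if {feature} changes "
          f"from {candidate[feature]} to {value}")
```

Real counterfactual methods usually search over several features at once and restrict the search to changes that are realistic for the person affected. This sketch leaves both out for brevity.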

Step-by-Step Process of Explainable AI

Explainable AI typically follows these steps:

Step 1: Input Analysis

The AI first analyzes the input data, such as images, text, or numerical data.

Step 2: Decision Generation

The system then processes the data through its models to produce a decision or prediction.

Step 3: Explanation Creation

Using methods like LIME or SHAP, the AI generates an explanation that details how specific inputs influenced the outcome.

Step 4: User Presentation

The explanation is presented in a user-friendly way, often with visual aids, so users can easily grasp how the AI arrived at its decision.
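The four steps can be sketched end to end with a deliberately simple model. Everything here is an assumption for illustration: the loan features, `WEIGHTS`, `BIAS`, and the function names are hypothetical. The explanation step uses plain per-feature contributions of a linear model (weight times value) as a stand-in for LIME or SHAP.

```python
# Hypothetical linear loan-approval model.
WEIGHTS = {"income_k": 0.02, "credit_years": 0.1, "open_debts": -0.4}
BIAS = -1.0


def analyze_input(raw):
    """Step 1: convert raw input fields into numeric features."""
    return {name: float(raw[name]) for name in WEIGHTS}


def generate_decision(features):
    """Step 2: compute the model score and the resulting decision."""
    score = BIAS + sum(WEIGHTS[k] * features[k] for k in WEIGHTS)
    return score, score > 0


def create_explanation(features):
    """Step 3: per-feature contributions, most influential first."""
    contributions = {k: WEIGHTS[k] * features[k] for k in WEIGHTS}
    return sorted(contributions.items(),
                  key=lambda kv: abs(kv[1]), reverse=True)


def present(approved, explanation):
    """Step 4: render the decision and its explanation as readable text."""
    lines = [f"Decision: {'approve' if approved else 'decline'}"]
    for name, contribution in explanation:
        bar = "#" * round(abs(contribution) * 10)
        lines.append(f"{name:<13}{contribution:+.2f} {bar}")
    return "\n".join(lines)


raw_application = {"income_k": "55", "credit_years": "4", "open_debts": "2"}
features = analyze_input(raw_application)
score, approved = generate_decision(features)
explanation = create_explanation(features)
report = present(approved, explanation)
print(report)
```

Keeping the explanation step separate from the decision step means a different explanation method could be swapped in without changing how the decision is produced or presented.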

Example Scenarios: In the medical field, Explainable AI might analyze a patient’s medical history and explain how specific symptoms contributed to a diagnosis. In law enforcement, Explainable AI design could be used to explain how certain data patterns led to the identification of a suspect.

Challenges of Explainable AI

The challenges of Explainable AI design are the following:

Complexity

One of the main challenges is balancing the need for detailed explanations with the simplicity required for users to understand them. Overly complex explanations can confuse users, defeating the purpose of Explainable AI design.

Performance Trade-offs

Making AI systems explainable can sometimes affect their efficiency. For example, generating an explanation alongside each prediction requires extra computation, which might slow down the AI’s processing speed.
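One way to see where the extra cost comes from is to count model calls. The sketch below assumes an explanation method that evaluates the model on every subset of features, as an exact Shapley computation does. `CountingModel`, `toy_model`, and `evaluate_all_coalitions` are hypothetical names used only for this illustration.

```python
from itertools import combinations


class CountingModel:
    """Wraps a model function and counts how often it is called."""

    def __init__(self, fn):
        self.fn = fn
        self.calls = 0

    def __call__(self, x):
        self.calls += 1
        return self.fn(x)


def toy_model(features):
    """Hypothetical model: simply sums its inputs."""
    return sum(features)


def evaluate_all_coalitions(model, instance, baseline):
    """Query the model once for every subset of 'present' features."""
    n = len(instance)
    for size in range(n + 1):
        for subset in combinations(range(n), size):
            mixed = [instance[i] if i in subset else baseline[i]
                     for i in range(n)]
            model(mixed)


explanation_calls = {}
for n_features in (4, 8, 12):
    model = CountingModel(toy_model)
    model([1.0] * n_features)  # one plain prediction
    prediction_calls = model.calls
    evaluate_all_coalitions(model, [1.0] * n_features, [0.0] * n_features)
    explanation_calls[n_features] = model.calls - prediction_calls
    print(f"{n_features} features: {prediction_calls} call to predict, "
          f"{explanation_calls[n_features]} calls to explain")
```

The number of calls doubles with every added feature, which is why practical tools tend to rely on sampling or model-specific shortcuts rather than full enumeration.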

Limitations

Despite its benefits, Explainable AI might not provide full clarity in all situations. Some decisions might still be too complex to explain completely, especially in highly intricate AI models.

Future of Explainable AI

The future of Explainable AI is likely to be shaped by the following:

Expected Advancements

As AI becomes more widespread, the need for transparency will grow. Future advancements in Explainable AI are expected to make explanations more accurate and easier to understand.

Growing Need for Transparency

With AI’s increasing role in decision-making, the demand for transparent AI systems will continue to rise. Users will expect clear insights into how AI decisions are made, pushing for more refined Explainable AI design techniques.

Impact on AI Development

The push for transparency will likely drive innovation in AI, leading to new models that balance performance with explainability. This shift could transform how AI is developed and used across industries.

Frequently Asked Questions (FAQs)

Why is Explainable AI important?

Explainable AI is important because it builds trust, ensures transparency, and helps users understand and validate AI decisions. It also aids in regulatory compliance by providing clear explanations for AI outputs.

How does the concept of Explainable AI work?

Explainable AI uses techniques like LIME, SHAP, and counterfactual explanations. These methods break down AI decisions into understandable components, allowing users to see the factors influencing the AI’s choices.

What are the advantages of Explainable AI?

The benefits of Explainable AI include increased trust in AI systems, improved decision-making, better error detection, and easier compliance with legal and ethical standards.

What are the shortcomings of Explainable AI?

Explainable AI faces challenges like balancing explanation and simplicity, potential performance trade-offs, and limitations in fully clarifying complex AI models, especially in deep learning.

What effects will Explainable AI have on the future of artificial intelligence?

Explainable AI will likely drive advancements in AI transparency, making AI systems more user-friendly and trustworthy. It will also become crucial as AI continues to play a larger role in decision-making across industries.