Computational number theory sits at the intersection of mathematics and computer science. At its core, it is the study of algorithms for analyzing and manipulating numbers, which helps AI systems solve complex problems.

Here, you can explore how this discipline amplifies the capabilities of AI by tackling puzzles that take both clever mathematics and raw computing power to solve.

What is Computational Number Theory?

This section covers the basics of computational number theory.

Unveiling the Secrets of Numbers

Computational Number Theory is about understanding and manipulating integers using algorithms. Every number is a treasure trove awaiting exploration. This branch of number theory uses computation to solve problems that are infeasible to tackle by hand, thanks to their labyrinthine nature.
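To make "manipulating integers using algorithms" concrete, here is a minimal sketch of Euclid's algorithm for the greatest common divisor, one of the oldest routines in the field. The function name `gcd` is chosen for illustration; Python's standard library already ships `math.gcd`.

```python
def gcd(a: int, b: int) -> int:
    """Greatest common divisor via Euclid's algorithm."""
    # Repeatedly replace (a, b) with (b, a mod b) until the remainder is 0.
    while b:
        a, b = b, a % b
    return abs(a)


print(gcd(1071, 462))  # 21
```

Each step shrinks the numbers quickly, which is why this works on integers far too large to factor by hand.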

The Math Toolkit for AI

AI relies on this mathematical firepower to process numerical data quickly and exactly. Computational Number Theory supplies precise tools for integer arithmetic, such as modular computation, hashing, and pseudo-random number generation, that support the pattern recognition and equation solving behind intelligent decision-making.

Why is Computational Number Theory Important for AI?

Computational number theory matters to AI for several reasons.

Powering Secure Communication (Cryptography)

AI and cybersecurity go hand in hand, with Computational Number Theory often playing the role of a gatekeeper. It is the bedrock of cryptography, ensuring that communication remains a private affair between intended parties.
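One classic way number theory keeps a conversation private is the Diffie-Hellman key exchange, sketched below with deliberately tiny numbers. The prime `p = 23`, generator `g = 5`, and the two secret values are toy assumptions for illustration only; real systems use far larger parameters and vetted libraries.

```python
# Toy Diffie-Hellman key exchange (illustrative parameters, NOT secure).
p = 23  # small public prime modulus
g = 5   # public generator

alice_secret = 4  # known only to Alice
bob_secret = 3    # known only to Bob

# Each party publishes g^secret mod p.
alice_public = pow(g, alice_secret, p)
bob_public = pow(g, bob_secret, p)

# Each party combines the other's public value with its own secret.
alice_shared = pow(bob_public, alice_secret, p)
bob_shared = pow(alice_public, bob_secret, p)

print(alice_shared == bob_shared)  # True
```

Both sides arrive at the same shared value without ever sending their secrets over the wire.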

Finding Patterns in Big Data (Efficient Processing)

In the quest to sift through mountains of data, AI finds a reliable partner in Computational Number Theory. It helps in identifying and exploiting numerical patterns swiftly, making big data look less daunting.

Making AI More Efficient (Faster Algorithms)

Time is of the essence, and Computational Number Theory continually seeks ways to trim down computation time. It streamlines AI tasks by optimizing algorithms to be quicker and less resource-heavy.
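A small example of trimming computation time is modular exponentiation by repeated squaring. The sketch below, with the illustrative name `mod_pow`, needs a number of multiplications proportional to the bit length of the exponent rather than to the exponent itself. Python's built-in three-argument `pow` does the same job.

```python
def mod_pow(base: int, exponent: int, modulus: int) -> int:
    """Compute (base ** exponent) % modulus by square-and-multiply."""
    if exponent < 0:
        raise ValueError("exponent must be non-negative")
    if modulus == 1:
        return 0
    result = 1
    base %= modulus
    while exponent > 0:
        if exponent & 1:                     # current lowest bit is set
            result = (result * base) % modulus
        base = (base * base) % modulus       # square for the next bit
        exponent >>= 1
    return result


# Agrees with the built-in on a large exponent.
print(mod_pow(7, 10**18, 1_000_003) == pow(7, 10**18, 1_000_003))  # True
```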

Core Concepts of Computational Number Theory in AI

The core concepts of computational number theory in AI are the following:

Prime Numbers: The Building Blocks

Like the atoms of the digital universe, prime numbers are the indivisible entities that hold immense significance in Computational Number Theory. They are fundamental in factoring and encryption algorithms.

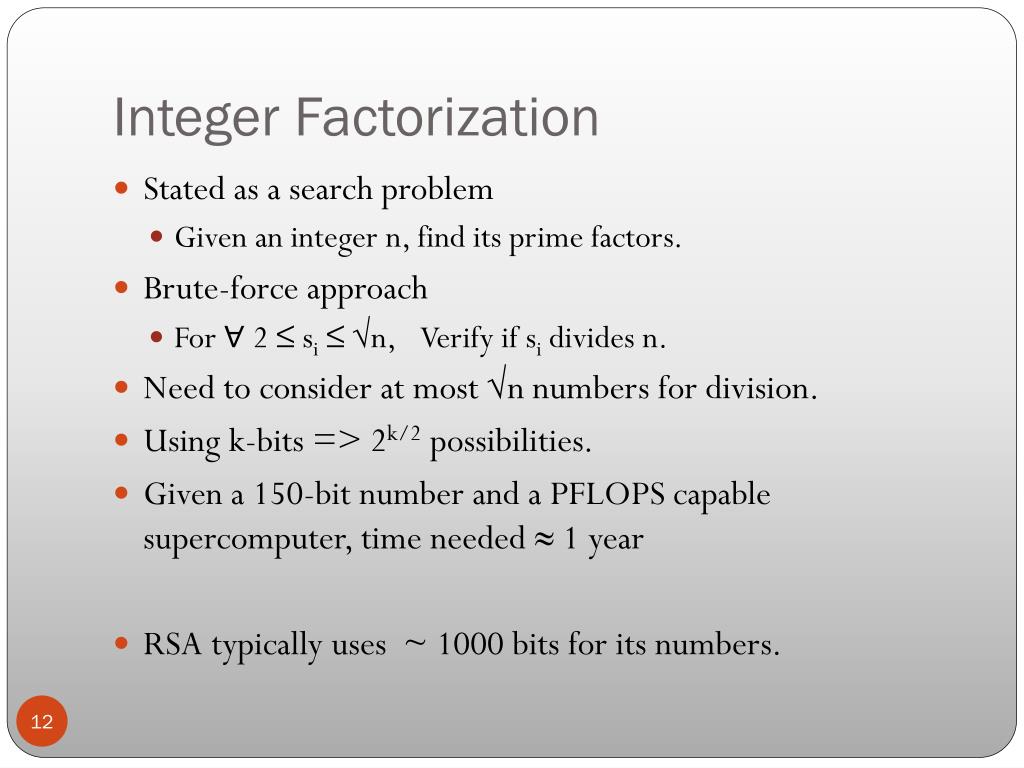
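For a feel of how primes are found in practice, here is a minimal sketch of the Sieve of Eratosthenes. The function name `primes_up_to` is an illustrative choice, and the approach suits small limits rather than the huge primes used in encryption.

```python
def primes_up_to(n: int) -> list[int]:
    """Return all primes <= n using the Sieve of Eratosthenes."""
    if n < 2:
        return []
    is_prime = [True] * (n + 1)
    is_prime[0] = is_prime[1] = False
    p = 2
    while p * p <= n:
        if is_prime[p]:
            # Cross out every multiple of p, starting at p * p.
            for multiple in range(p * p, n + 1, p):
                is_prime[multiple] = False
        p += 1
    return [i for i, flag in enumerate(is_prime) if flag]


print(primes_up_to(30))  # [2, 3, 5, 7, 11, 13, 17, 19, 23, 29]
```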

Integer Factorization: Breaking Down Numbers

Decomposing numbers into factors might seem basic, but it's a puzzle that gets trickier as numbers balloon. That difficulty is what cryptographic schemes such as RSA rely on, and it makes integer factorization a staple of computational complexity analyses.

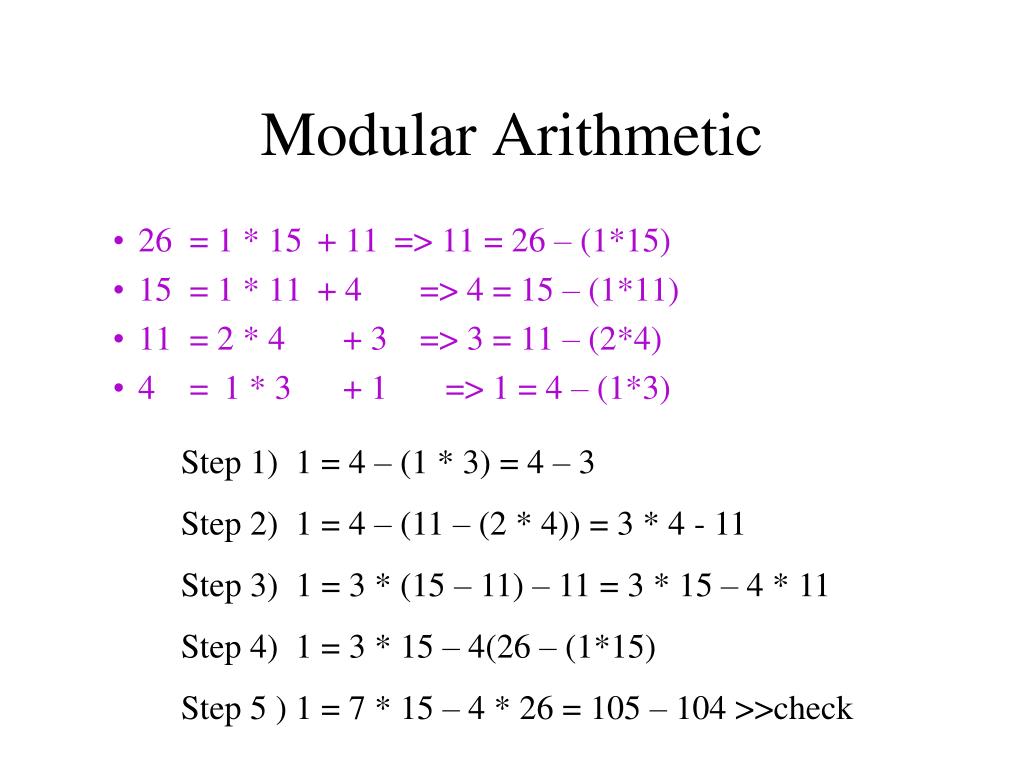
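The simplest way to break a number down is trial division, sketched below under the illustrative name `factorize`. It is fine for small inputs, and its running time grows with the square root of the number, which hints at why factoring large numbers gets so hard.

```python
def factorize(n: int) -> list[int]:
    """Return the prime factors of n (with repetition) by trial division."""
    if n < 2:
        raise ValueError("n must be at least 2")
    factors = []
    divisor = 2
    while divisor * divisor <= n:
        while n % divisor == 0:   # strip out every copy of this divisor
            factors.append(divisor)
            n //= divisor
        divisor += 1
    if n > 1:                     # whatever remains is itself prime
        factors.append(n)
    return factors


print(factorize(360))  # [2, 2, 2, 3, 3, 5]
```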

Modular Arithmetic: Clock-like Math

Modular arithmetic is math with a wraparound effect, akin to how clock hands cycle back after hitting the top. It's used in everything from hashing algorithms to error detection in data transmission.
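The wraparound idea fits in a few lines. The sketch below shows clock arithmetic, a hash-table bucket index, and a basic checksum. The helper names and the multiplier 31 are illustrative assumptions, and the checksum is far weaker than the codes used in real data transmission.

```python
def clock_add(hour: int, offset: int, modulus: int = 12) -> int:
    """Add hours on a clock face that wraps around at `modulus`."""
    return (hour + offset) % modulus


def bucket_index(key: str, num_buckets: int) -> int:
    """Map a string to a bucket using a simple polynomial hash."""
    h = 0
    for ch in key:
        h = (h * 31 + ord(ch)) % num_buckets
    return h


def checksum(data: bytes, modulus: int = 256) -> int:
    """Sum of all bytes, reduced modulo `modulus`."""
    return sum(data) % modulus


print(clock_add(9, 5))                       # 2 (five hours after 9 o'clock)
print(0 <= bucket_index("number", 16) < 16)  # True
print(checksum(b"abc") != checksum(b"abd"))  # True (the changed byte is detected)
```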

Applications of Computational Number Theory in AI

The applications of computational number theory in AI are the following:

Encryption: Keeping Your Data Safe

Encryption techniques secure data by scrambling it into indecipherable text, with Computational Number Theory playing the lead role as the scrambler and protector of information.
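As one illustration of number theory doing the scrambling, here is a toy version of RSA, a widely known public-key scheme. The tiny primes 61 and 53 and the exponent 17 are textbook assumptions chosen for readability. Real deployments use primes hundreds of digits long plus padding, and should rely on a vetted cryptography library.

```python
# Toy RSA (illustrative only, NOT secure).
p, q = 61, 53
n = p * q                 # public modulus: 3233
phi = (p - 1) * (q - 1)   # 3120
e = 17                    # public exponent, coprime to phi
d = pow(e, -1, phi)       # private exponent (modular inverse, Python 3.8+)


def encrypt(message: int) -> int:
    """Scramble an integer message smaller than n with the public key."""
    return pow(message, e, n)


def decrypt(ciphertext: int) -> int:
    """Recover the message with the private key."""
    return pow(ciphertext, d, n)


ciphertext = encrypt(65)
print(ciphertext)           # 2790
print(decrypt(ciphertext))  # 65
```

Anyone can encrypt with `e` and `n`, but undoing it without `d` requires factoring `n`, which is easy here and infeasible at real key sizes.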

Digital Signatures: Verifying Identities

In a digital world, signing off on something involves no ink. Digital signatures use number theory to confirm the authenticity of digital documents. They are also an integral part of legal and financial operations.
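A rough sketch of the idea, assuming a simplified RSA-style "hash then sign" flow: the signer raises a message digest to a private exponent, and anyone can check the result with the public exponent. The two Mersenne primes, the helper names, and the lack of padding are all simplifications for illustration, not a secure design.

```python
import hashlib

# Toy key built from two known Mersenne primes (NOT a secure choice).
P = 2**31 - 1
Q = 2**61 - 1
N = P * Q                    # public modulus
PHI = (P - 1) * (Q - 1)
E = 65537                    # public exponent
D = pow(E, -1, PHI)          # private exponent (Python 3.8+)


def digest_as_int(message: bytes) -> int:
    """SHA-256 digest of the message, reduced to an integer below N."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % N


def sign(message: bytes) -> int:
    """Produce a signature using the private exponent."""
    return pow(digest_as_int(message), D, N)


def verify(message: bytes, signature: int) -> bool:
    """Check a signature using only the public values E and N."""
    return pow(signature, E, N) == digest_as_int(message)


contract = b"Pay Alice 100 units"
signature = sign(contract)
print(verify(contract, signature))                # True
print(verify(b"Pay Alice 900 units", signature))  # False
```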

Machine Learning: Training Smarter AI

Intelligent pattern recognition and prediction lie at the heart of machine learning. Computational Number Theory empowers these AI systems to train more effectively, mapping out solutions in predictive models and learning frameworks.

Advanced Topics in Computational Number Theory for AI

Here are some topics in computational number theory for AI that go beyond the basics:

Elliptic Curve Cryptography: The Future of Encryption

By leveraging the properties of elliptic curves over finite fields, this approach delivers security comparable to older schemes such as RSA with much smaller keys, and it is well placed to fortify data against evolving threats.
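To show what "the properties of elliptic curves" look like in code, here is a minimal sketch of point addition and scalar multiplication on a tiny textbook curve, y^2 = x^3 + 2x + 2 over the integers modulo 17, with base point (5, 1). The curve, the names, and the secret values are illustrative assumptions. Production curves use primes of roughly 256 bits and hardened libraries.

```python
from typing import Optional, Tuple

Point = Optional[Tuple[int, int]]  # None stands for the point at infinity

P_MOD, A, B = 17, 2, 2             # toy curve: y^2 = x^3 + A*x + B (mod P_MOD)
G: Point = (5, 1)                  # base point on the curve


def point_add(p1: Point, p2: Point) -> Point:
    """Add two curve points using the chord-and-tangent rule."""
    if p1 is None:
        return p2
    if p2 is None:
        return p1
    x1, y1 = p1
    x2, y2 = p2
    if x1 == x2 and (y1 + y2) % P_MOD == 0:
        return None                # a point plus its mirror image
    if p1 == p2:                   # tangent slope for doubling
        slope = (3 * x1 * x1 + A) * pow((2 * y1) % P_MOD, -1, P_MOD)
    else:                          # chord slope for distinct points
        slope = (y2 - y1) * pow((x2 - x1) % P_MOD, -1, P_MOD)
    slope %= P_MOD
    x3 = (slope * slope - x1 - x2) % P_MOD
    y3 = (slope * (x1 - x3) - y1) % P_MOD
    return (x3, y3)


def scalar_mult(k: int, point: Point) -> Point:
    """Compute k * point with the double-and-add method."""
    result: Point = None
    addend = point
    while k > 0:
        if k & 1:
            result = point_add(result, addend)
        addend = point_add(addend, addend)
        k >>= 1
    return result


print(scalar_mult(2, G))  # (6, 3)
# Key agreement: both orders of multiplication give the same point.
print(scalar_mult(7, scalar_mult(11, G)) == scalar_mult(11, scalar_mult(7, G)))  # True
```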

Post-Quantum Cryptography: Preparing for the Unknown

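With quantum computing on the horizon, Computational Number Theory is pivoting to develop cryptographic methods that can withstand the power of quantum algorithms. Shor's algorithm, for example, could efficiently factor integers and compute discrete logarithms on a large enough quantum computer, which would break RSA and elliptic curve schemes.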

Number Theory and Machine Learning: A Powerful Marriage

Integrating Computational Number Theory with machine learning creates a hybrid powerhouse, capable of innovating across disciplines like finance, healthcare, and security.

Unlocking the Potential: Challenges and Future Directions

Like any field, computational number theory poses some challenges, and it's important to know where it is headed.

Making it Faster: The Quest for Efficient Algorithms

The race is on to devise algorithms that can keep up with growing computational demands. As AI evolves, number-theoretic algorithms must become more efficient to keep pace.

The Security Arms Race: Staying Ahead of Hackers

Cybercriminals never rest, and neither can Computational Number Theory. It must constantly evolve to patch vulnerabilities and protect data against threats both known and unforeseen.

Bridging the Gap: Bringing Theory to Real-World Applications

Translating theoretical math into practical solutions is an essential part of innovation in AI. Blending Computational Number Theory with real-world applications is the key to unlocking its true potential.

Frequently Asked Questions (FAQs)

What is computational learning theory in artificial intelligence?

Computational learning theory is the study of algorithms that learn and make predictions from data to improve AI's decision-making ability.

Is number theory useful for machine learning?

Absolutely! Number theory techniques can improve cryptographic algorithms at the heart of securing machine learning data and operations.

What is the main goal of computational learning theory?

The main goal is to understand the learning process and develop efficient algorithms for training artificial intelligence systems.

What are the 3 divisions of computational theory?

They are automata theory, computability theory, and complexity theory, each dealing with different computational capabilities and limitations.

Is computational learning theory the same as machine learning?

Not quite. Computational learning theory is a theoretical subfield studying machine learning's principles and algorithmic processes.