What is the Bag of Words Model?

In natural language processing (NLP), the Bag of Words Model stands out for its straightforward yet powerful approach to text analysis. This method transforms text into numerical data that algorithms can process. It does not give computers a human-like understanding of language; it gives them a structured, count-based representation to work with.

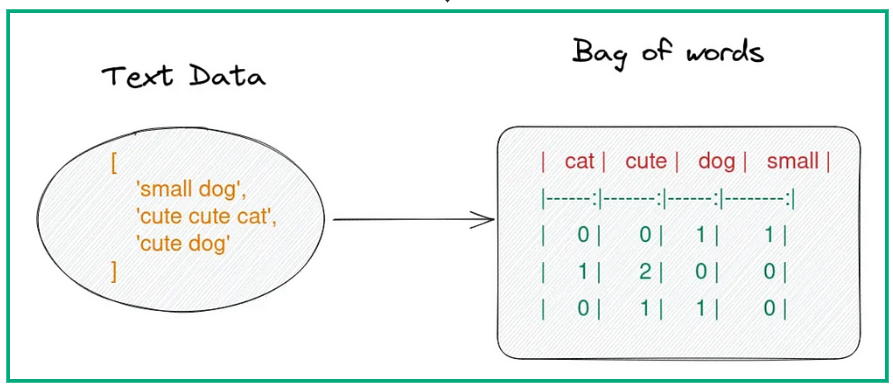

Core Concept: Representing Text as a Collection of Words

The essence of the Bag of Words Model lies in its simplicity. It considers the frequency of words within a document, ignoring grammar and word order. This model visualizes text as a bag filled with words, where each word's weight is the number of times it occurs.
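
A minimal sketch of this idea in Python, using the standard library's `collections.Counter`. The sample sentence is made up for illustration, and splitting on whitespace is a simplifying assumption:

```python
from collections import Counter

# Hypothetical sample document, chosen only for illustration.
document = "the cat sat on the mat and the dog sat too"

# Split on whitespace and count how often each word occurs.
bag = Counter(document.split())

print(bag["the"])  # 3
print(bag["sat"])  # 2
print(bag["cat"])  # 1
```

The resulting `bag` keeps the counts but has no record of where each word appeared in the sentence.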

Building a Bag of Words Model

Here are the steps for building a Bag of Words Model:

Step 1

Tokenization - Breaking Down Text into Individual Words

Tokenization slices the text into individual words or tokens. This crucial step prepares the raw text for quantitative analysis by separating words based on spaces and punctuation.

Step 2

Vocabulary Creation - Building Your Word Dictionary

The second step builds a dictionary from the tokenized corpus (a collection of texts). Each unique word forms an entry in this dictionary, setting the foundation for further analysis.

Step 3

Feature Extraction - Counting Word Occurrences

Lastly, the model counts how often each dictionary word appears in the text. These counts transform the text into a numerical format, ripe for various types of analysis.
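
The three steps above can be sketched in plain Python. This is an illustrative sketch rather than a reference implementation: the function names (`tokenize`, `build_vocabulary`, `vectorize`), the regular expression, and the two-sentence corpus are all assumptions made for the example. The code tokenizes before building the vocabulary, since unique words can only be collected once the text has been split.

```python
import re

def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())

def build_vocabulary(tokenized_docs):
    """Map each unique token to a column index, in sorted order."""
    unique_tokens = sorted({tok for doc in tokenized_docs for tok in doc})
    return {tok: idx for idx, tok in enumerate(unique_tokens)}

def vectorize(tokens, vocabulary):
    """Count how often each vocabulary word appears in one document."""
    vector = [0] * len(vocabulary)
    for tok in tokens:
        if tok in vocabulary:
            vector[vocabulary[tok]] += 1
    return vector

# Hypothetical two-document corpus.
corpus = [
    "The cat sat on the mat.",
    "The dog chased the cat.",
]
tokenized = [tokenize(doc) for doc in corpus]
vocabulary = build_vocabulary(tokenized)
vectors = [vectorize(tokens, vocabulary) for tokens in tokenized]

print(sorted(vocabulary))
# ['cat', 'chased', 'dog', 'mat', 'on', 'sat', 'the']
print(vectors)
# [[1, 0, 0, 1, 1, 1, 2], [1, 1, 1, 0, 0, 0, 2]]
```

Every document becomes a vector of the same length, one position per vocabulary word, which is what lets downstream algorithms compare documents directly.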

Features in a Bag of Words Model

The features in a Bag of Words Model are the following:

Word Frequency - How Many Times Each Word Shows Up

Word frequency is the bedrock of the Bag of Words Model. It quantifies the presence of words, offering insights into the text's focus.

Alternative Weighting Schemes: Beyond Simple Counts

While counting words is informative, alternative methods like TF-IDF provide a more nuanced view by considering word rarity across documents, enhancing the model’s descriptive power.

Using the Bag-of-Words Model

The uses of a Bag of Words Model are the following:

Text Classification: Sorting Documents into Categories

The Bag of Words Model shines in text classification. It gives algorithms numerical features with which to categorize documents based on their content, from spam detection to sentiment analysis.
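
As one possible illustration, the sketch below feeds word counts into a small multinomial Naive Bayes classifier with add-one smoothing. The choice of classifier is an assumption made for this example (the text does not prescribe one), and the four training messages and the `train` / `predict` helpers are hypothetical.

```python
import math
from collections import Counter, defaultdict

def train(labeled_docs):
    """Collect per-class word counts and per-class document counts."""
    word_counts = defaultdict(Counter)
    class_counts = Counter()
    for text, label in labeled_docs:
        word_counts[label].update(text.lower().split())
        class_counts[label] += 1
    vocabulary = {w for counts in word_counts.values() for w in counts}
    return word_counts, class_counts, vocabulary

def predict(text, word_counts, class_counts, vocabulary):
    """Return the class with the highest log-probability for the text."""
    total_docs = sum(class_counts.values())
    scores = {}
    for label in class_counts:
        total_words = sum(word_counts[label].values())
        score = math.log(class_counts[label] / total_docs)
        for word in text.lower().split():
            if word not in vocabulary:
                continue  # ignore words never seen during training
            # Add-one (Laplace) smoothing avoids taking log(0).
            numerator = word_counts[label][word] + 1
            denominator = total_words + len(vocabulary)
            score += math.log(numerator / denominator)
        scores[label] = score
    return max(scores, key=scores.get)

# Hypothetical toy training set.
training_data = [
    ("win free money now", "spam"),
    ("free prize claim now", "spam"),
    ("meeting agenda for monday", "ham"),
    ("lunch on monday", "ham"),
]
model = train(training_data)

print(predict("claim your free money", *model))  # spam
print(predict("agenda for lunch", *model))       # ham
```

The classifier never looks at word order; it only asks how often each word occurred in each class during training.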

Information Retrieval: Finding Relevant Information

In information retrieval, this model helps sift through vast datasets to find the documents that best match a query, which makes it a building block for search engines and recommendation systems.
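
One common way to score a match is cosine similarity between the query's bag of words and each document's. The sketch below assumes that measure (the text does not name one) and uses a hypothetical three-document collection.

```python
import math
from collections import Counter

def bag(text):
    """Turn text into a word-count mapping."""
    return Counter(text.lower().split())

def cosine_similarity(a, b):
    """Cosine of the angle between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

# Hypothetical document collection and query.
documents = [
    "how to train a neural network",
    "best pasta recipes for dinner",
    "neural network training tips",
]
query = "neural network"

ranked = sorted(
    documents,
    key=lambda d: cosine_similarity(bag(query), bag(d)),
    reverse=True,
)
print(ranked[0])  # neural network training tips
```

The shorter matching document ranks first because the query words make up a larger share of its vector.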

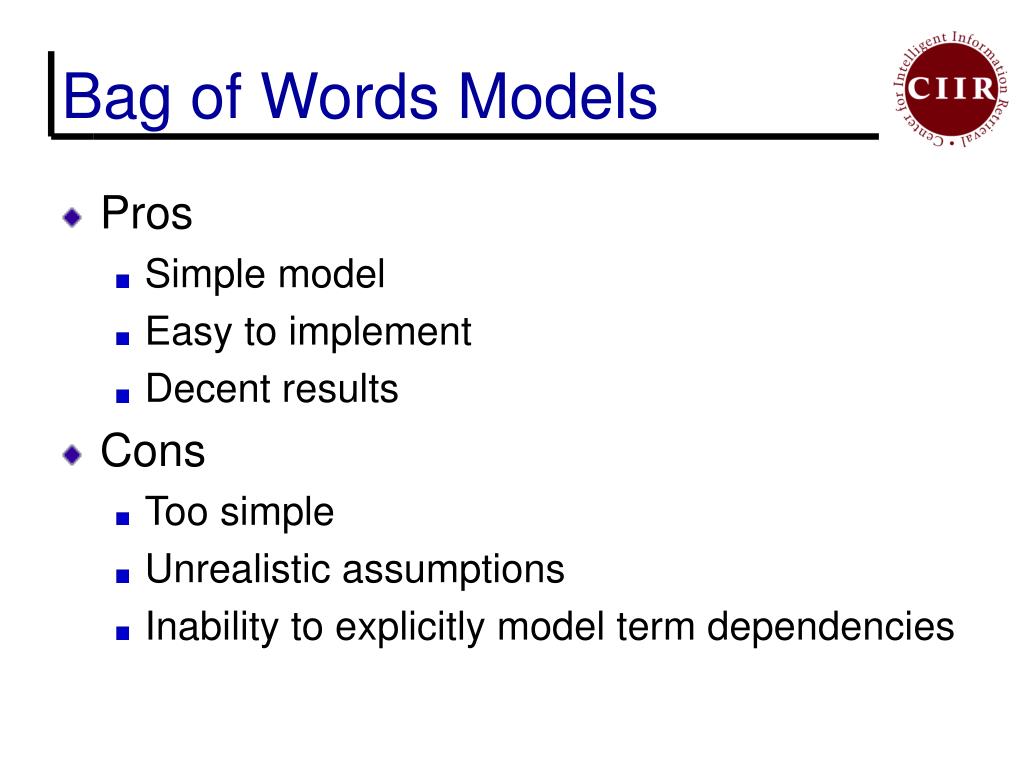

Advantages of the Bag of Words Model

The advantages of a Bag of Words Model are the following:

Simplicity: Easy to Understand and Implement

Its straightforward nature makes the Bag of Words Model in NLP especially accessible and easy to apply, even for those new to machine learning.

Efficiency: Works Well for Large Datasets

The model's simplicity translates into high efficiency: counting words is computationally cheap, which makes it suitable for processing large volumes of text quickly.

Interpretability: Provides Clear Insights into Word Usage

The transparency of the Bag of Words approach allows for an easy understanding of how decisions are made based on word frequencies.

Versatility: Applicable to Various NLP Tasks

From spam filtering to topic modeling, the model’s adaptability makes it a valuable tool across a broad spectrum of applications.

Limitations of the Bag of Words Model

The limitations of a Bag of Words Model are the following:

Ignores Word Order: Misses Context and Meaning

Focusing solely on word counts, the Bag of Words Model overlooks the syntactic nuances and sequential context of language, sometimes missing the text's subtleties.
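
A short illustration of this limitation, using two made-up sentences with opposite meanings:

```python
from collections import Counter

# Two hypothetical sentences: same words, opposite meaning.
first = "the dog bit the man"
second = "the man bit the dog"

# Their bags of words are identical, so the model cannot tell them apart.
print(Counter(first.split()) == Counter(second.split()))  # True
```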

Overlooking Relationships: Words in Isolation

By considering words in isolation, the Bag of Words Model can miss the relationships and dependencies between them, potentially ignoring the bigger picture.

Sensitivity to Preprocessing: Cleaning Matters

The results heavily depend on the preprocessing steps like stemming and lemmatization, making thorough cleaning essential.
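
The effect is visible even with very light cleaning. The sketch below compares the vocabulary from a raw whitespace split against one built after lowercasing and stripping punctuation; the sample text and the regular expression are assumptions made for the example.

```python
import re

# Hypothetical text with inconsistent casing and punctuation.
text = "The cat sat. the Cat sat, and the cat slept."

# No cleaning: split on whitespace only.
raw_vocab = set(text.split())

# Light cleaning: lowercase and keep only alphabetic runs.
clean_vocab = set(re.findall(r"[a-z]+", text.lower()))

print(len(raw_vocab), len(clean_vocab))  # 8 5
print(sorted(clean_vocab))
# ['and', 'cat', 'sat', 'slept', 'the']
```

Without cleaning, "The" and "the", or "sat." and "sat,", are counted as different words, which splits their counts across separate features.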

High Dimensionality: Large Feature Vectors for Complex Tasks

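Each unique word adds a dimension, so a large vocabulary produces long feature vectors in which most entries are zero. This high-dimensional, sparse data increases memory use and complicates the model's applicability to more complex analytical tasks.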
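
Even a tiny, hypothetical corpus shows how quickly the vectors fill up with zeros when documents cover different topics:

```python
# Hypothetical corpus of three unrelated sentences.
corpus = [
    "the cat sat on the mat",
    "stock prices fell sharply today",
    "the recipe needs flour and sugar",
]
tokenized = [doc.split() for doc in corpus]
vocabulary = sorted({tok for doc in tokenized for tok in doc})

# Dense count vectors: one slot per vocabulary word for every document.
dense = [[doc.count(word) for word in vocabulary] for doc in tokenized]

zero_entries = sum(row.count(0) for row in dense)
total_entries = len(dense) * len(vocabulary)

print(len(vocabulary))              # 15
print(zero_entries, total_entries)  # 29 45
```

Here 29 of the 45 stored values are zero. With a realistic vocabulary of tens of thousands of words the proportion is far higher, which is why practical implementations usually store only the non-zero counts.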

Beyond the Basic Bag: Advanced Techniques

Several techniques extend the basic Bag of Words Model:

TF-IDF: Weighting Words Based on Importance

TF-IDF (term frequency-inverse document frequency) scales down the impact of words that appear in many documents across the corpus, thereby highlighting the terms that are more distinctive of a given document.
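
A minimal sketch of one common variant, assuming raw counts for term frequency and log(N / df) for inverse document frequency, where N is the number of documents and df is the number of documents containing the word. Libraries often add smoothing or normalization, so their numbers will differ. The `tf_idf` helper and the corpus are hypothetical.

```python
import math
from collections import Counter

def tf_idf(corpus):
    """Return one {word: weight} dict per document.

    Uses raw counts for term frequency and log(N / df) for inverse
    document frequency; other variants add smoothing or normalization.
    """
    tokenized = [doc.lower().split() for doc in corpus]
    n_docs = len(tokenized)
    # Document frequency: number of documents that contain each word.
    df = Counter(word for doc in tokenized for word in set(doc))
    weights = []
    for doc in tokenized:
        counts = Counter(doc)
        weights.append(
            {w: c * math.log(n_docs / df[w]) for w, c in counts.items()}
        )
    return weights

# Hypothetical three-document corpus.
corpus = [
    "the cat sat on the mat",
    "the dog sat on the log",
    "the bird flew over the house",
]
weights = tf_idf(corpus)

print(weights[0]["the"])            # 0.0
print(round(weights[0]["cat"], 3))  # 1.099
```

"the" occurs in every document, so its weight drops to zero even though it is the most frequent word, while "cat", which occurs in only one document, receives the highest weight.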

N-grams: Capturing Word Sequences

Incorporating N-grams, sequences of N words, injects an awareness of word order and context, partially addressing one of the model's key limitations.
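
A short sketch of bigram (N = 2) extraction. The `ngrams` helper and the two sentences, which contain the same words in a different order, are made up for the example.

```python
def ngrams(tokens, n):
    """Return all contiguous n-word sequences as tuples."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

first = ngrams("the dog bit the man".split(), 2)
second = ngrams("the man bit the dog".split(), 2)

print(first)
# [('the', 'dog'), ('dog', 'bit'), ('bit', 'the'), ('the', 'man')]
print(set(first) == set(second))  # False
```

The single-word counts of the two sentences are identical, but their bigrams differ ("dog bit" versus "man bit"), so a bag of bigrams can tell them apart. The trade-off is a much larger vocabulary.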

Part-of-Speech (POS) Tagging: Considering Word Types

POS tagging categorizes words into their respective parts of speech, adding an extra layer of linguistic structure to the analysis.

Stemming and Lemmatization: Reducing Words to Their Root Form

These preprocessing steps streamline the vocabulary by consolidating different forms of a word into a single representation, improving the model’s efficiency.

The Bag of Words Model offers a gateway into text analysis, balancing simplicity with effectiveness. It lays the groundwork for interpreting text through a quantitative lens, albeit with the limitations noted above.

Looking Ahead: Exploring Advanced Text Processing Techniques

As NLP advances, combining the Bag of Words Model with more sophisticated methods like word embeddings and deep learning models promises to overcome its constraints, paving the way for richer, more nuanced text analysis.

Frequently Asked Questions (FAQs)

What is the bag of words generative model?

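The bag of words generative model treats a document as an unordered collection of words, each assumed to be drawn independently from a probability distribution over the vocabulary. This assumption underlies simple probabilistic models such as unigram language models and multinomial Naive Bayes.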

What is the bag-of-words model in sentiment analysis?

In sentiment analysis, the bag-of-words model supplies word frequencies as features, and a classifier trained on those features predicts the sentiment conveyed in the text.

What are the disadvantages of the bag of words?

Bag of words ignores word order, grammar, and the semantic relationships between words, which can reduce model accuracy and limit how well context is captured.

What is the difference between TF-IDF and bag of words?

While both convert text into vector forms, TF-IDF weights term frequency against how common a word is across all documents, unlike the simpler Bag of Words model.

What is the bag of words in AI?

In AI, the bag of words models text data by converting it into fixed-length numerical vectors, ignoring syntax and order of words.