Introduction

Large Language Models (LLMs) excel at understanding and generating human language.

They are the driving forces behind several innovative applications like chatbots, virtual assistants, and much more. However, the cost of training these models might be one of the biggest secrets in tech.

The numbers are staggering, but the reasons behind them are even more surprising. Few people realize how much strategy, optimization, and hidden costs define this process.

So, have you ever wondered why training large language models is so expensive? This guide unpacks the key factors shaping these costs and why they matter for the future of AI. Let us get going.

What is the Cost of Training LLM Models?

Training large language models requires a huge financial investment because these models have vast numbers of parameters and demand enormous computational power.

According to Epoch AI, the technical cost of creating GPT-4 was between $41 million and $78 million. Let us explore this in detail below.

Cost to Train LLM with Cloud Servers

Cloud services are considered to be one of the easiest ways to train LLM models. They are very flexible and can meet the fluctuating needs of AI training cycles.

A good example is Amazon SageMaker, which supports training LLMs with billions of parameters.

However, the cost of training an LLM is so high that most users prefer not to train a model from scratch. Instead, they opt for pre-trained models like ChatGPT, Llama 2, etc. There are two ways to work with LLMs through cloud services. Let us take a look.

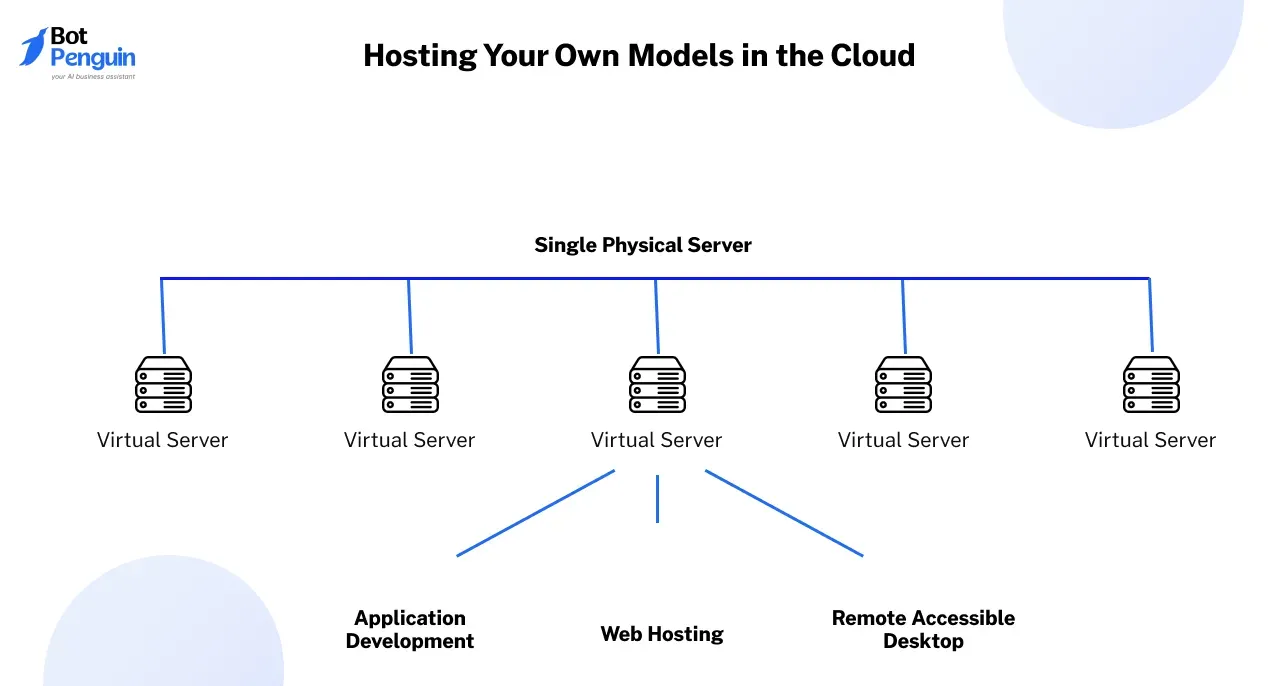

Hosting Your Own Models in the Cloud

In this method, businesses manage the complete machine learning lifecycle themselves, including data storage, compute, deployment, and ongoing management.

However, when it comes to cost, you should consider not only GPUs but also virtual CPUs, memory, and storage.

Each of these components adds to the bill. Hence, you have to optimize your resources effectively to manage costs.

Cloud providers charge users based on factors like compute time, memory allocation, and data storage or transfer, all of which add to the cost of training large language models.
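As a rough illustration of how these line items add up, here is a minimal sketch of an hourly-rate estimate. The `HourlyRates` values and the `estimate_training_cost` helper are hypothetical placeholders, not any provider's actual pricing or API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HourlyRates:
    """Hypothetical hourly prices in USD; real provider pricing will differ."""
    gpu: float = 2.50           # per GPU
    vcpu: float = 0.04          # per virtual CPU
    memory_gb: float = 0.005    # per GB of RAM
    storage_gb: float = 0.0001  # per GB of attached storage


def estimate_training_cost(hours, gpus, vcpus, memory_gb, storage_gb, rates=HourlyRates()):
    """Return a per-component cost breakdown plus the total for one training run."""
    breakdown = {
        "gpu": hours * gpus * rates.gpu,
        "vcpu": hours * vcpus * rates.vcpu,
        "memory": hours * memory_gb * rates.memory_gb,
        "storage": hours * storage_gb * rates.storage_gb,
    }
    breakdown["total"] = sum(breakdown.values())
    return breakdown


# Example run: 100 hours on 8 GPUs, 64 vCPUs, 512 GB RAM, 2,000 GB storage.
for component, cost in estimate_training_cost(100, 8, 64, 512, 2000).items():
    print(f"{component:>8}: ${cost:,.2f}")
```

Even with made-up rates, a breakdown like this makes it easy to see which component dominates the bill and where optimization effort is best spent.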

Pay Per Token Model

With large language model training costs rising, it is worth exploring the pay-per-token model. In this method, LLMs that have already been trained on huge datasets are made available to the public through APIs.

This allows developers and businesses to use LLMs without worrying about the needs and challenges of training these expensive models themselves.

Also, instead of bearing the upfront costs of training and infrastructure, users pay a fee based on the number of tokens the LLM processes when performing tasks like text generation or translation.
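The arithmetic behind usage-based billing is simple enough to sketch. The example below assumes input and output tokens are priced separately per million tokens; the prices, the workload, and the `pay_per_token_cost` helper are all illustrative assumptions rather than any vendor's real rates.

```python
def pay_per_token_cost(input_tokens, output_tokens,
                       input_price_per_million, output_price_per_million):
    """Return the USD cost of a token volume under per-token pricing."""
    input_cost = input_tokens / 1_000_000 * input_price_per_million
    output_cost = output_tokens / 1_000_000 * output_price_per_million
    return input_cost + output_cost


# Hypothetical workload: 10,000 requests averaging 500 input and 200 output tokens.
requests = 10_000
monthly_cost = pay_per_token_cost(
    input_tokens=requests * 500,
    output_tokens=requests * 200,
    input_price_per_million=1.00,   # assumed price, USD per million input tokens
    output_price_per_million=3.00,  # assumed price, USD per million output tokens
)
print(f"Estimated monthly API cost: ${monthly_cost:,.2f}")
```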

General Cost Ranges for Small, Medium, and Large LLMs

Training small LLMs (with a few hundred million parameters) can cost tens of thousands of dollars.

For example, OpenAI's GPT-2 reportedly cost around $50,000 to train. For medium-sized models (with several billion parameters), costs can rise to hundreds of thousands of dollars. These models need more computing resources and storage.

Large models like GPT-3 (with 175 billion parameters) cost millions of dollars to train. The cost to train an LLM at this scale is driven by the sheer number of compute hours and the size of the data involved. Training GPT-3 cost OpenAI an estimated $4.6 million.
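To see how compute hours translate into dollars, the sketch below uses a common rule of thumb from the scaling-law literature: training a dense transformer takes roughly 6 floating-point operations per parameter per training token. The 300 billion token count is the figure reported for GPT-3; the sustained throughput per GPU and the hourly price are illustrative assumptions, not measured values, and `training_compute_cost` is a hypothetical helper.

```python
def training_compute_cost(params, tokens, sustained_flops_per_gpu, price_per_gpu_hour):
    """Estimate GPU-hours and USD cost from the ~6 * N * D FLOPs rule of thumb."""
    total_flops = 6 * params * tokens
    gpu_hours = total_flops / sustained_flops_per_gpu / 3600
    return gpu_hours, gpu_hours * price_per_gpu_hour


gpu_hours, cost = training_compute_cost(
    params=175e9,                   # GPT-3 parameter count
    tokens=300e9,                   # training tokens reported for GPT-3
    sustained_flops_per_gpu=28e12,  # assumed sustained throughput per GPU
    price_per_gpu_hour=1.50,        # assumed cloud price in USD
)
print(f"{gpu_hours:,.0f} GPU-hours, about ${cost / 1e6:.1f} million")
```

Under these assumptions the estimate lands in the same range as the $4.6 million figure quoted above. Changing either assumption shifts the result proportionally, which is why hardware efficiency and pricing matter so much at this scale.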

How to Reduce the Cost of Training Large Language Models?

While LLM training costs are unavoidable, there are several ways to reduce them. Let us consider a few of them below.

Model Optimization Techniques

Start by choosing a model architecture that can handle the complexity of your task without compromising performance. Then feed the model relevant, high-quality training data, which shortens training time and reduces compute costs.

Also, use techniques like dataset distillation to reduce data volume while retaining essential information. Smaller datasets require fewer compute cycles, helping decrease the large language model training cost.

You can also implement mixed precision training, which reduces computational requirements by using lower-precision number formats for most operations, typically with little or no loss in model accuracy.
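To make "lower-precision formats" concrete, the following sketch uses Python's standard `struct` module to compare 32-bit and 16-bit floating-point encodings. It only illustrates the storage and rounding trade-off; it is not a mixed precision training loop, which in practice is provided by the training framework. The `roundtrip` helper is a name introduced here for illustration.

```python
import struct


def roundtrip(value, fmt):
    """Encode a float in the given struct format and decode it again."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]


FP32, FP16 = "<f", "<e"  # IEEE 754 single and half precision

for value in (0.1, 3.14159, 1234.5678):
    half = roundtrip(value, FP16)
    rel_error = abs(half - value) / value
    print(f"{value}: fp16 -> {half} (relative error {rel_error:.1e})")

print("bytes per value:", struct.calcsize(FP32), "vs", struct.calcsize(FP16))
```

Half precision stores each value in half the bytes at the price of a small rounding error, which is the trade-off that mixed precision training exploits.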

Distributed training divides the workload across multiple GPUs or TPUs, which shortens training time and can lower costs when the hardware is used efficiently.

Hardware Optimizations

Choose hardware that meets your training needs while balancing performance and cost. Newer GPUs like the NVIDIA H100 outperform previous generations.

Keep an eye on how resources are utilized during training. Techniques like gradient accumulation let you train with a larger effective batch size on limited GPU memory, helping you get more out of each GPU and keep costs down.
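Gradient accumulation works by processing several small micro-batches, summing their gradients, and applying a single weight update as if one large batch had been used. The toy sketch below demonstrates the idea on a one-parameter model in plain Python; the model, dataset, and function names are made up for illustration, and a real training loop would rely on a framework's automatic differentiation instead.

```python
def mse_gradient(w, batch):
    """Gradient of mean squared error for the one-parameter model y = w * x."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


def accumulated_gradient(w, data, micro_batch_size):
    """Accumulate micro-batch gradients into one full-batch gradient."""
    total = 0.0
    for start in range(0, len(data), micro_batch_size):
        micro = data[start:start + micro_batch_size]
        # Weight each micro-batch by its share of the full batch.
        total += mse_gradient(w, micro) * len(micro) / len(data)
    return total


data = [(x, 2.0 * x + 1.0) for x in range(8)]  # toy dataset
w = 0.5
full = mse_gradient(w, data)
accumulated = accumulated_gradient(w, data, micro_batch_size=2)
print(f"full batch: {full}, accumulated: {accumulated}")
```

Both values agree, yet the accumulated version only ever holds two samples in memory at a time. That is what allows a large effective batch size on a GPU with limited memory.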

Compare cloud service providers and their lower-cost LLM hosting options. For example, if your training schedule is unpredictable, you can opt for on-demand pricing, where you pay only for the resources you use, thereby reducing the cost of training large language models.

Meanwhile, if you have a clear training schedule, you can go for reserved instances, where you commit to a specific amount of resources for a longer period in exchange for a lower rate. This can lower the overall cost of training an LLM in the long run.
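The choice between on-demand and reserved pricing comes down to utilization. Here is a minimal sketch of that comparison, assuming a reservation is billed in full whether or not the hours are used; the rates, the commitment size, and the `cheaper_option` helper are hypothetical.

```python
def cheaper_option(hours_used, on_demand_rate, reserved_rate, committed_hours):
    """Compare pay-as-you-go cost with a reservation billed for all committed hours."""
    on_demand_cost = hours_used * on_demand_rate
    # Assumption: the commitment is paid in full even if some hours go unused,
    # and any extra hours are billed at the same reserved rate.
    reserved_cost = max(hours_used, committed_hours) * reserved_rate
    choice = "reserved" if reserved_cost < on_demand_cost else "on-demand"
    return choice, on_demand_cost, reserved_cost


# Assumed rates: $4.00/hour on demand, $2.50/hour reserved, 720 committed hours.
for hours in (200, 450, 600):
    choice, on_demand, reserved = cheaper_option(hours, 4.00, 2.50, 720)
    print(f"{hours} h: on-demand ${on_demand:,.0f}, reserved ${reserved:,.0f} -> {choice}")
```

With these example rates the reservation only pays off once usage exceeds 62.5% of the committed hours, so a predictable schedule is what makes it worthwhile.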

Training Configuration Optimizations

Experiment with different learning rates and other training hyperparameters to find the configuration that balances training speed and accuracy, ultimately optimizing the LLM training cost.
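A small experiment shows why the learning rate matters so much for cost. The sketch below runs plain gradient descent on a toy quadratic and counts how many steps each candidate learning rate needs; the function, the candidate values, and the step budget are all illustrative assumptions, and real LLM tuning is far more expensive per trial.

```python
def steps_to_converge(learning_rate, target=3.0, tolerance=1e-3, max_steps=1000):
    """Run gradient descent on f(w) = (w - target)^2 and count steps to convergence."""
    w = 0.0
    for step in range(1, max_steps + 1):
        w -= learning_rate * 2 * (w - target)  # gradient of (w - target)^2
        if abs(w - target) < tolerance:
            return step
    return None  # did not converge within the step budget


candidates = [0.01, 0.1, 0.5, 1.1]
results = {lr: steps_to_converge(lr) for lr in candidates}
converged = {lr: steps for lr, steps in results.items() if steps is not None}
best_lr = min(converged, key=converged.get)
print(results, "best:", best_lr)
```

A rate that is too small wastes compute on extra steps, while one that is too large never converges at all. Every step saved is compute you do not pay for.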

Use techniques such as early stopping, which monitor training progress and halt it once the required performance level is reached. This helps to avoid unnecessary resource consumption.
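One common way to automate this is patience-based early stopping, which halts training when the validation loss has stopped improving for a set number of checks. This is one variant among several (stopping at a fixed target metric is another), and the `EarlyStopping` class and loss values below are a hypothetical sketch rather than any framework's API.

```python
class EarlyStopping:
    """Signal a stop when validation loss has not improved for `patience` checks."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = float("inf")
        self.checks_without_improvement = 0

    def should_stop(self, validation_loss):
        if validation_loss < self.best_loss - self.min_delta:
            self.best_loss = validation_loss
            self.checks_without_improvement = 0
        else:
            self.checks_without_improvement += 1
        return self.checks_without_improvement >= self.patience


# Made-up validation losses standing in for real evaluation results.
losses = [0.90, 0.70, 0.60, 0.61, 0.60, 0.62, 0.59]
stopper = EarlyStopping(patience=3)
for epoch, loss in enumerate(losses, start=1):
    if stopper.should_stop(loss):
        print(f"Stopping at epoch {epoch}; best loss {stopper.best_loss}")
        break
```

The `patience` setting is a trade-off: a low value saves more compute but risks stopping just before a late improvement, as the final loss in this example shows.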

Also, save the model state regularly during training, a practice known as checkpointing. If you encounter interruptions or hardware failures, you can resume training from the most recent saved state instead of starting over. This can save time and resources, and lower the cost of training an LLM.
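The save-and-resume pattern can be sketched with the standard library alone. In the example below, a single number stands in for the model state and a simulated failure interrupts the first run; the file layout and the `save_checkpoint`, `load_checkpoint`, and `train` helpers are hypothetical. Real frameworks save model weights and optimizer state with their own serialization utilities.

```python
import json
import os
import tempfile


def save_checkpoint(path, step, state):
    """Write the checkpoint atomically so a crash cannot leave a half-written file."""
    tmp_path = path + ".tmp"
    with open(tmp_path, "w") as f:
        json.dump({"step": step, "state": state}, f)
    os.replace(tmp_path, path)


def load_checkpoint(path):
    """Return (step, state) from the checkpoint, or a fresh start if none exists."""
    if not os.path.exists(path):
        return 0, {"weight": 0.0}
    with open(path) as f:
        checkpoint = json.load(f)
    return checkpoint["step"], checkpoint["state"]


def train(path, total_steps, checkpoint_every=10, fail_at=None):
    step, state = load_checkpoint(path)
    while step < total_steps:
        if fail_at is not None and step == fail_at:
            raise RuntimeError("simulated hardware failure")
        state["weight"] += 0.1  # stand-in for a real training step
        step += 1
        if step % checkpoint_every == 0:
            save_checkpoint(path, step, state)
    return step, state


checkpoint_path = os.path.join(tempfile.mkdtemp(), "checkpoint.json")
try:
    train(checkpoint_path, total_steps=50, fail_at=25)
except RuntimeError:
    pass  # the first run crashes partway through
resumed_from, _ = load_checkpoint(checkpoint_path)
final_step, _ = train(checkpoint_path, total_steps=50)
print(f"Resumed from step {resumed_from}, finished at step {final_step}")
```

Only the work done since the last checkpoint is lost, so the checkpoint interval sets an upper bound on how much compute a failure can waste.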

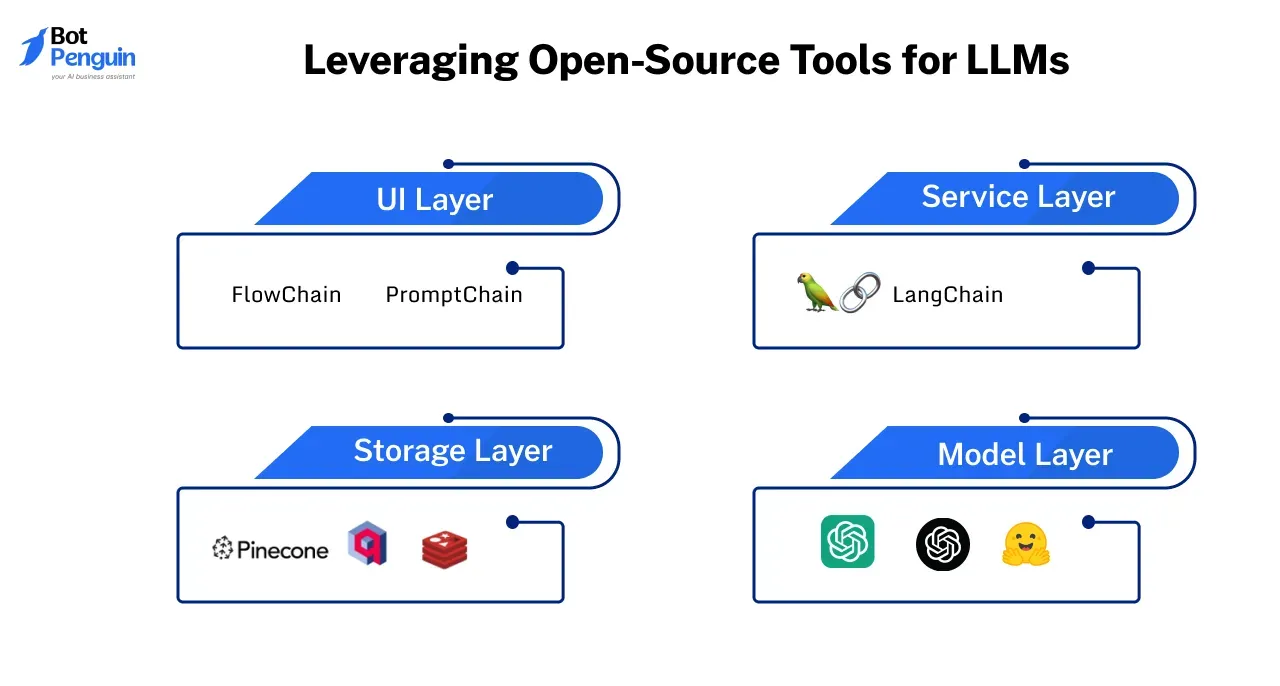

Leveraging Open-Source Tools for LLMs

Open-source frameworks provide solutions for training LLMs at a reduced cost.

Tools like Hugging Face and PyTorch are widely used for their efficiency and community support. They offer pre-trained models, training scripts, and optimization features at no additional cost.

Also, developers worldwide constantly update these open-source tools. These updates improve performance and reduce the cost of training LLMs, allowing users to focus resources on other areas.

Choosing the Right Hosting Options for LLMs

When you choose the right hosting options for LLMs, you can reduce both training and post-deployment costs. Here are some of the key factors to consider.

Compare Pricing Options

When selecting a cloud provider, compare LLM hosting costs, GPU availability, and scaling options.

Platforms like AWS, Azure, and Google Cloud offer tools to estimate the LLM hosting cost for specific workloads.

Use Cost Calculators

Cloud providers often provide cost calculators to help users make informed decisions about LLM hosting cost.

These tools highlight potential savings from optimizing resource usage and help ensure efficient spending on LLM training and deployment.

Examining Real-World Examples and Lessons Learned

Understanding the cost of training large language models requires examining real-world examples.

These models represent significant investments, offering insights into how organizations allocate resources and manage expenses. Here’s a breakdown of notable LLMs and the lessons learned from their development.

Cost Breakdowns of Training Well-Known LLMs: GPT-3 and LLaMA

OpenAI’s GPT-3, with 175 billion parameters, was one of the most advanced LLMs at the time of its release. Training this model reportedly cost around $4.6 million, a widely cited estimate based mainly on the cloud compute required.

The use of advanced GPUs like NVIDIA’s V100 played a key role, along with cloud services to handle the large-scale computing needs. The LLM training cost for GPT-3 reflects its reliance on massive datasets and extended training durations.

Meta’s LLaMA models focus on efficiency by offering comparable performance to larger models while requiring fewer parameters.

While exact figures for LLaMA’s training costs are not publicly available, the focus on smaller-scale, optimized training likely kept expenses lower than GPT-3’s.

This demonstrates how strategic optimizations can reduce the cost of training large language models without compromising effectiveness.

Lessons Learned From Computing Requirements

Both examples highlight how compute-intensive tasks dominate the large language model training cost.

Cloud platforms and specialized hardware account for a significant share of expenses, influencing decisions around the LLM hosting cost during deployment.

Lessons Learned From Organizations

OpenAI’s use of mixed-precision training for GPT-3 showcases the importance of resource efficiency.

This approach minimized computational demands, reducing the cost to train the LLM without impacting performance. Companies looking to train their own models can adopt similar techniques to save costs.

Meta’s focus on smaller, optimized models like LLaMA highlights the role of data in managing costs. High-quality, curated datasets allowed the company to achieve strong performance with fewer parameters, lowering the cost of training an LLM.

While the lessons above are about reducing the cost of training large language models, another factor that you have to consider is the LLM hosting cost after training. Let us explore this further.

Balancing Hosting Costs and Leveraging Collaborations

After training, hosting large models becomes a significant expense.

Companies like OpenAI offer pay-per-token pricing for GPT-3, helping users manage the LLM hosting cost for inference. Businesses deploying LLMs should factor hosting expenses into their budgets to ensure sustainability.

Organizations like Hugging Face have demonstrated the value of open-source collaborations. By sharing pre-trained models and frameworks, they reduce barriers for smaller companies to train and deploy LLMs. This reduces the cost of training LLMs and encourages community-driven innovation.

Conclusion

Training large language models is a monumental task requiring significant investment in computing resources, data, energy, and expertise.

Understanding the LLM training cost is crucial for organizations aiming to leverage AI effectively.

By optimizing resources, leveraging open-source tools, and selecting cost-efficient hosting options, businesses can reduce expenses while maintaining performance. Real-world examples like GPT-3 and LLaMA demonstrate the importance of strategy in balancing costs and scalability.

Platforms like BotPenguin make it easy for businesses to use AI without needing a lot of expensive infrastructure. By using cloud-based services, BotPenguin ensures businesses don’t need to invest in heavy hardware or complex setups.

It also supports integration with various tools, making it a flexible and affordable option for businesses looking to use AI-powered chatbots. With such solutions, businesses can stay competitive and manage costs effectively in today’s digital world.

Also, as AI continues to evolve, smarter approaches to training and hosting will be essential for sustainable innovation in this rapidly advancing field.

Frequently Asked Questions (FAQs)

What is the cost of training LLM models?

Training large models like GPT-3 requires millions of dollars, while smaller-scale LLMs or fine-tuning pre-trained models can be done with a much smaller budget.

Open-source tools and frameworks like Hugging Face and PyTorch make it possible to experiment with LLMs cost-effectively, even with limited resources.

What are the affordable ways to experiment with LLMs?

The most affordable approach is using pre-trained models and fine-tuning them for specific tasks. Open-source platforms provide access to these models, reducing the need for extensive training.

Cloud services with pay-as-you-go pricing also allow experimentation without large upfront investments. For even lower costs, consider using lightweight models like DistilBERT.

How does LLM hosting cost differ for training and inference?

Training costs are typically much higher due to the need for prolonged computing power, large datasets, and specialized hardware.

Inference costs, however, depend on how frequently the model is used. Hosting your model involves infrastructure expenses, while pay-per-token services allow for predictable, usage-based pricing, often more economical for occasional use.

What is the difference between hosting your own model and pay-per-token?

Hosting your own model offers greater control over data, security, and long-term costs. It’s ideal for organizations with frequent usage and sensitive data.

On the other hand, pay-per-token services eliminate infrastructure management, making them a flexible option for businesses with variable or lower usage needs.
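A quick break-even calculation can help with this decision. The sketch below assumes self-hosting has a fixed monthly cost regardless of usage and that the API charges a single blended price per million tokens; both figures and the `break_even_tokens` helper are hypothetical.

```python
def break_even_tokens(monthly_hosting_cost, price_per_million_tokens):
    """Monthly token volume above which a fixed-cost self-hosted deployment is cheaper."""
    return monthly_hosting_cost / price_per_million_tokens * 1_000_000


threshold = break_even_tokens(
    monthly_hosting_cost=3_000.0,  # assumed fixed cost of self-hosting, USD per month
    price_per_million_tokens=2.0,  # assumed blended API price, USD
)
print(f"Break-even at about {threshold:,.0f} tokens per month")
```

Below the threshold, pay-per-token is the cheaper option; above it, the fixed hosting cost is spread over enough usage to win out.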

What does model size mean in LLMs?

Model size refers to the number of parameters in an LLM. More parameters can improve performance, but they also require more memory and computational power.
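The link between parameter count and memory is straightforward arithmetic: each parameter takes a fixed number of bytes depending on the number format. The sketch below estimates the memory needed just to hold the weights; the `weight_memory_gb` helper and the example model sizes are illustrative, and training needs considerably more memory for gradients, optimizer state, and activations.

```python
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params, dtype="fp16"):
    """Memory needed just to hold the weights, in gigabytes (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


for name, params in [("7B model", 7e9), ("175B model", 175e9)]:
    sizes = {dtype: weight_memory_gb(params, dtype) for dtype in BYTES_PER_PARAM}
    print(name, sizes)
```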