Introduction

Large Language Models (LLMs) have already gained prominence across various industries, transforming the way businesses use AI.

However, developers are constantly seeking innovative methods to enhance the performance of these LLMs. One strategy that assists them in this process is Retrieval-Augmented Generation (RAG), which improves accuracy by incorporating relevant external data.

This ensures that LLMs generate more precise and contextually aligned responses tailored to specific business needs.

That said, not all LLMs are created equal. Some models underperform while others excel in unexpected ways.

This isn’t about picking the most popular model or the one with the biggest budget behind it. It is about choosing the right tool for the job, specifically, for Retrieval-Augmented Generation (RAG).

In this guide, you will learn about the best LLMs for RAG implementations and why they matter. Let us get started.

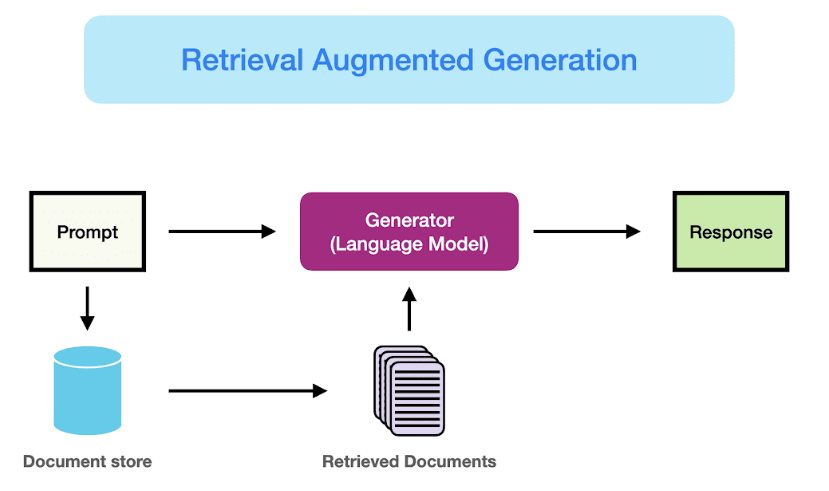

What is Retrieval-Augmented Generation (RAG)?

RAG is a method that combines retrieval-based systems with generative AI. The retrieval system searches and fetches relevant information, while the generative model uses this data to produce coherent, contextual outputs.

Retrieval sources can include databases, embeddings (vector stores), or live web search results. These sources ensure that responses are accurate and up to date, making RAG especially useful for real-world applications that require precise and dynamic information retrieval.

How Does RAG Combine Retrieval Systems and Generative AI?

The retrieval system acts as a knowledge base, providing facts or context. The generative model takes it further by crafting human-like responses based on the retrieved data.

For instance, when asked a specific question, the retrieval component finds relevant documents, and the AI generates a clear, nuanced reply.
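The retrieve-then-generate flow described above can be sketched in a few lines of Python. This is a minimal illustration, not a production pipeline: it assumes a toy bag-of-words similarity in place of real embeddings, the function names (`retrieve`, `build_prompt`) and the sample documents are hypothetical, and the final call to an LLM is left out so that the example runs on its own.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Retrieval step: return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Augmentation step: combine retrieved context and the question into one prompt."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only the context below.\n\nContext:\n{joined}\n\nQuestion: {query}"


# Hypothetical knowledge base and question, for illustration only.
docs = [
    "Returns are accepted within 30 days of delivery.",
    "Standard shipping takes three to five business days.",
    "Gift cards cannot be exchanged for cash.",
]
question = "What is the returns window after delivery?"
top = retrieve(question, docs, k=1)
prompt = build_prompt(question, top)
print(prompt)  # this prompt is what the generative model would receive
```

In a real system, the similarity function would typically be replaced by an embedding model and a vector store, and `prompt` would be passed to whichever LLM you choose.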

Why Does the Quality of the LLM Matter?

Not all models can handle RAG workflows equally. The best open source LLM for RAG ensures seamless integration, factual accuracy, and high-quality outputs.

Poor model performance can lead to irrelevant or incorrect responses, which defeats the purpose of RAG entirely. Picking the best LLM models for RAG is crucial for delivering reliable results.

Hence, choosing the best LLM for RAG ensures that retrieval-augmented systems generate precise, contextually aware, and high-quality responses, making them invaluable for businesses relying on AI-driven insights.

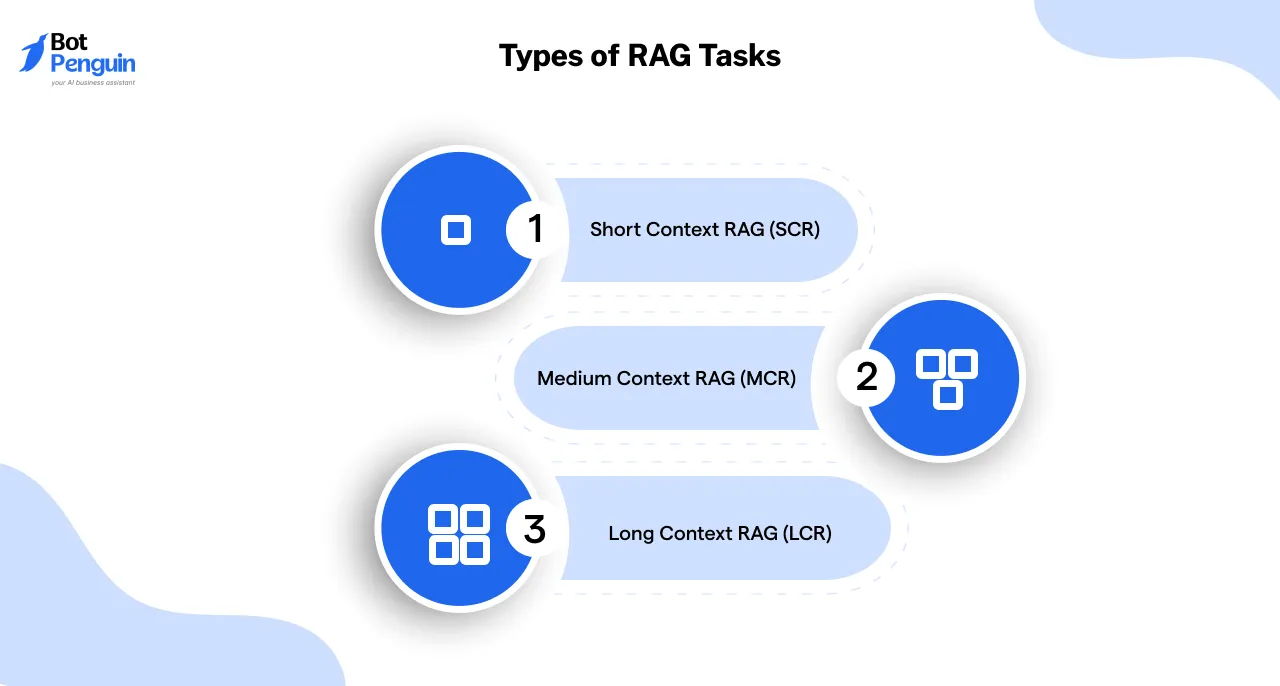

Types of RAG Tasks

Choosing the best LLM for RAG depends heavily on the type of RAG task you are tackling. These tasks are classified based on the context length they manage.

Let us break down the main categories of RAG tasks and how they align with specific use cases.

Short Context RAG (SCR)

SCR covers contexts of fewer than 5,000 tokens, roughly the length of a short article or a brief report. It is most appropriate for tasks that involve brief, direct queries.

Think FAQ systems, customer support bots, or any application that requires quick, concise answers. The primary focus here is speed and precision. An LLM optimized for SCR should excel in delivering fast responses without compromising accuracy.

Choosing the best LLM for RAG ensures high performance, while opting for the best open source LLM for RAG can offer cost-effective and customizable solutions for specific needs.

Medium Context RAG (MCR)

MCR can handle contexts between 5,000 and 25,000 tokens, comparable to analyzing a detailed research paper or an extensive report. It is suited for tasks like multi-turn dialogues in chatbots or dynamic conversation systems.

These scenarios require balancing retrieval precision with high-quality text generation. Maintaining conversational coherence across multiple exchanges is key.

Selecting the best LLM for RAG enhances accuracy and contextual understanding, while choosing the best LLM model for RAG ensures seamless transitions between questions and answers, ultimately improving the user experience.

Long Context RAG (LCR)

LCR can handle extensive contexts ranging from 40,000 to 128,000 tokens, making it suitable for tasks like document summarization, research assistance, and complex data retrieval.

With the ability to process an entire comprehensive report, thesis, or large knowledge base, it ensures that information remains coherent and contextually accurate throughout retrieval and generation.

For long-form retrieval tasks, GPT-4 Turbo (128K tokens) and Gemini 1.5 Pro (128K tokens as standard, with larger windows available) are among the best LLM models for RAG, handling very large inputs efficiently.

These models excel at maintaining contextual consistency, reducing loss of key details, and ensuring accurate and relevant responses across extended text inputs.

Using the best LLM models for RAG helps maintain contextual flow, while selecting the best LLM for RAG ensures optimized retrieval performance, scalability, and efficiency when working with high-volume, long-context data.

Hence, understanding these different RAG task types allows you to select the best LLM for RAG based on your specific needs. Whether handling short, medium, or long contexts, the right model ensures accuracy, efficiency, and scalability in your applications.
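To make the three categories concrete, here is a small sketch that maps a context size to the task type using the token ranges quoted above. The function name `classify_rag_task` is hypothetical. Because the ranges in this guide leave sizes between 25,000 and 40,000 tokens (and above 128,000) unnamed, the sketch reports those as "unclassified" rather than guessing.

```python
def classify_rag_task(context_tokens: int) -> str:
    """Map a context size in tokens to the RAG task type used in this guide."""
    if context_tokens < 0:
        raise ValueError("context_tokens must be non-negative")
    if context_tokens < 5_000:
        return "SCR"  # Short Context RAG
    if context_tokens <= 25_000:
        return "MCR"  # Medium Context RAG
    if 40_000 <= context_tokens <= 128_000:
        return "LCR"  # Long Context RAG
    # Sizes the guide does not assign to any category.
    return "unclassified"


for size in (1_200, 18_000, 90_000):
    print(size, classify_rag_task(size))
```

You could run a check like this over typical prompts from your own application to see which category dominates before shortlisting models.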

Key Factors to Consider When Choosing the Best LLM for RAG

Selecting the best LLM for RAG isn’t just about performance; it is about finding a model that fits your specific needs.

From technical capabilities to practical integration, several factors play a critical role in determining the right choice for your Retrieval-Augmented Generation workflows.

Choosing the best LLM for RAG enhances reliability, ensuring the model consistently delivers precise and context-aware responses across different use cases.

Accuracy

Accuracy is the backbone of a successful RAG implementation. The model must retrieve and generate contextually relevant, precise information.

A highly accurate LLM reduces errors and improves user satisfaction. When evaluating the best LLM models for RAG, focus on their track record in handling complex queries with consistency.

Deploying the best LLM for RAG strengthens reliability, enabling the model to generate accurate, context-aware responses tailored to diverse applications.

Scalability

A scalable LLM is essential for handling large datasets and high user demands. Whether you are deploying a chatbot for thousands of users or summarizing extensive documents, scalability ensures the system can keep up without compromising performance.

The best LLM model for RAG should handle these demands seamlessly, ensuring the best LLM for RAG performs without lag or loss of accuracy.

Ease of Integration

Integration challenges can slow down implementation. Look for models that easily fit into your existing RAG pipeline, whether through APIs, plugins, or customizable workflows.

The best open source LLM for RAG often excels here, providing adaptable tools with minimal friction. Ensuring seamless compatibility allows the best LLM for RAG to function efficiently without unnecessary technical hurdles.

Cost-Effectiveness

Balancing quality with budget constraints is critical. High-performing LLMs can be expensive, but there are cost-effective options that don’t sacrifice too much on performance.

Choosing the best LLM for RAG often involves finding this balance while keeping long-term scalability in mind.
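A quick back-of-the-envelope calculation can help with this balance. The sketch below estimates monthly spend from per-token pricing. All prices and volumes are hypothetical placeholders chosen for illustration, not any provider's actual rates, and the names `Pricing` and `monthly_cost` are made up for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Per-token pricing in USD. The values used below are hypothetical."""
    input_per_1k: float   # cost per 1,000 prompt tokens
    output_per_1k: float  # cost per 1,000 generated tokens


def monthly_cost(pricing: Pricing, queries: int, prompt_tokens: int, completion_tokens: int) -> float:
    """Estimate monthly spend for a RAG workload with a fixed per-query token profile."""
    per_query = (
        prompt_tokens / 1000 * pricing.input_per_1k
        + completion_tokens / 1000 * pricing.output_per_1k
    )
    return per_query * queries


# Hypothetical price points for a premium and a budget model.
premium = Pricing(input_per_1k=0.01, output_per_1k=0.03)
budget = Pricing(input_per_1k=0.0005, output_per_1k=0.0015)

# Assumed workload: 100,000 queries a month, 3,000 prompt tokens
# (question plus retrieved context) and 300 generated tokens per query.
for name, pricing in (("premium", premium), ("budget", budget)):
    print(name, round(monthly_cost(pricing, 100_000, 3_000, 300), 2))
```

Note that in RAG the prompt side usually dominates, because every query carries its retrieved context, so trimming the number of retrieved chunks is often the first lever for reducing cost.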

Flexibility

Flexibility allows you to fine-tune the model for your specific needs.

Whether it is adapting to a niche industry or enhancing multilingual support, a flexible model ensures the best outcomes. The best open source LLM for RAG often provides this adaptability.

Community Support and Documentation

An active developer community and robust documentation make a significant difference. They ensure smoother troubleshooting, faster updates, and access to shared best practices.

Many of the best LLM models for RAG benefit from vibrant communities that actively contribute to their development.

By weighing these factors, you can confidently select the best LLM for RAG that aligns with your goals, budget, and technical requirements.

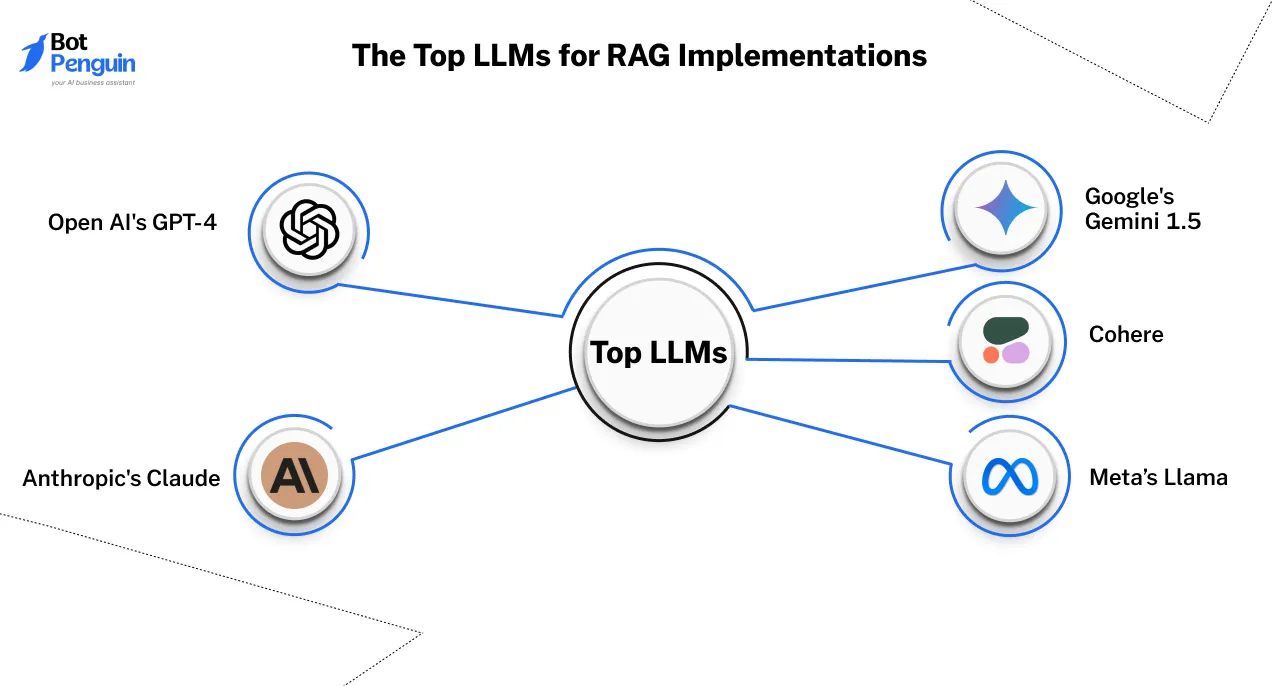

The Top LLMs for RAG Implementations

Choosing the best LLM for RAG involves understanding the unique strengths and limitations of each model.

Below, we delve into five leading contenders that perform well across various RAG workflows, highlighting what makes each one stand out and where it fits in practical applications.

1. OpenAI's GPT-4

OpenAI’s GPT-4 is a leading model in the generative AI space, delivering exceptional performance in both language understanding and creation, making it one of the best LLMs for RAG.

Known for its versatility and contextual accuracy, GPT-4 is often seen as the gold standard for RAG workflows. Whether you need to summarize research papers, generate creative content, or assist customers in real-time, GPT-4 delivers top-tier results.

Why is it Effective for Retrieval-Augmented Tasks?

OpenAI’s GPT-4 is renowned for its unmatched generative quality. Its ability to understand nuanced queries and deliver contextually rich responses makes it ideal for RAG workflows.

For example, in healthcare, a retrieval layer can surface patient data and the latest research, and GPT-4 can turn that material into precise treatment recommendations. In customer service, it powers intelligent bots that resolve complex issues effectively.

Use Cases

- E-commerce: Personal shopping assistants that recommend products based on customer preferences.

- Legal: Summarizing contracts and identifying potential legal risks.

- Research: Assisting academics by summarizing literature or generating hypotheses.

Pros

- GPT-4 generates contextually rich and coherent responses, making it highly effective for complex RAG workflows.

- It adapts well to various industries, from healthcare to legal and e-commerce, enhancing productivity across multiple domains.

Cons

- Running GPT-4 for RAG implementations can be expensive, making it less accessible compared to the best open source LLM for RAG options.

- Despite its accuracy, GPT-4 may sometimes generate misleading or incorrect information if the retrieved data is incomplete or biased.

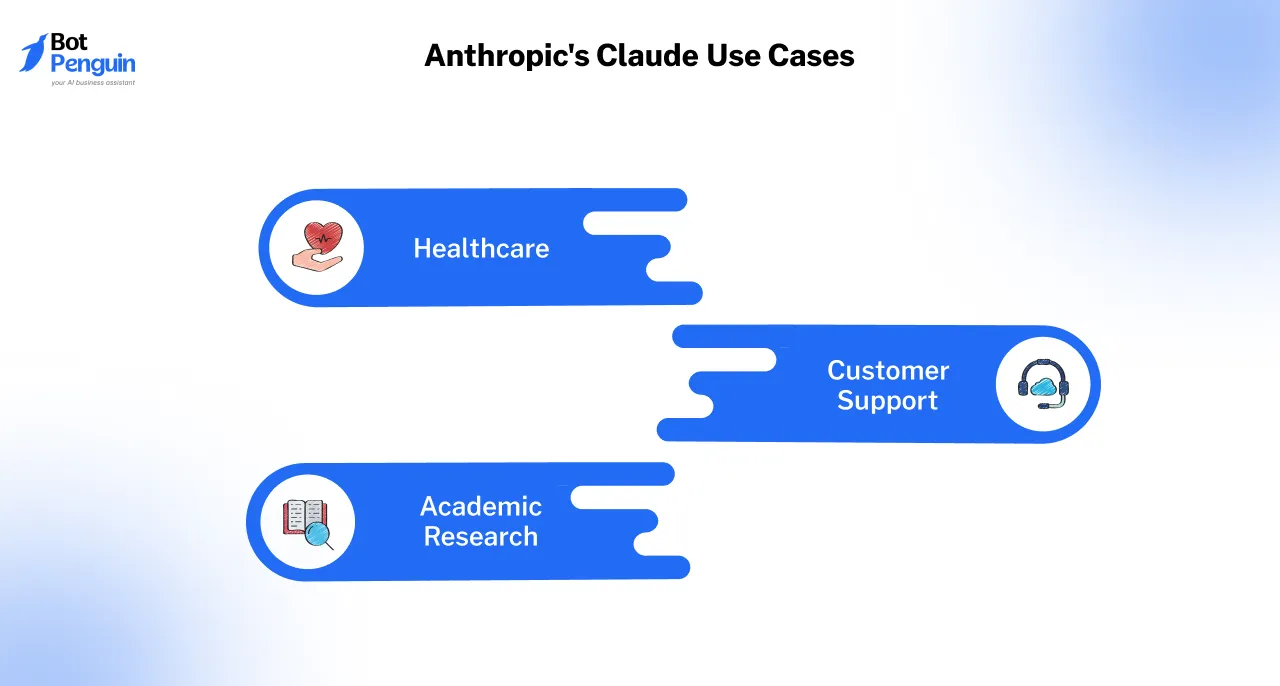

2. Anthropic's Claude

Anthropic’s Claude is designed with a strong emphasis on ethical AI and safe usage. It is particularly well-suited for industries like healthcare, finance, and law, where trustworthiness and transparency are paramount.

Claude is less flashy than some competitors but excels in delivering accurate and reliable outputs for sensitive tasks. Its affordability and focus on precision make it a popular choice for cost-conscious organizations looking for the best LLM for RAG to ensure accurate and context-aware responses.

Why is it Effective for Retrieval-Augmented Tasks?

Anthropic's Claude is a powerful choice when it comes to retrieval-augmented tasks, particularly due to its emphasis on safety, ethical AI, and transparent decision-making.

This makes it an excellent choice for industries where misinformation or bias can have severe consequences. For instance, in financial advisory, Claude ensures that recommendations are accurate, transparent, and unbiased.

Use Cases

- Healthcare: Claude assists in summarizing medical research, ensuring that clinicians access the most relevant and up-to-date findings without misinformation.

- Customer Support: Provides safe and responsible responses to customer queries, ensuring compliance with industry guidelines.

- Academic Research: Helps researchers synthesize large volumes of data, summarizing papers while minimizing bias and ensuring factual accuracy.

Pros

- Reliable in generating factually accurate responses.

- Affordable for businesses on a budget.

Cons

- Compared to some competitors, Claude may produce less creative or diverse outputs, which can be a drawback for certain RAG applications.

- Claude may take longer to generate responses when dealing with highly complex RAG workflows, especially those requiring deep multi-document retrieval.

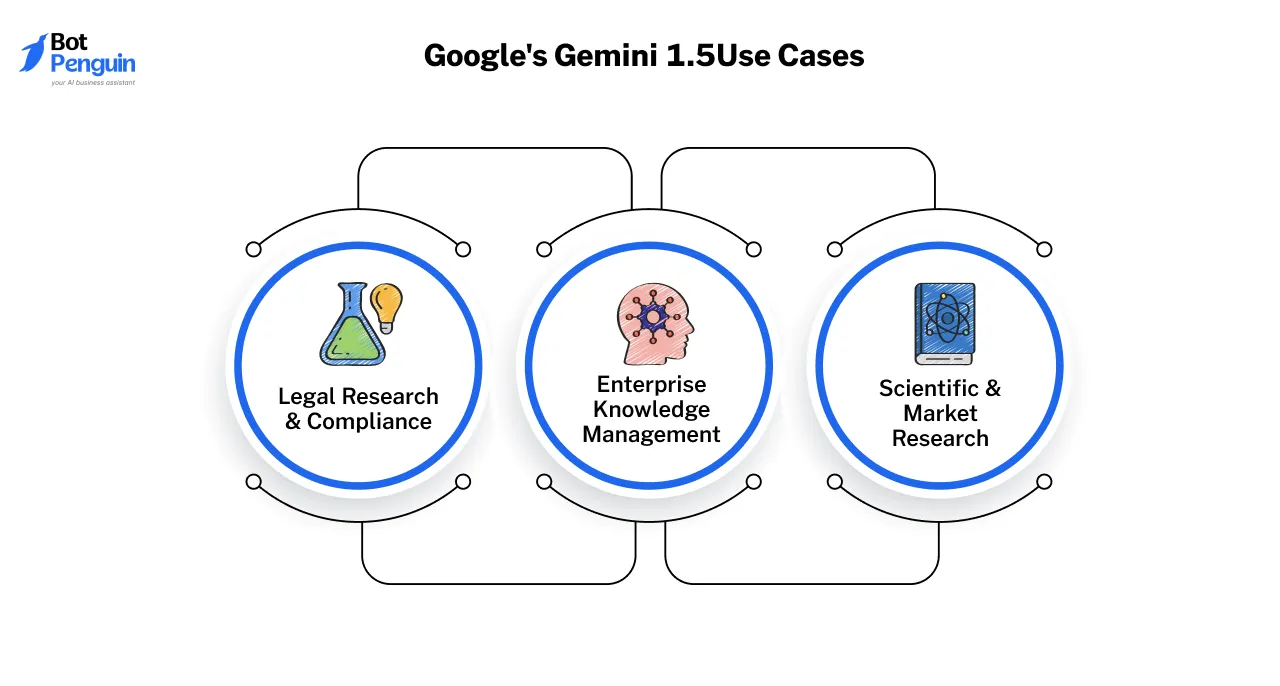

3. Google's Gemini 1.5

Google’s Gemini 1.5 is a powerful long-context language model designed for retrieval-augmented tasks.

With one of the longest context windows in LLMs, it can process vast amounts of information in a single prompt, making it a top choice for RAG implementations.

Gemini 1.5 Pro and Gemini 1.5 Flash outperform older models by handling long-context retrieval seamlessly, reducing response fragmentation, and improving knowledge synthesis.

This makes Gemini 1.5 particularly effective for industries that rely on processing large datasets, such as legal research, enterprise knowledge management, and scientific research.

However, its premium computational requirements make it more suitable for enterprises with the infrastructure to fully use its capabilities.

Why is it Effective for Retrieval-Augmented Tasks?

Google’s Gemini 1.5 is widely considered one of the best LLMs for long-context RAG due to its ability to retrieve, process, and generate highly contextual responses from large datasets in a single pass.

Unlike older models, it excels at long-context retrieval, making it ideal for applications that require accurate information synthesis across extensive documents.

Use Cases

- Legal Research & Compliance: Retrieving, summarizing, and analyzing case laws, contracts, and regulations, enabling legal professionals to quickly extract relevant precedents and compliance guidelines.

- Enterprise Knowledge Management: Powering internal knowledge bases, allowing employees to retrieve company policies, technical documentation, and historical reports effortlessly.

- Scientific & Market Research: Scanning thousands of research papers, extracting key insights, and providing structured literature reviews, saving time and improving decision-making.

Pros

- Gemini 1.5 Pro and Gemini 1.5 Flash process vast amounts of information in a single query, making them highly efficient for retrieval-based applications.

- Unlike older models, it generates coherent and contextually rich responses without needing excessive chunking or reprocessing.

Cons

- Running and fine-tuning Gemini 1.5 for RAG applications demands significant infrastructure and expertise.

- As an enterprise-focused model, it may not be feasible for smaller organizations looking for the best open-source LLM for RAG, which could offer a more cost-effective alternative.

4. Cohere

Cohere offers a unique approach with a focus on fine-tuning and domain-specific optimization. This model is a great fit for businesses with specialized needs, such as personalized marketing, customer support, or niche knowledge bases.

Its flexible pricing structure and ease of integration make it an attractive choice for small to medium-sized enterprises.

While it may not rival GPT-4 in generative depth, Cohere excels in delivering tailored solutions and can be considered the best LLM for RAG when it comes to domain-specific applications.

Why is it Effective for Retrieval-Augmented Tasks?

Cohere’s specialization in domain-specific optimization makes it highly effective for Retrieval-Augmented Generation (RAG) tasks, particularly where personalized responses and precise knowledge retrieval are critical.

For example, in the marketing industry, Cohere can generate ad copy based on real-time customer behavior data. Its emphasis on fine-tuning makes it particularly effective for businesses with niche requirements.

Use Cases

- Personalized Marketing: Cohere's ability to fine-tune based on specific customer data makes it ideal for creating personalized ad campaigns and recommendations, improving customer engagement.

- Customer Support: The model's domain-specific optimization allows it to provide quick, accurate responses for businesses, making it ideal for automating customer service inquiries and improving support efficiency.

- Knowledge Base Management: Cohere excels in retrieving and generating responses for niche industries by tapping into domain-specific knowledge, enhancing the user experience by offering more accurate information.

Pros

- Cohere's pricing structure is designed to be affordable for small to medium-sized businesses, making it an attractive alternative to larger, more expensive models like GPT-4.

- Cohere offers easy integration with existing systems and APIs, making it a solid choice for businesses looking to quickly implement AI capabilities without overhauling their infrastructure.

Cons

- While Cohere is great for domain-specific tasks, it may not match the generative depth or versatility of other top models like GPT-4 in more complex, open-ended tasks.

- For organizations looking to implement RAG at a global scale with massive datasets, Cohere may not offer the same level of robustness as some enterprise-focused LLMs like PaLM.

5. Meta’s Llama and Open-Source Alternatives

Meta’s Llama remains a strong contender in the open-source AI space, offering flexibility and customization for Retrieval-Augmented Generation (RAG) tasks.

However, Llama 2 (4K tokens) does not support Long-Context RAG (LCR), making it less suitable for applications requiring extensive retrieval.

For businesses looking for the best LLM for RAG in the open-source category, Mistral 7B and Mixtral 8x7B have emerged as superior alternatives. These models outperform Llama 2 in retrieval efficiency, making them ideal for complex data-heavy applications.

If long-context retrieval is required, integrating Llama 2 or Mistral 7B with an external memory store like FAISS, ChromaDB, or Weaviate is necessary, while Mixtral 8x7B (32K tokens) supports longer retrieval natively.
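The idea behind pairing a short-context model with an external store can be sketched as follows: split the long document into chunks, rank the chunks against the question, and pack only the best ones into the model's limited window. This is a simplified illustration under stated assumptions. A plain in-memory word-overlap score stands in for a vector database such as FAISS, ChromaDB, or Weaviate, whitespace-separated words stand in for tokens, and the helper names and sample text are hypothetical.

```python
import re


def split_into_chunks(text: str, chunk_words: int) -> list[str]:
    """Split a long text into consecutive chunks of at most chunk_words words."""
    if chunk_words <= 0:
        raise ValueError("chunk_words must be positive")
    words = text.split()
    return [" ".join(words[i:i + chunk_words]) for i in range(0, len(words), chunk_words)]


def overlap_score(query: str, chunk: str) -> int:
    """Count distinct words shared by the query and the chunk (stand-in for vector similarity)."""
    q = set(re.findall(r"[a-z0-9]+", query.lower()))
    c = set(re.findall(r"[a-z0-9]+", chunk.lower()))
    return len(q & c)


def pack_context(query: str, chunks: list[str], budget_words: int) -> list[str]:
    """Greedily select the highest-scoring chunks that fit within the word budget."""
    ranked = sorted(chunks, key=lambda c: overlap_score(query, c), reverse=True)
    selected, used = [], 0
    for chunk in ranked:
        size = len(chunk.split())
        if used + size > budget_words:
            continue  # this chunk would overflow the model's window
        selected.append(chunk)
        used += size
    return selected


# Hypothetical long document: one useful sentence buried in filler text.
document = " ".join(
    ["filler"] * 50
    + "the warranty covers battery defects for two years".split()
    + ["filler"] * 50
)
chunks = split_into_chunks(document, chunk_words=10)
query = "How long does the warranty cover the battery?"
selected = pack_context(query, chunks, budget_words=20)
print(selected[0])
```

With a real vector store, `overlap_score` would be replaced by an embedding similarity search, but the budget-packing step stays the same: the smaller the model's window, the more selective the retrieval has to be.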

Why is it Effective for Retrieval-Augmented Tasks?

Meta’s Llama, along with Mistral 7B and Mixtral 8x7B, is among the most effective open-source solutions for retrieval-based AI applications.

Their fine-tuning flexibility and efficient retrieval mechanisms allow businesses to tailor them for domain-specific RAG implementations.

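Unlike proprietary models, these open-weight LLMs can be self-hosted: Mistral 7B and Mixtral 8x7B are released under the permissive Apache 2.0 license, while Llama 2 is distributed under Meta's community license, which carries some usage conditions. This gives startups, academic institutions, and enterprises full control over data processing and retrieval workflows.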

When integrated with vector databases, they significantly improve retrieval speed and accuracy, making them ideal for handling vast amounts of structured and unstructured data.

Use Cases

- Academic Research Assistance: Open-source models like Llama, Mistral 7B, and Mixtral 8x7B help researchers summarize large academic papers, analyze datasets, and generate literature reviews, offering an accessible and customizable alternative to proprietary LLMs.

- Customer Support Automation: Businesses can deploy fine-tuned models to retrieve and generate real-time responses from extensive knowledge bases. However, Llama 2 and Mistral 7B require external memory stores, while Mixtral 8x7B supports longer retrieval tasks more effectively.

- Legal Research & Compliance: AI-powered tools using Mistral 7B or Mixtral 8x7B efficiently analyze and summarize lengthy legal documents, assisting law firms in extracting key insights quickly.

Pros

- Open-source models allow fine-tuning for domain-specific RAG applications, making them the best LLM for RAG when businesses need tailored AI solutions.

- Mistral 7B and Mixtral 8x7B offer better retrieval performance than Llama 2 and are released under the permissive Apache 2.0 license, making them affordable for startups and research institutions.

Cons

- Llama 2 (4K tokens) and Mistral 7B require external memory stores (FAISS, ChromaDB, or Weaviate) for Long-Context RAG (LCR). Mixtral 8x7B (32K tokens) supports long-context retrieval more effectively.

- Open-source models need manual optimization, domain-specific training data, and vector database integration for optimal retrieval accuracy.

Each of these models brings unique strengths to Retrieval-Augmented Generation (RAG), catering to different needs based on scalability, cost, and specialization. Also, businesses looking for more specialized AI solutions for customer interactions can consider platforms like BotPenguin.

While BotPenguin is not specifically built as the best LLM for RAG, it is an advanced conversational AI platform that can be enhanced with Retrieval-Augmented Generation (RAG) by integrating a vector database and an LLM like GPT-4. This allows businesses to use contextual retrieval for more accurate and dynamic responses.

By selecting the best LLM for RAG, businesses can enhance automation, improve knowledge retrieval, and drive smarter decision-making across various industries.

Exploring Practical Uses of RAG with LLMs

The actual applications of RAG demonstrate how combining retrieval systems and generative AI can transform workflows.

Whether it is customer support, knowledge management, or search engines, the best LLM for RAG plays a pivotal role in achieving accuracy and efficiency. Here are a few case studies:

Case Study 1

In the e-commerce industry, handling high volumes of customer queries efficiently is a constant challenge, especially during peak seasons.

Let us explore how Shopify used GPT-4 to streamline its customer support and improve overall service quality.

- Company: Shopify

- Challenge: Managing high volumes of customer queries during peak sales seasons.

- Solution: Shopify integrated GPT-4 into its customer support chatbot to handle first-level inquiries, such as order tracking, payment issues, and return policies. GPT-4 worked alongside a retrieval system that fetched relevant customer data from Shopify's database, ensuring responses were accurate and personalized.

- Outcome: The system resolved a majority of customer queries without human intervention, reducing response times and allowing human agents to focus on complex cases.

Shopify’s choice of GPT-4, one of the best LLM models for RAG, was instrumental in achieving this efficiency.

Case Study 2

Managing and accessing huge amounts of data is crucial for large organizations like Siemens. This case study explores how Siemens used Google PaLM to enhance its knowledge management tools.

- Company: Siemens

- Challenge: Centralizing and simplifying access to technical manuals and internal documents for engineers worldwide.

- Solution: Siemens used Google PaLM to develop a knowledge management system. PaLM retrieved technical documents and generated concise, context-aware answers to engineers' queries. The model’s multilingual capabilities were particularly valuable, supporting teams in multiple countries.

- Outcome: Engineers reduced the time spent searching for documentation, boosting productivity and minimizing project delays. Google PaLM’s scalability and integration into Siemens’ RAG pipeline showcased why it is among the best LLMs for RAG in enterprise environments.

Case Study 3

Researchers may find it time-consuming to find and summarize relevant studies from the vast amount of academic research available. Let us find out how ArXiv implemented Llama to boost its search engine capabilities.

- Company: ArXiv (online repository for research papers)

- Challenge: Helping researchers find and summarize relevant studies across vast academic archives.

- Solution: ArXiv deployed Meta’s Llama, an open source LLM for RAG, to power a domain-specific search engine. Llama was fine-tuned on academic datasets, allowing it to retrieve papers and generate easy-to-understand summaries tailored to researchers’ needs.

- Outcome: Researchers reported a reduction in time spent searching for and analyzing papers. Llama’s flexibility made it the best open source LLM for RAG for ArXiv, as it could be customized to align with the organization’s unique goals.

These case studies highlight how businesses and organizations use retrieval-augmented generation (RAG) with advanced language models to improve efficiency, streamline workflows, and enhance user experiences. By integrating the best LLM for RAG, companies can ensure higher accuracy, better automation, and smarter decision-making across various domains.

Best Practices for Implementing RAG with LLMs

Successfully implementing RAG requires more than just picking the best LLM for RAG. You need to focus on seamless integration, data quality, and ongoing optimization.

These best practices will help ensure your RAG workflows perform effectively.

Ensure Data Quality

It is vital to constantly update the data sources used by the RAG model to ensure data quality. You can maintain a schedule and refresh data regularly so that the model can consistently access the latest information.

High-quality data ensures the best LLM models for RAG produce accurate, contextually relevant outputs.
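A refresh schedule can be as simple as tracking when each source was last indexed and flagging anything older than a chosen threshold. The sketch below assumes you record a last-indexed timestamp per document. The function name `find_stale_documents`, the file names, and the 30-day threshold are hypothetical choices for illustration.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def find_stale_documents(
    last_indexed: dict[str, datetime],
    max_age: timedelta,
    now: Optional[datetime] = None,
) -> list[str]:
    """Return the ids of documents whose last indexing is older than max_age."""
    now = now or datetime.now(timezone.utc)
    return sorted(doc_id for doc_id, ts in last_indexed.items() if now - ts > max_age)


# Hypothetical index metadata: document id -> time it was last re-indexed.
index_log = {
    "pricing.md": datetime(2024, 5, 30, tzinfo=timezone.utc),
    "returns.md": datetime(2024, 1, 15, tzinfo=timezone.utc),
}
reference_time = datetime(2024, 6, 1, tzinfo=timezone.utc)
stale = find_stale_documents(index_log, max_age=timedelta(days=30), now=reference_time)
print(stale)  # documents that should be re-fetched and re-indexed
```

Running a check like this on a schedule gives you a concrete list of sources to refresh, instead of relying on ad hoc updates.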

Training and Maintenance

Refresh the retrieval index regularly and, if you fine-tune the underlying model, retrain it with fresh datasets to help it adapt to changes in language usage and information.

Implement metrics to check if the responses of the model are accurate and relevant. Regular monitoring ensures the best LLM model for RAG continues delivering optimal results.
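One common starting point for such metrics is recall@k, which measures the retrieval half of the pipeline: of the documents a human marked as relevant for a question, how many appear in the top k results? The sketch below is a minimal version. It assumes you have a small hand-labelled evaluation set, and the document ids shown are made up. It does not judge the quality of the generated answer itself, which needs a separate check.

```python
def recall_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Fraction of the relevant documents that appear in the top-k retrieved results."""
    if not relevant:
        raise ValueError("relevant must contain at least one document id")
    return len(set(retrieved[:k]) & relevant) / len(relevant)


def mean_recall_at_k(runs: list[tuple[list[str], set[str]]], k: int) -> float:
    """Average recall@k over a list of (retrieved ids, relevant ids) pairs."""
    if not runs:
        raise ValueError("runs must not be empty")
    return sum(recall_at_k(retrieved, relevant, k) for retrieved, relevant in runs) / len(runs)


# Hypothetical evaluation set: ranked retrieval results and labelled relevant ids per query.
runs = [
    (["doc3", "doc7", "doc1"], {"doc3", "doc1"}),
    (["doc2", "doc9", "doc4"], {"doc5"}),
]
print(mean_recall_at_k(runs, k=3))
```

Tracking this number over time makes it easier to tell whether a drop in answer quality comes from retrieval or from the model.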

Scalability

You should design your RAG system architecture to efficiently handle growing data volume and user load over time.

Ensure that your computational infrastructure, such as cloud-based solutions, has the necessary resources to support intensive data processing, especially when integrating the best LLM for RAG to maximize performance and scalability.

Seamless Integration

Choose an LLM that aligns with your infrastructure. Use APIs or plugins to simplify integration. For open-source projects, models like Llama are great options.

The best open source LLM for RAG offers flexibility, especially in niche applications.
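One way to keep integration friction low is to hide the model behind a small interface, so that swapping a hosted API for an open-source model does not touch the rest of the pipeline. The sketch below illustrates this pattern with Python's `typing.Protocol`. The names `TextGenerator`, `EchoGenerator`, and `answer` are hypothetical, and the stub backend only stands in for a real model call.

```python
from typing import Protocol


class TextGenerator(Protocol):
    """Any object with a generate(prompt) method can serve as the LLM backend."""

    def generate(self, prompt: str) -> str: ...


class EchoGenerator:
    """Stand-in backend for local testing; a real one would call a hosted or self-hosted model."""

    def generate(self, prompt: str) -> str:
        return f"[stub answer based on {len(prompt)} prompt characters]"


def answer(question: str, context: list[str], llm: TextGenerator) -> str:
    """Build the augmented prompt and delegate generation to whichever backend is plugged in."""
    prompt = "Context:\n" + "\n".join(context) + f"\n\nQuestion: {question}"
    return llm.generate(prompt)


result = answer(
    "What is the refund window?",
    ["Refunds are accepted within 30 days."],
    EchoGenerator(),
)
print(result)
```

With this structure, trying a different model means writing one new class with a `generate` method, while the retrieval and prompt-building code stays unchanged.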

Using Community Support and Documentation

For troubleshooting, use active communities and detailed documentation. Open-source models like Llama thrive on community support, making them ideal for experimentation and troubleshooting.

Access to a strong developer community can be a key advantage when working with the best LLM for RAG, ensuring smoother implementation and faster problem-solving.

By following these best practices, you can optimize your RAG implementation for accuracy, efficiency, and scalability, ensuring that you get the most out of the best LLM for RAG in your specific use case.

Conclusion

The best LLM for RAG implementations can transform workflows, improve efficiency, and deliver outstanding results across industries.

By choosing the right model, ensuring data quality, and following best practices, businesses can unlock the full potential of Retrieval-Augmented Generation.

For a seamless RAG solution, consider BotPenguin, an AI agent and a no-code AI chatbot maker platform. BotPenguin enables businesses to build intelligent chatbots that use advanced LLMs for accurate, contextual responses.

From enhancing customer support to streamlining internal operations, BotPenguin makes AI accessible and effective for businesses of all sizes. It is a simple yet powerful way to harness the benefits of RAG without technical complexity.

Frequently Asked Questions (FAQs)

How to choose the best LLM for RAG?

The best LLM for RAG depends on your specific needs. If you require top-tier performance and scalability, GPT-4 is a great option.

For cost-effective, open-source flexibility, Meta’s Llama is a solid choice. Consider factors like accuracy, integration ease, and budget when selecting a model.

Why is it important to choose the best LLM for RAG?

The best LLM for RAG ensures accurate context understanding, seamless integration, and high-quality outputs.

Poor model choice can result in irrelevant responses, compromising the effectiveness of your RAG workflow.

What are the advantages of using RAG over fine-tuning?

RAG allows your model to fetch real-time information, making it ideal for dynamic fields like news, research, and customer support.

Fine-tuning, on the other hand, works best for stable domains where deep contextual understanding is needed. The two approaches can also be combined: a fine-tuned model can still be paired with retrieval when your requirements call for both.

Can open-source LLMs be used for RAG?

Yes. Open-source models like Meta’s Llama are excellent choices for RAG implementations. They offer flexibility, customization, and strong community support. If you're looking for the best LLM for RAG while keeping costs low, open-source models are worth considering.

Can a no-code platform like BotPenguin help with RAG?

Yes, BotPenguin enables businesses to build intelligent chatbots using RAG, simplifying integration without coding.

It is a cost-effective way to use AI for improved customer service and operational efficiency. By integrating the best LLM for RAG, businesses can ensure their chatbots deliver accurate, context-aware responses while maintaining seamless automation.