What is a Validation Set?

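A validation set in machine learning is a subset of data held out from training and used to evaluate a model after it has been fitted on the training set. Because the model never learns from these examples, its performance on them estimates how well it will handle unseen data and exposes overfitting that the training score alone would hide.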

Why is a Validation Set important?

Enhancing Model Performance

A validation set helps in tuning a model's hyperparameters to optimize its performance. By analyzing the error rate on the validation set, we can adjust the model to improve its predictive accuracy on new data.

Preventing Overfitting

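The validation set is crucial for detecting and preventing overfitting, a scenario where a model performs well on the training data but poorly on new, unseen data. It provides an estimate of performance on data the model was not trained on, which helps ensure generalizability. Because choices are tuned against it, this estimate becomes somewhat optimistic, which is why a separate test set is reserved for the final evaluation.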

Model Selection

Different machine learning models can be compared using a validation set. The model that performs the best on the validation set is generally chosen as the final model.

Hyperparameter Tuning

A validation set is vital for hyperparameter tuning, the process of adjusting settings that are not learned from the data during training, such as the learning rate or the maximum depth of a decision tree. It provides a consistent benchmark for comparing different hyperparameter choices.

Assuring Confidence in the Model

By using a validation set, we gain a level of confidence that our model will perform well on real-world data, provided the validation data resembles what the model will encounter in production. This assurance is highly valuable before deploying the model.

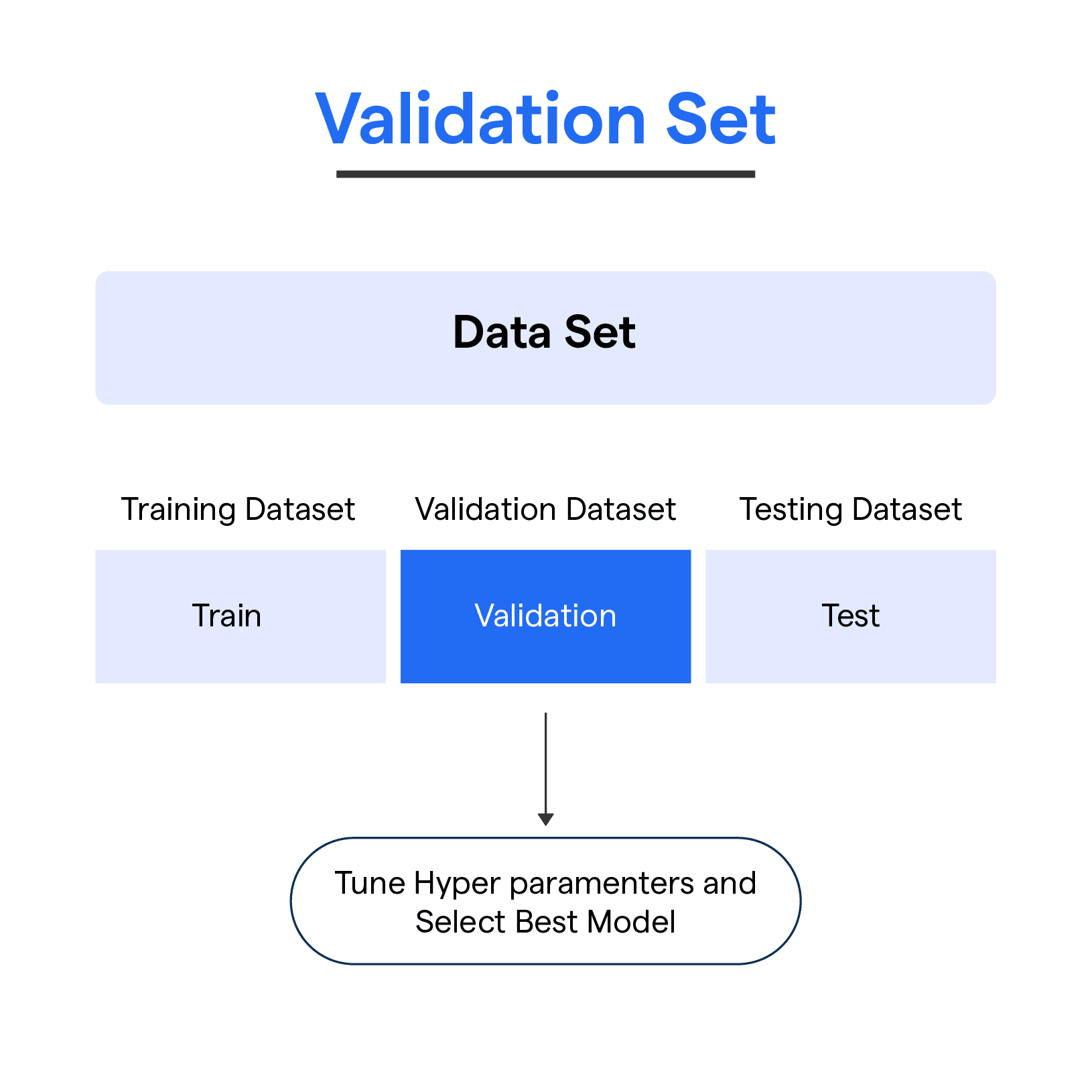

How does a Validation Set work?

Purpose of a Validation Set

Validation sets act as a checkpoint to gauge a model's performance on unseen data during development. They help detect overfitting and guide the choice of hyperparameters for optimal results.

Creating the Validation Set

To create a validation set, partition your dataset into two or three parts: a training set, a validation set, and optionally a test set. Common splits are 70-15-15% for training-validation-test sets, or 80-20% for training-validation sets when no separate test set is held out.
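The following is a minimal sketch of the 70-15-15 split. It is written in plain Python, so it needs no ML library. The function `train_val_test_split` and its defaults are illustrative assumptions, not a specific library's API. Passing `val_frac=0.2, test_frac=0.0` gives the 80-20 case.

```python
import random

def train_val_test_split(items, val_frac=0.15, test_frac=0.15, seed=0):
    """Shuffle a copy of `items` and cut it into train, validation and test lists."""
    if val_frac < 0 or test_frac < 0 or val_frac + test_frac >= 1:
        raise ValueError("fractions must be non-negative and sum to less than 1")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # fixed seed -> reproducible split
    n_test = round(len(shuffled) * test_frac)
    n_val = round(len(shuffled) * val_frac)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test

train, val, test = train_val_test_split(range(100))
print(len(train), len(val), len(test))  # 70 15 15
```

Shuffling before splitting matters when the data is ordered, for example sorted by class. For time series, a chronological split is usually more appropriate than a random one.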

Monitoring Performance

During training, periodically evaluate the model on the validation set. This lets you monitor progress, detect overfitting (validation performance stalls or worsens while training performance keeps improving), and decide when to stop training before generalization degrades, a technique known as early stopping.
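The sketch below shows early stopping, assuming you record one validation loss per epoch. A precomputed, made-up list of losses stands in for evaluating a real model. `early_stopping_epoch` and its `patience` parameter are hypothetical names.

```python
def early_stopping_epoch(val_losses, patience=3):
    """Return the epoch with the lowest validation loss seen before stopping.
    Training stops once the loss has not improved for `patience` epochs."""
    best_epoch, best_loss = 0, float("inf")
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss = epoch, loss  # new best: keep this checkpoint
        elif epoch - best_epoch >= patience:
            break  # no improvement for `patience` epochs: stop training
    return best_epoch

# Made-up losses: improving, then rising as the model starts to overfit.
losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61, 0.40]
print(early_stopping_epoch(losses))  # 3
```

With `patience=3` the loop stops at epoch 6, so the later 0.40 is never observed. A larger patience tolerates longer plateaus at the cost of extra training.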

Hyperparameter Tuning

The validation set allows you to fine-tune hyperparameters, such as the learning rate or the network architecture. By comparing validation performance across settings, you can select the hyperparameters most likely to generalize well to unseen data.

Model Selection

When you have multiple models or algorithms to choose from, a validation set can be invaluable for selecting the best-performing one. The model with the highest validation performance is likely to generalize best to new data.
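Here is a toy sketch of model selection. It assumes two hypothetical candidate models, a majority-class baseline and a one-feature 1-nearest-neighbour classifier, along with a handful of made-up labelled points. Each candidate is fitted on the training data, and the one with the highest validation accuracy is kept.

```python
from collections import Counter

def majority_model(train):
    """Baseline: always predict the most common training label."""
    label = Counter(y for _, y in train).most_common(1)[0][0]
    return lambda x: label

def nearest_neighbor_model(train):
    """1-nearest-neighbour classifier on a single numeric feature."""
    return lambda x: min(train, key=lambda pair: abs(pair[0] - x))[1]

def accuracy(model, data):
    return sum(model(x) == y for x, y in data) / len(data)

train = [(1.0, 0), (2.0, 0), (3.0, 0), (7.0, 1), (8.0, 1)]
val = [(1.4, 0), (2.6, 0), (7.4, 1), (6.5, 1)]

candidates = {"majority": majority_model, "1-NN": nearest_neighbor_model}
# Fit every candidate on the training set, score it on the validation set.
scores = {name: accuracy(build(train), val) for name, build in candidates.items()}
best = max(scores, key=scores.get)
print(scores, best)  # {'majority': 0.5, '1-NN': 1.0} 1-NN
```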

Types of Data Splits for Model Evaluation

Training set

A training set is the subset of data used to train the model.

Validation set

A validation set is the subset of data used to evaluate the model during development, guiding hyperparameter tuning and model selection.

Test set

A test set is the subset of data used to evaluate the performance of the final model after training and hyperparameter tuning.

Creating a Validation Set

How to create a validation set?

To create a validation set, a portion of the training data is held out, typically 20-30% of the total training data.

What is the ideal size for a validation set?

There is no one-size-fits-all answer to this question, but a good rule of thumb is to set aside around 20% of the total training data for the validation set.

Techniques for Cross-Validation

K-fold cross-validation

K-fold cross-validation is a popular technique for evaluating the performance of machine learning models. The training data is split into k equal parts, and the model is trained on k-1 parts while being evaluated on the remaining part. This process is repeated k times, with each part being used as the validation set once.
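The following sketch shows how k-fold indices can be generated in plain Python. `k_fold_indices` is a hypothetical helper, not a library function. It does not shuffle, so shuffle the data first if it has any ordering.

```python
def k_fold_indices(n, k):
    """Yield (train_idx, val_idx) pairs for k-fold cross-validation over n samples.
    Fold sizes differ by at most one when n is not divisible by k."""
    if not 2 <= k <= n:
        raise ValueError("k must be between 2 and n")
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = list(range(start, start + size))  # this fold is held out
        train_idx = list(range(0, start)) + list(range(start + size, n))
        yield train_idx, val_idx
        start += size

for train_idx, val_idx in k_fold_indices(10, 3):
    print(val_idx)
# [0, 1, 2, 3]
# [4, 5, 6]
# [7, 8, 9]
```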

Stratified k-fold cross-validation

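Stratified k-fold cross-validation is similar to k-fold cross-validation but ensures that each fold preserves, as closely as possible, the class proportions of the full dataset. This matters most for imbalanced classification problems, where a plain random fold might contain few or no examples of a rare class.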
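Below is a minimal sketch of stratified folds, assuming hashable class labels. The hypothetical `stratified_k_fold` groups sample indices by class and deals them round-robin across the k folds. As a result, each fold receives nearly the same share of every class.

```python
from collections import defaultdict

def stratified_k_fold(labels, k):
    """Yield (train_idx, val_idx) pairs whose validation folds keep
    class proportions as close to the full dataset as integer counts allow."""
    if k < 2:
        raise ValueError("k must be at least 2")
    by_class = defaultdict(list)
    for i, y in enumerate(labels):
        by_class[y].append(i)
    folds = [[] for _ in range(k)]
    position = 0  # continues across classes so fold sizes stay balanced
    for indices in by_class.values():
        for i in indices:
            folds[position % k].append(i)  # deal samples round-robin across folds
            position += 1
    for f in range(k):
        val_idx = sorted(folds[f])
        val_set = set(val_idx)
        train_idx = [i for i in range(len(labels)) if i not in val_set]
        yield train_idx, val_idx

labels = [0] * 8 + [1] * 4  # made-up, imbalanced 2:1 labels
for _, val_idx in stratified_k_fold(labels, 4):
    print([labels[i] for i in val_idx])  # [0, 0, 1] for every fold
```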

Shuffle split cross-validation

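Shuffle split cross-validation randomly splits the data into training and validation sets and repeats the process a chosen number of times. Unlike k-fold, the number of repetitions and the size of the validation set are set independently, and validation sets from different repetitions may overlap, so some samples may never be used for validation.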
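Here is a sketch of shuffle split in plain Python. `shuffle_split` and its parameters are illustrative assumptions. Each repetition draws a fresh random permutation, so the validation sets of different repetitions can overlap.

```python
import random

def shuffle_split(n, n_splits=5, val_frac=0.2, seed=0):
    """Yield n_splits independent random (train_idx, val_idx) splits of range(n)."""
    rng = random.Random(seed)
    n_val = max(1, round(n * val_frac))
    for _ in range(n_splits):
        perm = list(range(n))
        rng.shuffle(perm)  # new permutation each time, independent of earlier splits
        yield sorted(perm[n_val:]), sorted(perm[:n_val])

for train_idx, val_idx in shuffle_split(10, n_splits=3, val_frac=0.3):
    print(len(train_idx), len(val_idx))  # 7 3 on each line
```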

Hyperparameter Tuning with Validation Set

How is the validation set used in tuning hyperparameters?

The validation set is used to evaluate models trained with different hyperparameter values, so that the best-performing values can be selected for the given task.
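The following toy sketch illustrates validation-based tuning. It assumes one-feature ridge regression without an intercept, where the regularization strength `lam` is the hyperparameter. The closed-form fit w = sum(x*y) / (sum(x^2) + lam) follows from minimizing the squared error plus lam * w^2. The data and the candidate grid are made up for illustration.

```python
def fit_ridge_1d(train, lam):
    """Closed-form weight minimising sum((y - w*x)^2) + lam * w^2."""
    sxy = sum(x * y for x, y in train)
    sxx = sum(x * x for x, _ in train)
    return sxy / (sxx + lam)

def val_mse(w, val):
    """Mean squared error of the fitted weight on the validation set."""
    return sum((y - w * x) ** 2 for x, y in val) / len(val)

train = [(1.0, 2.0), (2.0, 4.0), (3.0, 9.0)]  # made-up data; the last point is noisy
val = [(4.0, 8.0), (5.0, 10.0)]

grid = [0.0, 1.0, 4.0, 10.0, 100.0]
# Train once per candidate value, then score each fit on the validation set.
scores = {lam: val_mse(fit_ridge_1d(train, lam), val) for lam in grid}
best_lam = min(scores, key=scores.get)
print(best_lam)  # 4.0
```

Only the validation score is used to choose `lam`. Judged on training error alone, `lam = 0` would always win, because it fits the noisy training point most closely.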

Why is hyperparameter tuning necessary?

Hyperparameter tuning is necessary because default settings are rarely optimal for a particular dataset; tuning helps ensure that a machine learning model performs at its best on a given task.

Choosing the Right Metric for Model Evaluation

Accuracy

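Accuracy is the ratio of correctly predicted observations to the total number of observations. It is a popular, easy-to-interpret metric for classification, but it can be misleading on imbalanced datasets: a model that always predicts the majority class in a 95/5 split scores 95% accuracy.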

Precision

Precision is the ratio of true positive predictions to the sum of true positive and false positive predictions.

Recall

Recall is the ratio of true positive predictions to the sum of true positive and false negative predictions.

F1 score

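The F1 score is the harmonic mean of precision and recall: F1 = 2 * precision * recall / (precision + recall). It is often preferred over accuracy for imbalanced datasets because it focuses on the positive class and ignores true negatives.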
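The sketch below computes precision, recall and F1 directly from their definitions for a binary problem. The labels are made up. Returning 0.0 when a denominator is zero is a convention chosen here, since libraries handle this case in different ways.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Precision, recall and F1 for the `positive` class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 0, 0, 0, 0]
p, r, f1 = precision_recall_f1(y_true, y_pred)
print(round(p, 3), round(r, 3), round(f1, 3))  # 0.667 0.5 0.571
```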

ROC-AUC score

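The ROC-AUC score measures the area under the Receiver Operating Characteristic (ROC) curve, which plots the true positive rate against the false positive rate across all classification thresholds. It equals the probability that a randomly chosen positive example is scored higher than a randomly chosen negative one, so it evaluates ranking quality independently of any single threshold. It is widely used for binary classification problems.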
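ROC-AUC can be computed from its probabilistic interpretation. Over every (positive, negative) pair, count how often the positive example receives the higher score, with ties counting one half. This quadratic-time sketch is meant for clarity; practical implementations sort the scores instead.

```python
def roc_auc(y_true, scores):
    """Fraction of (positive, negative) pairs ranked correctly; ties count 0.5."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))

print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # 0.75
```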

Confusion Matrix

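A confusion matrix is a table that counts, for each actual class, how many examples the model assigned to each predicted class. For binary classification it shows the numbers of true positives, false positives, true negatives, and false negatives.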
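Here is a short sketch of building a confusion matrix and reading accuracy off its diagonal. Rows are actual classes and columns are predicted classes, the same orientation scikit-learn uses. The labels are made up.

```python
from collections import Counter

def confusion_matrix(y_true, y_pred, labels):
    """Rows are actual classes, columns are predicted classes."""
    counts = Counter(zip(y_true, y_pred))
    return [[counts[(actual, pred)] for pred in labels] for actual in labels]

y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 0, 0, 0, 0]
cm = confusion_matrix(y_true, y_pred, labels=[0, 1])
print(cm)  # [[5, 1], [2, 2]]

# Correct predictions lie on the diagonal.
accuracy = sum(cm[i][i] for i in range(len(cm))) / len(y_true)
print(accuracy)  # 0.7
```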

Validation Curves and Learning Curves

What are Validation Curves and Learning Curves?

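Validation curves and learning curves are graphical tools for visualizing model performance. A validation curve plots training and validation scores against the values of a single hyperparameter; a learning curve plots them against the size of the training set (or, in some uses, the number of training iterations).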

How are they used in machine learning model evaluation?

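They are used to diagnose problems such as overfitting (high variance) and underfitting (high bias). A large gap between a high training score and a lower validation score suggests overfitting, while low scores on both suggest underfitting.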
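The following toy validation curve assumes one-feature k-nearest-neighbour regression, with the number of neighbours `k` as the hyperparameter and a small made-up dataset. For each `k` it reports training and validation mean squared error, the two quantities a validation curve would plot.

```python
def knn_predict(train, x, k):
    """One-feature k-NN regression: average the targets of the k closest training points."""
    nearest = sorted(train, key=lambda pair: abs(pair[0] - x))[:k]
    return sum(y for _, y in nearest) / k

def knn_mse(train, data, k):
    """Mean squared error of k-NN predictions on `data`."""
    return sum((knn_predict(train, x, k) - y) ** 2 for x, y in data) / len(data)

train = [(0, 1.0), (1, 2.0), (2, 0.0), (3, 2.0), (4, 0.0), (5, 1.0)]
val = [(0.4, 1.0), (2.4, 1.0), (4.4, 1.0)]

curve = {}
for k in [1, 3, 5]:
    # (training error, validation error) for this hyperparameter value
    curve[k] = (knn_mse(train, train, k), knn_mse(train, val, k))
    print(k, "train MSE:", round(curve[k][0], 3), "val MSE:", round(curve[k][1], 3))
```

On this data, `k=1` reproduces every training target exactly (zero training error) yet has the worst validation error, the pattern that signals overfitting.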

Overfitting and Underfitting

What are overfitting and underfitting?

Overfitting occurs when a model is overly complex and fits the training data too closely, whereas underfitting occurs when a model is too simple and cannot fit the training data well.

How can a validation set help identify and avoid these issues?

A validation set helps identify these issues by evaluating the model on data it has not seen during training. An overfit model scores much better on the training set than on the validation set, whereas an underfit model scores poorly on both. Comparing the two scores tells you whether to simplify or regularize the model, or to give it more capacity.

Best Practices for Validation Sets

In machine learning, using validation sets correctly is crucial for building reliable models. Here are some recommended practices.

Choosing the Right Size

The size of your validation set matters. It should be large enough to give a reliable performance estimate, but not so large that you lose valuable training data. A common practice is to allocate 15-30% of the dataset for validation.
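One way to judge whether a validation set is large enough is to look at the standard error of the accuracy measured on it. Under the usual binomial approximation, which assumes independent examples, it is sqrt(p * (1 - p) / n) for accuracy p on n examples. The helper below is a hypothetical illustration.

```python
import math

def accuracy_standard_error(accuracy, n_val):
    """Approximate standard error of an accuracy measured on n_val
    independent validation examples (binomial approximation)."""
    return math.sqrt(accuracy * (1 - accuracy) / n_val)

for n in (100, 1000, 10000):
    print(n, round(accuracy_standard_error(0.9, n), 4))
# 100 0.03
# 1000 0.0095
# 10000 0.003
```

At 90% accuracy, 100 validation examples give a standard error of about 3 percentage points, so two models that differ by 2 points cannot be reliably told apart. With 10,000 examples, the standard error shrinks to about 0.3 points.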

Ensuring Data Diversity

The validation set should be representative of the data the model will encounter in the real world. Including a diverse range of examples makes the validation score a more trustworthy predictor of how the model will perform on unseen data.

Using Cross-Validation

Cross-validation is a technique where the data is split into 'k' subsets. The model is trained on 'k-1' subsets and validated on the remaining subset. This is repeated 'k' times so all data is used for both training and validation.

Regular Evaluations

Evaluate your model on the validation set regularly during training. This helps you monitor the model's performance and serves as an early warning system for overfitting.

Independent Validation Sets

Prevent leakage between your training and validation sets by keeping them separate and independent. Any overlap, such as duplicated records or preprocessing statistics computed on the full dataset, can lead to optimistic validation scores and a model that fails to generalize to unseen data.
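A common source of leakage is fitting preprocessing on the full dataset. This sketch standardizes a single feature using statistics learned from the training split only. The helper names and numbers are illustrative.

```python
import statistics

def fit_standardizer(values):
    """Learn the mean and (population) standard deviation from one split."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values) or 1.0  # avoid dividing by zero for a constant feature
    return mean, std

def transform(values, mean, std):
    """Apply previously learned statistics; nothing is re-estimated here."""
    return [(v - mean) / std for v in values]

train_x = [2.0, 4.0, 6.0, 8.0]
val_x = [5.0, 100.0]  # made-up values; 100.0 is an outlier seen only in validation

mean, std = fit_standardizer(train_x)      # statistics come from training data only
train_scaled = transform(train_x, mean, std)
val_scaled = transform(val_x, mean, std)   # validation reuses the training statistics

# Leaky alternative (avoid): fitting on train_x + val_x would let the validation
# outlier shift the mean and std used to train the model.
print(mean, val_scaled[0])  # 5.0 0.0
```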

TL;DR

A validation set is a crucial tool in machine learning for ensuring that a model is both accurate and generalizes well to unseen data. With the help of this glossary, you now have a better understanding of the role of the validation set in machine learning, how to choose an appropriate evaluation metric, and how to avoid common pitfalls.

Frequently Asked Questions

What is the purpose of a validation set?

A validation set is used to evaluate the performance of a trained model and select the best hyperparameters. It helps prevent overfitting and ensures the model generalizes well to unseen data.

How do I create a validation set?

To create a validation set, set aside around 20% of your training data. This subset is not used to fit the model, so its score reflects how the model performs on unseen data and can reveal overfitting.

What is the ideal size for a validation set?

There's no one-size-fits-all answer, but generally, setting aside around 20% of the total training data for the validation set is a good rule of thumb.

What metrics should I use to evaluate my model with the validation set?

Common metrics for classification include accuracy, precision, recall, F1 score, and ROC-AUC score; regression problems typically use metrics such as mean squared error or mean absolute error. Choose the metric that best reflects what matters for your specific problem.

Why is it important to avoid using the test set as a validation set?

The test set should only be used after model training and hyperparameter tuning are complete. Using it as a validation set biases the evaluation: because hyperparameters and model choices would have been tuned to it, the model would have indirectly seen the test set, and its test score would overestimate real-world performance.