Introduction

A chatbot can answer basic queries without much effort. Complex or fast-changing questions are another matter. Have you ever wondered why?

A standard chatbot relies on generic training data and has no way to pull in real, updated information. This is where a Retrieval-Augmented Generation (RAG) chatbot can take over.

By combining generative AI with real-time data retrieval, RAG chatbots can provide smarter, more accurate responses. They bridge the gap between static AI and dynamic, real-time information.

In this guide, you will learn what a RAG chatbot is, why it is different, and how you can build one yourself, without needing to be an AI expert.

What is a RAG Chatbot?

Imagine a chatbot that not only generates answers but actively retrieves up-to-date information to make its responses more accurate.

That is the essence of a RAG chatbot. Unlike standard bots, it combines retrieval and generative AI, enabling it to answer complex questions with precision and relevance.

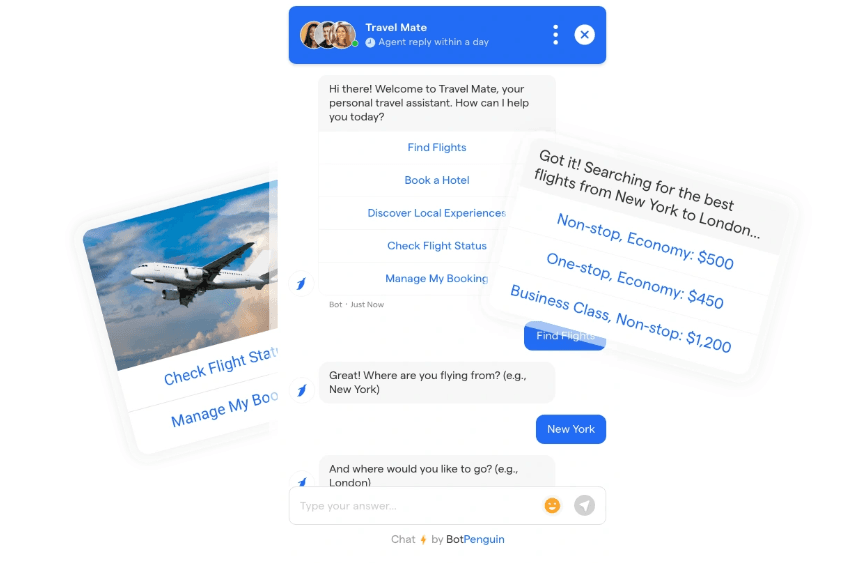

Let us understand this better with an example. Consider an airline’s customer service chatbot. A traveler asks if their flight is on time. A standard chatbot might give a generic response: “Please check your booking details or visit our flight status page.” This forces the user to do extra work.

A RAG chatbot, however, can fetch real-time flight data and respond with: “Your flight, XYZ123, is currently on schedule and will depart from Gate 14 at 6:30 PM. Let me know if you need help with anything else!”

By retrieving live updates instead of providing static responses, a RAG chatbot eliminates frustration and creates a seamless, efficient user experience.

How RAG Chatbots Combine Retrieval and Generative AI Techniques

A RAG chatbot operates through two distinct processes. First, it retrieves relevant data from external sources like databases, APIs, or the internet.

Then, it uses generative AI to craft meaningful responses based on the retrieved information.

This approach ensures answers are both factually correct and contextually appropriate. For instance, while traditional bots rely on pre-trained static knowledge, chatbots with RAG stay updated by pulling fresh data as needed.

This makes them ideal for dynamic applications such as news analysis or customer queries requiring real-time accuracy.

How Does a RAG Chatbot Work?

A RAG chatbot operates by seamlessly integrating retrieval and generative processes to deliver accurate, context-aware responses.

This dual mechanism allows it to combine external data with AI-driven conversational abilities, ensuring it stays dynamic and relevant.

However, while a RAG chatbot significantly reduces hallucinations, it does not eliminate them entirely.

The reliability of responses depends heavily on retrieval quality, meaning constant fine-tuning of retrieval mechanisms and knowledge sources is essential to maintain accuracy.

Retrieval Stage

The first step for a RAG chatbot is retrieving information from external sources such as structured databases, vector databases, or public APIs.

Vector search tools such as FAISS (a similarity-search library), Pinecone, and Weaviate (vector databases) store document embeddings, so the chatbot can fetch semantically similar passages instead of relying on exact keyword matches.

Some implementations also pull in real-time web content. Whatever the source, the retrieval mechanism's job is to find the most relevant and up-to-date data for the query.

Most RAG implementations do not retrieve data from the live internet unless explicitly designed with web scraping or API integration. Instead, they rely on pre-indexed knowledge bases to ensure faster and more reliable retrieval.

For example, in customer service, a RAG-based chatbot might query a vector database storing product specifications or stock details to provide accurate, up-to-date responses.

This distinguishes RAG chatbots from traditional models, which rely on static, pre-trained knowledge and lack the ability to retrieve updated data dynamically.
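To make the retrieval stage more concrete, here is a minimal sketch of semantic search using cosine similarity. The three-dimensional vectors and the `INDEX` contents are made up for illustration; in practice the embeddings would come from an embedding model and the search would be handled by a tool such as FAISS, Pinecone, or Weaviate.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def retrieve(query_vector, index, top_k=2):
    """Return the top_k (score, text) pairs most similar to the query."""
    scored = [(cosine_similarity(query_vector, vec), text) for vec, text in index]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]

# Hypothetical toy embeddings; real ones have hundreds of dimensions.
INDEX = [
    ([0.9, 0.1, 0.0], "Model X supports USB-C charging."),
    ([0.1, 0.9, 0.0], "Returns are accepted within 30 days."),
    ([0.0, 0.2, 0.9], "Model X is currently in stock."),
]

results = retrieve([1.0, 0.0, 0.1], INDEX, top_k=2)
for score, text in results:
    print(f"{score:.2f}  {text}")
```

The query vector points in nearly the same direction as the first document, so that passage ranks highest.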

Generation Stage

After retrieving data, the RAG chatbot enters the generation stage. Here, generative AI models like GPT process the retrieved information to generate coherent, context-appropriate responses.

Instead of simply relaying raw data, the RAG bot interprets the context of the user query. For instance, if asked about a product’s compatibility, a chatbot using RAG will retrieve the specs and articulate an answer tailored to the query, delivering clarity and relevance.
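One common way to hand retrieved information to a generative model is to place it in the prompt. The sketch below shows that idea only; the `build_prompt` helper and the prompt wording are assumptions for illustration, not a fixed format required by any model.

```python
def build_prompt(question, passages):
    """Combine retrieved passages and the user question into one prompt."""
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer the question using only the context below. "
        "If the context is not enough, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )

prompt = build_prompt(
    "Is Model X compatible with USB-C chargers?",
    ["Model X supports USB-C charging.", "Model X ships with a 20 W adapter."],
)
print(prompt)
```

Numbering the passages makes it easier for the model, and later the reader, to trace which source supports which part of the answer.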

Integration of Retrieval and Generation for Seamless Output

The true strength of a RAG chatbot lies in its ability to integrate these two stages seamlessly. By tightly coupling retrieval and generation, the RAG-based chatbot avoids generic answers and ensures each response is both data-backed and conversationally fluent.

This integration enables smoother, more accurate interactions. For example, when asked about policy updates, a chatbot using RAG will retrieve the latest policy documents, summarize the key points, and deliver them as user-friendly responses.

By seamlessly combining real-time data retrieval with AI-driven conversation, a RAG chatbot ensures users receive accurate, up-to-date, and context-aware responses, making interactions more informative, efficient, and engaging.

Now that we understand how a RAG chatbot combines retrieval and generation for intelligent interactions, let us simplify its workflow step by step to see how each stage functions in practice.

A Simplified Workflow of a RAG Chatbot

Building a RAG chatbot involves multiple stages, each playing a crucial role in delivering accurate, context-aware, and real-time responses. Here is how a RAG chatbot works in a simplified flow:

User Query

The chatbot receives a question or request from the user, which can range from a simple factual inquiry to a complex, multi-step question requiring detailed information.

Retrieval

The chatbot searches for relevant information using external knowledge sources such as vector databases (e.g., FAISS, Pinecone, Weaviate) for semantic similarity search, structured databases (e.g., SQL, NoSQL) for retrieving specific records, and APIs or internal document repositories for fetching real-time business data.

Data Filtering & Ranking

Once the chatbot retrieves data, it applies ranking and filtering mechanisms to ensure the most relevant and high-quality information is used.

This step ranks retrieved documents based on relevance to the user query, and removes redundant or low-confidence data to enhance response accuracy.
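The ranking and filtering step can be sketched in a few lines. The score threshold, the `rank_and_filter` name, and the sample passages are illustrative assumptions; production systems often use a dedicated re-ranking model instead of a fixed cutoff.

```python
def rank_and_filter(candidates, min_score=0.5, top_k=3):
    """Keep the best-scoring unique passages above a confidence threshold."""
    seen = set()
    kept = []
    for score, text in sorted(candidates, key=lambda c: c[0], reverse=True):
        # Normalize case and whitespace so near-identical copies are caught.
        normalized = " ".join(text.lower().split())
        if score < min_score or normalized in seen:
            continue
        seen.add(normalized)
        kept.append((score, text))
    return kept[:top_k]

candidates = [
    (0.91, "Refunds are issued within 5 business days."),
    (0.88, "refunds are issued within 5 business days."),  # redundant copy
    (0.42, "Our office is closed on public holidays."),    # low confidence
    (0.67, "Refund requests need an order number."),
]
ranked = rank_and_filter(candidates)
for score, text in ranked:
    print(f"{score:.2f}  {text}")
```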

Generation

A generative AI model (such as GPT-4, Gemini 1.5, or Hugging Face models) processes the retrieved, filtered data and crafts a coherent, context-aware response.

This step ensures that the chatbot doesn’t just relay raw data but interprets it for clarity, and the response remains natural, engaging, and relevant to the conversation.

Response Delivery

The RAG chatbot presents the final response to the user. Advanced implementations may also cite sources for transparency, summarize large documents into concise, easy-to-understand insights, and adapt responses based on user feedback for continuous improvement.
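Citing sources can be as simple as appending the titles of the retrieved documents to the reply. The `format_response` helper below is a hypothetical sketch of that idea, not a feature of any particular platform.

```python
def format_response(answer, sources):
    """Append a numbered source list to the generated answer."""
    if not sources:
        return answer
    lines = [answer, "", "Sources:"]
    lines += [f"{i}. {title}" for i, title in enumerate(sources, start=1)]
    return "\n".join(lines)

message = format_response(
    "Refunds are issued within 5 business days.",
    ["Refund Policy (updated March)", "Billing FAQ"],
)
print(message)
```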

By combining retrieval and generative capabilities, a RAG chatbot becomes far more powerful and adaptive than a standard chatbot. This dual-stage process is what makes RAG chatbots work in practical scenarios.

Key Differences Between RAG Chatbots and Standard AI Chatbots

The primary difference lies in adaptability. Standard AI bots rely on static, pre-trained models, which limits their ability to answer dynamic queries accurately.

While traditional bots can access external knowledge bases, they often rely on pre-programmed rules rather than dynamically retrieving relevant information in real-time.

In contrast, a RAG chatbot retrieves contextually relevant documents before generating a response, ensuring that the answers are not only AI-generated but also backed by up-to-date data.

However, the quality of a RAG chatbot depends heavily on the retrieval accuracy and the relevance of the retrieved documents. Without high-quality retrieval, even the best generative model may hallucinate or provide misleading responses.

Moreover, building a RAG chatbot doesn’t just mean better answers; it means using up-to-date resources for a competitive edge. For example, while a traditional bot might miss contextual nuances, a RAG-based chatbot integrates actual data to deliver nuanced and actionable insights.

This blend of retrieval and generation ensures RAG chatbots remain relevant, accurate, and far more effective than their standard counterparts.

For organizations that require AI-driven engagement with structured data integration, solutions like BotPenguin provide an accessible way to automate customer queries, lead generation, and support workflows without the complexities of large-scale retrieval models.

Examples of RAG Chatbots in Action

Businesses across various industries are increasingly adopting RAG-based chatbots to enhance efficiency, improve customer experiences, and streamline operations.

Below are some key applications where a RAG chatbot provides a competitive advantage.

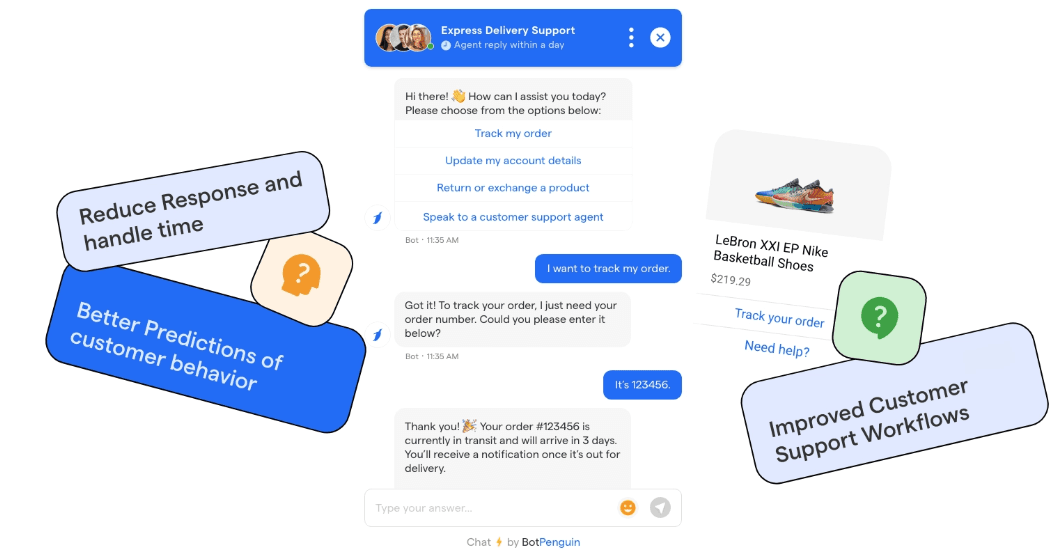

Customer Support

A RAG chatbot can handle complex customer service queries by dynamically retrieving relevant information from product manuals, internal knowledge bases, or support tickets.

Unlike traditional chatbots that rely on pre-scripted responses, a RAG-based chatbot can:

- Retrieve real-time policy updates and troubleshooting steps.

- Provide multilingual support by pulling translated documentation when needed.

- Assist human agents by summarizing past customer interactions for better issue resolution.

Example: A telecom provider uses a RAG chatbot to answer billing inquiries, retrieve contract details, and suggest best-fit plans based on real-time offers.

Research Assistants

Researchers rely on RAG chatbots to process large datasets, retrieve relevant papers, and generate concise insights. Instead of manually searching through hundreds of academic articles, a RAG chatbot can:

- Summarize scientific studies with citations.

- Retrieve the latest market trends from structured datasets.

- Generate comparative analyses between different research findings.

Example: A pharmaceutical company employs a RAG chatbot to scan medical journals, extract key findings, and assist researchers in drug discovery.

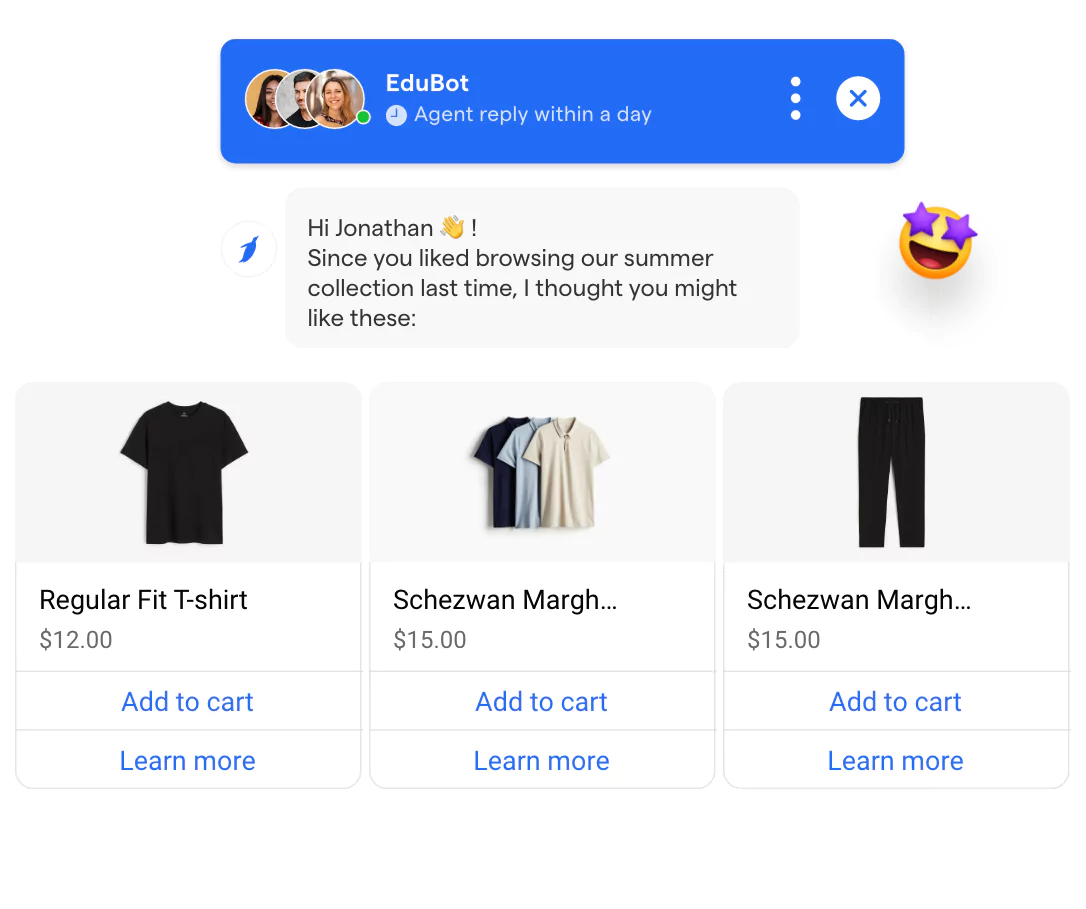

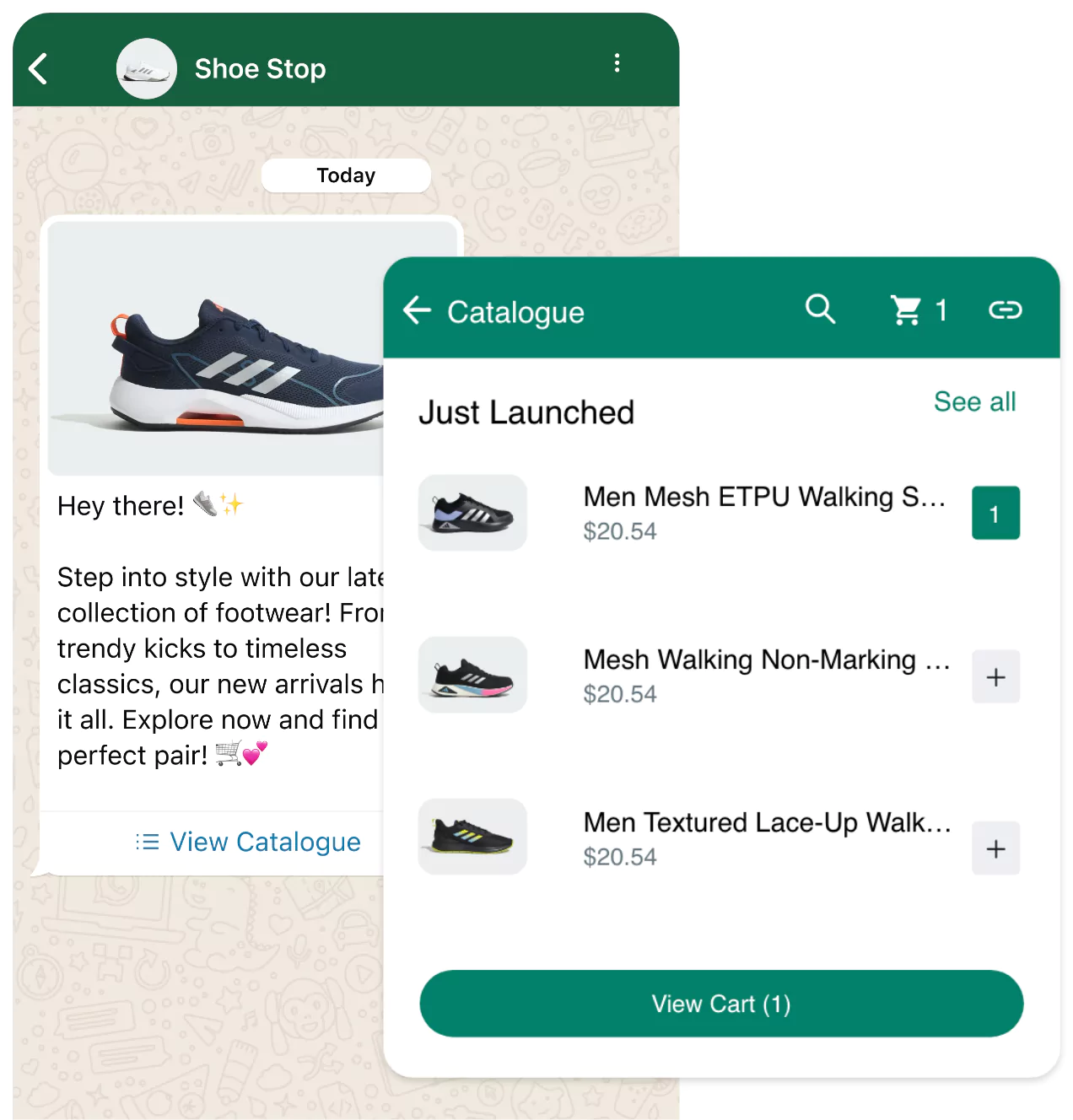

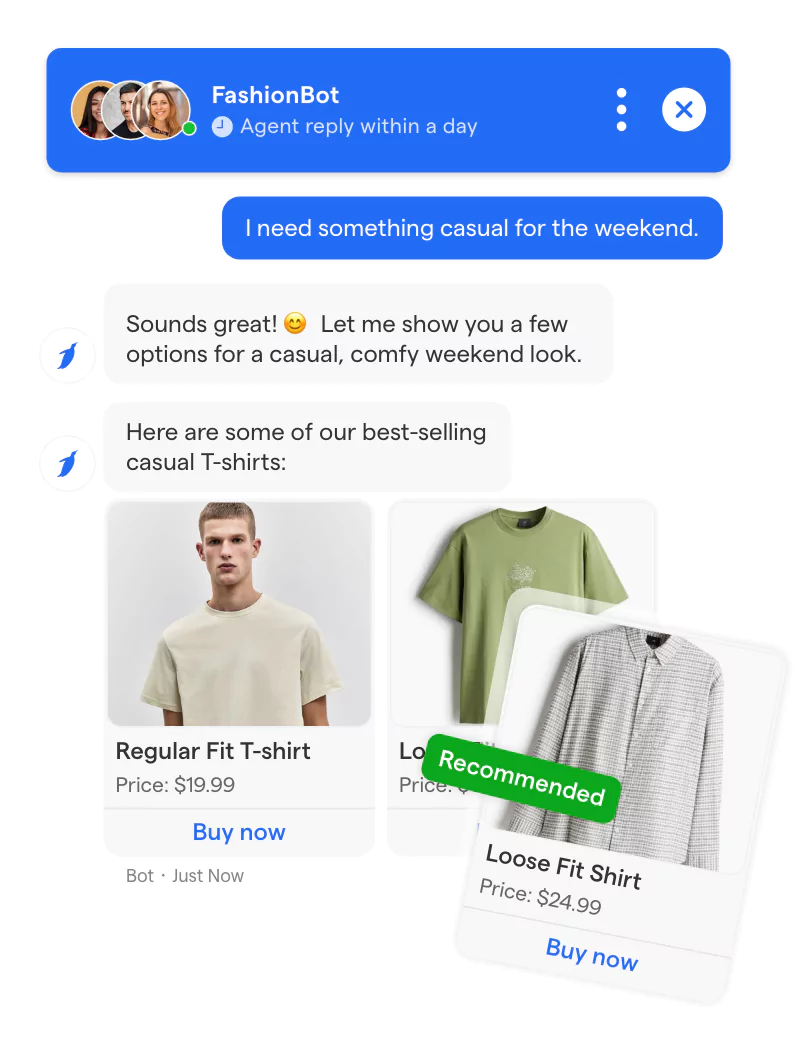

E-Commerce & Retail

In online retail, a RAG chatbot enhances user experience by retrieving live inventory updates, pricing details, and delivery timelines. Unlike traditional bots, which rely on static FAQs, a RAG-based chatbot can:

- Fetch stock availability in real time.

- Recommend personalized products based on browsing history.

- Answer warranty and return policy questions by retrieving updated documentation.

Example: An e-commerce platform integrates a RAG chatbot that provides real-time product availability, suggests personalized bundles, and fetches customer reviews to help shoppers make informed decisions.

By applying RAG chatbots in these fields, companies can enhance efficiency, reduce manual workload, and improve overall user satisfaction while delivering real-time, data-backed insights.

Benefits of Building a RAG Chatbot

A RAG chatbot is not just another AI tool; it is a smarter, more efficient way to handle dynamic and complex conversations.

By combining retrieval and generative AI, building a RAG chatbot unlocks a range of benefits that traditional chatbots simply can’t match. Let us explore a few benefits below:

Improved Accuracy and Relevance in Responses

A RAG LLM chatbot pulls real-time, relevant data from trusted sources before crafting its responses. This ensures that the information it delivers is both accurate and up-to-date, unlike static AI systems that rely solely on pre-trained data.

By combining retrieval with advanced language models, a RAG LLM chatbot enhances contextual understanding and delivers more precise, well-informed answers.

Ability to Handle Complex Queries with Ease

Complex questions often stump traditional bots. A RAG bot, however, thrives in such situations.

By retrieving external knowledge and interpreting it through generative AI, a RAG chatbot delivers precise answers even for nuanced or technical queries.

Scalability for Different Industries and Use Cases

Whether in healthcare, e-commerce, or customer service, chatbots with RAG adapt effortlessly.

A RAG-based chatbot can scale its capabilities, retrieving and generating content tailored to specific industry needs, from legal advice to troubleshooting technical issues.

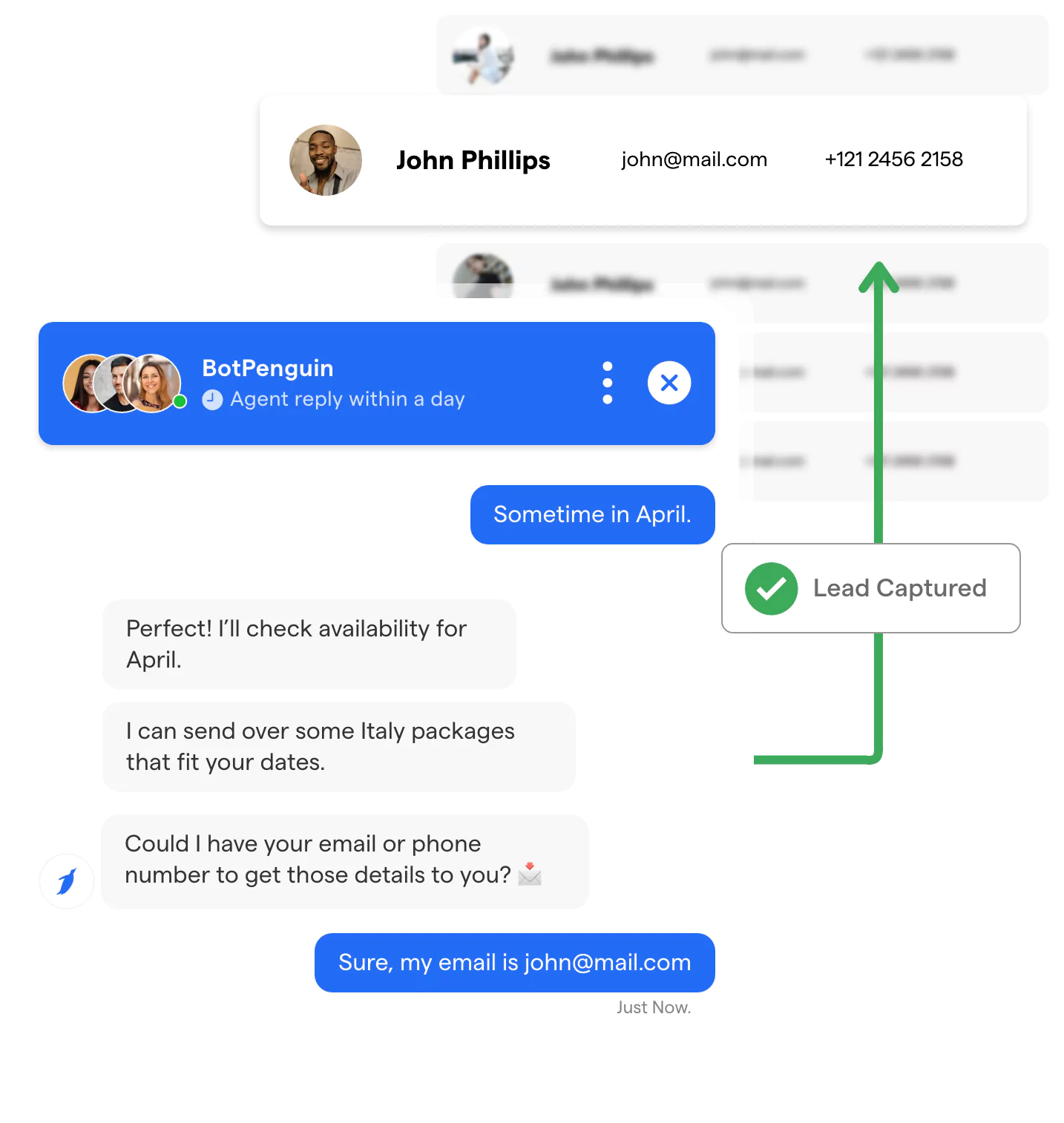

Enhanced User Satisfaction with Context-Aware Replies

Users demand personalized, context-aware interactions. A chatbot using RAG analyzes the query context, retrieves the right data, and delivers conversationally fluent responses. This level of relevance dramatically boosts user satisfaction and engagement.

With its ability to retrieve real-time information, handle complex queries, and provide context-aware responses, a RAG-based chatbot offers a powerful upgrade over traditional bots, making interactions more accurate, scalable, and user-friendly across industries.

Tools and Technologies Needed to Build a RAG Chatbot

Creating a RAG chatbot requires the right blend of tools, frameworks, and data sources.

With the right technology stack, building a RAG-based chatbot becomes a streamlined process that delivers powerful and accurate results. Let us explore more about this:

Key Tools

To build an effective RAG-based chatbot, you need the right tools. The main categories are listed below:

- AI Frameworks: Platforms like OpenAI, Hugging Face, and LangChain are essential for implementing the generative AI aspect of a RAG chatbot. They provide pre-trained models and libraries to accelerate development.

- Retrieval Systems: For accessing and ranking external data, systems like Elasticsearch, Pinecone, or FAISS are commonly used. These tools help the RAG bot efficiently retrieve the most relevant information.

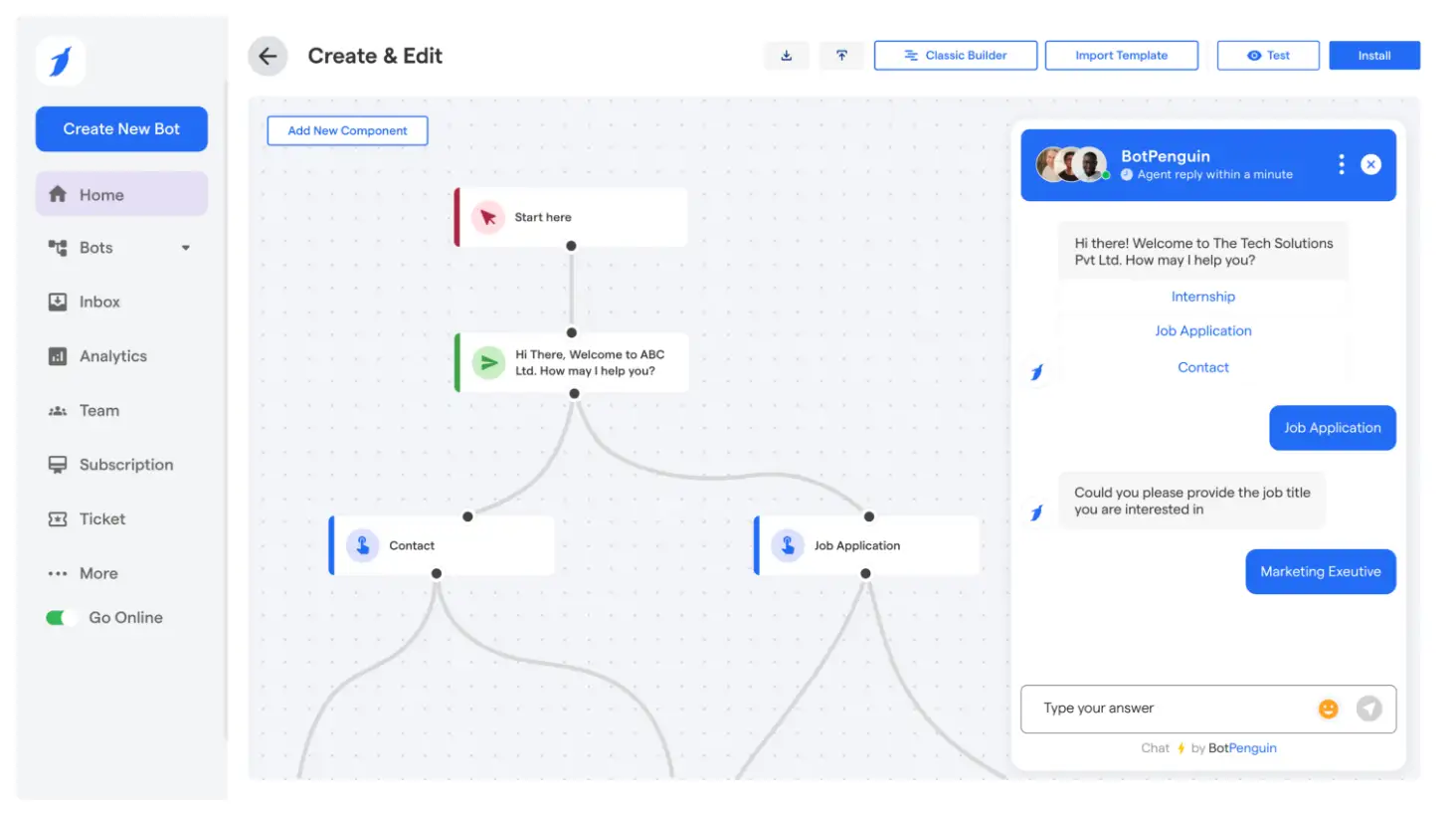

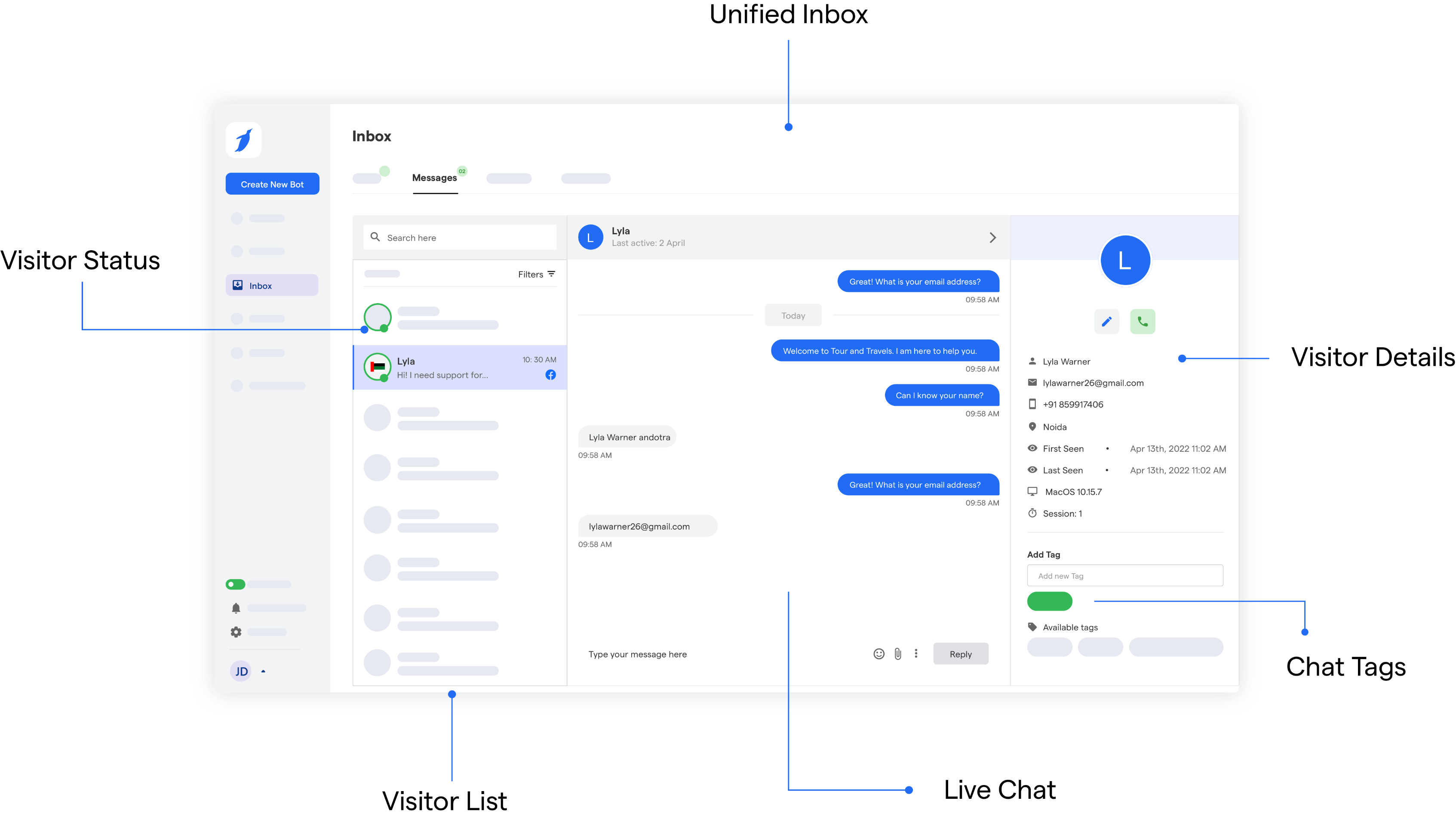

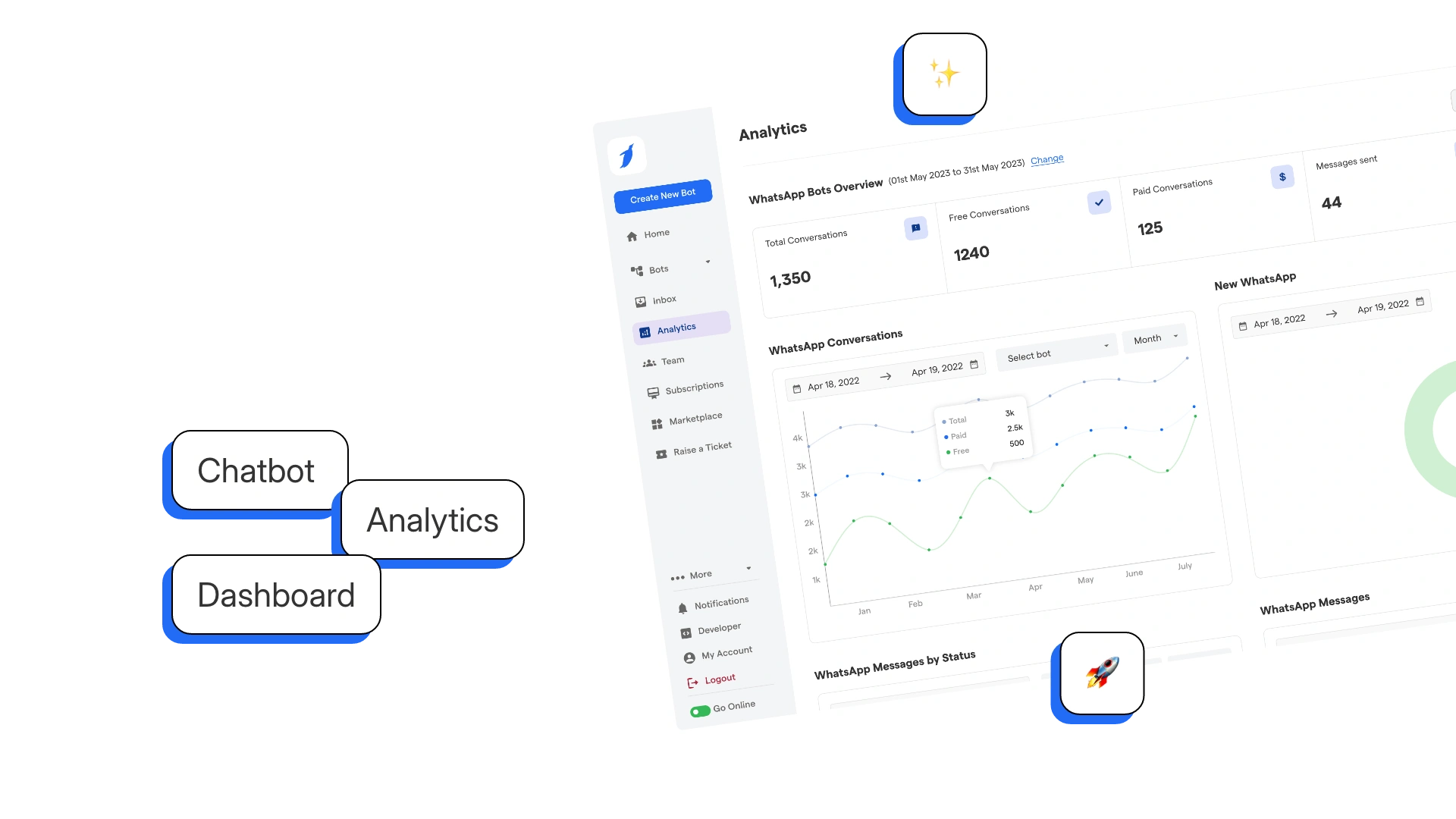

- Chatbot Platforms: An easy-to-use AI agent and chatbot-building platform, BotPenguin integrates AI capabilities to simplify the process of building a RAG chatbot, even for users without extensive technical expertise.

With the right AI frameworks, retrieval systems, and chatbot platforms, you can create a RAG chatbot that delivers accurate, real-time, and context-aware interactions.

Data Sources

A RAG chatbot relies on diverse data sources to retrieve accurate and relevant information in real-time. These sources can be categorized as follows:

- Structured Databases: Databases like SQL or enterprise data repositories are vital for accessing organized data that supports a chatbot using RAG.

- Unstructured Databases: Tools like Elasticsearch can handle unstructured data such as PDFs, articles, or reports to enhance the knowledge base of chatbots with RAG.

- External APIs: APIs from Wikipedia or enterprise systems enable real-time access to dynamic knowledge, crucial for creating a RAG-based chatbot that handles diverse queries.

By using structured databases, unstructured data, and external APIs, a RAG-based chatbot ensures its responses remain accurate, comprehensive, and up-to-date, enhancing user experience across various applications.

Languages and Frameworks

Choosing the right programming languages and frameworks is essential for building a robust and efficient RAG chatbot. A few of them are given below:

- Python: The preferred programming language for most AI development, including RAG chatbots, thanks to its robust libraries and ease of use.

- LangChain: A powerful framework designed to streamline RAG chatbot development by managing retrieval and response generation workflows, enabling seamless communication between vector databases and large language models.

- FAISS, Pinecone, and Weaviate: These vector search tools (FAISS is a similarity-search library, while Pinecone and Weaviate are vector databases) play a critical role in a RAG chatbot by efficiently storing and retrieving high-dimensional embeddings, which supports faster and more relevant responses.

- Hugging Face Models: These pre-trained and customizable LLMs enhance RAG chatbot capabilities by delivering context-aware, conversational responses while integrating smoothly with retrieval mechanisms to minimize inaccuracies.

Selecting the right languages and frameworks ensures a seamless development process, enabling a RAG chatbot to deliver accurate, intelligent, and efficient responses.

Tips for Selecting the Right Tools for Your Project

The right choice of tools is crucial for building an efficient and high-performing RAG-based chatbot. A few tips are given below to help you in your selection.

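- Match the tools to your use case. For instance, a managed service like Pinecone saves you from running search infrastructure yourself, while an open-source library like FAISS suits similarity search that you host and tune on your own hardware.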

- Choose frameworks that are compatible with your data. A RAG chatbot working with unstructured data may need both AI and retrieval tools optimized for NLP tasks.

- Consider scalability. If the RAG chatbot application needs to grow, choose cloud platforms like AWS or Azure that can scale with it.

By combining the right tools, technologies, and frameworks, building a RAG LLM chatbot becomes both manageable and highly rewarding. The result is a dynamic, context-aware system that stands out in the AI chatbot landscape.

Step-by-Step Guide to Building a RAG Chatbot

Building a RAG chatbot involves a systematic approach that combines data retrieval and generative AI.

By breaking the process into well-defined steps, you can create a chatbot that is both efficient and highly accurate. Here is a detailed guide to help you through each stage of building a RAG chatbot.

Step 1

Define Use Case and Scope

The first step is to decide what problem your RAG chatbot will address. Is it designed for customer support, assisting researchers, or handling e-commerce queries?

Clearly define its purpose and scope. Platforms like BotPenguin make this process easier by providing industry-specific templates and flexible chatbot-building options.

For example, a customer service RAG chatbot might focus on resolving technical issues or product inquiries, while a research assistant bot could retrieve and summarize articles.

Defining the use case will help you choose the right tools, data sources, and AI models.

Step 2

Gather Data

Next, identify the data sources your RAG-based chatbot will need, such as structured databases, unstructured documents, or external APIs.

To make your data sources accessible for retrieval, follow these steps:

- Connect structured databases using SQL queries or API endpoints.

- Index unstructured data with Elasticsearch or Pinecone for efficient searching.

- Set up API connections for real-time data retrieval from external sources like Wikipedia or business systems.

Ensure the data is relevant, accurate, and sufficient to cover the scope of the chatbot’s use case.
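As a rough sketch of the first bullet, the snippet below connects to a structured database with a SQL query. It uses Python's built-in `sqlite3` module with an in-memory database; the `products` table, its columns, and the `lookup_stock` helper are hypothetical stand-ins for whatever schema your business system exposes.

```python
import sqlite3

# In-memory database standing in for a real product database (hypothetical schema).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (sku TEXT PRIMARY KEY, name TEXT, stock INTEGER)")
conn.executemany(
    "INSERT INTO products VALUES (?, ?, ?)",
    [("X-100", "Model X", 12), ("Y-200", "Model Y", 0)],
)

def lookup_stock(connection, sku):
    """Fetch one product record as a dict, or None if the SKU is unknown."""
    row = connection.execute(
        "SELECT name, stock FROM products WHERE sku = ?", (sku,)
    ).fetchone()
    if row is None:
        return None
    return {"name": row[0], "stock": row[1]}

record = lookup_stock(conn, "X-100")
print(record)
```

The `?` placeholder keeps user-supplied values out of the SQL string, which matters once the SKU comes from a chat message rather than from your own code.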

Step 3

Set Up the Retrieval System

To allow the chatbot to fetch relevant information, set up a robust retrieval system. You can do the following:

- Use Elasticsearch or Pinecone to enable fast, relevant searches.

- Connect these systems to your database or document storage.

- Build an API layer that allows your chatbot to fetch the latest relevant information dynamically.

These tools index your data, enabling the chatbot using RAG to quickly locate the most pertinent information for any query. This is a crucial step to ensure fast and reliable performance.
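The API layer from the last bullet can start out as a single request handler. The sketch below is an assumption-heavy illustration: `handle_search_request`, the `DOCUMENTS` store, and the keyword-overlap scoring are all hypothetical, and in a real setup the search itself would be delegated to Elasticsearch or Pinecone while a web framework would route HTTP requests to the handler.

```python
import json

# Tiny in-memory document store standing in for a real search index.
DOCUMENTS = {
    "doc-1": "Model X supports USB-C charging.",
    "doc-2": "Returns are accepted within 30 days of delivery.",
}

def search_documents(query, limit=3):
    """Naive keyword search: score each document by shared words with the query."""
    words = set(query.lower().split())
    hits = []
    for doc_id, text in DOCUMENTS.items():
        overlap = len(words & set(text.lower().rstrip(".").split()))
        if overlap:
            hits.append({"id": doc_id, "text": text, "score": overlap})
    hits.sort(key=lambda h: h["score"], reverse=True)
    return hits[:limit]

def handle_search_request(body):
    """Parse a JSON request body and return a JSON response body."""
    try:
        payload = json.loads(body)
    except json.JSONDecodeError:
        return json.dumps({"error": "body must be valid JSON"})
    query = payload.get("query") if isinstance(payload, dict) else None
    if not isinstance(query, str) or not query.strip():
        return json.dumps({"error": "'query' must be a non-empty string"})
    return json.dumps({"results": search_documents(query)})

print(handle_search_request('{"query": "returns within 30 days"}'))
```

Keeping the handler separate from the search function means you can swap the naive search for a real index later without changing what the chatbot calls.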

Step 4

Create the Chatbot

Now that your retrieval system is in place, the next step is to build the chatbot itself using BotPenguin. This includes:

- Sign up for BotPenguin and create a new chatbot.

- Use BotPenguin’s AI capabilities to handle natural language processing and response generation.

- Customize the bot’s workflow and conversational flow based on your needs.

With BotPenguin’s AI-driven capabilities, your chatbot is ready to understand queries, retrieve relevant information, and deliver natural, context-aware responses.

Step 5

Implement Generative AI

To enable your chatbot to generate intelligent responses, you need to implement generative AI.

BotPenguin simplifies this process with its built-in AI models, eliminating the need for manual integration with frameworks like OpenAI’s GPT or Hugging Face Transformers. Here is how to set it up:

- Utilize BotPenguin’s AI capabilities to generate human-like responses.

- Ensure conversational coherence by using BotPenguin’s natural language processing engine.

- Customize response generation through fine-tuning, allowing the chatbot to adapt based on user interactions and business needs.

- Train the bot with domain-specific data to improve accuracy and contextual relevance.

With these enhancements, your RAG-based chatbot can deliver well-structured, meaningful, and highly relevant responses, ensuring an engaging user experience.

Step 6

Combine Retrieval and Generation

Once your external retrieval system is set up via APIs, you need to integrate it with BotPenguin’s AI response generation. Here is how the process works:

- The user query is processed by BotPenguin’s chatbot engine.

- The retrieval system fetches relevant information from structured or unstructured sources.

- The retrieved data is fed into BotPenguin’s AI engine, which crafts a natural, context-aware response.

- The chatbot delivers the response in a conversational manner.

This integration is what makes chatbots with RAG superior, combining real-time data access with natural language capabilities.
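The four steps above can be sketched as one function that wires a retriever to a generator. This is a generic illustration rather than BotPenguin's actual API: `answer_query`, `fake_retrieve`, and `fake_generate` are hypothetical names, and the two stand-in functions would be replaced by calls to your retrieval API and your generative model.

```python
from typing import Callable, List

def answer_query(
    question: str,
    retrieve: Callable[[str], List[str]],
    generate: Callable[[str], str],
) -> str:
    """Run one RAG turn: retrieve passages, build a prompt, generate a reply."""
    passages = retrieve(question)
    if not passages:
        return "I could not find information on that. Could you rephrase?"
    context = "\n".join(f"- {p}" for p in passages)
    prompt = f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    return generate(prompt)

# Stand-in for a real retrieval API (hypothetical data).
def fake_retrieve(question):
    knowledge = {"refund": "Refunds are issued within 5 business days."}
    return [text for key, text in knowledge.items() if key in question.lower()]

# Stand-in for a real generative model: it echoes the first context line.
def fake_generate(prompt):
    first_fact = prompt.splitlines()[1].lstrip("- ")
    return f"According to our records: {first_fact}"

reply = answer_query("How long does a refund take?", fake_retrieve, fake_generate)
print(reply)
```

Passing the retriever and generator in as arguments keeps the flow testable, since each piece can be swapped for a stub.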

Step 7

Test and Optimize

Test the RAG chatbot to evaluate its performance. You can do the following:

- Run test queries to check if the chatbot retrieves and generates accurate responses.

- Optimize retrieval accuracy by fine-tuning search algorithms.

- Improve response fluency with BotPenguin’s AI model training options.

Regular testing and optimization ensure that your RAG bot delivers precise, context-aware, and engaging responses, enhancing the overall user experience.
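One simple way to run test queries against the retrieval side is to measure how often the expected document shows up in the top results. The sketch below computes that hit rate; the document IDs, test cases, and `toy_retrieve` function are invented for illustration.

```python
def hit_rate_at_k(test_cases, retrieve, k=3):
    """Fraction of test queries whose expected document appears in the top k."""
    if not test_cases:
        return 0.0
    hits = 0
    for query, expected_id in test_cases:
        if expected_id in retrieve(query)[:k]:
            hits += 1
    return hits / len(test_cases)

# Hypothetical retriever returning document IDs in ranked order.
def toy_retrieve(query):
    ranked = {
        "reset password": ["kb-12", "kb-07"],
        "refund policy": ["kb-03", "kb-21"],
        "shipping time": ["kb-44"],
    }
    return ranked.get(query, [])

cases = [
    ("reset password", "kb-12"),
    ("refund policy", "kb-21"),
    ("shipping time", "kb-09"),
]
score = hit_rate_at_k(cases, toy_retrieve, k=1)
print(f"hit rate at 1: {score:.2f}")
```

Re-running the same test set after each change to the search settings shows whether retrieval accuracy is actually improving.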

Step 8

Deploy and Monitor Performance

Finally, deploy your RAG chatbot.

- Deploy your chatbot on websites, WhatsApp, or other channels through BotPenguin.

- Continuously monitor performance and refine integrations for better accuracy.

Ensure the interface is user-friendly and supports the chatbot’s functionality. Deployment marks the point where your RAG chatbot becomes operational and ready for user interaction.
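Monitoring can begin with a few basic numbers, such as response latency and how often retrieval comes back empty. The `TurnMonitor` class below is a hypothetical sketch of that idea; a production deployment would more likely send these metrics to a logging or analytics tool.

```python
import time

class TurnMonitor:
    """Collect simple per-turn metrics for a deployed chatbot."""

    def __init__(self):
        self.latencies = []
        self.empty_retrievals = 0

    def record(self, handler, question):
        """Call handler(question) -> (reply, passages) and log metrics."""
        start = time.perf_counter()
        reply, passages = handler(question)
        self.latencies.append(time.perf_counter() - start)
        if not passages:
            self.empty_retrievals += 1
        return reply

    def summary(self):
        turns = len(self.latencies)
        avg = sum(self.latencies) / turns if turns else 0.0
        return {
            "turns": turns,
            "avg_latency_s": avg,
            "empty_retrievals": self.empty_retrievals,
        }

# Stand-in for the real chatbot pipeline (hypothetical).
def demo_handler(question):
    passages = ["Store hours are 9 to 5."] if "hours" in question else []
    reply = passages[0] if passages else "Sorry, I could not find that."
    return reply, passages

monitor = TurnMonitor()
monitor.record(demo_handler, "What are your hours?")
monitor.record(demo_handler, "Do you sell gift cards?")
stats = monitor.summary()
print(stats)
```

A rising count of empty retrievals is a useful early signal that the knowledge base has gaps.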

By following these steps, you can successfully build a high-performing RAG chatbot that seamlessly combines retrieval and generative AI.

With continuous monitoring and optimization, your chatbot will evolve to provide even more accurate, context-aware, and engaging responses, enhancing user satisfaction and driving better interactions.

Challenges and Limitations of RAG Chatbots

While RAG chatbots provide more accurate, data-driven responses than traditional AI bots, they come with their own challenges and limitations.

Understanding these constraints is key to optimizing their performance and ensuring a seamless user experience.

Common Challenges in Retrieval

Retrieval is a critical step for a RAG chatbot, but it often faces challenges like:

- Data Relevancy & Ranking Issues: Ensuring that the retrieved information is precise and contextually relevant can be difficult, especially when dealing with large, unstructured, or outdated datasets. Poor ranking algorithms may lead to irrelevant responses.

- Latency & Processing Time: The retrieval process must balance speed and accuracy. Latency issues arise when querying large vector databases, especially in real-time applications like customer service or financial analysis. Optimizing indexing and query speed is essential for improving response times.

- Dependence on Data Quality: The accuracy of a RAG chatbot depends entirely on the quality of the knowledge base. If the data contains errors, biases, or gaps, the chatbot may return misleading or incomplete answers.

Importance of Regular Updates to the Knowledge Base

A RAG chatbot is only as good as its knowledge base. Without frequent updates, the chatbot can:

- Provide outdated or incorrect responses, reducing user trust.

- Fail to retrieve the latest information, making it ineffective in dynamic industries like finance, healthcare, or legal tech.

- Struggle with new terminologies, regulations, or product details if the data is not refreshed regularly.

To maintain reliability, businesses must:

- Implement automated data pipelines for continuous updates.

- Regularly fine-tune retrieval models to improve accuracy and ranking mechanisms.

- Ensure data validation and quality control to eliminate inconsistent or biased information.
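A data validation step in such a pipeline might look like the sketch below, which rejects empty or stale records before they reach the index. The record fields (`id`, `text`, `updated`), the 90-day limit, and the `validate_records` name are assumptions chosen for illustration.

```python
from datetime import date, timedelta

def validate_records(records, today, max_age_days=90):
    """Split records into (valid, rejected) based on completeness and freshness."""
    valid, rejected = [], []
    cutoff = today - timedelta(days=max_age_days)
    for rec in records:
        text = rec.get("text", "").strip()
        updated = rec.get("updated")
        if not text:
            rejected.append((rec.get("id"), "empty text"))
        elif updated is None or updated < cutoff:
            rejected.append((rec.get("id"), "stale or undated"))
        else:
            valid.append(rec)
    return valid, rejected

records = [
    {"id": "a", "text": "Plan prices for 2024.", "updated": date(2024, 5, 1)},
    {"id": "b", "text": "", "updated": date(2024, 5, 2)},
    {"id": "c", "text": "Old roaming policy.", "updated": date(2023, 1, 15)},
]
valid, rejected = validate_records(records, today=date(2024, 6, 1))
print([r["id"] for r in valid], rejected)
```

Logging the rejection reason for each record makes it easier to fix the source data instead of silently dropping it.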

Limitations in Generative AI

While RAG chatbots improve AI-generated responses, generative AI models still have inherent limitations:

- Hallucinations (Fabricated Information): If retrieved data is insufficient, ambiguous, or missing, a RAG chatbot may generate plausible but incorrect responses.

This is a major issue in legal, medical, and financial AI applications, where misinformation can have severe consequences.

- Context Misinterpretation & Inaccuracy: Even with accurate retrieval, the LLM may misinterpret user intent, leading to irrelevant or poorly structured responses.

For example, if a RAG chatbot retrieves information about "investment risks" but misinterprets the user's risk tolerance, the response may not align with the user’s expectations.

- High Computational Costs: Running RAG chatbots requires powerful infrastructure due to the retrieval and generative processes happening in real-time.

This makes scalability a challenge, especially for businesses with limited resources.
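One way to reduce the hallucination risk described above is to skip generation when retrieval finds nothing convincing. The sketch below shows that guard; the 0.6 threshold, the `guarded_answer` name, and the stub generator are assumptions, and the right cutoff depends on how your retrieval scores are scaled.

```python
FALLBACK = "I don't have enough information to answer that reliably."

def guarded_answer(question, scored_passages, generate, min_score=0.6):
    """Only call the generator when retrieval found confident evidence."""
    evidence = [text for score, text in scored_passages if score >= min_score]
    if not evidence:
        return FALLBACK
    return generate(question, evidence)

# Stand-in for a real generative model (hypothetical).
def stub_generate(question, evidence):
    return f"Based on {len(evidence)} source(s): {evidence[0]}"

confident = guarded_answer("What is the fee?", [(0.82, "The fee is $5.")], stub_generate)
unsure = guarded_answer("What is the fee?", [(0.31, "Fees may vary.")], stub_generate)
print(confident)
print(unsure)
```

In high-stakes domains such as legal, medical, or financial support, an honest fallback is usually safer than a fluent guess.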

Despite these challenges, careful optimization, regular data updates, and fine-tuning can significantly enhance a RAG chatbot’s performance. When you address these limitations proactively, you can ensure a more reliable, accurate, and efficient chatbot that delivers a seamless user experience.

Future of RAG Chatbots

The future of RAG chatbots looks promising as emerging trends and technological advancements push their capabilities further. Here is what lies ahead for this innovative AI approach.

Emerging Trends in Retrieval-Augmented AI

Advances in retrieval systems, such as improved ranking algorithms and faster indexing, are making it more efficient to build a RAG chatbot.

Integration with advanced APIs and real-time data feeds is enabling chatbots with RAG to handle increasingly complex queries.

Potential Improvements in Scalability and Integration

Scalability remains a focus. Enhanced cloud-based solutions and modular architectures will make RAG-based chatbot deployment seamless for businesses of all sizes.

Better integration with existing tools, such as CRM systems or analytics platforms, will expand their use cases across industries.

Predictions for the Role of RAG Chatbots Across Industries

In the future, RAG chatbots will likely become common in industries such as healthcare, e-commerce, and education.

These bots will handle intricate queries, provide instant access to vast data, and support decision-making processes.

As retrieval-augmented AI continues to advance, RAG chatbots will become even more sophisticated, providing faster, more accurate, and context-aware interactions.

With ongoing innovations in retrieval, scalability, and integration, these chatbots are set to revolutionize the way businesses and users access information, making them an indispensable part of the digital landscape.

Conclusion

RAG chatbots represent a new standard in AI-driven interactions, combining real-time data retrieval with intelligent response generation. By understanding their benefits, challenges, and tools, businesses can create chatbots that are accurate, dynamic, and user-friendly.

For those looking to simplify the process, platforms like BotPenguin offer a no-code solution. With BotPenguin, businesses can easily build and deploy RAG chatbots without needing extensive technical expertise.

It streamlines the integration of AI capabilities, enabling companies to enhance customer support, boost productivity, and scale across industries.

Embracing tools like BotPenguin can make the journey to smarter chatbots seamless and accessible.

Frequently Asked Questions (FAQs)

What tools are needed to build a RAG chatbot?

Key tools include AI frameworks like OpenAI or Hugging Face, retrieval systems like Elasticsearch or Pinecone, and AI agent and chatbot platforms like BotPenguin for easy deployment. Python and LangChain are common choices for development.

Can I build a RAG chatbot without coding expertise?

Yes, platforms like BotPenguin provide no-code solutions for creating and deploying RAG chatbots, making them accessible even for non-technical users to enhance customer interactions and business operations.

How can I ensure my RAG chatbot retrieves accurate information?

Regularly updating your chatbot’s knowledge base, fine-tuning retrieval algorithms, and integrating high-quality data sources ensure your RAG chatbot delivers accurate and reliable responses.

What industries benefit the most from a RAG chatbot?

A RAG chatbot is highly effective in industries like customer support, healthcare, e-commerce, research, and education. It helps businesses provide precise answers, automate workflows, and improve user engagement.

Can a RAG chatbot be integrated with existing business tools?

Yes, a RAG chatbot can integrate with CRM systems, customer support platforms, and analytics tools. This enhances its ability to fetch, process, and generate responses based on business-specific data.