Large language models have transformed the area of natural language processing (NLP) by enabling unprecedented comprehension and generation of coherent text.

Among the architectures behind these models, the Transformer stands out, having proven highly effective across a wide range of NLP tasks.

The Transformer is a deep learning architecture that has reshaped NLP. It is built on a self-attention mechanism that lets it handle long-range dependencies in text, making it well suited to tasks such as language translation, summarization, and question answering.

The Transformer model comprises several fundamental components that interact to process text data. A study by Stanford University found that Transformer models outperformed previous NLP models by up to 10% on a variety of NLP tasks, including machine translation, text summarization, and question answering.

This blog explores what Transformers in NLP are and how they work.

What are NLP Transformer Models?

NLP Transformer Models are a groundbreaking type of neural network architecture that revolutionizes how machines understand and process language.

Earlier approaches such as Recurrent Neural Networks (RNNs) and Convolutional Neural Networks (CNNs) achieved strong results, but Transformer models bring a new level of efficiency and performance.

The Power of the Attention Mechanism

The attention mechanism allows the model to selectively focus on different parts of the input sequence, making it highly effective at capturing long-range dependencies and understanding the context of a sentence.

Why are Transformer Models a Game-Changer in NLP?

Transformer models are crucial in Natural Language Processing (NLP) for several reasons:

Effective Language Representation

Transformers excel at capturing contextual relationships in language, making them proficient at understanding and generating text. This contextual understanding is essential for many NLP tasks.

Pre-training and Fine-tuning

Pre-trained transformer models, like BERT and GPT, can be fine-tuned for various NLP tasks, reducing the need for task-specific feature engineering and significantly improving performance.

Versatility

Transformers can handle a wide range of NLP tasks, including text classification, translation, sentiment analysis, and summarization, making them applicable across many industries.

Multimodal Capabilities

Transformer architectures have been extended beyond text to other modalities, such as images, enabling complex tasks like image captioning and cross-modal retrieval.

How do NLP Transformer Models Work?

NLP Transformer models, like BERT and GPT, work through a neural network architecture that excels at understanding and generating natural language. Here's a simplified overview of how they work:

Tokenization

The input text is split into smaller units called tokens, such as words or subwords. Each token is then mapped to an integer ID from the model's vocabulary.
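To make the idea concrete, here is a minimal sketch of greedy longest-match subword tokenization in the style of WordPiece, where `##` marks a piece that continues a word. The vocabulary `VOCAB` and the helper names `tokenize_word` and `encode` are hypothetical and far smaller than any real model's; production tokenizers are trained on large corpora and handle punctuation and normalization much more carefully.

```python
# Hypothetical toy vocabulary (not from any real model).
# "##" marks a subword that continues a word, following the WordPiece convention.
VOCAB = {"[UNK]": 0, "transform": 1, "##ers": 2, "##er": 3, "are": 4,
         "power": 5, "##ful": 6, "models": 7}


def tokenize_word(word: str, vocab: dict[str, int]) -> list[str]:
    """Split one word into subwords using greedy longest-match-first."""
    tokens = []
    start = 0
    while start < len(word):
        end = len(word)
        piece = None
        # Try the longest remaining substring first, then shrink it from the right.
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate
            if candidate in vocab:
                piece = candidate
                break
            end -= 1
        if piece is None:
            return ["[UNK]"]  # no subword matched, so the whole word is unknown
        tokens.append(piece)
        start = end
    return tokens


def encode(text: str, vocab: dict[str, int]) -> list[int]:
    """Lowercase, split on whitespace, tokenize each word, and map subwords to IDs."""
    tokens = [t for word in text.lower().split() for t in tokenize_word(word, vocab)]
    return [vocab[t] for t in tokens]
```

With this toy vocabulary, `tokenize_word("transformers", VOCAB)` splits the word into `"transform"` and `"##ers"`, so a word the vocabulary never saw whole can still be represented from known pieces.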

Embedding

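These token IDs are converted into dense, high-dimensional vectors by an embedding layer. This step lets the model represent tokens in a continuous vector space that captures their semantic meaning. Because self-attention on its own has no notion of word order, positional encodings are added to these embeddings so the model knows where each token appears in the sequence.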
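A minimal sketch of the embedding step follows: each token ID selects one row of an embedding table, and a position-dependent vector is added to it. The positional encoding shown is the sinusoidal scheme from the original Transformer paper; many models, including BERT, learn position embeddings instead. The random table values and the function names are placeholders chosen for illustration, since a real model learns its embedding table during training.

```python
import math
import random


def make_embedding_table(vocab_size, d_model, seed=0):
    """Create a vocab_size x d_model table of small random placeholder values.

    A real model learns these values during training.
    """
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 0.02) for _ in range(d_model)] for _ in range(vocab_size)]


def sinusoidal_position(pos, d_model):
    """Sinusoidal positional encoding:
    PE(pos, 2i) = sin(pos / 10000^(2i/d_model)), PE(pos, 2i+1) = cos(same angle).
    """
    vec = []
    for j in range(d_model):
        i = j // 2  # even/odd dimension pairs share one frequency
        angle = pos / (10000 ** (2 * i / d_model))
        vec.append(math.sin(angle) if j % 2 == 0 else math.cos(angle))
    return vec


def embed(token_ids, table):
    """Look up each token's vector and add the encoding for its position."""
    d_model = len(table[0])
    return [
        [e + p for e, p in zip(table[tok], sinusoidal_position(pos, d_model))]
        for pos, tok in enumerate(token_ids)
    ]


table = make_embedding_table(vocab_size=8, d_model=4)
vectors = embed([1, 2, 4], table)  # 3 tokens -> 3 vectors of length 4
```

Because the positional term differs from one position to the next, the same token ID produces different input vectors at different places in the sequence, which is what gives the attention layers access to word order.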

Self-Attention Mechanism

The core innovation of transformers is the self-attention mechanism. It allows the model to weigh the importance of each token relative to all other tokens in the input sequence. This attention mechanism helps capture contextual information and relationships between words.
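The weighting described above is usually computed as scaled dot-product attention, softmax(QKᵀ / √d_k)·V, where the queries Q, keys K, and values V are linear projections of the token vectors. Below is a single-head sketch in plain Python; the identity projection matrices in the example are an assumption made to keep the numbers easy to follow, whereas real models learn these matrices and run several heads in parallel.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(A, B):
    """Multiply an n x k matrix by a k x m matrix (both as lists of lists)."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def self_attention(X, W_q, W_k, W_v):
    """Single-head scaled dot-product self-attention over token vectors X."""
    Q, K, V = matmul(X, W_q), matmul(X, W_k), matmul(X, W_v)
    d_k = len(K[0])
    K_T = [list(col) for col in zip(*K)]
    scores = matmul(Q, K_T)  # one row of scores per query token
    # Each row of weights says how much that token attends to every token.
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, V), weights


X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three toy token vectors
identity = [[1.0, 0.0], [0.0, 1.0]]       # placeholder projections
output, weights = self_attention(X, identity, identity, identity)
```

In this example the first token's vector overlaps equally with its own vector and the third token's, so it gives those two tokens equal weight and the second token less. Each output row is therefore a context-dependent mix of the value vectors.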

Multiple Layers

Transformers consist of multiple layers (e.g., 12 to 24) of self-attention and feedforward neural networks. Each layer refines the model's understanding of the text by considering different aspects of context.

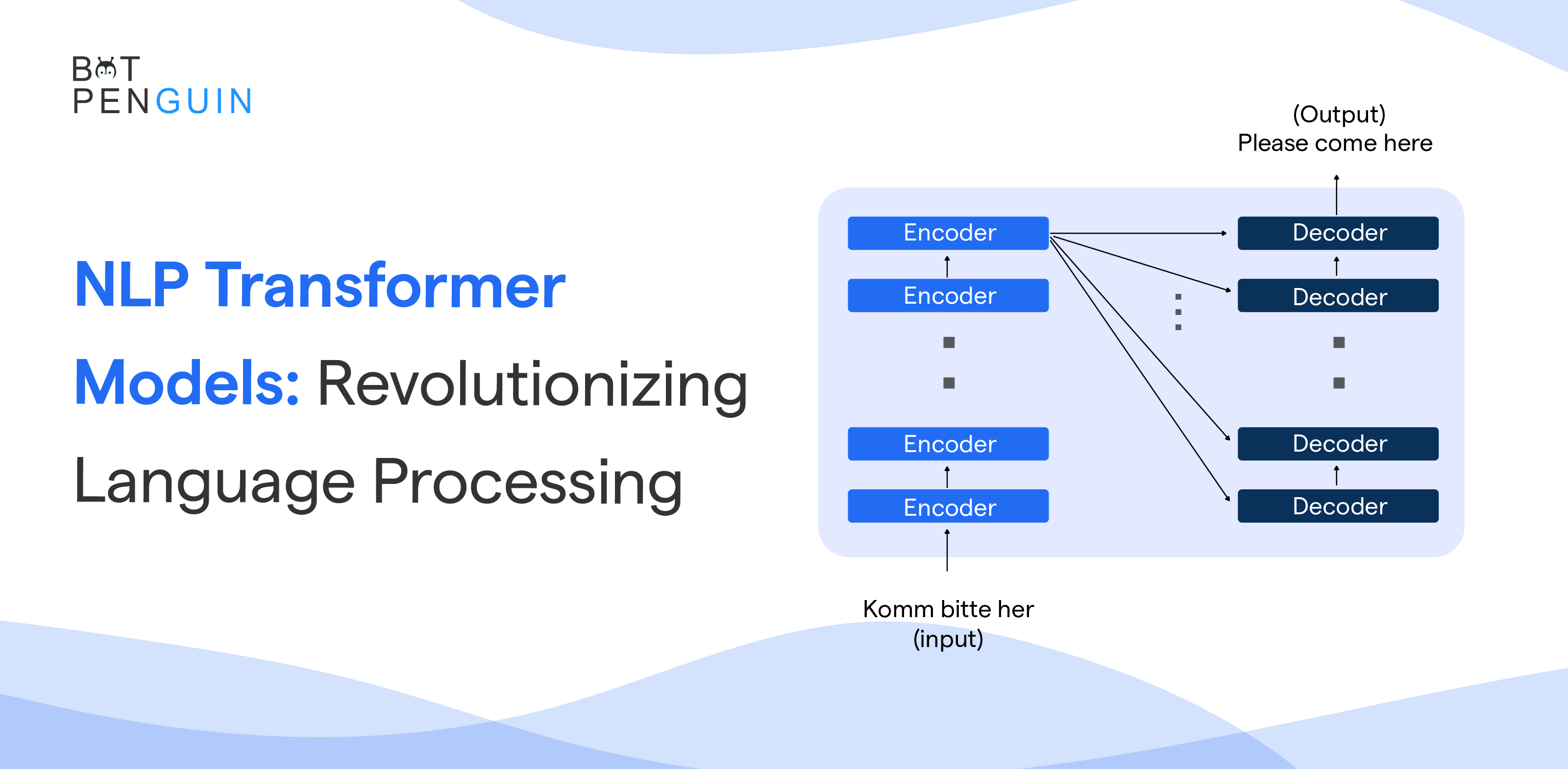
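The sketch below shows how such a stack fits together. Each layer applies self-attention and then a position-wise feedforward network, wrapping both in a residual connection followed by layer normalization (the ordering used in the original Transformer paper). It simplifies heavily: attention uses the token vectors directly as queries, keys, and values with a single head, and the weights from `make_params` are random placeholders rather than trained values. All function names are illustrative.

```python
import math
import random


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(W, v):
    """Multiply an out_dim x in_dim matrix by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def add(a, b):
    return [x + y for x, y in zip(a, b)]


def layer_norm(v, eps=1e-5):
    """Normalize a vector to zero mean and (approximately) unit variance."""
    mean = sum(v) / len(v)
    var = sum((x - mean) ** 2 for x in v) / len(v)
    return [(x - mean) / math.sqrt(var + eps) for x in v]


def self_attention(X):
    # Simplification: X is used directly as queries, keys, and values;
    # real layers apply learned projections and use several heads.
    d = len(X[0])
    out = []
    for q in X:
        w = softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in X])
        out.append([sum(wi * x[j] for wi, x in zip(w, X)) for j in range(d)])
    return out


def feed_forward(x, W1, W2):
    """Position-wise feedforward network: expand, apply ReLU, project back."""
    hidden = [max(0.0, h) for h in matvec(W1, x)]
    return matvec(W2, hidden)


def encoder_layer(X, W1, W2):
    # Sublayer 1: self-attention, residual connection, layer norm.
    X = [layer_norm(add(x, a)) for x, a in zip(X, self_attention(X))]
    # Sublayer 2: feedforward network, residual connection, layer norm.
    return [layer_norm(add(x, feed_forward(x, W1, W2))) for x in X]


def make_params(num_layers, d_model, d_ff, seed=0):
    """Random placeholder weights, one (W1, W2) pair per layer."""
    rng = random.Random(seed)

    def rand(rows, cols):
        return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]

    return [(rand(d_ff, d_model), rand(d_model, d_ff)) for _ in range(num_layers)]


def encoder(X, params):
    """Pass the token vectors through each layer in turn."""
    for W1, W2 in params:
        X = encoder_layer(X, W1, W2)
    return X


X = [[1.0, 0.0, 0.5, -0.5], [0.2, 0.3, -0.1, 0.4], [0.0, 1.0, 1.0, 0.0]]
params = make_params(num_layers=12, d_model=4, d_ff=16)
out = encoder(X, params)
```

Every layer keeps the shape of its input (one vector per token), which is what allows identical layers to be stacked as many times as the model design calls for.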

Encoder and Decoder (Optional)

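In some cases, transformers use an encoder-decoder architecture: the encoder processes the input text and the decoder generates the output, which makes this design well suited to tasks like translation. The original Transformer used both parts, whereas BERT uses only the encoder and GPT uses only the decoder.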
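A decoder differs from an encoder in two ways that can be sketched with a single attention function. First, its self-attention is causal: each position may attend only to itself and earlier positions, so generation cannot look ahead. Second, in an encoder-decoder model it adds cross-attention, where queries come from the decoder and keys and values come from the encoder's output. The sketch below assumes already-encoded toy vectors and omits learned projections, residual connections, and feedforward layers for brevity.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q, K, V, causal=False):
    """Scaled dot-product attention over lists of vectors.

    If causal is True, query i may only attend to keys 0..i.
    """
    d_k = len(K[0])
    outputs, all_weights = [], []
    for i, q in enumerate(Q):
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        if causal:
            # Mask future positions with -inf so softmax gives them zero weight.
            scores = [s if j <= i else float("-inf") for j, s in enumerate(scores)]
        w = softmax(scores)
        all_weights.append(w)
        outputs.append([sum(wj * v[c] for wj, v in zip(w, V)) for c in range(len(V[0]))])
    return outputs, all_weights


encoder_out = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # 3 encoded source tokens
decoder_in = [[0.5, 0.5], [1.0, -1.0]]              # 2 target tokens so far

# Causal self-attention over the target tokens generated so far.
dec_self, self_w = attention(decoder_in, decoder_in, decoder_in, causal=True)
# Cross-attention: decoder queries attend over every encoder output.
cross, cross_w = attention(dec_self, encoder_out, encoder_out)
```

The first target token's attention weight on the second target token is exactly zero because of the mask, while cross-attention lets every target position look at all three source positions.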

Use Cases of NLP Transformer Models

NLP transformer models have many use cases across various industries and applications. Some prominent use cases include:

Sentiment Analysis

Analyzing customer reviews and social media posts to determine sentiment and gather business insights.

Text Classification

Categorizing text data into predefined classes, such as spam detection, topic classification, and sentiment classification.

Machine Translation

Translating text from one language to another, facilitating communication across linguistic barriers.

Question-Answering Systems

Building intelligent chatbots and virtual assistants capable of answering user questions and providing information.

Named Entity Recognition (NER)

Identifying and classifying entities such as names, dates, and locations in text, a common step in information extraction.

Text Summarization

Automatically generating concise summaries of long texts or articles, aiding content curation and information retrieval.

Impact of NLP Transformer Models on the Industry

NLP transformer models have profoundly impacted various industries, revolutionizing how businesses operate and interact with data. Some of their key impacts include:

Enhanced Customer Experience

Customer service chatbots and virtual assistants powered by NLP transformers provide quick and accurate responses, improving user satisfaction and engagement.

Efficient Data Processing

NLP models automate text analysis tasks, enabling organizations to process and extract insights from large volumes of unstructured data more efficiently.

Personalized Marketing

NLP models help businesses understand customer preferences and behaviors, enabling personalized marketing campaigns that can achieve higher conversion rates.

Improved Healthcare

NLP models assist in medical record analysis, disease detection, and drug discovery, which can support more accurate diagnoses and better patient care.

Efficient Content Creation

NLP transformers aid content creators by automating content generation, suggesting improvements, and streamlining the content creation process.

Conclusion

The Transformer is a powerful deep learning architecture that is transforming natural language processing. Its capacity to handle long-range dependencies in text data makes it well suited to a wide range of NLP applications.

The tokenizer, embedding, positional encoding, Transformer block, attention, feedforward, and softmax components all work together, allowing a generative model such as GPT to predict the next word in a text sequence.
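The final softmax step can be sketched in a few lines: the hidden vector for the last position is projected to one score (logit) per vocabulary entry, and softmax turns those scores into next-token probabilities. The five-word vocabulary, the 2-dimensional hidden state, and the output matrix below are hand-picked hypothetical values, not taken from any real model.

```python
import math

VOCAB = ["the", "cat", "sat", "on", "mat"]  # hypothetical toy vocabulary


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def next_token_distribution(hidden, W_out):
    """Project the last position's hidden state to one logit per vocabulary
    entry, then normalize with softmax to get next-token probabilities."""
    logits = [sum(w * h for w, h in zip(row, hidden)) for row in W_out]
    return softmax(logits)


hidden = [1.0, 0.5]  # illustrative final hidden state
W_out = [            # one row per vocabulary entry
    [0.1, 0.0],      # "the"
    [0.2, 0.1],      # "cat"
    [1.5, 1.0],      # "sat"
    [0.3, -0.2],     # "on"
    [0.0, 0.4],      # "mat"
]
probs = next_token_distribution(hidden, W_out)
# Greedy decoding: pick the single most probable token.
predicted = VOCAB[max(range(len(probs)), key=probs.__getitem__)]
```

Here "sat" has the largest logit, so greedy decoding selects it. Real systems often sample from `probs` instead of always taking the maximum, which trades some predictability for more varied text.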

It is expected to remain at the forefront of NLP for years as deep learning research advances.

Frequently Asked Questions (FAQs)

1. What is an NLP transformer model?

An NLP transformer model is a neural network-based architecture that can process natural language. Its main feature is self-attention, which allows it to capture contextual relationships between words and phrases, making it a powerful tool for language processing.

2. How do transformer models differ from other NLP tools and techniques?

Transformer models use an attention mechanism to process entire sentences or documents at once, whereas earlier techniques such as RNNs process text sequentially, one token at a time. This parallelism makes Transformers more efficient to train and better at capturing relationships between distant words.

3. What are the benefits of transformer models in NLP?

Transformer models offer higher accuracy, the ability to learn from larger volumes of data, automatic feature extraction, and contextual understanding of language, making them well suited to a wide range of NLP tasks.

4. What are some real-world applications of transformer models in NLP?

Transformer models have been successfully used in machine translation, named entity recognition, sentiment analysis, and speech-to-text transcription, among others.

5. What is GPT-3, and how does it represent the state of the art in transformer models?

GPT-3 is one of the largest and most advanced transformer models developed to date. It comprises 175 billion parameters and delivers impressive results in various language processing tasks, underscoring the potential of transformer models in NLP.
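The 175 billion figure can be roughly checked with a standard back-of-the-envelope estimate. Each layer has four d_model × d_model attention matrices (query, key, value, and output) and a feedforward network that expands to 4 × d_model and back, for about 12 × d_model² parameters per layer. The inputs below are GPT-3's published configuration (96 layers, model width 12,288, and the 50,257-token GPT-2 byte-pair vocabulary); the estimate deliberately ignores biases, layer norms, and position embeddings, so it is an approximation rather than an exact count.

```python
def approx_transformer_params(n_layers, d_model, vocab_size):
    """Rough parameter count for a decoder-only Transformer, ignoring biases,
    layer norms, and position embeddings."""
    attention = 4 * d_model ** 2      # Q, K, V, and output projections
    feed_forward = 8 * d_model ** 2   # d_model -> 4*d_model -> d_model
    token_embeddings = vocab_size * d_model
    return n_layers * (attention + feed_forward) + token_embeddings


total = approx_transformer_params(n_layers=96, d_model=12288, vocab_size=50257)
print(f"{total / 1e9:.1f} billion")  # prints "174.6 billion"
```

Nearly all of the parameters sit in the 96 repeated layers; the token embedding table contributes only about 0.6 billion.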

6. Are there ethical concerns with using transformer models in NLP?

There are ethical concerns surrounding the use of transformer models, given their ability to generate highly convincing fake content or perpetuate biases found in the training data. It is crucial to develop safeguards that ensure the responsible use of transformer models in NLP.