Introduction

Transformers are a class of neural networks that use an attention mechanism to analyze the relationships between words, or tokens. Unlike earlier models, they can capture the context and complexity of language.

According to PapersWithCode, 90% of AI projects used transformers in 2022, up from just 5% in 2019. GPT-3, one of the most popular transformer models, has 175 billion parameters, roughly twice the 86 billion neurons in the human brain, although parameter counts and neuron counts are not directly comparable measures of intelligence.

Transformers can digest massive datasets during training, extracting nuanced patterns and correlations. The resulting models, such as GPT-3, generate remarkably human-like text and can even pick up a new task from just a few examples in the prompt, a breakthrough called few-shot learning. With nothing more than a text prompt, they can compose songs, explain quantum physics, or recommend films on Netflix.

Transformers are triggering an AI renaissance, so understanding how the transformer model works will serve you well in the long run. Read on to learn more about transformer models.

Introduction to Transformer Models

Transformer Models are a class of neural network architectures that revolutionized natural language processing (NLP) and beyond. Unlike traditional recurrent neural networks (RNNs), these models use attention mechanisms to process sequences of data more efficiently.

The Evolution from RNNs to Transformers

Before Transformers entered the scene, RNNs were the go-to choice for sequence tasks. However, they struggled with long-term dependencies, and because they process tokens one at a time, they were slow and computationally expensive to train. Transformers, with their self-attention mechanism, addressed these limitations.

The Magic Behind Transformer Architecture

The key components of Transformer Models include the self-attention mechanism, the encoder-decoder structure, and feedforward neural networks.

The self-attention mechanism allows the model to weigh the significance of different words in a sentence. Meanwhile, the encoder-decoder structure enables tasks like machine translation, and the feedforward networks further transform each token's representation on its own.


Why are Transformer Models Revolutionary?

Here's an overview of why Transformer models are considered groundbreaking today.

Unleashing Parallelization and Scalability

The self-attention mechanism processes every token of a sequence at once instead of one at a time, so Transformer training can be parallelized across hardware such as GPUs. This makes training fast and efficient.

This scalability is a game-changer, as it enables training massive models on huge datasets without draining your patience.
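To see why recurrence blocks parallelism while attention does not, here is a toy sketch in plain Python. It is an illustration only, assuming scalar "tokens" and hypothetical function names and weights (`rnn_states`, `attention_output`, `w_h`, `w_x`); real models use vectors, learned projections, and GPU kernels.

```python
import math

def rnn_states(xs, w_h=0.5, w_x=1.0):
    """Toy scalar RNN: state t needs state t-1, so the loop must run in order."""
    h = 0.0
    states = []
    for x in xs:
        h = math.tanh(w_h * h + w_x * x)
        states.append(h)
    return states

def attention_output(xs, i):
    """Toy scalar self-attention for position i: it reads only the inputs,
    never another position's output, so all positions can be computed at once."""
    scores = [xs[i] * xj for xj in xs]
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return sum(e / total * xj for e, xj in zip(exps, xs))

xs = [0.5, -1.0, 2.0, 0.25]
sequential = rnn_states(xs)
# Each call below is independent of the others, so on real hardware they could run in parallel
parallel = [attention_output(xs, i) for i in range(len(xs))]
```

The RNN loop carries `h` from one step to the next, while each attention output depends only on `xs`, which is what lets Transformers spread the work across many processors.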

Conquering Long-Term Dependencies

Remember the days when RNNs struggled with long sentences, and their memory faded like photos in an old album? Well, fret no more! Thanks to self-attention, every word can look directly at every other word, so Transformer Models ace long-term dependencies and capture relationships between words across extensive contexts.

Who Uses Transformer Models?

Transformer models are used, directly and indirectly, across research, technology, business, and consumer domains because of their ability to understand language.

Adoption is accelerating as capabilities improve and access expands through APIs and apps. The transformer revolution touches virtually every field involving language or text data.

Industries and Applications

Transformer Models have found their way into diverse industries, from healthcare and finance to entertainment and education. Their versatility and adaptability make them a go-to solution for various AI-driven applications.

Success Stories and Use Cases

Some use cases include:

- Natural Language Processing (NLP) Redefined: GPT-3, one of the most famous Transformer Models, has astonished the world with its language generation capabilities, powering chatbots, content creation, and even programming assistance.

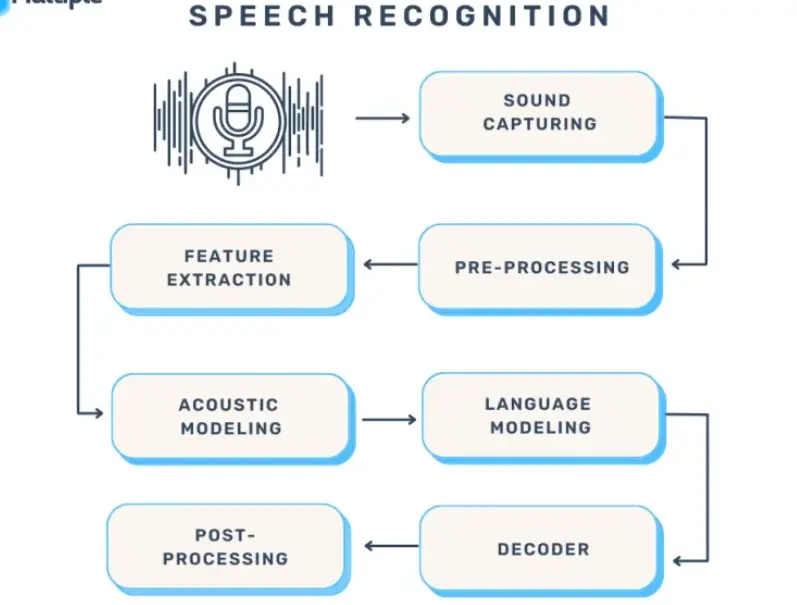

- Speech Recognition: Transformers have left their mark on speech recognition systems, significantly improving the accuracy and fluency of voice assistants and making interactions feel more human-like.

- Image Generation and Recognition: Transformers are not limited to language tasks; they have also made a splash in computer vision, where they generate lifelike images and achieve top-notch object recognition.

NLP Transformer Models: Revolutionizing Language Processing

Natural language processing (NLP) has been transformed by the advent of transformer models. By analyzing semantic relationships between words and global contexts within sentences or documents, transformers achieve state-of-the-art results across NLP tasks.

Decoding the Magic of NLP Transformer Models

NLP Transformer Models, like the famous BERT, GPT, and T5, have earned their stripes by grasping the meaning and context of words, thanks to their revolutionary self-attention mechanism.

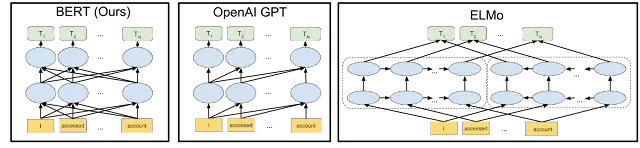

From BERT to GPT: Unraveling Popular NLP Transformer Architectures

Meet the masters of NLP Transformer Models:

- BERT (Bidirectional Encoder Representations from Transformers): This bidirectional wonder can understand the whole context of a sentence, making it ideal for tasks like sentiment analysis and named entity recognition.

- GPT (Generative Pre-trained Transformer): GPT can generate coherent and human-like text, making it a powerful tool for chatbots and language generation tasks.

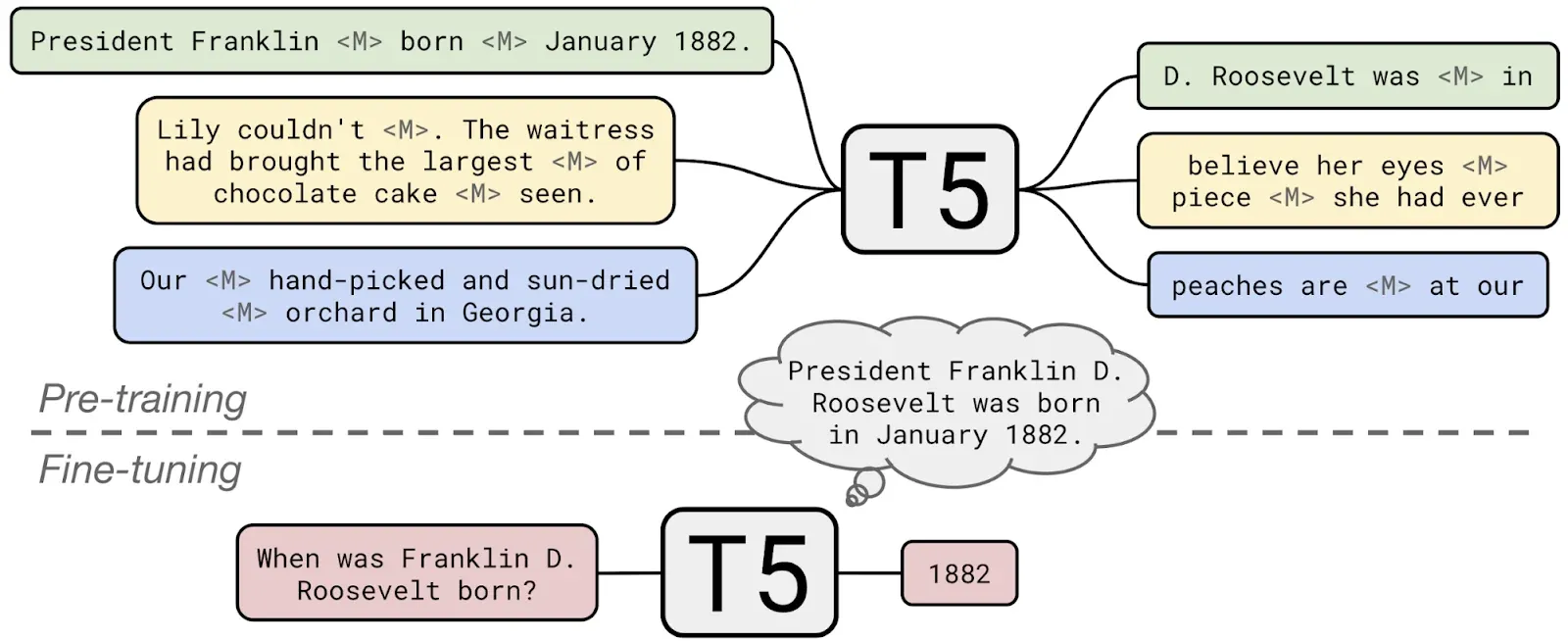

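- T5 (Text-to-Text Transfer Transformer): This versatile maestro is a jack-of-all-trades that casts every NLP task into a text-to-text format, for example feeding in "translate English to German: ..." and reading the translation back out. Using one format for every task simplifies the AI pipeline and lets a single model handle many tasks.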
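A key structural difference between BERT and GPT is which tokens each position is allowed to attend to. As a minimal sketch in plain Python (the function names are hypothetical, not from any library), the two attention masks look like this, where `True` means "may attend":

```python
def bidirectional_mask(n):
    """BERT-style encoder: every token may attend to every token, left and right."""
    return [[True] * n for _ in range(n)]

def causal_mask(n):
    """GPT-style decoder: token i may attend only to itself and earlier tokens."""
    return [[j <= i for j in range(n)] for i in range(n)]

for row in causal_mask(4):
    print("".join("x" if allowed else "." for allowed in row))
# x...
# xx..
# xxx.
# xxxx
```

Because GPT never sees future tokens, it can be trained to predict the next token, which makes it a natural text generator; BERT's full view of the sentence suits understanding tasks such as sentiment analysis.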

When to Use Transformer Models?

Here are some guidelines on when to use transformer models versus other machine-learning approaches:

Transformers for Complex Tasks

If you're dealing with language-dependent tasks, like language translation, sentiment analysis, or text summarization, Transformer Models are usually the right choice.

They ace these tasks by understanding the contextual relationships between words, something traditional models often struggle with.

Handling Large and Diverse Datasets

Does your dataset span the size of the entire internet? Fear not, Transformers can handle large datasets with finesse. Their scalability and parallelization prowess allow you to process massive datasets without the usual hair-pulling and desk-banging.

Solving Tasks with Long-Term Dependencies

Remember those long sentences that sent traditional models running for the hills? Well, Transformers have no fear! They're champions at capturing long-term dependencies, making them the perfect choice for tasks involving lengthy texts.

Customizing for Your Unique Needs

With a wide range of pre-trained Transformer Models available, you can choose the one that best fits your needs and fine-tune it for your specific task. It's like having a personal AI tailor at your service!

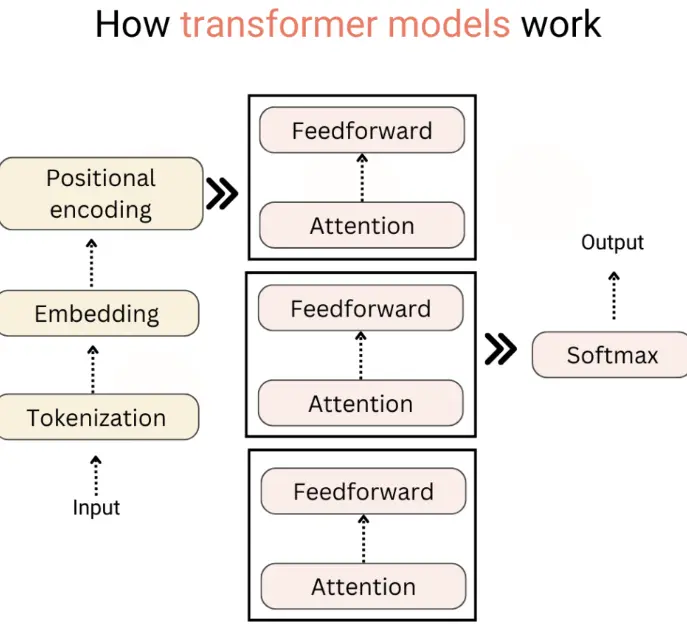

How do Transformer Models Work?

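Here is a quick overview of how transformer models work. The original architecture has both an encoder and a decoder; models like BERT use only the encoder stack, while GPT-3 uses only the decoder stack: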

- Based on an attention mechanism rather than recurrence or convolutions used in older models.

- Contains an encoder and a decoder. The encoder reads the input and generates representations; the decoder uses these representations to predict the output.

- The encoder contains stacked self-attention layers. Self-attention lets the model look at the other words in the input sentence as context.

- The decoder contains masked self-attention plus encoder-decoder attention layers. This lets it focus on relevant parts of the input while generating output.

- Position embeddings encode order information since there is no recurrence.

- Residual connections and layer normalization are used to ease training.

- Trained on massive datasets to build general language representations.

- Fine-tuning adapts the pre-trained model to specific downstream tasks.
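To make the list above concrete, here is a minimal, dependency-free sketch of one encoder layer in plain Python: self-attention, a residual connection with layer normalization, then a ReLU feedforward network with another residual connection and normalization (the "post-norm" order of the original Transformer). It rests on simplifying assumptions: weight matrices are passed in by hand, and biases, multiple heads, dropout, positional encodings, and the learned scale and shift of layer normalization are all omitted. All function names are hypothetical.

```python
import math

def matmul(a, b):
    """Multiply matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(m):
    return [list(col) for col in zip(*m)]

def softmax(v):
    top = max(v)  # subtract the max for numerical stability
    exps = [math.exp(x - top) for x in v]
    total = sum(exps)
    return [e / total for e in exps]

def layer_norm(v, eps=1e-5):
    """Normalize one token vector to zero mean and unit variance (no learned gain/bias)."""
    mean = sum(v) / len(v)
    var = sum((x - mean) ** 2 for x in v) / len(v)
    return [(x - mean) / math.sqrt(var + eps) for x in v]

def add_and_norm(x, sublayer_out):
    """Residual connection followed by layer normalization, applied per token."""
    return [layer_norm([a + b for a, b in zip(xr, sr)]) for xr, sr in zip(x, sublayer_out)]

def self_attention(x, wq, wk, wv):
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    d_k = len(q[0])
    scores = matmul(q, transpose(k))  # one row of scores per query token
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v)

def feed_forward(x, w1, w2):
    # ReLU hidden layer, applied to each token independently
    hidden = [[max(0.0, h) for h in row] for row in matmul(x, w1)]
    return matmul(hidden, w2)

def encoder_layer(x, p):
    x = add_and_norm(x, self_attention(x, p["wq"], p["wk"], p["wv"]))
    return add_and_norm(x, feed_forward(x, p["w1"], p["w2"]))

# Usage: three tokens with d_model = 2 and identity weights (illustrative values only)
identity = [[1.0, 0.0], [0.0, 1.0]]
params = {name: identity for name in ("wq", "wk", "wv", "w1", "w2")}
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out = encoder_layer(tokens, params)
print(len(out), len(out[0]))  # 3 2
```

The output has the same shape as the input, which is what allows real models to stack many such layers on top of each other.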

Deep Dive into the Self-Attention Mechanism

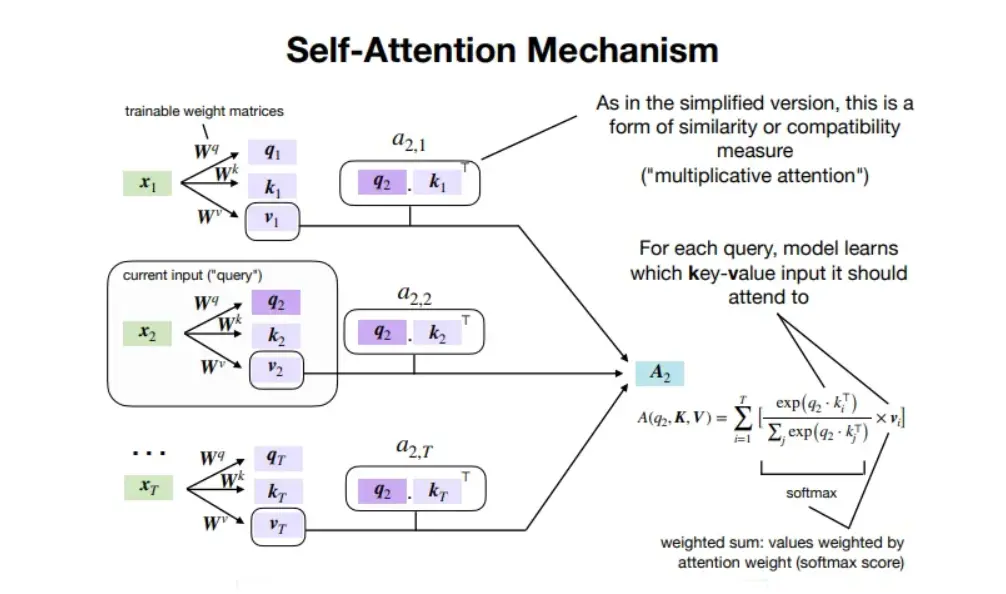

Self-attention relates all positions to every other position using queries, keys, and values to build context-rich representations of the sequence.
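In matrix form, this is the scaled dot-product attention defined in the original Transformer paper, "Attention Is All You Need" (Vaswani et al., 2017). Here $X$ holds one row per token, $W^Q$, $W^K$, and $W^V$ are learned projection matrices, and $d_k$ is the dimension of the keys:

$$
Q = XW^Q,\qquad K = XW^K,\qquad V = XW^V,\qquad
\operatorname{Attention}(Q, K, V) = \operatorname{softmax}\!\left(\frac{QK^{\top}}{\sqrt{d_k}}\right) V
$$

Dividing by $\sqrt{d_k}$ keeps the dot products from growing with the dimension, which would otherwise push the softmax toward nearly one-hot weights.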

Attention Scores and Softmax Sorcery

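The self-attention mechanism calculates attention scores that decide which words should receive more focus in a given context. Each score compares one word's query with another word's key, and the softmax function then works its magic by turning the scores into weights that are positive and sum to 1.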
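As a small plain-Python sketch (the function names and the example vectors are hypothetical), here is how one query's scores against three keys become attention weights, using the scaled dot product from the original Transformer:

```python
import math

def softmax(scores):
    """Convert raw attention scores into weights that are positive and sum to 1."""
    top = max(scores)  # subtracting the max avoids overflow and does not change the result
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(query, keys):
    """Scaled dot-product scores of one query against every key, passed through softmax."""
    d_k = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d_k) for key in keys]
    return softmax(scores)

weights = attention_weights([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
print([round(w, 3) for w in weights])  # [0.401, 0.198, 0.401]
```

The two keys that overlap with the query get equal, larger weights, while the unrelated key still receives a small share, since softmax never assigns exactly zero.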

Multi-Head Attention

Multi-head attention runs several attention operations, or heads, side by side, so each head can look at the input from a different perspective. Combining the heads adds depth to the model's understanding of language.
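Here is a minimal plain-Python sketch of the idea, under a loud simplifying assumption: real models give each head its own learned query, key, and value projections and mix the concatenated heads with a learned output projection, but here each head simply attends over its own slice of the embedding dimensions. The function names and token values are hypothetical.

```python
import math

def softmax(v):
    top = max(v)
    exps = [math.exp(x - top) for x in v]
    total = sum(exps)
    return [e / total for e in exps]

def attend(q, k, v):
    """Scaled dot-product attention over lists of vectors (one vector per token)."""
    d_k = len(q[0])
    out = []
    for qi in q:
        w = softmax([sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d_k) for kj in k])
        out.append([sum(wj * vj[c] for wj, vj in zip(w, v)) for c in range(len(v[0]))])
    return out

def multi_head_self_attention(x, num_heads):
    d_model = len(x[0])
    if d_model % num_heads:
        raise ValueError("d_model must be divisible by num_heads")
    d_head = d_model // num_heads
    heads = []
    for h in range(num_heads):
        # Simplification: each head sees its own slice of dimensions instead of learned projections
        part = [row[h * d_head:(h + 1) * d_head] for row in x]
        heads.append(attend(part, part, part))
    # Concatenate the heads' outputs for each token (a real model would then apply an output projection)
    return [[val for head in heads for val in head[i]] for i in range(len(x))]

tokens = [[1.0, 0.0, 0.5, 0.5], [0.0, 1.0, 0.5, -0.5], [1.0, 1.0, 0.0, 1.0]]
out = multi_head_self_attention(tokens, num_heads=2)
print(len(out), len(out[0]))  # 3 4
```

Because each head computes its own attention weights, one head can focus on different token relationships than another.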

Challenges and Limitations of Transformer Models

Here are some key challenges and limitations of transformer models:

High Computational and Memory Requirements

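Transformers demand hefty computational power and memory, especially with large models. Because every token attends to every other token, the cost of self-attention grows quadratically with sequence length.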
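A quick back-of-the-envelope calculation shows where that quadratic cost comes from. The sketch assumes a naive implementation that stores the full attention-score matrix, 16 heads, 2-byte (16-bit) values, and a batch of one sequence; these are illustrative assumptions, not figures from any particular model.

```python
def attention_matrix_bytes(seq_len, num_heads, bytes_per_value=2):
    """Memory for one layer's attention scores: a seq_len x seq_len matrix per head."""
    return seq_len * seq_len * num_heads * bytes_per_value

for n in (1_024, 2_048, 4_096):
    mib = attention_matrix_bytes(n, num_heads=16) / 2**20
    print(f"{n} tokens: {mib:.0f} MiB per layer")
# 1024 tokens: 32 MiB per layer
# 2048 tokens: 128 MiB per layer
# 4096 tokens: 512 MiB per layer
```

Doubling the sequence length quadruples this memory, and a large model repeats it in every layer.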

Overfitting and Generalization Woes

Transformers must brave the perils of overfitting. Striking the right balance between model complexity and data size is key to good generalization.

Mitigation Techniques and Ongoing Research

No challenge is too big for the AI community. There are many research-backed strategies and mitigation techniques that AI researchers are using to tame these challenges and propel the field forward.

Transformer Models in Deep Learning: Advancements & Applications

Here is an overview of transformer model advancements and applications in deep learning:

Key Innovations

The key innovations are the following:

- Self-attention mechanism relating all positions

- Positional encodings (learned or fixed) that inject word-order information

- Encoder-decoder structure

- Multi-head attention for multiple representations
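Order information can come from learned position embeddings, as in BERT and GPT, or from the fixed sinusoidal encodings used in the original Transformer paper. Here is a minimal plain-Python sketch of the sinusoidal variant (the function name is hypothetical); each resulting vector is added to the token embedding at that position.

```python
import math

def sinusoidal_positional_encoding(num_positions, d_model):
    """Even dimensions use sin, odd dimensions use cos, with wavelengths
    that grow geometrically across dimension pairs (base 10000)."""
    pe = []
    for pos in range(num_positions):
        row = []
        for i in range(d_model):
            angle = pos / (10000 ** (2 * (i // 2) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        pe.append(row)
    return pe

pe = sinusoidal_positional_encoding(num_positions=3, d_model=4)
print(pe[0])  # [0.0, 1.0, 0.0, 1.0]
```

Because every position gets a distinct pattern, the model can tell "dog bites man" from "man bites dog" even though attention itself ignores order.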

Notable Models

The notable models are the following:

- BERT (2018) - Bidirectional encoder, powerful language model

- GPT-2 (2019) - Unidirectional decoder model for text generation

- GPT-3 (2020) - Scaled-up version of GPT-2 with 175 billion parameters
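The 175-billion figure can be sanity-checked with a common rule of thumb: each transformer layer holds roughly 12 × d_model² weights, about 4 × d_model² in the attention projections and 8 × d_model² in a feedforward block whose hidden layer is four times wider. The sketch below assumes that rule and the GPT-3 175B configuration reported in the GPT-3 paper (96 layers, d_model = 12,288); it ignores embeddings, biases, and layer-norm parameters, and the function name is hypothetical.

```python
def approx_transformer_params(n_layers, d_model):
    """Rule-of-thumb weight count for a stack of transformer layers:
    4*d^2 (query, key, value, output projections) + 8*d^2 (feedforward, 4x hidden size) per layer.
    Embeddings, biases and layer-norm parameters are ignored."""
    return 12 * n_layers * d_model ** 2

estimate = approx_transformer_params(n_layers=96, d_model=12_288)
print(f"{estimate / 1e9:.1f} billion")  # 173.9 billion
```

The estimate lands within about 1% of the reported 175 billion.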

Applications

Applications of transformer models in deep learning include:

- Natural language processing tasks such as translation and summarization

- Conversational AI - chatbots, virtual assistants

- Text and code generation - articles, essays, software

- Computer vision - image captioning, classification

- Drug discovery, healthcare, and more

Future Outlook

The future outlook for transformer models in deep learning includes:

- Larger, more capable foundation models

- Increased multimodal and multi-task capabilities

- Addressing bias, safety, and alignment challenges

- Making models more interpretable and explainable

Conclusion

Transformer models have captivated imaginations with their ability to analyze, generate, and reason about language at an unprecedented level.

What began as an AI architecture tailored for natural language processing now promises to reshape education, healthcare, business, the arts, and fields we can scarcely imagine.

Their prowess stretches from chatbots conversing naturally to models identifying promising new molecules and materials.

BotPenguin is a chatbot development platform that uses transformer models to power its chatbots. BotPenguin also offers several features that make it easy to build and deploy chatbots using transformer models. These features include a drag-and-drop chatbot builder, a pre-trained model, a deployment dashboard, etc.

Using BotPenguin, you can quickly and easily build chatbots that are powered by transformer models. Challenges around efficiency, ethics, and transparency remain, but the momentum behind transformers is undeniable.

Frequently Asked Questions (FAQs)

What are Transformer Models and how do they differ from traditional neural networks?

Transformer Models are advanced neural network architectures for sequential data, like text. Unlike traditional RNNs, Transformers use self-attention for parallel processing, capturing long-range dependencies efficiently.

How does the self-attention mechanism work in Transformer Models?

The self-attention mechanism calculates attention scores for each word based on its relation to other words in the sequence, allowing the model to focus on important contexts.

What is the role of the encoder-decoder structure in Transformer Models?

The encoder processes input and generates contextual embeddings. The decoder uses these embeddings to generate the output sequence, ideal for tasks like machine translation.

How do Transformer Models handle variable-length sequences?

Shorter sequences are padded with a special token so that every sequence in a batch has the same length, and an attention mask prevents the model from attending to the padded positions.
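A minimal plain-Python sketch of both steps, assuming made-up token IDs, a padding ID of 0, and hypothetical function names: padding builds a mask of real (1) versus padded (0) positions, and the mask sets padded scores to negative infinity before softmax so they receive zero attention weight.

```python
import math

def pad_batch(sequences, pad_id=0):
    """Pad token-ID lists to a common length and build a mask (1 = real token, 0 = padding)."""
    max_len = max(len(s) for s in sequences)
    padded = [s + [pad_id] * (max_len - len(s)) for s in sequences]
    mask = [[1] * len(s) + [0] * (max_len - len(s)) for s in sequences]
    return padded, mask

def masked_softmax(scores, mask):
    """Softmax that gives zero weight to masked (padded) positions."""
    masked = [s if m else float("-inf") for s, m in zip(scores, mask)]
    top = max(masked)
    exps = [math.exp(s - top) for s in masked]  # math.exp(-inf) == 0.0
    total = sum(exps)
    return [e / total for e in exps]

padded, mask = pad_batch([[5, 8, 2], [5, 2]])
print(padded)  # [[5, 8, 2], [5, 2, 0]]
print(mask)    # [[1, 1, 1], [1, 1, 0]]
print(masked_softmax([1.0, 1.0, 5.0], mask[1]))  # [0.5, 0.5, 0.0]
```

Even though the padded position has the highest raw score here, the mask guarantees it contributes nothing.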

What challenges do Transformer Models face in terms of computational requirements?

Large models demand significant computational power and memory. The self-attention mechanism's cost grows quadratically with sequence length, a challenge that ongoing research is addressing.