Most businesses use generic AI to handle tasks like customer service or data analysis. But what if that’s the problem, not the solution? A one-size-fits-all LLM can’t capture the unique needs of your business or industry.

If you want real results, you need a custom LLM. Generic models may be good for broad applications, but they can’t understand your company’s specific language, culture, or processes. That’s where LLM customization comes in.

Why settle for a generic model when you can build something that works exactly for your needs? Custom large language models are the key to improving customer service, automating complex workflows, and handling specialized tasks.

Whether it’s creating a custom LLM for an HR helpdesk chatbot or automating legal document processing, the opportunities are endless.

It's not just about using AI—it’s about using customized large language models that match your needs.

Business Use Cases for Custom LLMs

The potential applications of customized large language models are vast, especially when it comes to customer-facing and backend processes.

For example, LLM customization is revolutionizing customer support, enabling companies to deploy AI-powered chatbots and virtual assistants that handle queries with high efficiency and a personal touch.

These custom LLMs can understand specialized industry language, making them more adept at answering complex questions than their general-purpose counterparts.

In backend operations, custom LLMs excel in tasks like document analysis, extracting critical data, classifying information, and automating decision-making. Custom LLMs in these areas can cut down operational costs and improve accuracy.

Investing in LLM customization has become a strategic necessity for businesses looking to streamline workflows.

Let's understand the steps to develop your own custom LLM for business applications.

Step 1

Define Your Business Needs

A custom LLM can solve many problems, but you need to understand what exactly is slowing your business down. Look for tasks that are repetitive, time-consuming, or prone to human error.

Identifying these pain points will help you determine where your custom LLM can have the most impact.

What Tasks Will the LLM Perform?

Next, clarify what duties your custom LLM will take on. Will it assist in answering FAQs, generating reports, or managing chatbot interactions?

Defining the scope early on is critical to avoid scope creep and ensure you’re building a model that meets specific needs.

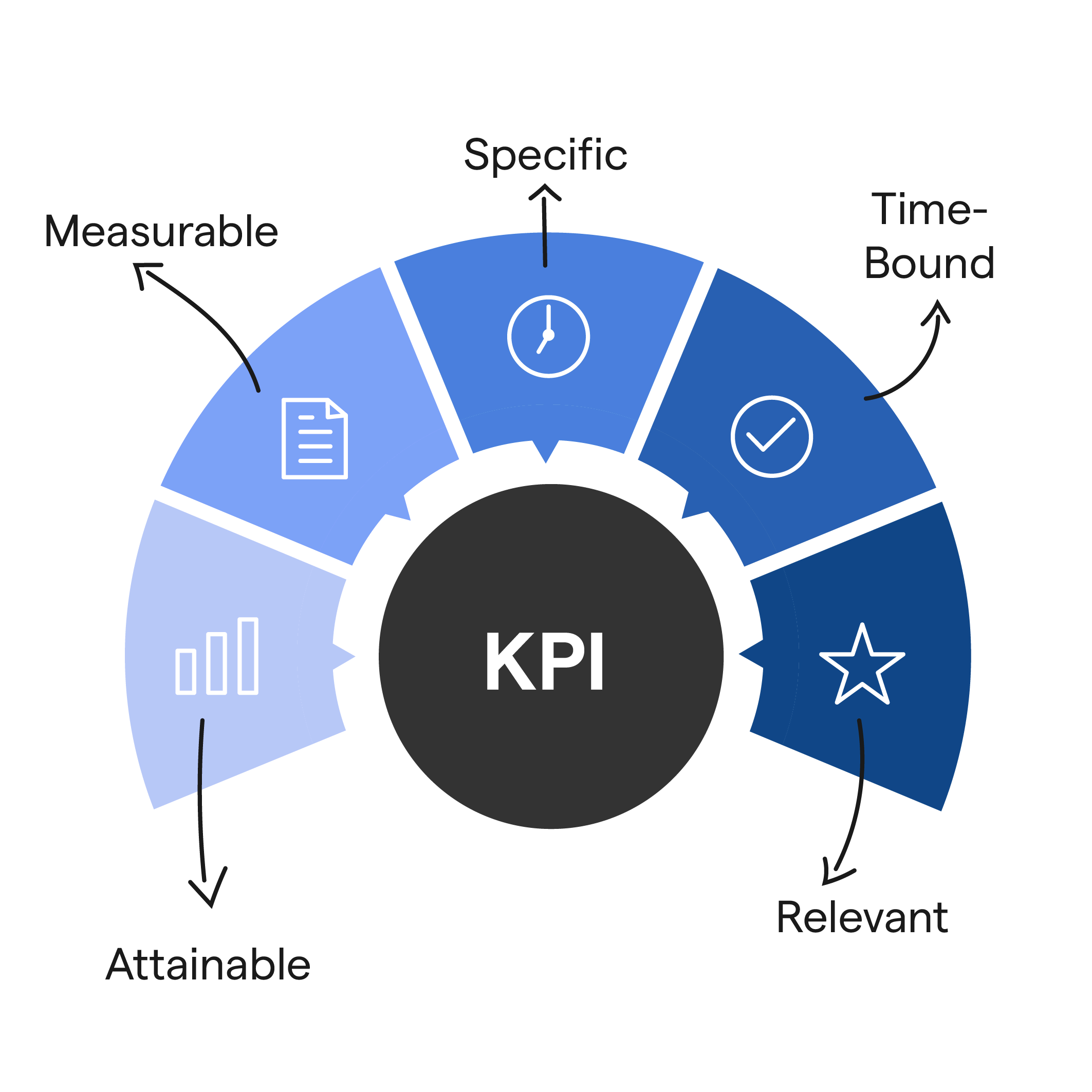

Setting Clear Goals and KPIs

Without clear goals, it’s hard to measure success. Define what success looks like for your custom LLM. Is it a reduction in response time? Improved customer satisfaction?

Make sure your goals are measurable and set key performance indicators (KPIs) accordingly.
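To make this concrete, here is a minimal sketch of how measurable KPIs could be written down and checked. The KPI names, baselines, and targets are hypothetical placeholders, not recommended values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Kpi:
    """One measurable goal for the custom LLM (all values are illustrative)."""
    name: str
    baseline: float
    target: float
    higher_is_better: bool = True

    def is_met(self, measured: float) -> bool:
        # A KPI is met when the measured value reaches the target
        # in the direction that counts as an improvement.
        if self.higher_is_better:
            return measured >= self.target
        return measured <= self.target


# Hypothetical goals for a customer service chatbot.
kpis = [
    Kpi("avg_response_time_seconds", baseline=120.0, target=30.0, higher_is_better=False),
    Kpi("customer_satisfaction_score", baseline=3.8, target=4.3),
]

# Hypothetical measurements collected after a pilot.
measured = {"avg_response_time_seconds": 25.0, "customer_satisfaction_score": 4.1}

report = {kpi.name: kpi.is_met(measured[kpi.name]) for kpi in kpis}
print(report)
```

Writing the baseline next to the target makes it easier to tell later whether the model actually moved the number.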

Understanding the Target Audience

Who will interact with your custom LLM? If you’re building a customer-facing chatbot, your model will need to cater to external customers, ensuring it understands their needs and tone.

If it’s for internal use, like assisting employees, the LLM customization will be geared toward more professional and specific queries. Tailoring your model to the right audience is key to its effectiveness.

Step 2

Collect and Prepare Your Data

If you think you can build a custom LLM without quality data, you’re mistaken. Data is the backbone of any custom large language model.

Without it, the model won’t be able to understand or generate meaningful responses.

Why Data is Key

Your LLM customization is only as good as the data it’s trained on. High-quality, relevant data allows your model to learn patterns, understand language nuances, and generate accurate, context-aware responses. The better your data, the better your model will perform in real-world situations.

If you're building a chatbot for customer service, having a large set of customer interactions will help your custom LLM understand various customer needs and respond appropriately.

Types of Data Needed

To develop an effective custom LLM, you need a variety of data types. This can include:

- Text data: Emails, reports, documents, etc.

- Conversation logs: Previous chatbot transcripts, live chats, etc.

- Customer feedback: Surveys, reviews, or other forms of feedback.

- Product data: Descriptions, specifications, and FAQs.

The more closely your dataset mirrors the real-world use cases your custom large language models will face, the better the results.

How to Collect Data

You don’t have to start from scratch. There are multiple ways to collect data:

- Web scraping: Gather publicly available information from relevant websites.

- Internal databases: Use customer interactions, internal reports, or past tickets.

- Third-party datasets: Purchase industry-specific datasets that are already labeled and ready to use.

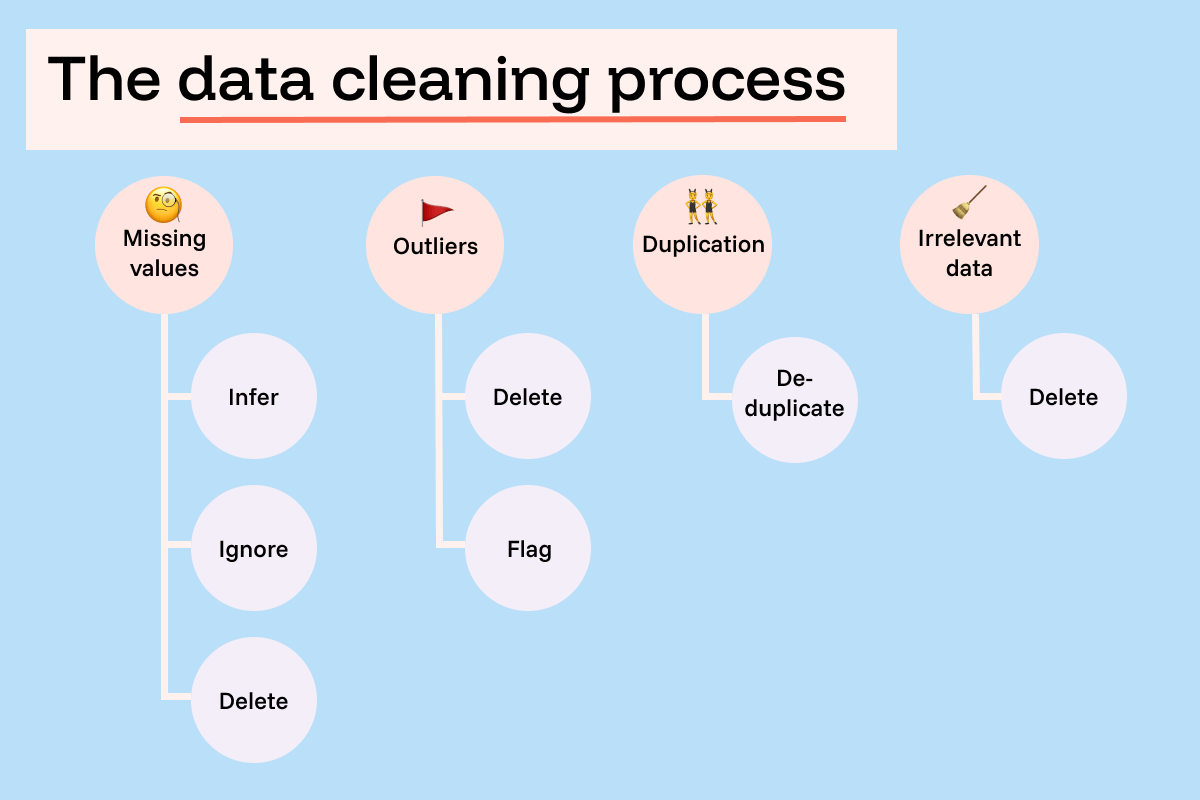

Data Cleaning and Labeling

Once you have your data, it’s time to clean and label it.

- Data cleaning: Remove irrelevant, noisy, or duplicate entries. The cleaner your data, the better the performance of your custom LLM.

- Data labeling: For supervised learning, label the data appropriately. For example, mark customer queries as "billing", "technical support", or "product inquiry."

This labeling helps the model learn which responses correspond to specific queries.

In the case of a chatbot, labeling customer queries ensures that your customized large language models understand what type of issue is being raised, and they can respond with the most relevant answer.
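The cleaning and labeling steps above can be sketched in a few lines. This is a simplified illustration, not a production pipeline: the keyword lists and the `suggest_label` helper are assumptions made for the example, and keyword matching only produces a first-pass label that a person should review.

```python
import re

# Hypothetical keyword lists; a real project would define these with domain experts.
LABEL_KEYWORDS = {
    "billing": ("invoice", "refund", "charge", "payment"),
    "technical support": ("error", "crash", "login", "bug"),
    "product inquiry": ("price", "feature", "available", "compare"),
}


def clean(records):
    """Normalize whitespace, then drop empty entries and case-insensitive duplicates."""
    seen = set()
    cleaned = []
    for text in records:
        normalized = re.sub(r"\s+", " ", text).strip()
        key = normalized.lower()
        if not normalized or key in seen:
            continue
        seen.add(key)
        cleaned.append(normalized)
    return cleaned


def suggest_label(text):
    """Return the first label whose keywords appear in the text, else 'unlabeled'."""
    lowered = text.lower()
    for label, keywords in LABEL_KEYWORDS.items():
        if any(word in lowered for word in keywords):
            return label
    return "unlabeled"


raw = [
    "I was charged twice for my order  ",
    "i was charged twice for my order",
    "",
    "The app shows an error when I log in",
    "Is the premium plan available in Canada?",
]

labeled = [(text, suggest_label(text)) for text in clean(raw)]
for text, label in labeled:
    print(f"{label}: {text}")
```

Anything that comes back as "unlabeled" is a good candidate for manual labeling.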

Step 3

Key Considerations Before Developing a Custom LLM

You might think building a custom LLM is just about collecting data and training a model.

But without careful consideration of a few key factors, your customized large language models could fall short of expectations. It’s about choosing the right model, ensuring data security, and planning for scalability.

Data Privacy and Security

Building a custom LLM means handling sensitive data—whether it’s customer conversations, business strategies, or personal information. If your model mishandles this, the consequences could be disastrous.

Microsoft stresses that businesses, especially in regulated industries, must prioritize data protection and compliance with laws like GDPR and HIPAA.
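One common precaution is to strip obvious personal details from text before it is stored or used for training. The sketch below uses two simple regular expressions as an illustration. The patterns are assumptions for this example, they will miss many kinds of personal data, and they are no substitute for a proper compliance review.

```python
import re

# Simplified patterns for illustration only; real PII detection needs far broader coverage.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text):
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    return text


sample = "Contact jane.doe@example.com or call +1 (555) 010-9999 about order 1234."
print(redact(sample))
```

Short numbers such as the order ID are left alone because the phone pattern requires a longer run of digits and separators.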

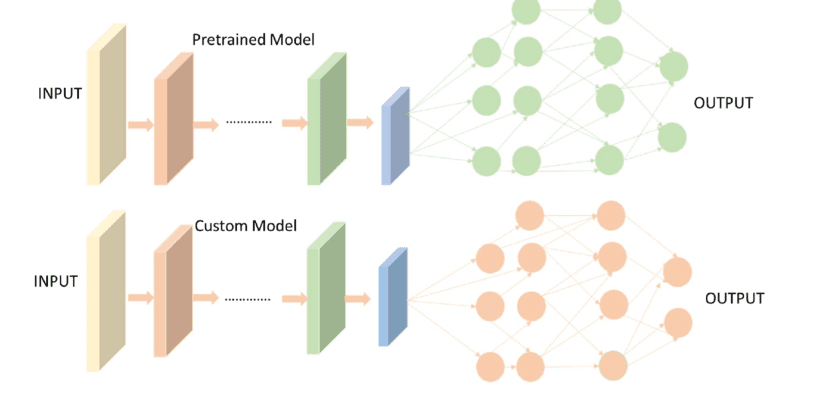

Choosing the Right Model (Pretrained vs. Custom Training)

Many businesses are tempted to build an LLM from scratch, but that’s often not necessary. Starting from a pretrained model, like GPT-4 or BERT, and fine-tuning it can save you time and money.

Fine-tuning means taking a model that’s already been trained on general language tasks and adapting it to your business-specific use cases. This method is faster and more cost-effective.

On the other hand, creating a model from scratch gives you full control, but it’s much more resource-intensive and time-consuming. Deciding between the two comes down to your budget, timeline, and the complexity of your project.

Performance and Scalability

Building a custom LLM is not only about accuracy but also about how well it performs under pressure. Microsoft highlights that scalability is crucial when deploying AI solutions, particularly if you need the model to handle a high volume of real-time queries.

If your custom large language models are slow or inefficient, they can harm user experience and slow down operations.

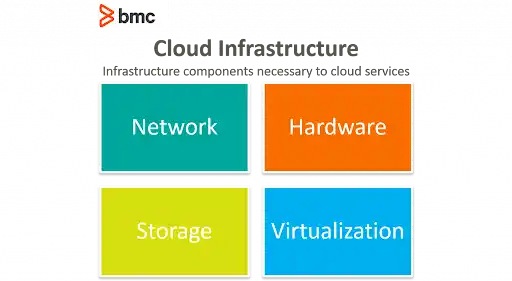

To address scalability, using cloud infrastructure can help. Cloud platforms like AWS, Google Cloud, and Microsoft Azure offer the resources needed to train and run large-scale models.

Considering data security, model choice, and scalability upfront will save you from costly mistakes and ensure that your LLM customization fits your needs in the long run.

Step 4

Set Up the Training Infrastructure

Training a custom LLM can be a resource nightmare if you're not prepared. It’s not only about collecting data but also about having the right infrastructure to process and learn from that data. In most cases, the cloud is your best bet.

Cloud Infrastructure vs. Local Hardware

When it comes to training custom large language models, many businesses think they can rely on local servers or existing hardware.

But the reality is that training these models requires massive computational power, often exceeding what typical company hardware can handle.

Cloud platforms like AWS, Google Cloud, and Microsoft Azure provide specialized infrastructure designed for heavy-duty AI tasks. These services offer powerful machines with flexible pricing that scale as needed.

Model Training Platforms

You can also leverage existing platforms and frameworks like Hugging Face, OpenAI, or TensorFlow.

Depending on which you choose, these provide hosted compute for training or fine-tuning a customized large language model, the tools and frameworks to streamline the process, or both. This helps you save time and avoid reinventing the wheel.

Consider the Scale

As you plan your LLM customization, remember that you’ll need infrastructure that can handle growth.

What works for training a small model today may not be enough when the volume of data increases or when more users start interacting with the model.

Make sure you select an infrastructure that not only meets your current needs but can also grow with your business.

Using cloud-based solutions gives you the flexibility to easily scale your custom large language models and avoid the bottlenecks that come with fixed, on-premise systems.

Step 5

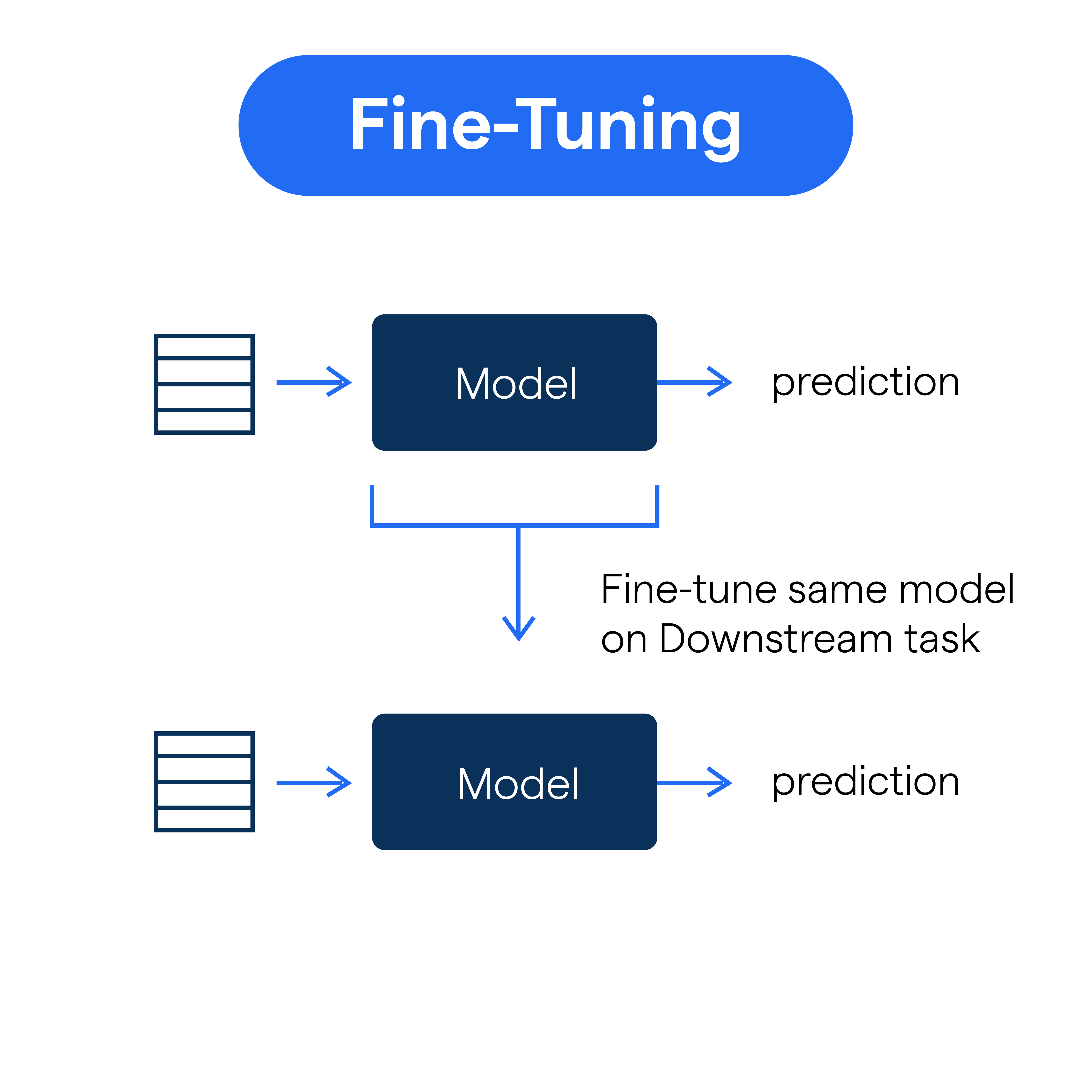

Fine-Tune the Model

Here’s the truth: your custom LLM isn’t perfect out of the box. It needs fine-tuning to truly work for your business.

Fine-tuning is where the magic happens, and surprisingly, this is where many companies go wrong.

Fine-Tuning Techniques

At this stage, you’ll use your domain-specific data to adjust the model. The goal is to make the customized large language models speak your language. There are three main approaches to fine-tuning:

- Supervised Learning: If you have labeled data, this is the easiest route. The model learns to match inputs (like customer queries) to expected outputs (like answers).

- Unsupervised Learning: If your data is unstructured, like raw text, this method helps the model find patterns and structure on its own.

- Reinforcement Learning: For applications like chatbots, this method involves continuous feedback. The model improves as it receives real-time user responses, which makes it well suited to fine-tuning based on actual usage.

If you're building a custom LLM for a customer service chatbot, you would use past customer interactions (like emails or chat logs) to train the model to respond accurately.
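For the supervised route, past conversations usually have to be reshaped into input and output pairs first. Here is a minimal sketch of that step. The `customer` and `agent` field names and the `prompt`/`completion` output format are assumptions for illustration; the exact format depends on the training tool you use.

```python
import json


def to_training_examples(chat_logs):
    """Turn chat logs into prompt/completion pairs, skipping incomplete exchanges."""
    examples = []
    for log in chat_logs:
        question = log.get("customer", "").strip()
        answer = log.get("agent", "").strip()
        if question and answer:
            examples.append({"prompt": question, "completion": answer})
    return examples


def to_jsonl(examples):
    """Serialize examples as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(example) for example in examples)


# Hypothetical chat logs; the second entry has no agent reply and is dropped.
chat_logs = [
    {"customer": "Where is my order?", "agent": "You can track it from the Orders page."},
    {"customer": "How do I reset my password?", "agent": ""},
]

examples = to_training_examples(chat_logs)
print(to_jsonl(examples))
```

Dropping exchanges without an answer keeps the model from learning to respond with nothing.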

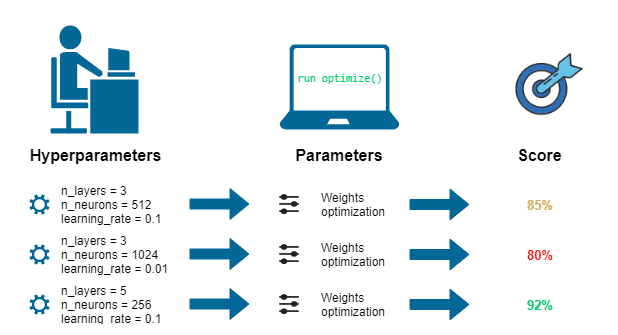

Adjust Hyperparameters

Now, here’s where many developers get stuck: they think fine-tuning is just about feeding data to the model. It’s not.

You also need to adjust hyperparameters, like learning rate or batch size, to ensure the model learns effectively. Even small tweaks can make a big difference in performance.
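To see why the learning rate matters, consider a toy example. This is not an LLM: it is plain gradient descent on a one-parameter loss, used here only as an assumption-laden stand-in to show how the same training loop behaves with different learning rates.

```python
def train(learning_rate, steps=50, start=0.0, optimum=3.0):
    """Run gradient descent on the toy loss (w - optimum)^2 and return the final w."""
    w = start
    for _ in range(steps):
        gradient = 2 * (w - optimum)  # derivative of (w - optimum)^2
        w -= learning_rate * gradient
    return w


# Same data, same number of steps, three different learning rates.
final_weights = {lr: train(lr) for lr in (0.01, 0.1, 1.1)}
for lr, w in final_weights.items():
    print(f"learning_rate={lr}: final w={w:.4f} (optimum is 3.0)")
```

With the smallest rate the parameter is still far from the optimum after 50 steps, with the middle rate it lands almost exactly on it, and with the largest rate each update overshoots and the value diverges. Real models are far more complex, but the same trade-off applies.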

Monitor Progress

The worst thing you can do during fine-tuning is to walk away. You need to actively monitor the process to ensure your custom LLM is improving and not overfitting.

Overfitting happens when the model becomes too tailored to the training data, which can hurt its performance on new, unseen data.
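A common way to catch overfitting while monitoring is early stopping: keep an eye on the loss for data the model is not trained on, and stop once it no longer improves. The sketch below assumes you already record one validation loss per epoch; the loss values and the `patience` setting are made up for illustration.

```python
def early_stopping(val_losses, patience=2):
    """Return (stop_epoch, best_epoch) for a list of per-epoch validation losses.

    Training stops once the validation loss has failed to improve
    for `patience` consecutive epochs.
    """
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch
    return len(val_losses) - 1, best_epoch


# Hypothetical curves: training loss keeps falling while validation loss turns upward.
train_losses = [2.1, 1.5, 1.1, 0.8, 0.6, 0.4]
val_losses = [2.2, 1.7, 1.4, 1.5, 1.6, 1.8]

stop_epoch, best_epoch = early_stopping(val_losses)
print(f"Stop at epoch {stop_epoch}; keep the checkpoint from epoch {best_epoch}")
```

A training loss that keeps dropping while the validation loss rises is the typical sign that the model is memorizing rather than generalizing.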

Step 6

Evaluate the Model

Your custom LLM could be terrible, even after hours of training. You need to test it thoroughly to make sure it actually works.

Testing

Testing is essential. Use a separate set of validation data, which the model hasn’t seen during training, to evaluate its real-world performance.

If you’re building a custom LLM for customer service, for example, run the model through real-world scenarios like answering customer inquiries or generating useful insights from business data. This will show you if it truly meets your needs.

Think about it this way: you wouldn't launch a product without testing it first, and your customized large language models are no different.
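Setting data aside for testing is easiest to do before training starts. Here is a minimal sketch of a reproducible split; the 20% holdout fraction and the fixed seed are assumptions you would adjust for your own dataset.

```python
import random


def split_dataset(examples, holdout_fraction=0.2, seed=42):
    """Shuffle a copy of the data and return (training_set, validation_set)."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    holdout_size = max(1, int(len(shuffled) * holdout_fraction))
    return shuffled[holdout_size:], shuffled[:holdout_size]


# Hypothetical dataset of ten support tickets.
examples = [f"ticket-{i}" for i in range(10)]
train_set, validation_set = split_dataset(examples)
print(len(train_set), "training examples,", len(validation_set), "held out")
```

The important property is that no example appears in both sets, so the evaluation reflects data the model has never seen.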

Performance Metrics

To measure success, you need more than just gut feeling. Track key metrics like:

- Accuracy: How often is the model getting it right?

- Relevance: Does it provide responses that are useful and on-topic?

- Coherence: Are its responses logically sound and easy to follow?

For some use cases, additional metrics like latency (how quickly the model responds) and precision and recall (how well it handles specific tasks like answering a certain type of query) might also be necessary.
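For tasks with a clear right answer, such as routing a query to the correct category, these metrics can be computed directly. The sketch below uses made-up labels and a stand-in model function, so treat the numbers as illustrative only. Relevance and coherence usually need human or rubric-based review and are not covered here.

```python
import time


def accuracy(y_true, y_pred):
    """Share of predictions that match the expected label."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def precision_recall(y_true, y_pred, label):
    """Precision and recall for one label."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == label and p == label for t, p in pairs)
    fp = sum(t != label and p == label for t, p in pairs)
    fn = sum(t == label and p != label for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def timed(fn, *args):
    """Call fn and return (result, elapsed_seconds) as a simple latency check."""
    start = time.perf_counter()
    result = fn(*args)
    return result, time.perf_counter() - start


# Hypothetical expected labels and model predictions.
y_true = ["billing", "billing", "technical", "product", "billing"]
y_pred = ["billing", "technical", "technical", "product", "billing"]

acc = accuracy(y_true, y_pred)
precision, recall = precision_recall(y_true, y_pred, "billing")
_, latency = timed(lambda query: query.upper(), "where is my order?")  # stand-in for a model call

print(f"accuracy={acc:.2f} precision={precision:.2f} recall={recall:.2f} latency={latency:.6f}s")
```

Here the model never mislabels something as billing, so precision is perfect, but it misses one billing query, which lowers recall.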

User Feedback

This is the secret weapon many miss: real-world feedback. Let actual users, whether stakeholders or test groups, interact with the model and give you feedback.

Their insights will help you understand how the LLM customization is really performing in live conditions.

By involving test users in the process, you can identify areas where the model is falling short. Maybe it’s generating irrelevant responses, or perhaps it’s too slow. Either way, this feedback will help you refine your model.

Step 7

Integrate the Model into Business Applications

You’ve built your custom LLM, fine-tuned it, and tested it. But unless you integrate it properly into your business, all that work won’t pay off.

API Integration

The quickest way to integrate your custom large language models into existing business applications is through APIs.

By connecting your LLM customization to systems like CRMs, content management platforms, or websites, you can seamlessly enhance operations.

Without API integration, your custom LLM stays a standalone tool instead of becoming a useful part of your business ecosystem.
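In practice, an API integration often comes down to a small handler that accepts a JSON request, calls the model, and returns a JSON response. The sketch below shows that shape without tying it to any web framework. The `prompt` and `reply` field names and the `fake_model` stand-in are assumptions for illustration.

```python
import json


def fake_model(prompt):
    """Stand-in for a real model call; replace with your own inference code."""
    return f"Echo: {prompt}"


def handle_request(body, model=fake_model):
    """Take a JSON request body and return (status_code, JSON response body)."""
    try:
        payload = json.loads(body)
    except json.JSONDecodeError:
        return 400, json.dumps({"error": "invalid JSON"})
    prompt = payload.get("prompt") if isinstance(payload, dict) else None
    if not isinstance(prompt, str) or not prompt.strip():
        return 400, json.dumps({"error": "missing 'prompt'"})
    return 200, json.dumps({"reply": model(prompt.strip())})


status, response = handle_request('{"prompt": "Where is my order?"}')
print(status, response)
```

Validating the request before calling the model keeps malformed input from a CRM or website widget from reaching it at all.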

Embedding into Customer Touchpoints

For customer-facing applications, like chatbots or virtual assistants, your customized large language models need to be accessible where customers interact.

This could mean embedding the model into your website, mobile app, or communication channels like Slack and email.

Backend Systems Integration

For backend systems like document processing or data analysis, your custom LLM needs to be part of the workflow. This means ensuring it can access relevant data sources and work seamlessly with other tools.

Just like with customer-facing applications, the goal is smooth operation. Whether it’s a backend system or a customer-facing interface, a well-integrated LLM customization will boost productivity and efficiency.

Step 8

Continuously Monitor and Improve the Model

A custom LLM doesn’t stop improving after deployment. In fact, the real work starts afterward.

Continuous Feedback Loop

Even a highly refined custom large language model needs constant tuning. User feedback is crucial for identifying areas where the model can improve.

Gathering real-time data from user interactions lets you spot these gaps and fine-tune the model accordingly.

Take a virtual assistant for a retail company: if it keeps misunderstanding common customer queries about shipping policies, it's time to adjust the model based on those real conversations. This is the power of the continuous feedback loop in LLM customization.
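One simple way to run such a feedback loop is to tag each conversation with a topic and a thumbs-up or thumbs-down, then flag topics where the model is rarely helpful. The sketch below assumes that kind of feedback record; the field names and thresholds are hypothetical.

```python
from collections import defaultdict


def topics_needing_attention(feedback, min_samples=3, max_helpful_rate=0.6):
    """Return topics with enough feedback and a helpful rate below the threshold."""
    counts = defaultdict(lambda: [0, 0])  # topic -> [helpful_count, total_count]
    for entry in feedback:
        stats = counts[entry["topic"]]
        stats[0] += 1 if entry["helpful"] else 0
        stats[1] += 1
    flagged = [
        topic
        for topic, (helpful, total) in counts.items()
        if total >= min_samples and helpful / total < max_helpful_rate
    ]
    return sorted(flagged)


# Hypothetical feedback collected from a retail virtual assistant.
feedback = [
    {"topic": "shipping", "helpful": False},
    {"topic": "shipping", "helpful": False},
    {"topic": "shipping", "helpful": True},
    {"topic": "shipping", "helpful": False},
    {"topic": "returns", "helpful": True},
    {"topic": "returns", "helpful": True},
    {"topic": "returns", "helpful": True},
    {"topic": "billing", "helpful": False},
]

print(topics_needing_attention(feedback))
```

The minimum sample count keeps a single bad rating, like the one billing entry here, from triggering a retraining effort on its own.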

Model Updating

As your business evolves, so should your customized large language models. Changes in customer behavior, market trends, or internal processes can make your model outdated. Regular updates ensure the model reflects these shifts.

If new products are launched or the customer service approach changes, retraining the model with fresh data keeps it aligned with business needs.

Common Challenges and How to Overcome Them

Building a custom LLM comes with challenges, but they can be tackled effectively with the right strategies.

Data Limitations & Quality

Bad data = bad results. Make sure your data is clean, structured, and relevant. Inconsistent or biased data will lead to inaccurate predictions and poor performance.

Focus on enrichment and thorough preprocessing.

Bias and Ethical Considerations

Bias can undermine model fairness. Address it by using diverse datasets and regularly auditing your model’s output.

Ethical AI is key to maintaining trust—ensure transparency and fairness in all model outputs.

Training Costs & Infrastructure

Training a custom large language model can be expensive. Use cloud platforms like AWS, Google Cloud, or Azure to avoid costly on-prem hardware.

These platforms allow for flexible, cost-effective scaling. Alternatively, leverage pretrained models to save time and resources.

Model Overfitting

Overfitting is a common issue when training on limited or biased data. Use cross-validation, regularization, and robust datasets to prevent this.

Balance model complexity with the volume of your data.
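Cross-validation means training and evaluating several times, each time holding out a different slice of the data. Here is a minimal sketch of how the slices can be generated. It only produces index lists; the training and scoring for each fold are left out and would depend on your own setup.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, validation_indices) for each of k folds."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and the number of samples")
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        validation = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, validation
        start += size


folds = list(k_fold_indices(10, 3))
for train, validation in folds:
    print(f"train on {len(train)} examples, validate on {validation}")
```

Every example is used for validation exactly once, which gives a steadier performance estimate than a single split when data is limited. Shuffle the data first if it is sorted in any meaningful order.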

Integration Issues

Integrating customized large language models into existing business workflows can be tricky. Leverage APIs for easy integration with CRMs, websites, or customer-facing apps.

Ensure the model can handle real-time tasks like chatbots or automated support systems.

Scalability

As demand grows, so must your LLM customization. Ensure your cloud infrastructure can scale to handle more data and users.

Implement load balancing and auto-scaling to keep performance optimal as usage increases.

Security & Privacy

Sensitive data can be a huge concern. Implement encryption and access control mechanisms to protect business and customer data. Stay compliant with regulations like GDPR and HIPAA to avoid legal issues.

By addressing these common challenges, you can maximize the effectiveness and longevity of your custom LLM.

Conclusion

Customized large language models (LLMs) are reshaping business operations by offering solutions tailored to unique challenges. Unlike generic AI, custom LLMs address specific industry needs, improving accuracy and efficiency.

From enhancing customer service to automating workflows, LLM customization streamlines processes and delivers measurable results. By investing in a custom LLM, businesses can unlock new opportunities and stay ahead in competitive markets.

BotPenguin simplifies this journey, offering tools to create tailored chatbots and solutions that align with your business goals. With features focused on scalability, security, and accuracy, BotPenguin empowers businesses to integrate cutting-edge AI seamlessly.

The future belongs to those who adopt customized AI solutions. Start building yours today!

Frequently Asked Questions (FAQs)

How do I start developing a custom LLM for my business?

Begin by identifying your business needs, collecting relevant data, selecting the right tools, and fine-tuning the model to meet your specific objectives, such as chatbot functionalities.

How much does it cost to develop a custom LLM for business?

Costs include data acquisition, cloud computing, tooling, and development time. Budgeting depends on the complexity of the model and the specific tasks you aim to automate or improve.

Can a custom LLM be used for chatbots?

Yes, custom LLMs can be used to develop advanced chatbots capable of handling customer queries, providing personalized responses, and automating support, improving customer satisfaction and reducing labor costs.

How do I measure the success of my custom LLM chatbot?

Success can be measured through KPIs like customer satisfaction, resolution rates, response accuracy, and engagement levels. Continuous monitoring and feedback will help refine the model over time.