Introduction

AI is not just for the elite anymore.

Once a playground for big tech, powerful AI tools are now open to anyone with curiosity and a laptop. Among the most exciting breakthroughs is open source LLM image generation.

A 2024 Artsmart study found that 56% of people who have encountered AI-generated art reported enjoying it. This indicates a positive reception of AI-created visuals among the general public.

Open source LLM image generation models are reshaping creativity by putting the ability to craft stunning visuals into the hands of everyday people.

This guide explores the world of open source LLM image generation: what these tools are, how they work, and why they matter. By the end, you'll see how they are breaking barriers and making AI creativity accessible to all.

What is an Open Source LLM?

An open source LLM, or large language model, is an AI model whose code, and usually its trained weights, are publicly available.

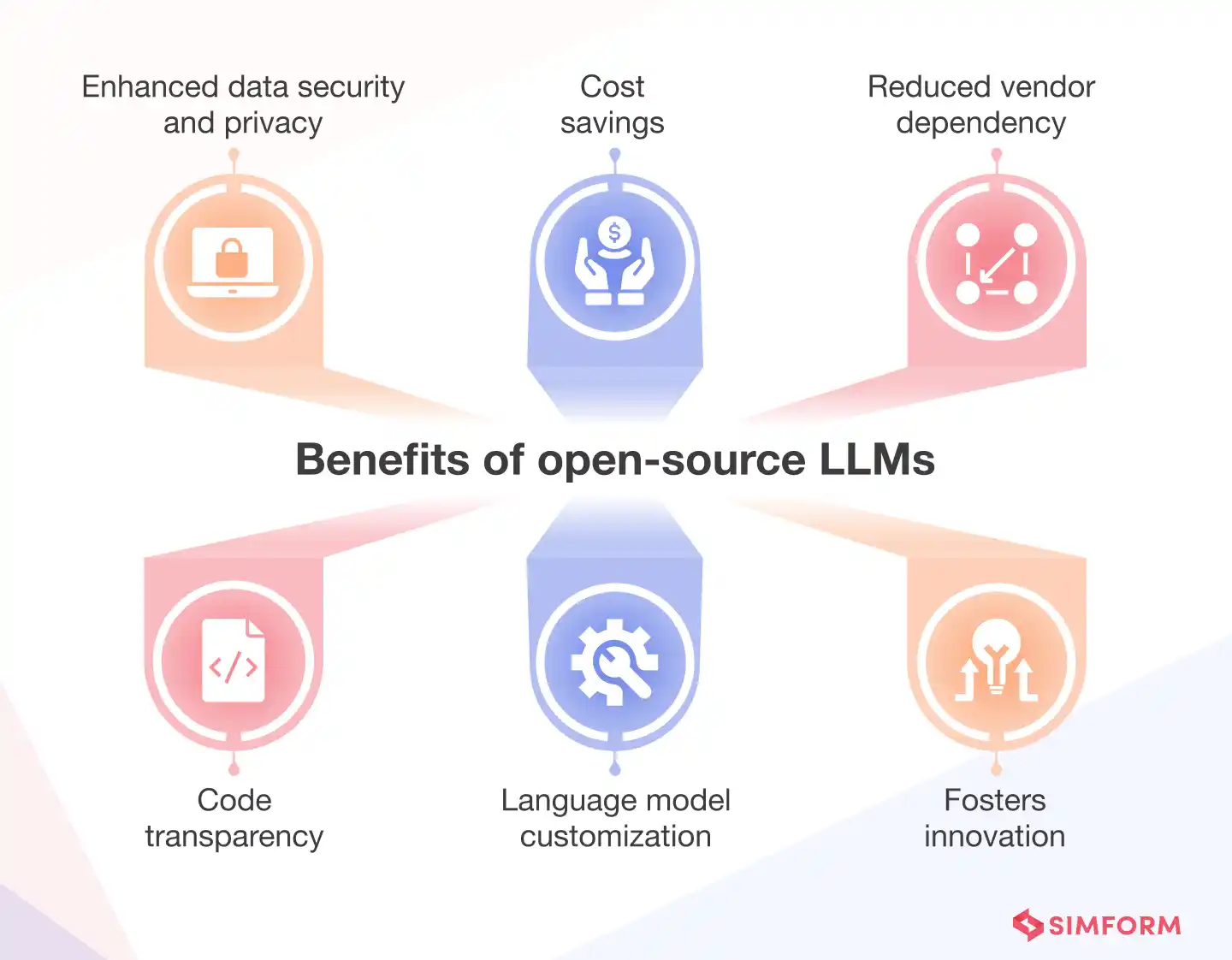

Unlike proprietary models, which are often black boxes controlled by corporations, open source models can be inspected directly. Developers can study, modify, and share them within the terms of each model's license.

The key characteristics of open source LLMs include accessibility, collaboration, and adaptability. Their transparency ensures anyone can understand how they function. They encourage community-driven improvements, often leading to faster innovations.

Plus, they remove the need for expensive licensing fees, making them highly cost-effective for both individuals and organizations.

Open Source LLMs vs. Proprietary Solutions

Proprietary solutions like GPT-4 or DALL·E offer polished performance but are often restrictive. Open source LLMs, in contrast, thrive on flexibility. They foster community collaboration, are customizable for niche needs, and, crucially, are typically free to use.

This makes them ideal for resource-intensive applications like open source LLM image generation, where experimentation and scalability are vital.

How LLMs Generate Images

Image generation powered by large language models (LLMs) is transforming creativity. Combining text and visual data, these systems enable precise control over creative outputs.

Let’s explore how open source LLM image generation works, from input to stunning visual output.

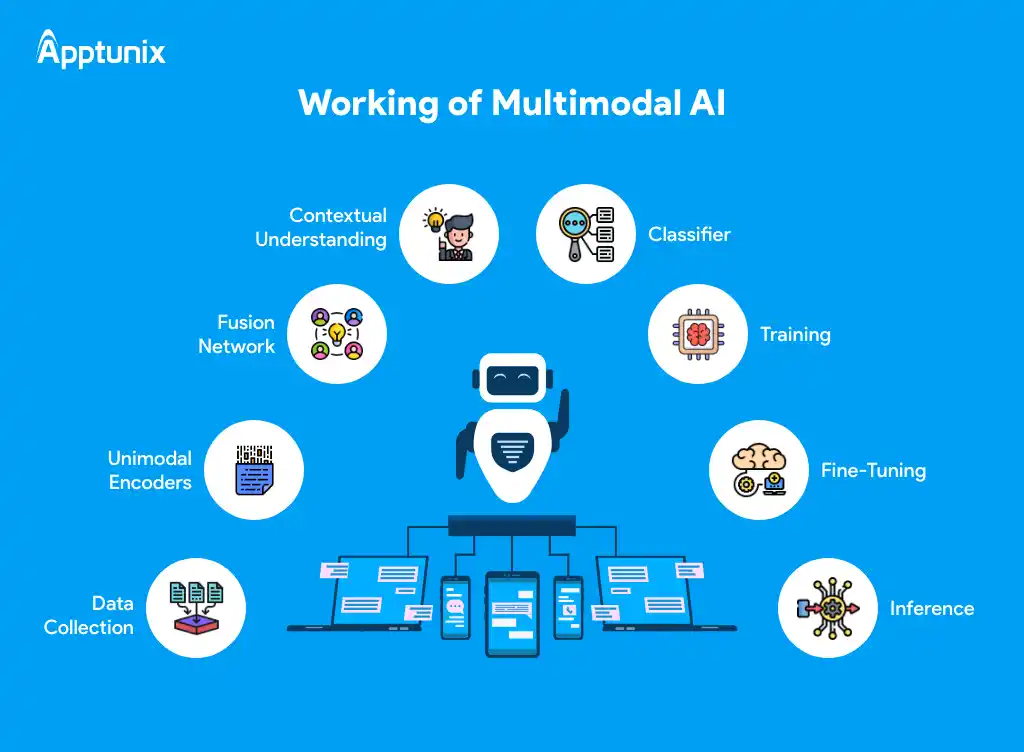

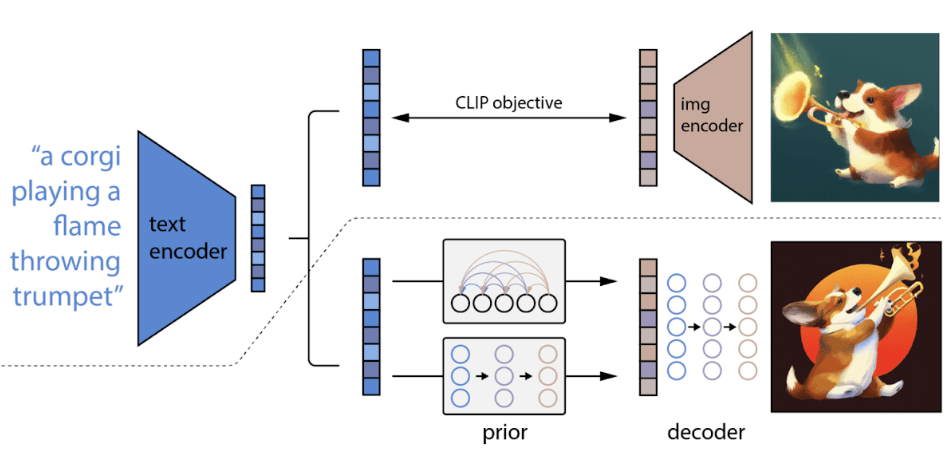

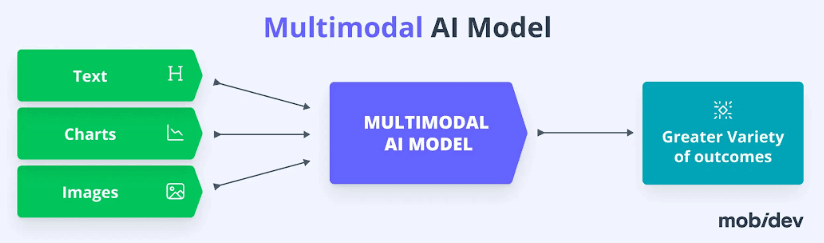

Multimodal AI: Merging Text and Images

At the core of open source LLM image generation are multimodal AI systems. These models can process both text and images, bridging the gap between language understanding and visual creativity.

By analyzing text inputs, they generate highly detailed image outputs that align with the user’s vision.

Interaction with Tools Like Diffusion Models

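Language models often pair with diffusion models like Stable Diffusion or DALL·E. The language component interprets the natural language prompt; in Stable Diffusion, for example, a CLIP text encoder turns the prompt into numerical embeddings that capture its meaning.

Diffusion models then take over, gradually crafting high-quality images through a process that refines random noise into coherent visuals, guided at each step by those embeddings.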

This collaboration enhances the potential of open source LLM image generation, offering flexibility and creativity.
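The noise-to-image idea can be illustrated with a deliberately tiny sketch in plain Python. It is not Stable Diffusion: the "image" is a short list of numbers, and the hypothetical `predict_noise` function cheats by knowing the clean target, standing in for the trained network that a real diffusion model uses to estimate noise from the noisy input and the prompt embedding.

```python
import random


def predict_noise(x, target):
    """Hypothetical stand-in for a trained denoiser.

    It 'knows' the clean target; a real model estimates the noise
    from the noisy input plus the text embedding.
    """
    return [xi - ti for xi, ti in zip(x, target)]


def denoise(target, steps=50, seed=0):
    """Start from pure Gaussian noise and remove part of it at every step."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in target]
    for t in range(steps, 0, -1):
        noise = predict_noise(x, target)
        # Remove 1/t of the remaining noise: the error shrinks linearly
        # and reaches zero on the final step (t == 1).
        x = [xi - ni / t for xi, ni in zip(x, noise)]
    return x


if __name__ == "__main__":
    print(denoise([0.2, -0.5, 1.0], steps=10))
```

Each pass removes part of the remaining noise, so the output converges on the target. A real sampler keeps the same loop shape but uses a learned noise predictor and a carefully designed noise schedule.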

Step-by-Step: From Text to Image

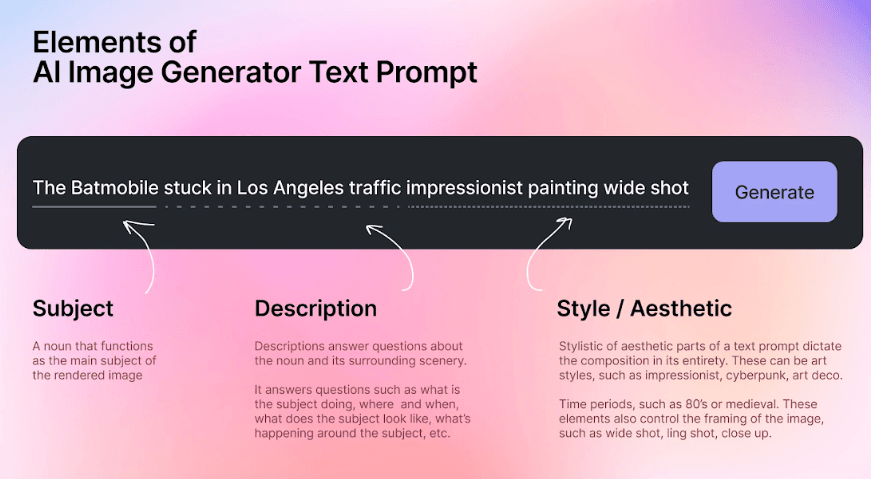

An open source LLM image generation pipeline processes your prompt and creates an image tailored to its text description. Here are the steps:

Step 1

Input the Prompt

The user provides a detailed text description. For example, “A futuristic cityscape with glowing neon lights.”

Step 2

Text Processing

The LLM analyzes the prompt, identifying key themes and visual elements.

Step 3

Model Coordination

The LLM communicates with the diffusion model, translating the processed text into embeddings: numerical vectors the image model uses as conditioning.

Step 4

Image Generation

The diffusion model iteratively refines random noise into an image, guided by the conditioning from Step 3.

Step 5

Final Output

The result is a unique image tailored to the prompt.
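The five steps can be traced end to end in a toy, dependency-free sketch. Everything here is hypothetical: `process_text` is a naive keyword filter, `embed` hashes words into a vector in place of a learned text encoder such as CLIP, and `generate` uses a simple noise-removal loop rather than a real diffusion model, so the "image" is just a vector of numbers.

```python
import hashlib
import random

STOPWORDS = {"a", "an", "the", "with", "of", "and", "in", "on"}


def process_text(prompt):
    """Step 2: lowercase, strip punctuation, and drop filler words."""
    words = [w.strip(".,!?\"'").lower() for w in prompt.split()]
    return [w for w in words if w and w not in STOPWORDS]


def embed(tokens, dim=8):
    """Step 3: map tokens to a fixed-length vector (toy hashing, not a learned encoder)."""
    vec = [0.0] * dim
    for tok in tokens:
        digest = hashlib.sha256(tok.encode()).digest()
        for i in range(dim):
            vec[i] += digest[i] / 255.0 - 0.5
    return vec


def generate(conditioning, steps=20, seed=0):
    """Step 4: start from noise and move step by step toward the conditioning."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in conditioning]
    for t in range(steps, 0, -1):
        x = [xi - (xi - ci) / t for xi, ci in zip(x, conditioning)]
    return x


def text_to_image(prompt, seed=0):
    """Step 1 is the prompt argument; Step 5 is the returned result."""
    tokens = process_text(prompt)
    conditioning = embed(tokens)
    return generate(conditioning, seed=seed)


if __name__ == "__main__":
    print(text_to_image("A futuristic cityscape with glowing neon lights."))
```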

Real-World Examples of Open Source LLM Image Generation

Open source LLM image generation empowers creators across industries to generate unique visuals, enhancing creativity and efficiency. Here are some examples:

Concept Art Creation for Media and Entertainment

Studios and independent creators use text-to-image tools for concept art, including open models like Stable Diffusion as well as proprietary services like DALL·E. For instance, filmmakers visualize futuristic cityscapes or alien environments using open source LLM image generation.

By providing descriptive text prompts, they can quickly generate visual ideas without expensive design software or artists.

Custom Marketing Campaigns

Companies leverage open source LLM image generation to create tailored visuals for ads.

For example, a small coffee brand could generate unique, hyper-stylized posters with prompts like “A cup of coffee surrounded by steaming mountains of coffee beans in an artistic hand-drawn style.”

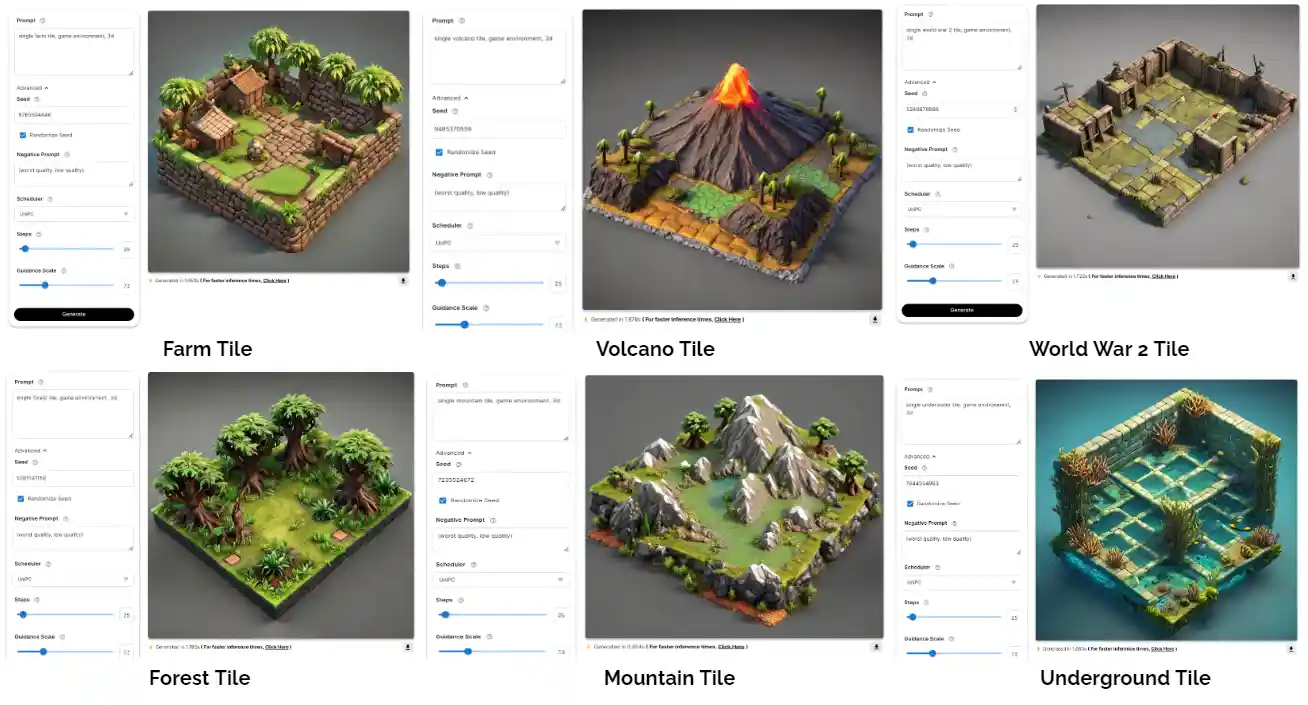

Prototyping in Game Design

Game developers use text-to-image tools for rapid prototyping.

For example, an indie developer might use Stable Diffusion to design a medieval castle by inputting a detailed text prompt like “A foggy castle perched on a rocky hill under a moonlit sky.”

Benefits of Using Open Source LLM Image Generation

Harnessing the power of open source LLM image generation comes with a host of benefits. These models democratize creativity, making advanced AI tools accessible to more people.

Let’s break down why open source solutions are game-changers for image generation.

Cost-Effectiveness

Proprietary tools often come with hefty subscription fees or licensing costs, making them inaccessible to smaller creators or businesses. Open source LLM image generation eliminates these financial barriers.

With free access to the code and pre-trained models, users can experiment and create without worrying about budget constraints. This affordability levels the playing field for innovation.

Flexibility and Customizability

Open source models like Stable Diffusion (for images) or BLOOM (for text) allow developers to tweak the code to fit specific needs.

Whether it’s optimizing the model for a unique style or integrating it into a larger application, open source LLM image generation offers unparalleled flexibility.

Developers can adjust parameters, train models on niche datasets, or even combine them with other tools to enhance functionality.
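One way to keep those adjustable parameters organized is a small settings object. This is a hypothetical sketch: the field names mirror arguments that Hugging Face's `diffusers` pipelines accept (`num_inference_steps`, `guidance_scale`, `negative_prompt`, `width`, `height`), but the class itself is not part of any library, and the default values are illustrative.

```python
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class GenerationConfig:
    """Hypothetical bundle of the knobs most Stable Diffusion front ends expose."""

    prompt: str
    negative_prompt: str = ""
    num_inference_steps: int = 30   # more steps: slower, often finer detail
    guidance_scale: float = 7.5     # how strongly the image follows the prompt
    width: int = 512
    height: int = 512
    seed: int = 0                   # same seed + same settings -> reproducible output

    def __post_init__(self):
        # Stable Diffusion's autoencoder downsamples images by 8,
        # so width and height must be multiples of 8.
        if self.width % 8 or self.height % 8:
            raise ValueError("width and height must be multiples of 8")
        if self.num_inference_steps < 1:
            raise ValueError("num_inference_steps must be positive")


if __name__ == "__main__":
    cfg = GenerationConfig("A foggy castle perched on a rocky hill", guidance_scale=9.0)
    print(asdict(cfg))
```

Freezing the dataclass means a configuration cannot be changed after it is created, so each image can be logged alongside the exact settings that produced it.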

Community-Driven Innovation

The open source community plays a vital role in improving these models. Developers worldwide collaborate to refine code, address bugs, and share ideas.

This collaborative environment accelerates progress, ensuring open source LLM image generation tools remain cutting-edge. Frequent updates and the addition of new features come directly from community contributions.

Access to Pre-Trained Models and Datasets

One of the standout benefits is access to pre-trained models and high-quality datasets. These resources significantly reduce the time and computational effort needed to get started.

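For example, users can start from Stable Diffusion checkpoints, pre-trained on large image-text datasets, instead of training an image model from scratch. Open text models such as GPT-Neo or LLaMA do not generate images themselves, but they can be paired with an image model, for instance to expand short ideas into detailed prompts.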

Popular Open Source LLM Image Generation Tools

Choosing the right tool is crucial for making the most of open source LLM image generation. Each tool has unique features, strengths, and use cases.

Here’s a closer look at five popular tools and how they compare.

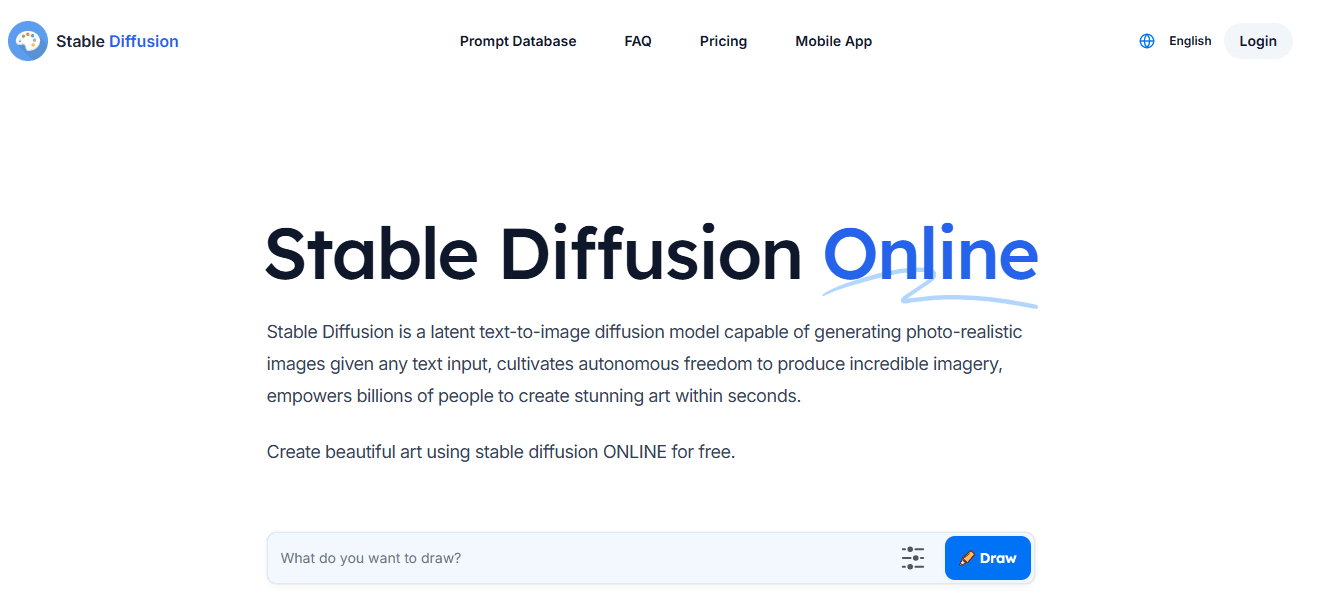

Stable Diffusion

Stable Diffusion is a top choice for many in open source LLM image generation. It’s a diffusion-based model known for generating high-quality images from detailed prompts.

Its flexibility allows users to run it on local hardware or cloud platforms. The tool supports extensive customization, making it ideal for projects ranging from concept art to realistic renderings. Its active community ensures robust support and frequent updates.

Example: A graphic designer can create photorealistic images of landscapes or product mockups with minimal effort, all while customizing the style to fit specific branding.

DreamBooth

DreamBooth is a fine-tuning technique that personalizes existing text-to-image models, such as Stable Diffusion.

It trains the model on a small set of reference images, often just a handful of photos of a specific person or object, and then generates new images of that subject. This makes it perfect for niche applications or hyper-personalized content.

Example: A wedding photographer can use DreamBooth to create stylized portraits of a couple based on their original photos, adding unique artistic flair.
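The core of DreamBooth's training objective can be sketched in a few lines. This is a simplified illustration, not a training script: predictions and noise are plain lists, the helper names are hypothetical, and the rare identifier `sks` follows a convention common in community DreamBooth examples. The second loss term is DreamBooth's prior-preservation loss, which keeps the model's general notion of the class from drifting while it learns the specific subject.

```python
def mse(pred, target):
    """Mean squared error between two equal-length lists."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def build_prompts(identifier, class_noun):
    """Bind the subject to a rare token, and keep a generic prompt for the class."""
    instance_prompt = f"a photo of {identifier} {class_noun}"
    class_prompt = f"a photo of {class_noun}"
    return instance_prompt, class_prompt


def dreambooth_loss(inst_pred, inst_noise, class_pred, class_noise, prior_weight=1.0):
    """Instance term: learn the subject from the user's few photos.

    Prior-preservation term: keep generating ordinary members of the class,
    so the model does not forget what a generic 'dog' or 'person' looks like.
    """
    return mse(inst_pred, inst_noise) + prior_weight * mse(class_pred, class_noise)


if __name__ == "__main__":
    print(build_prompts("sks", "person"))
```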

ControlNet

ControlNet is an add-on network for Stable Diffusion that gives users finer control over image outputs.

By incorporating additional input data, such as pose or depth maps, users can achieve greater precision and alignment with their creative vision. It’s ideal for technical users who need exact results.

Example: Game developers can use ControlNet to ensure character designs meet specific pose requirements, making it a valuable asset in animation pipelines.
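ControlNet's extra input is itself just an image, such as an edge map, a depth map, or a pose skeleton. The sketch below builds a crude edge map from a grayscale grid by thresholding brightness differences between neighboring pixels. It is a simplified stand-in, not the Canny edge detector (available as `cv2.Canny` in OpenCV) that many ControlNet workflows use, and the threshold value is an arbitrary assumption.

```python
def edge_map(gray, threshold=50):
    """Mark pixels where brightness jumps sharply (a crude stand-in for Canny).

    gray: 2D list of 0-255 brightness values. Returns a 2D list of 0 or 255.
    """
    h, w = len(gray), len(gray[0])
    edges = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Brightness change to the right and below (0 at the image border).
            dx = abs(gray[y][x + 1] - gray[y][x]) if x + 1 < w else 0
            dy = abs(gray[y + 1][x] - gray[y][x]) if y + 1 < h else 0
            edges[y][x] = 255 if max(dx, dy) >= threshold else 0
    return edges


if __name__ == "__main__":
    image = [[0, 0, 255, 255] for _ in range(4)]  # dark left half, bright right half
    for row in edge_map(image):
        print(row)
```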

DeepArt

DeepArt is a user-friendly tool that uses neural style transfer to turn photos into artistic renditions. It’s less about generating images from text and more about applying artistic styles to existing photos.

Its simplicity makes it an excellent starting point for beginners exploring AI-driven creativity.

Example: An artist can turn a standard photograph into a Van Gogh-style painting with just a few clicks.
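Style transfer tools of this kind are generally built on neural style transfer, which describes an image's style through Gram matrices: correlations between the feature channels of a convolutional network. The sketch below computes a Gram matrix and a simple style loss on hand-written feature lists. It assumes the features have already been extracted (in practice from a pretrained network such as VGG) and uses a simplified normalization, so treat it as an illustration of the idea rather than DeepArt's own implementation.

```python
def gram_matrix(features):
    """features: C channels, each a flattened list of N activations.

    Returns the C x C matrix of channel correlations, normalized by N.
    """
    n = len(features[0])
    return [[sum(a * b for a, b in zip(fi, fj)) / n for fj in features] for fi in features]


def style_loss(gen_features, style_features):
    """Mean squared difference between Gram matrices.

    Comparing channel correlations rather than pixel positions captures
    texture and color patterns while ignoring the layout of the scene.
    """
    g, s = gram_matrix(gen_features), gram_matrix(style_features)
    c = len(g)
    return sum((g[i][j] - s[i][j]) ** 2 for i in range(c) for j in range(c)) / (c * c)


if __name__ == "__main__":
    print(gram_matrix([[1.0, 0.0], [0.0, 1.0]]))
```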

Disco Diffusion

Disco Diffusion is well-known for generating surreal and abstract imagery. Its outputs often resemble dreamlike, painterly art, making it a favorite among experimental artists. It usually runs as a Google Colab notebook with many settings to tune, so it can feel complex, but the results are stunning.

Example: A conceptual artist can create otherworldly visuals for an exhibition, capturing themes of fantasy and surrealism.

Comparison and Selection Tips

When choosing an open source LLM image generation tool, consider ease of use, customization, and community support to find the best fit for your needs.

- Ease of Use: DeepArt and DreamBooth are beginner-friendly, while ControlNet and Disco Diffusion cater to advanced users.

- Customization: Stable Diffusion and DreamBooth excel here, offering flexibility for developers and creatives.

- Community Support: Stable Diffusion has the most extensive community, ensuring continuous improvements and help for newcomers.

Tips for Selection

Select the right tool based on your project's needs, whether that is versatility, personalization, or artistic style. For example:

- Use Stable Diffusion for versatility.

- Choose DreamBooth for personalizing outputs.

- Try Disco Diffusion for artistic projects.

- Opt for ControlNet for precision control.

- Explore DeepArt for style transformations.

With these tools, open source LLM image generation becomes a powerful ally in creative and professional endeavors, catering to a wide range of needs.

Challenges and Limitations of Open Source LLM Image Generation

While open source LLM image generation offers exciting opportunities, it also comes with challenges.

From technical hurdles to ethical dilemmas, these limitations can impact how effectively these tools are used. Here’s a breakdown of the key issues.

Technical Challenges

One of the biggest hurdles in open source LLM image generation is the significant computing power required.

Running or fine-tuning models like Stable Diffusion often demands high-end GPUs, which can be expensive and inaccessible to many users.

Training these models from scratch is even more resource-intensive, requiring vast datasets and expertise in machine learning.

Example: A small design studio may struggle to afford the necessary hardware to run a model locally, limiting its ability to experiment with advanced image generation tools.
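A quick back-of-the-envelope calculation shows why hardware matters. The sketch below counts only the memory needed to hold model weights, using the parameter counts published for Stable Diffusion v1 (about 860 million in the UNet and 123 million in the text encoder); activations, the image autoencoder, and framework overhead all add to the real total.

```python
def weights_gib(num_params: int, bytes_per_param: int) -> float:
    """Memory needed just to hold num_params values, in GiB."""
    return num_params * bytes_per_param / 1024**3


# Approximate published sizes for Stable Diffusion v1 (autoencoder not included).
SD_V1_PARAMS = 860_000_000 + 123_000_000

inference_fp32 = weights_gib(SD_V1_PARAMS, 4)  # 32-bit floats
inference_fp16 = weights_gib(SD_V1_PARAMS, 2)  # 16-bit floats, common for inference
# Full fine-tuning with the Adam optimizer in 32-bit precision stores weights,
# gradients, and two optimizer moments: roughly 16 bytes per parameter.
training_fp32 = weights_gib(SD_V1_PARAMS, 16)

if __name__ == "__main__":
    for label, gib in [("fp32 inference", inference_fp32),
                       ("fp16 inference", inference_fp16),
                       ("fp32 Adam training", training_fp32)]:
        print(f"{label:20} ~{gib:.1f} GiB before activations")
```

That works out to roughly 3.7 GiB, 1.8 GiB, and 14.6 GiB respectively, which is why inference can fit on a mid-range GPU while full fine-tuning pushes toward high-end hardware.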

Ethical Concerns

Ethical issues arise in two main areas: misuse and dataset bias. Open source LLM image generation tools can be exploited to create harmful or misleading content, such as deepfakes or offensive imagery.

Additionally, biases in the training data can result in skewed outputs, reinforcing stereotypes or excluding underrepresented groups.

Example: A biased dataset might generate less diverse character designs or promote specific aesthetic preferences, reducing inclusivity in creative projects.
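A simple first check for this kind of skew is to generate a batch of images from a neutral prompt, annotate a visible attribute, and compare how often each group appears. In the sketch below, the labels, group names, and the half-of-an-even-split threshold are all hypothetical; the annotations would come from human review or a separate classifier, and a real audit would need larger samples and careful category definitions.

```python
from collections import Counter


def representation_report(labels, expected_groups):
    """Share of each expected group among annotated outputs.

    labels: one (hypothetical) annotation per generated image.
    """
    counts = Counter(labels)
    total = len(labels)
    return {g: counts.get(g, 0) / total for g in expected_groups}


def underrepresented(report, tolerance=0.5):
    """Flag groups that appear less than `tolerance` times an even split."""
    fair_share = 1 / len(report)
    return sorted(g for g, share in report.items() if share < tolerance * fair_share)


if __name__ == "__main__":
    labels = ["group_a"] * 8 + ["group_b"] * 2
    report = representation_report(labels, ["group_a", "group_b", "group_c"])
    print(report, underrepresented(report))
```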

Usability Barriers

Despite their flexibility, these tools often have steep learning curves, making them challenging for non-technical users.

Setting up environments, understanding prompts, and optimizing outputs require a level of technical skill that can be intimidating for beginners.

Example: An artist without coding experience might find it difficult to navigate installation processes or adjust settings to achieve desired results.

Getting Started with Open Source LLM Image Generation

Starting with open source LLM image generation might seem daunting, but with the right steps, it’s surprisingly accessible. This guide will help you set up your first project, from choosing tools to generating your first image.

Step 1

Finding and Downloading Tools/Models

Begin by identifying the tool that suits your needs. Popular options like Stable Diffusion, DreamBooth, or Disco Diffusion are widely supported and well documented.

Visit platforms like Hugging Face or GitHub to download pre-trained models and their documentation.

Example: To start with Stable Diffusion, you can get the code from its official GitHub repository and download the model weights from Hugging Face.

Step 2

Setting Up a Development Environment

You’ll need an environment to run the model. For local setups, you'll need Python, and Conda is a convenient way to manage separate Python environments. Install the dependencies listed in the tool’s documentation.

If you lack the required hardware, opt for cloud platforms like Google Colab or AWS, which provide GPU access.

Tip: Beginners often find Google Colab a great starting point for exploring open source LLM image generation without needing expensive hardware.
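Before installing anything large, a short script can confirm which dependencies are already present. The package list (`torch`, `diffusers`, `transformers`) assumes a Hugging Face-based Stable Diffusion setup, and the minimum Python version is an assumption; check your tool's own documentation for the real requirements. The check uses only the standard library and looks packages up without importing them.

```python
import importlib.util
import sys

# Assumed dependencies for a Hugging Face-based Stable Diffusion setup.
REQUIRED = ("torch", "diffusers", "transformers")


def preflight(packages=REQUIRED, min_python=(3, 8)):
    """Report which packages can be found and whether Python is new enough."""
    report = {name: importlib.util.find_spec(name) is not None for name in packages}
    report["python_ok"] = sys.version_info[:2] >= min_python
    return report


if __name__ == "__main__":
    for name, ok in preflight().items():
        print(f"{name:14} {'OK' if ok else 'MISSING'}")
```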

Step 3

Running Your First Text-to-Image Task

Once the environment is ready, input your first text prompt. For instance, a prompt like “A serene lake surrounded by mountains at sunset” should produce a striking visual output.

Experiment with prompt details and parameters to fine-tune results.

Pro Tip: Use sample prompts provided in the documentation to understand how descriptions affect image outcomes.
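Two ideas help when experimenting. First, the guidance scale setting in Stable Diffusion front ends controls classifier-free guidance, which blends an unconditional noise prediction with a prompt-conditioned one; `cfg_combine` shows that arithmetic on plain lists. Second, varying one setting at a time across a few fixed seeds makes comparisons fair; `sweep` builds such a grid. Both function names and their default values are hypothetical.

```python
from itertools import product


def cfg_combine(uncond, cond, guidance_scale):
    """Classifier-free guidance on plain lists.

    Moves the prediction from the unconditional estimate toward (and, for
    scales above 1.0, past) the prompt-conditioned one. A scale of 1.0
    simply returns the conditioned prediction.
    """
    return [u + guidance_scale * (c - u) for u, c in zip(uncond, cond)]


def sweep(prompt, guidance_scales=(5.0, 7.5, 10.0), seeds=(0, 1, 2)):
    """Every combination of guidance scale and seed, for side-by-side comparison."""
    return [
        {"prompt": prompt, "guidance_scale": g, "seed": s}
        for g, s in product(guidance_scales, seeds)
    ]


if __name__ == "__main__":
    for run in sweep("A serene lake surrounded by mountains at sunset"):
        print(run)
```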

Step 4

Learning and Troubleshooting

To refine your skills, explore community forums, tutorials, and repositories. Platforms like Reddit, Hugging Face, and YouTube are excellent for finding guides and resolving issues.

Example: If you encounter errors while running a model, search for specific error codes on GitHub issues or Stack Overflow, where solutions are often shared.
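Searches work best with the exact error type and message rather than a paraphrase or a long traceback full of local file paths. The hypothetical helpers below print that one line when a step fails, then re-raise the error so the full traceback is still shown.

```python
def search_query(exc: BaseException) -> str:
    """Format an exception as 'TypeName: message', the part worth searching for."""
    return f"{type(exc).__name__}: {exc}"


def run_and_report(step):
    """Run a zero-argument callable; on failure, print a search query and re-raise."""
    try:
        return step()
    except Exception as exc:
        print("Search for:", search_query(exc))
        raise


if __name__ == "__main__":
    print(run_and_report(lambda: "step finished"))
```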

Future of Open Source LLM Image Generation

The future of open source LLM image generation is brimming with possibilities.

As multimodal AI continues to advance, these tools are poised to become more powerful, accessible, and collaborative. Here’s a glimpse into what lies ahead.

Emerging Trends in Multimodal AI

The integration of text, image, and even audio data is accelerating. Future open source LLM image generation models will likely handle increasingly complex prompts, combining multiple modalities for richer outputs.

For instance, AI might soon generate animated sequences or interactive visuals based on simple text descriptions.

Evolution of Open Source Tools

Open source models are expected to become faster, more efficient, and less resource-intensive. Efforts are already underway to reduce hardware requirements, making these tools accessible to a broader audience.

Additionally, more pre-trained models with diverse datasets will emerge, improving inclusivity and reducing bias in outputs.

Opportunities for Collaboration

Community-driven innovation will remain a cornerstone of open source LLM image generation. Developers, artists, and researchers can collaborate on new features, refine tools, and share creative breakthroughs.

These efforts will drive rapid evolution and open up opportunities for unique cross-disciplinary projects.

Conclusion

In conclusion, open source LLM image generation is revolutionizing creativity. It is breaking barriers with accessible, flexible, and community-driven tools.

Open source LLM image generation models empower users across industries to bring ideas to life, from stunning visuals to personalized designs.

While challenges, like compute requirements and ethical concerns, remain, ongoing innovation and collaboration are addressing these hurdles. As multimodal AI evolves, the potential for creative expression will only expand.

By embracing these tools today, you’re not just exploring cutting-edge technology. You’re shaping the future of creativity. Whether you’re an artist, developer, or entrepreneur, the possibilities are endless with open source LLM image generation.

For an extra edge, you can pair these tools with a chatbot platform like BotPenguin. Note that BotPenguin does not offer its own open source LLM (large language model) or a direct image generation integration.

However, BotPenguin provides a chatbot platform that integrates with various AI and machine learning tools. It allows users to integrate features like image generation, custom AI models, and other automation.

Frequently Asked Questions (FAQs)

What is open source LLM image generation?

Open source LLM image generation uses freely available AI models to create images from text prompts.

These models enable creativity through community-driven, customizable tools like Stable Diffusion and DreamBooth, offering cost-effective alternatives to proprietary solutions.

What are the benefits of open source LLM image generation?

Key benefits of open source LLM image generation include cost-effectiveness, flexibility, community-driven innovation, and access to pre-trained models.

Open source tools empower users to experiment, customize outputs, and create unique visuals without high costs or restrictive licenses.

What tools are best for open source LLM image generation?

Popular open source LLM image generation tools include Stable Diffusion for versatility, DreamBooth for personalization, Disco Diffusion for artistic imagery, DeepArt for style transfer, and ControlNet for enhanced control over outputs. Each serves unique creative needs.

What challenges exist in using open source LLM image generation?

Challenges of open source LLM image generation include high compute requirements, training complexity, ethical concerns like dataset biases, and usability barriers for non-technical users. These issues can limit accessibility and reliability for some creators.

How do open source tools compare to proprietary AI solutions?

Open source LLM image generation tools are free, customizable, and community-supported, offering flexibility and accessibility.

Proprietary solutions, while polished, can be expensive and restrictive. Open source LLM image generation tools democratize creativity, making advanced AI accessible to broader audiences.

What is the future of open source LLM image generation?

The future of open source LLM image generation includes trends like multimodal AI, enhanced efficiency, and collaborative innovation.

Emerging tools will handle complex inputs and diverse outputs, making creativity more inclusive and accessible for developers, artists, and businesses.