What is Hadoop?

Imagine you've got a massive pile of data. And when I say massive, I mean colossal, like mountains of information. Now, dealing with this much data can be a real headache. It's like trying to find a needle in a haystack, except the haystack is the size of a continent.

That's where Hadoop steps in. Think of Hadoop as a giant toolbox for gigantic amounts of data. It's an open-source software framework that stores and processes data across clusters of ordinary (commodity) computers instead of one big server. With Hadoop, you can store, process, and analyze data on a scale no single machine could handle.

Understanding the Big Data Ecosystem

Understanding the Big Data ecosystem involves recognizing the interconnected technologies and processes used to collect, store, process, and analyze vast datasets. Examples include Hadoop for storage and processing, Apache Spark for fast in-memory and streaming analytics, and Tableau for data visualization.

Hadoop Ecosystem Components

The Hadoop ecosystem equips a Big Data engineer with HDFS, YARN, and MapReduce for storage, resource management, and processing; frameworks like Spark, Hive, and Pig for data processing tasks; and tools like Flume, Kafka, HBase, and Cassandra for data ingestion and storage.

Core Hadoop Ecosystem Components

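Hadoop Distributed File System (HDFS): Think of HDFS as a giant storage warehouse for your data. It splits your files into large blocks (128 MB by default in Hadoop 2 and later) and spreads them across multiple machines, keeping several copies of each block (three by default). Those copies let your data survive a machine failure, and adding machines adds capacity.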
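
To make block splitting and replication concrete, here is a minimal sketch, assuming the Hadoop 2+ defaults of 128 MB blocks and a replication factor of 3. The round-robin placement, the helper names, and the node names are hypothetical simplifications; real HDFS lets the NameNode choose replica locations with a rack-aware policy.

```python
import math

BLOCK_SIZE_MB = 128  # HDFS default block size (dfs.blocksize) in Hadoop 2 and later
REPLICATION = 3      # HDFS default replication factor (dfs.replication)

def split_into_blocks(file_size_mb: int, block_size_mb: int = BLOCK_SIZE_MB) -> list[int]:
    """Return the size of each block; the last block may be smaller than the rest."""
    n_blocks = math.ceil(file_size_mb / block_size_mb)
    sizes = [block_size_mb] * n_blocks
    if n_blocks and file_size_mb % block_size_mb:
        sizes[-1] = file_size_mb % block_size_mb
    return sizes

def place_replicas(n_blocks: int, nodes: list[str],
                   replication: int = REPLICATION) -> dict[int, list[str]]:
    """Assign each block to `replication` distinct nodes, round-robin (hypothetical policy)."""
    if replication > len(nodes):
        raise ValueError("need at least as many nodes as replicas")
    return {
        block: [nodes[(block + r) % len(nodes)] for r in range(replication)]
        for block in range(n_blocks)
    }

def readable_after_failure(placement: dict[int, list[str]], failed: set[str]) -> bool:
    """True if every block still has at least one replica on a live node."""
    return all(any(node not in failed for node in replicas)
               for replicas in placement.values())

blocks = split_into_blocks(300)  # a 300 MB file
print(blocks)                    # [128, 128, 44]
placement = place_replicas(len(blocks), ["node1", "node2", "node3", "node4"])
print(readable_after_failure(placement, {"node2"}))  # True
```

With three copies per block, any single node (or even any two nodes) can fail without a block becoming unreadable; losing all three holders of a block is what causes data loss.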

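MapReduce: This is the brains behind the operation. MapReduce processes vast amounts of data in parallel across a cluster of computers in two phases: the map phase turns each chunk of input into key-value pairs, and the reduce phase combines all the values that share a key. It's like having a hundred mini data processors crunching numbers at the same time, each on its own slice of the data.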
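
The classic illustration is a word count. The sketch below runs the map, shuffle, and reduce steps in a single Python process, so it only shows how data flows between the phases; on a real cluster Hadoop runs many mappers and reducers in parallel and moves the grouped data over the network. The function names are hypothetical, not Hadoop APIs.

```python
from collections import defaultdict
from typing import Iterable, Iterator

def map_phase(line: str) -> Iterator[tuple[str, int]]:
    """Mapper: emit (word, 1) for every word in one input line."""
    for word in line.lower().split():
        yield word, 1

def shuffle(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    """Shuffle/sort: group every value emitted for the same key, ordered by key."""
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(sorted(groups.items()))

def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    """Reducer: combine all counts for one word."""
    return key, sum(values)

def word_count(lines: Iterable[str]) -> dict[str, int]:
    # On a cluster, each input split would go to a different mapper.
    mapped = (pair for line in lines for pair in map_phase(line))
    grouped = shuffle(mapped)
    # On a cluster, different keys would go to different reducers.
    return dict(reduce_phase(k, v) for k, v in grouped.items())

print(word_count(["big data big clusters", "data everywhere"]))
# {'big': 2, 'clusters': 1, 'data': 2, 'everywhere': 1}
```

Because each mapper only needs its own slice of input and each reducer only needs the values for its keys, both phases can be spread across as many machines as the cluster has.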

Data Processing Frameworks

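Apache Spark: Spark is like the Ferrari of data processing frameworks. It keeps working data in memory, which often makes it much faster than MapReduce, especially for iterative workloads, and it's versatile enough to handle complex tasks like machine learning (MLlib) and graph processing (GraphX).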

Apache Hive: Hive is your go-to tool for querying and analyzing data using a SQL-like language called HiveQL. It's perfect for those familiar with SQL who want to leverage their skills in the Big Data world.

Apache Pig: Pig is all about simplicity. It lets you write data pipelines using a scripting language called Pig Latin, making it easy to process and analyze data without getting bogged down in complex code.

Data Ingestion and Integration

Apache Flume: Flume is a distributed data pipeline service for efficiently collecting, aggregating, and moving large amounts of data, especially log and event data, from various sources into Hadoop.

Apache Kafka: Kafka is like a supercharged messaging system. It durably stores streams of records published by many sources and lets many consumers read them, enabling real-time data processing.
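
Kafka organizes each stream (a topic) into partitions, each an append-only log, and consumers keep track of their own position (offset) in each partition. The in-memory sketch below is a hypothetical model of that idea, not the Kafka client API. It picks a partition from the record key with CRC32 as a stand-in; Kafka's default partitioner hashes keys with murmur2.

```python
import zlib
from dataclasses import dataclass, field

@dataclass
class Topic:
    """A toy topic made of append-only partitions (an illustration, not a Kafka client)."""
    num_partitions: int
    partitions: list[list[tuple[str, str]]] = field(init=False)

    def __post_init__(self) -> None:
        self.partitions = [[] for _ in range(self.num_partitions)]

    def produce(self, key: str, value: str) -> tuple[int, int]:
        """Append a record and return (partition, offset); equal keys map to one partition."""
        partition = zlib.crc32(key.encode()) % self.num_partitions
        self.partitions[partition].append((key, value))
        return partition, len(self.partitions[partition]) - 1

    def consume(self, partition: int, offset: int) -> list[tuple[str, str]]:
        """Read records from `offset` onward; reading does not remove them from the log."""
        return self.partitions[partition][offset:]

topic = Topic(num_partitions=3)
# Several sources write events to the same topic.
for source, reading in [("sensor-a", "t=21"), ("sensor-b", "t=19"), ("sensor-a", "t=22")]:
    topic.produce(source, reading)

partition, offset = topic.produce("sensor-a", "t=23")
# Records with the same key stay in production order within their partition.
print([v for k, v in topic.consume(partition, 0) if k == "sensor-a"])  # ['t=21', 't=22', 't=23']
```

Since consuming only advances an offset, several independent consumers (say, a real-time dashboard and a batch loader into Hadoop) can read the same stream at their own pace.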

Data Storage

HBase: HBase is a distributed, column-oriented NoSQL database that runs on top of HDFS. It's well suited to storing large volumes of semi-structured data when applications need low-latency random reads and writes.
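
HBase keeps a table's rows sorted by row key, groups columns into column families, and stores each cell value with a timestamp. The toy in-memory model below illustrates that layout and why lookups and range scans by row key are cheap. It is a hypothetical sketch, not the HBase client API; the table, family, and column names are made up, and unlike HBase (which retains a configurable number of versions per column family) it keeps every version.

```python
import bisect
from collections import defaultdict
from typing import Optional

class ToyHBaseTable:
    """Rows sorted by row key; each cell maps (family, qualifier) -> {timestamp: value}."""

    def __init__(self) -> None:
        self.row_keys: list[str] = []  # kept sorted, mirroring HBase's row-key ordering
        self.rows: dict[str, dict[tuple[str, str], dict[int, str]]] = {}

    def put(self, row: str, family: str, qualifier: str, value: str, ts: int) -> None:
        if row not in self.rows:
            bisect.insort(self.row_keys, row)
            self.rows[row] = defaultdict(dict)
        # A write with a new timestamp adds a version instead of replacing the old one.
        self.rows[row][(family, qualifier)][ts] = value

    def get(self, row: str, family: str, qualifier: str) -> Optional[str]:
        """Return the newest version of one cell, or None if the cell does not exist."""
        versions = self.rows.get(row, {}).get((family, qualifier))
        return versions[max(versions)] if versions else None

    def scan(self, start: str, stop: str) -> list[str]:
        """Row keys in [start, stop), located by binary search over the sorted keys."""
        lo = bisect.bisect_left(self.row_keys, start)
        hi = bisect.bisect_left(self.row_keys, stop)
        return self.row_keys[lo:hi]

table = ToyHBaseTable()
table.put("user#001", "info", "name", "Ada", ts=1)
table.put("user#001", "info", "name", "Ada L.", ts=2)
# Rows are sparse: user#002 has a different set of columns than user#001.
table.put("user#002", "info", "email", "bob@example.com", ts=1)
print(table.get("user#001", "info", "name"))  # Ada L.
print(table.scan("user#000", "user#002"))     # ['user#001']
```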

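Apache Cassandra: Cassandra is a distributed NoSQL database that uses its own storage engine rather than HDFS but is often used alongside Hadoop. It's highly scalable and fault-tolerant, with no single point of failure, and is designed to handle massive amounts of data across multiple nodes.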
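
Cassandra spreads data by hashing each row's partition key onto a token ring. Each node owns a range of tokens, and a row is stored on the first node whose token is at or past the key's token, plus the next nodes clockwise until the replication factor is met (the behavior of the SimpleStrategy replication strategy). The sketch below models this with MD5 hashes and one token per node; both are simplifying assumptions, since current Cassandra defaults to the Murmur3 partitioner and gives each node several virtual-node tokens. The node names are hypothetical.

```python
import bisect
import hashlib

def token(value: str) -> int:
    """Hash a string onto the ring (MD5 here, as a stand-in for Cassandra's partitioner)."""
    return int.from_bytes(hashlib.md5(value.encode()).digest()[:8], "big")

class TokenRing:
    """Toy token ring with one token per node (real clusters use several virtual nodes each)."""

    def __init__(self, nodes: list[str]) -> None:
        self.ring = sorted((token(name), name) for name in nodes)
        self.tokens = [t for t, _ in self.ring]

    def replicas(self, partition_key: str, replication_factor: int = 3) -> list[str]:
        """Owner of the key's token, then the next nodes clockwise (SimpleStrategy-style)."""
        rf = min(replication_factor, len(self.ring))
        # The owner is the first node whose token is >= the key's token, wrapping around.
        start = bisect.bisect_left(self.tokens, token(partition_key)) % len(self.ring)
        return [self.ring[(start + i) % len(self.ring)][1] for i in range(rf)]

ring = TokenRing(["node-a", "node-b", "node-c", "node-d", "node-e"])
# Three distinct nodes; which ones depends on the hash values.
print(ring.replicas("customer:42"))
```

Because adding a node only takes over part of one neighbor's token range, growing the cluster moves a fraction of the data rather than reshuffling everything, which is a large part of why this design scales across many nodes.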

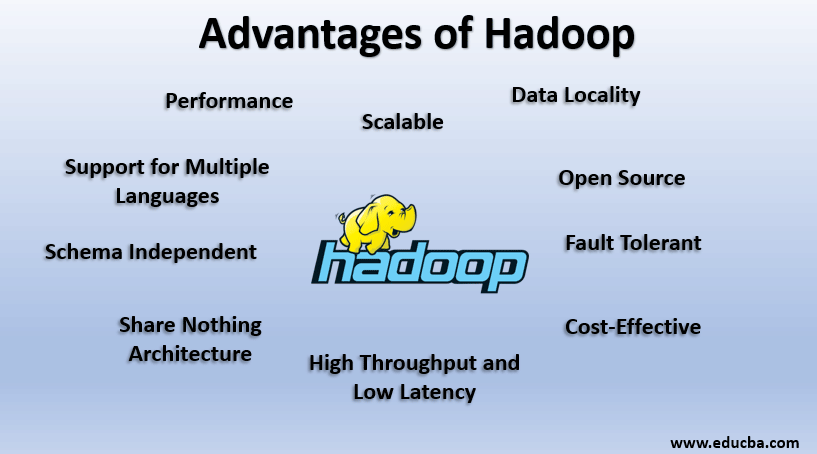

Advantages of the Hadoop Ecosystem

The Hadoop ecosystem offers three major advantages for Big Data work: efficient data processing, scalability, and cost-effectiveness. Together, they let businesses manage vast data volumes affordably.

Efficient Data Processing

Hadoop utilizes distributed computing, spreading tasks across a cluster of computers for speedy data processing.

With Hadoop, processing massive amounts of data becomes far faster than on a single machine, much like having numerous chefs working together in the kitchen.

Scalability

Hadoop scales out easily: to handle growing data volumes, you add more machines (nodes) to the cluster instead of replacing one server with a bigger one, so the cluster can grow with your data.

Whether you're dealing with gigabytes or petabytes, Hadoop can handle it. Adding nodes is like adding more lanes to a highway to carry more traffic.
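
Capacity planning for scale-out is mostly simple arithmetic: because HDFS stores every block several times, usable storage is roughly raw disk divided by the replication factor. The helper below is a back-of-the-envelope sketch that assumes the default replication factor of 3 and ignores space reserved for the operating system, logs, and intermediate job output; the node counts and disk sizes are made up for illustration.

```python
import math

def usable_tb(nodes: int, disk_tb_per_node: float, replication: int = 3) -> float:
    """Approximate usable HDFS capacity: raw disk divided by the replication factor."""
    return nodes * disk_tb_per_node / replication

def nodes_needed(data_tb: float, disk_tb_per_node: float, replication: int = 3) -> int:
    """Smallest node count whose usable capacity holds `data_tb`."""
    return math.ceil(data_tb * replication / disk_tb_per_node)

print(usable_tb(10, 12))      # 40.0 (TB usable from 120 TB of raw disk)
print(usable_tb(15, 12))      # 60.0 (after adding five more nodes)
print(nodes_needed(100, 12))  # 25
```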

Cost-Effectiveness

Hadoop runs on affordable commodity hardware and open-source software, reducing infrastructure costs.

Because you can grow a cluster a few nodes at a time, you pay only for the capacity you need, making Hadoop a cost-effective solution for businesses of any size.

Challenges of Implementing the Hadoop Ecosystem

Implementing the Hadoop ecosystem presents multifaceted challenges. From its intricate structure to the scarcity of skilled professionals and security considerations, navigating these hurdles demands careful planning and expertise.

Complexity

Hadoop can be daunting for beginners: its many interconnected components can feel like a vast labyrinth.

Managing and configuring various Hadoop ecosystem components requires careful attention and expertise.

Skill Gap

Implementing Hadoop often requires specialized skills that may not be readily available, creating a skills gap.

Finding professionals with experience in Hadoop administration, development, and maintenance can be challenging.

Security Concerns

Security is a major concern when implementing Hadoop, akin to safeguarding a fortress from potential intruders.

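Out of the box, Hadoop trusts the user name a client reports, so production clusters typically enable Kerberos authentication. Managing that, along with access controls, encryption, and compliance, requires thorough planning and vigilance.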

Frequently Asked Questions (FAQs)

What is the Hadoop Ecosystem, and how does it support Big Data?

The Hadoop Ecosystem is a suite of services that provide data storage, processing, and analysis for Big Data, enabling efficient handling of vast datasets across clusters of computers.

What are the core Hadoop Ecosystem components?

Core components in Hadoop include HDFS for storage, MapReduce for processing, and YARN for cluster resource management, facilitating scalable and distributed data handling.

How does Hadoop ensure data safety and reliability?

HDFS keeps data safe by replicating each block across multiple nodes (three copies by default), so a single hardware failure doesn't cause data loss and the data stays available.

What role does YARN play in the Hadoop Ecosystem?

YARN (Yet Another Resource Negotiator) manages resources and schedules tasks, allowing multiple data processing applications to run efficiently on the same Hadoop cluster.
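
In YARN, applications ask the ResourceManager for containers (slices of a node's memory and CPU), and the scheduler places them on NodeManagers that have room. The first-fit placement below is a deliberately simplified, hypothetical model of that bookkeeping, not YARN's API; YARN's real schedulers (such as the Capacity and Fair schedulers) also handle queues, priorities, and data locality, and the node sizes and container names here are invented.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class NodeManager:
    """Free resources on one worker node (a toy model, not YARN's API)."""
    name: str
    free_mb: int
    free_vcores: int

def schedule(nodes: list[NodeManager],
             requests: list[tuple[str, int, int]]) -> dict[str, Optional[str]]:
    """First-fit: put each (container_id, memory_mb, vcores) request on the first node with room."""
    placement: dict[str, Optional[str]] = {}
    for container_id, memory_mb, vcores in requests:
        placement[container_id] = None  # stays None if no node has room; the request would wait
        for node in nodes:
            if node.free_mb >= memory_mb and node.free_vcores >= vcores:
                node.free_mb -= memory_mb
                node.free_vcores -= vcores
                placement[container_id] = node.name
                break
    return placement

cluster = [NodeManager("worker1", 8192, 4), NodeManager("worker2", 8192, 4)]
# Containers from two applications (a MapReduce job and a Spark job) share one cluster.
requests = [("mr-map-1", 4096, 2), ("mr-map-2", 4096, 2),
            ("spark-exec-1", 6144, 2), ("spark-exec-2", 6144, 2)]
print(schedule(cluster, requests))
# {'mr-map-1': 'worker1', 'mr-map-2': 'worker1', 'spark-exec-1': 'worker2', 'spark-exec-2': None}
```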

How does the Hadoop Ecosystem handle real-time data processing?

The Hadoop Ecosystem integrates with tools like Apache Storm and Apache Flink for real-time data processing, enabling fast data analytics and processing without batch delays.