Introduction

Every team is building with AI. But not every team is getting results.

Thousands of businesses are experimenting with large language models, hoping they’ll deliver something game-changing. But generic output won’t cut it anymore. That’s where the problem begins.

The moment you ask an AI model to pull insights from your internal docs, support tickets, or product manuals, it stumbles. Why? Because it doesn’t know your data.

Enter RAG: Retrieval-Augmented Generation.

A RAG application combines the power of search with the intelligence of generation. It’s like giving your AI real memory: it retrieves the facts first, then answers with precision.

If you’re not using RAG, your competitors probably are. They’re building smarter search engines, sharper chatbots, and faster workflows, using RAG use cases that go far beyond theory.

But the good news? You’re not too late.

In this blog, you’ll get:

- 10 real-world RAG examples that are actually working

- Use cases across different industries

- A clear look at how these RAG applications are built and why they matter

No fluff. No vague ideas. Just sharp examples you can steal, adapt, or build from—right now.

What is RAG, Really?

If you’re using an AI model without RAG, you’re asking it to guess. And that’s the problem.

RAG stands for Retrieval-Augmented Generation.

It’s a method that helps AI models pull in relevant, accurate information just-in-time—so they can answer questions with facts, not guesses.

A RAG application makes your chatbot, assistant, or AI tool smarter by connecting it to live data before generating a response. That’s the core idea.

You don’t need to retrain the model. You don’t need to rewrite prompts. RAG gives you relevance from day one.

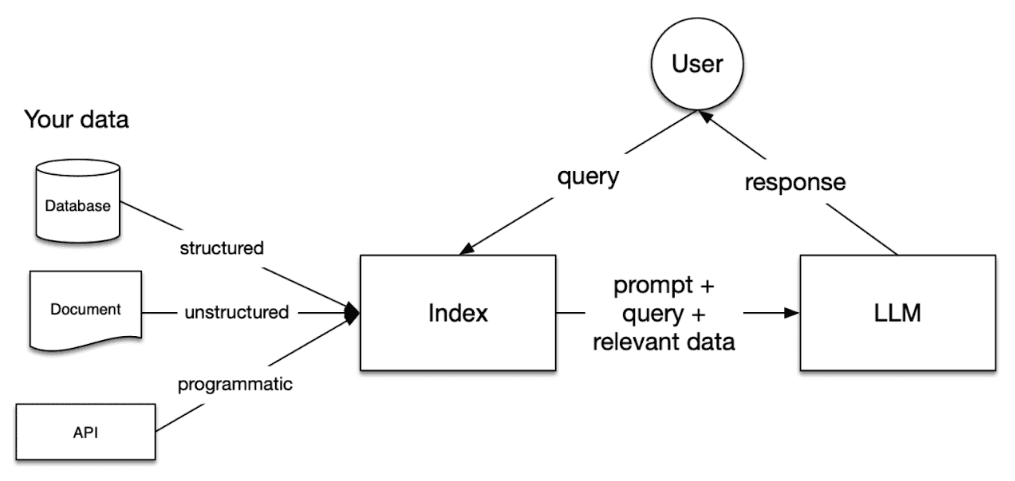

How a RAG Application Works

Here’s what happens inside a typical RAG application:

User → Question → Retriever → External Data → LLM (Generator) → Final Response

Let’s break it down:

- The user asks a question.

- A retriever searches internal or external content (PDFs, databases, websites).

- The most relevant passages are pulled in.

- That content is passed into the LLM.

- The model uses it to generate a fact-aware response.

That’s how the best rag examples deliver sharp, grounded answers—without training or manual tuning.
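The steps above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the word-overlap retriever, the `DOCS` list, and the `fake_llm` stand-in are all assumptions made for the example. A real system would use embeddings, a vector store, and an actual model call.

```python
import re
from collections import Counter

# Hypothetical in-memory knowledge base; real systems index PDFs, wikis, tickets.
DOCS = [
    "Refunds are processed within 5 business days of approval.",
    "Password resets are done from the account settings page.",
    "Invoices are emailed on the first day of each month.",
]

def tokenize(text):
    """Lowercase a string and split it into word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def score(question, doc):
    """Count how many question tokens also appear in the document."""
    q, d = Counter(tokenize(question)), Counter(tokenize(doc))
    return sum(min(q[t], d[t]) for t in q)

def retrieve(question, docs, k=1):
    """Return the k documents with the highest overlap score."""
    return sorted(docs, key=lambda doc: score(question, doc), reverse=True)[:k]

def build_prompt(question, passages):
    """Put retrieved passages ahead of the question so the model can ground its answer."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

def fake_llm(prompt):
    """Stand-in for a real model call (assumption): echoes the first context line."""
    return prompt.splitlines()[1].lstrip("- ")

question = "How long do refunds take?"
passages = retrieve(question, DOCS)
answer = fake_llm(build_prompt(question, passages))
print(answer)
```

The point of the sketch is the order of operations: retrieve first, then build the prompt, then generate. Swapping the toy retriever for a vector search changes the quality of `passages`, not the shape of the pipeline.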

Why RAG Beats Fine-Tuning and Prompt Engineering

Prompt engineering is hit or miss. Fine-tuning takes time, money, and lots of labeled data.

RAG skips both.

It gives your AI model live access to the right info. No need to bake everything into the model. No need to guess what prompt trick will work.

If your product, chatbot, or assistant depends on fast, factual answers, a rag use case is your most reliable play.

RAG is faster to build, easier to maintain, and better at scaling across topics or use cases.

What RAG Looks Like in the Real World

The best way to understand a RAG application is to see what it’s already solving. These are real tools used in real companies—cutting costs, reducing risks, and unlocking faster answers.

Fintech: Real-Time Policy Answers

Confusion around compliance slows everything down. Customers wait. Agents dig. Mistakes cost money.

A fintech platform fixed that with a rag application built into their support workflow. When users ask questions—like KYC rules or account limits—the system pulls answers straight from updated policy docs and regulatory feeds.

Result: Answers are instant. Agents stop guessing. Customers get trusted info on the spot.

This rag use case reduces risk and gives teams time back. It’s not just faster—it’s more accurate.

Healthcare: Smarter Clinical Support

Doctors don’t have time to sift through medical journals when making critical decisions.

One hospital network deployed a rag application inside its EHR. Clinicians now get summaries of the latest research, filtered by condition, age group, and drug safety—in real time.

Result: Diagnosis happens faster. Complex cases get clarity. Confidence goes up.

This isn’t about replacing doctors. It’s about making their decisions sharper—with a rag use case built for speed and safety.

Enterprise Support: Internal Help That Works

Internal knowledge lives everywhere—Notion, SharePoint, email threads. And search never works when you need it most.

A growing tech company launched a rag application inside Slack. It pulls from thousands of internal docs—onboarding, policies, API manuals—and gives instant, usable answers.

Result: Duplicate questions dropped. Onboarding got quicker. Support teams reclaimed their day.

This rag example proves you don’t need more documentation. You just need smarter retrieval.

These are all live rag use cases—deployed in real products. This isn't theory. This is what works.


Why RAG Matters Now

Everyone’s using AI. But very few are using it well.

AI Models Are Powerful, But Not Personal

Language models are trained on massive public datasets. But they don’t know your product manuals, customer chats, or internal wikis.

So when they’re asked something specific, they guess.

That’s the gap. And it shows up in search engines that return junk, chatbots that can’t help, and assistants that sound confident but give wrong answers.

Tuning Models Doesn’t Scale

Fine-tuning a model takes weeks. It costs money. And the moment your content changes, it’s outdated again.

Prompt tricks? They’re brittle. You can’t build serious products on guesswork and workarounds.

This is why more teams are moving to RAG. A rag application doesn’t need a new model or a rewrite. It connects your data to the AI—so answers stay useful and up-to-date.
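To make the "stays up-to-date" point concrete, here is a small sketch. The `DocIndex` class, the document id, and the limit values are hypothetical and exist only for this example. When a policy changes, you re-index one document and the next answer reflects it; the model itself is untouched.

```python
class DocIndex:
    """Toy in-memory index keyed by document id (hypothetical, for illustration)."""

    def __init__(self):
        self._docs = {}

    def upsert(self, doc_id, text):
        """Add a document, or overwrite the stored version if the id already exists."""
        self._docs[doc_id] = text

    def search(self, term):
        """Return stored texts that contain the term, case-insensitively."""
        term = term.lower()
        return [t for t in self._docs.values() if term in t.lower()]

index = DocIndex()
index.upsert("limits", "Daily transfer limit is 5,000.")
before = index.search("transfer limit")

# The policy changes: re-index one document, no model retraining involved.
index.upsert("limits", "Daily transfer limit is 10,000.")
after = index.search("transfer limit")

print(before, after)
```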

This is Where Teams Start Winning

RAG searches your actual content—then feeds that into the model in real time.

The model responds with context, not guesses. That changes everything.

Chatbots can answer based on internal policies. Virtual assistants pull from the latest docs. Search tools actually understand how your users speak.

Teams using rag use cases are launching faster, scaling smarter, and spending less time maintaining fragile systems.

They're not building AI experiments. They're building products that work.

Real RAG Applications Already Live

These aren’t prototypes. These are working RAG examples that prove what’s possible.

And this is just the start. Let’s look at some of these RAG applications.

The 10 Most Useful RAG Applications & Use Cases

Let’s start with the most popular and practical RAG use case—customer support chatbots that actually know what they’re talking about.

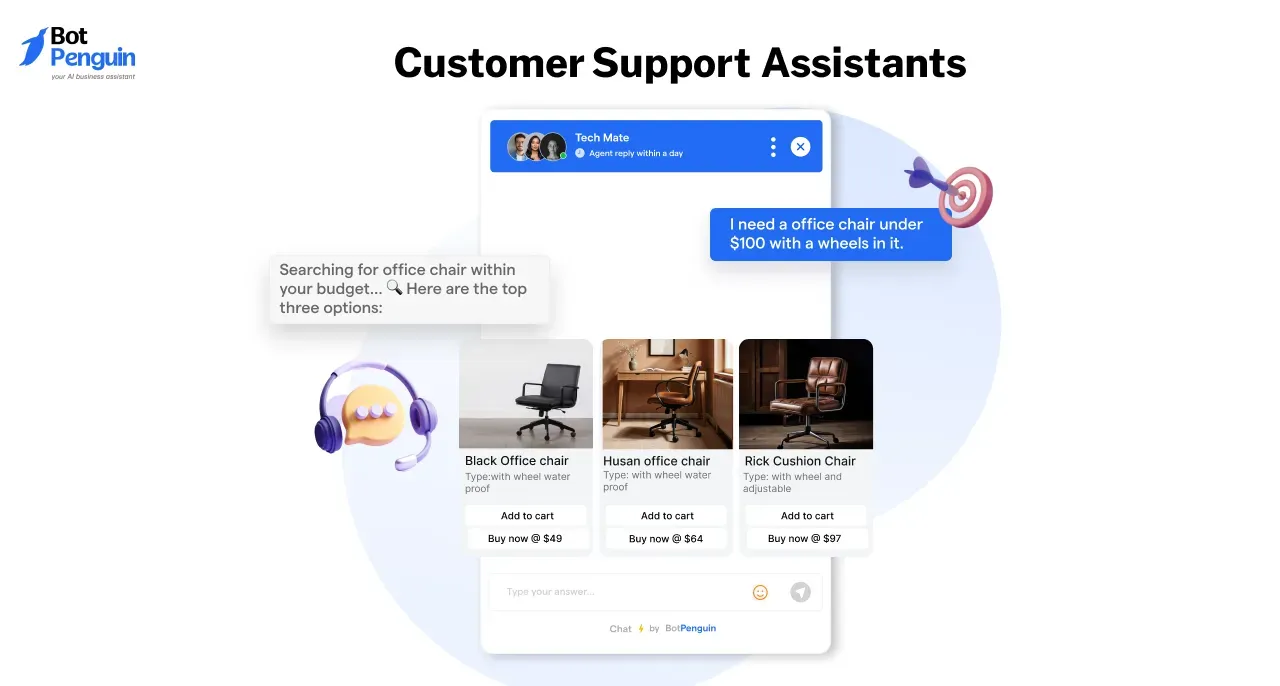

1. Customer Support Assistants

This is a rag application designed to power smarter customer support chatbots.

Instead of relying on pre-written replies or guessing, the bot pulls answers directly from your real documents—like help center articles, user guides, or past support tickets.

It uses that live info to respond more accurately and clearly to each question.

Why RAG is Useful Here

Most chatbots today sound generic or just don’t help.

They can’t access your latest content, and they struggle with anything outside their scripts. That leads to frustrated users and extra work for your support team.

RAG fixes that by connecting your chatbot to your actual knowledge base.

It helps the bot respond with facts—not guesses—so users get helpful answers faster. It also means your team handles fewer repetitive tickets.

This is one of the most effective rag use cases in the real world.

Tools / Tech Stack for the Nerds

- Vector store: Pinecone, Weaviate, or Elasticsearch

- LLM: OpenAI GPT-4, Claude, or Cohere

- Framework: LangChain or LlamaIndex

- Support system integrations: BotPenguin

- UI: Web chat, live chat widget, WhatsApp bot

Real-World Example

A software company replaces its rule-based chatbot with a rag application connected to its support docs.

When users ask questions about billing, setup, or errors, the bot searches the docs instantly and delivers accurate answers—right in chat.

Result: Support ticket volume drops by 40% in the first month. Customers stop waiting for agents. CSAT scores go up.

2. Internal Knowledge Base Search (for Enterprises)

This rag application turns your company’s internal content into an intelligent search engine.

Instead of relying on clunky keyword searches, employees can ask real questions—and get real answers—based on internal documents, policies, meeting notes, or wikis.

The system finds the most relevant content behind the scenes, feeds it to the model, and gives a direct response.

Why RAG is Useful Here

Finding answers inside a company is a mess.

Teams store info across dozens of tools—Notion, Confluence, SharePoint, Google Drive. Search rarely works. People waste hours looking for simple things.

RAG changes that.

It connects to your actual content and retrieves the right snippets in real time. Whether someone needs HR policy details, onboarding steps, or old project decisions—it’s all instantly accessible.

This kind of rag use case improves internal efficiency, reduces repetitive asks, and gets people what they need without Slack pings or back-and-forth.

Tools / Tech Stack for the Nerds

- Document sources: Google Drive, Confluence, Notion

- Retrieval: Elasticsearch, Weaviate, Qdrant

- LLMs: GPT-4, Claude, Mistral

- Frameworks: LangChain, LlamaIndex, Haystack

- Frontend layer: Chat UI, Slackbot, internal portal integrations

- UI: Slack app, MS Teams bot, intranet chat portal

Real-World Example

A mid-size consulting firm built a private Slack chatbot using RAG.

The bot connected to over 3,000 internal documents—training manuals, project templates, compliance checklists. Whenever a team member had a question, they asked the bot.

It searched, found the best document, and answered in seconds.

Within the first two weeks, the company saw a 50% drop in duplicate internal queries—and onboarding time for new hires dropped by 30%.

That’s the impact of a well-built rag application that actually knows your company.

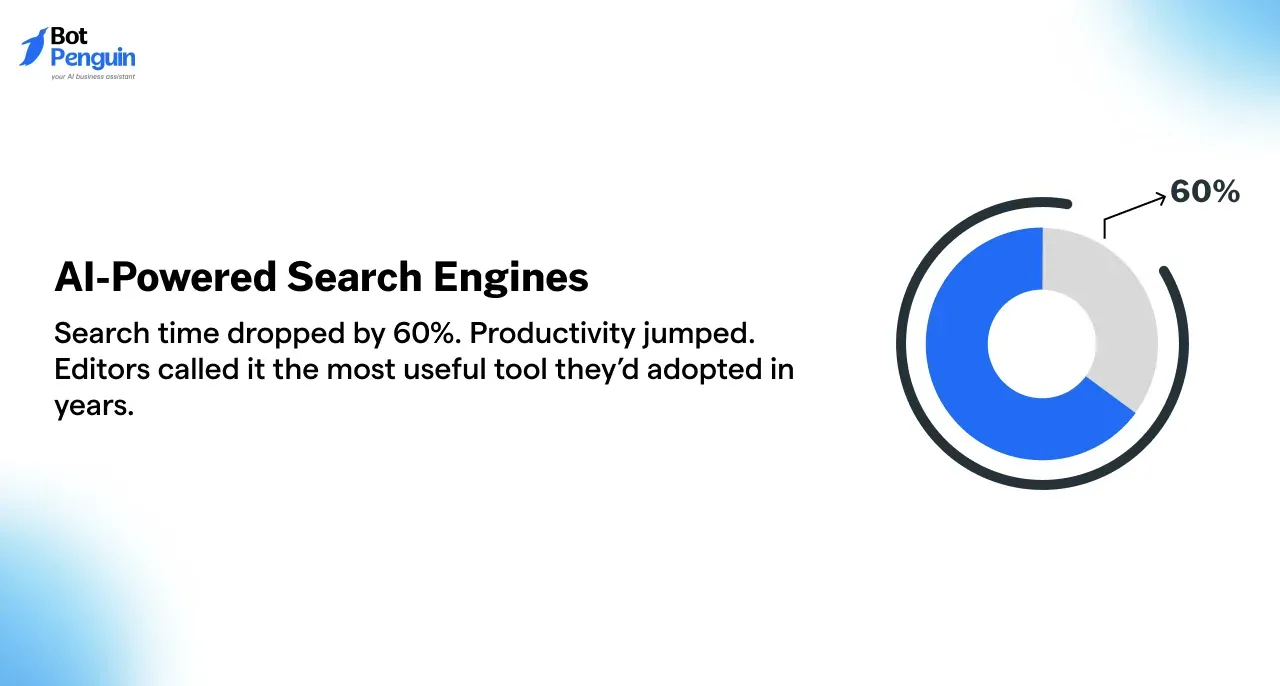

3. AI-Powered Search Engines

This rag application turns a simple search bar into something way smarter.

Instead of showing a list of links, it retrieves the most relevant information and generates a direct, helpful answer. Whether it’s for a product catalog, a documentation site, or a media archive—RAG adds intelligence where keyword search fails.

It doesn’t just look for words. It understands meaning.
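A rough sketch of what "understands meaning" looks like in practice: documents and queries are compared as vectors instead of strings. The three-dimensional vectors below are hand-made assumptions for illustration; a real system would get them from an embedding model.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

# Hand-made "embeddings" (assumption); real ones come from an embedding model.
EMBEDDINGS = {
    "Wind and solar coverage in Europe": [0.9, 0.1, 0.0],
    "Quarterly football transfer roundup": [0.0, 0.2, 0.9],
}
query_text = "renewable energy"
query_vec = [0.8, 0.2, 0.1]  # hypothetical embedding of the query

# Keyword search: looks for the literal phrase in each title.
keyword_hits = [t for t in EMBEDDINGS if query_text in t.lower()]

# Vector search: picks the title whose vector points in the most similar direction.
best = max(EMBEDDINGS, key=lambda t: cosine(query_vec, EMBEDDINGS[t]))

print(keyword_hits, best)
```

The keyword search finds nothing because no title contains the phrase "renewable energy", while the vector comparison still surfaces the wind and solar article.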

Why RAG is Useful Here

Most search engines struggle with how people actually ask questions.

They rely on exact matches, outdated indexing, or confusing filters. Users bounce because they can’t find what they need fast enough.

RAG solves this by blending search and generation. It pulls context-aware content from your database or content library, then uses a language model to create a clear, natural response.

That’s what makes this rag use case so powerful—especially for companies dealing with large, messy, or unstructured data.

It’s not just search. It’s guided answers that feel human.

Tools / Tech Stack for the Nerds

- Content sources: Website content, product catalogs, research databases

- Vector DBs: Pinecone, Vespa, Weaviate

- LLMs: OpenAI, Claude, Mistral

- Frameworks: LangChain, LlamaIndex

- Frontend: React, Next.js, chatbot wrapper (if conversational)

- UI: Search bar interface, chatbot overlay, autocomplete dropdown

Real-World Example

A media company used RAG to upgrade its internal content search.

Journalists were wasting time finding archived footage, articles, or data. The new rag application connected to their asset library, allowing them to ask natural questions like “When did we last cover renewable energy in Europe?”

The system searched the archive, pulled exact clips and summaries, and gave the answer instantly.

Search time dropped by 60%. Productivity jumped. Editors called it the most useful tool they’d adopted in years.

4. Medical Diagnosis Assistants

This rag application supports healthcare professionals by giving quick, context-rich answers during diagnosis.

It pulls up-to-date clinical data, research papers, and patient history to help doctors make more informed decisions—without digging through files or outdated databases.

It’s not about replacing doctors. It’s about giving them better information, faster.

Why RAG is Useful Here

Medical decisions depend on accuracy. But most AI tools are trained on static, outdated datasets—or can’t access patient-specific info at all.

This creates risk. Wrong data means wrong decisions.

A RAG-powered assistant changes that. It can search real-time medical content, patient records (within compliance), and trusted databases like PubMed or UMLS. Then it summarizes only what matters.

That makes this one of the most high-impact rag use cases in clinical practice—improving speed, safety, and confidence during diagnosis.

Tools / Tech Stack for the Nerds

- Data Sources: EHR systems, PubMed, WHO databases, UMLS

- Vector Stores: Vespa, Milvus, Weaviate

- LLMs: Med-PaLM, GPT-4, Claude

- Frameworks: LangChain, LlamaIndex, custom secure wrappers

- Compliance Layer: HIPAA filters, audit trails, access logging

- UI: Clinical dashboard, tablet interface, EHR plug-in
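The compliance layer in that list is worth a quick illustration. The sketch below filters records by role before anything reaches the model and logs every access. The record shape, the `allowed_roles` field, and the user names are assumptions invented for this example, and this alone is nowhere near real HIPAA compliance.

```python
from datetime import datetime, timezone

# Hypothetical records; "allowed_roles" is an assumed metadata field.
RECORDS = [
    {"id": "r1", "text": "Journal summary: drug-resistant TB regimens.",
     "allowed_roles": {"doctor", "nurse"}},
    {"id": "r2", "text": "Patient history and lab results.",
     "allowed_roles": {"doctor"}},
]
AUDIT_LOG = []

def retrieve_for(user, role, records):
    """Return only the records this role may see, and log the access."""
    visible = [r for r in records if role in r["allowed_roles"]]
    AUDIT_LOG.append({
        "user": user,
        "role": role,
        "returned": [r["id"] for r in visible],
        "at": datetime.now(timezone.utc).isoformat(),
    })
    return visible

nurse_view = retrieve_for("n.lee", "nurse", RECORDS)
doctor_view = retrieve_for("dr.kim", "doctor", RECORDS)
print([r["id"] for r in nurse_view], [r["id"] for r in doctor_view])
```

Filtering before retrieval, instead of asking the model to withhold things, means restricted text never enters the prompt in the first place.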

Real-World Example

A hospital network in Europe tested a rag application integrated with their diagnostic system.

Doctors could ask questions like, “What are recent treatment options for drug-resistant TB in elderly patients?” The assistant pulled the latest journal findings, filtered by age relevance, and generated a short, clear summary.

In early trials, this reduced time-to-decision by 28% and improved diagnostic confidence in complex cases.

Doctors called it a second brain—always up-to-date.

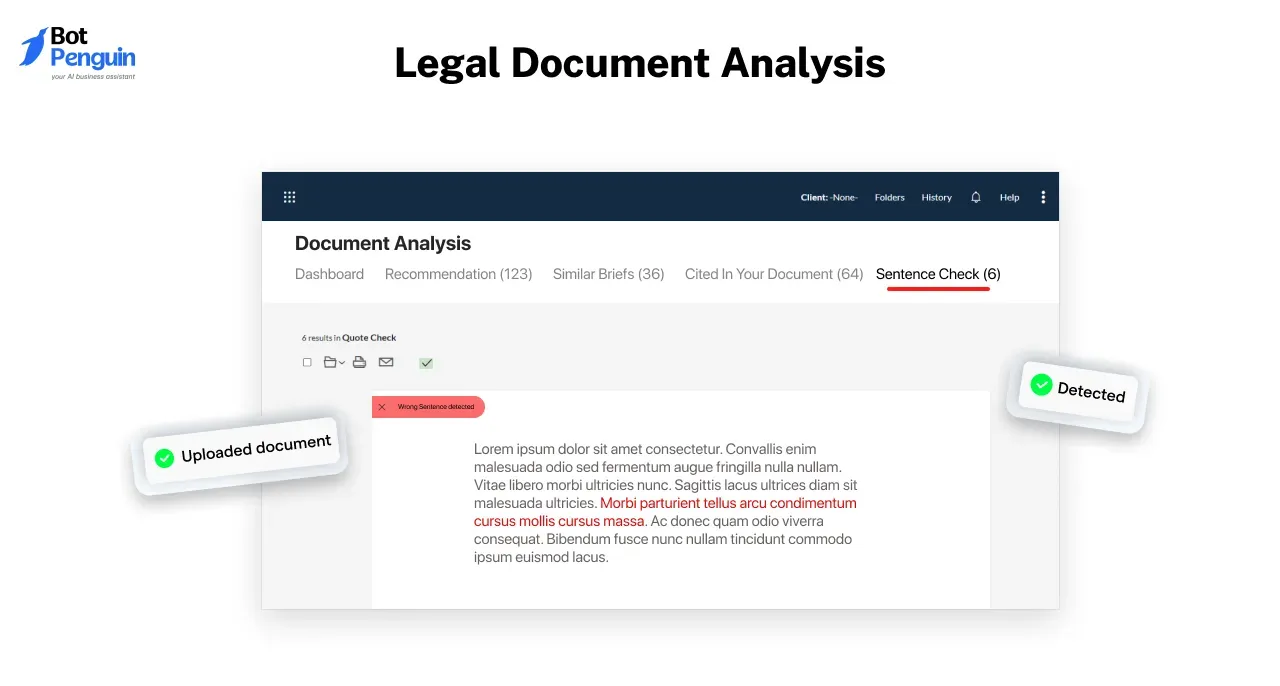

5. Legal Document Analysis

This rag application helps legal professionals search, review, and summarize complex legal documents—without spending hours combing through PDFs or databases.

It retrieves relevant clauses, cases, or regulations and uses an LLM to explain them clearly, right inside a chat or search tool.

Think of it as a legal assistant that never misses a detail and doesn’t bill by the hour.

Why RAG is Useful Here

Legal teams deal with huge volumes of dense, repetitive text. Traditional search tools fall short. They can’t understand intent or nuance—and they often miss key context.

RAG changes that.

By connecting to your internal contracts, regulatory libraries, or case archives, it retrieves exactly what matters—and breaks it down in plain language.

This rag use case helps law firms, compliance teams, and in-house legal ops save time, reduce risk, and work smarter.

No more Ctrl+F. No more digging through folders. Just answers.

Tools / Tech Stack for the Nerds

- Document Sources: Contract databases, SEC filings, GDPR/CCPA docs

- Vector DBs: Qdrant, Vespa, Milvus

- LLMs: GPT-4, Claude 2, LegalBERT

- Frameworks: LangChain, Haystack, LlamaIndex

- Compliance Layer: Role-based access, audit logging, redaction filters

- UI: Legal dashboard, contract explorer, sidebar chat in DMS
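As a sketch of what a redaction filter might do, the snippet below masks email addresses and SSN-style numbers in a retrieved clause before it is handed to a model. The two patterns and the sample clause are illustrative assumptions; a production filter would need a vetted and much broader ruleset.

```python
import re

# Illustrative patterns only (assumption); not a complete PII ruleset.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}

def redact(text):
    """Replace every match of each pattern with a bracketed label."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text

clause = "Notices go to jane.doe@example.com; taxpayer ID 123-45-6789."
safe_clause = redact(clause)
print(safe_clause)
```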

Real-World Example

A compliance team at a global insurance firm deployed a RAG application that indexed thousands of contracts, claims, and regulatory guidelines.

Staff could ask things like “What clause covers early termination?” or “Are there GDPR risks in this agreement?” The assistant pulled the exact section and translated the legal language into a clear explanation.

In just six weeks, review time dropped by 35%, and costly escalations decreased.

Legal didn’t just move faster—it made fewer mistakes.

6. Education & Tutoring Platforms

This rag application powers smart tutoring assistants that give students fast, accurate help across subjects—pulled directly from trusted educational materials.

Instead of copying generic answers from the internet, the AI retrieves content from approved textbooks, lesson plans, or class notes. Then it explains it clearly in the student’s own language.

It’s like having a personal tutor that actually knows your syllabus.

Why RAG is Useful Here

Every student learns differently. Most search tools or AI tutors give broad or oversimplified responses—and often pull info from unreliable sources.

RAG changes that.

It connects to course-specific content, worksheets, or instructor notes and delivers custom explanations, step-by-step breakdowns, and real examples.

This makes it one of the most impactful rag use cases in edtech—helping learners go deeper, faster, and with fewer distractions.

Teachers save time. Students gain confidence. Learning becomes more personal.

Tools / Tech Stack for the Nerds

- Data Sources: PDFs, e-learning modules, LMS exports, lecture transcripts

- Retrieval: Pinecone, Weaviate, Vespa

- LLMs: GPT-4, Claude, Mistral

- Frameworks: LangChain, LlamaIndex, Haystack

- Delivery: Chat-based UI, web widgets, mobile apps

- UI: In-app tutor chat, lesson chatbot, LMS widget

Real-World Example

An online tutoring platform built a rag application that connected to its math and science content.

When students typed questions like “How do I solve this type of quadratic?” or “Explain Newton’s Third Law,” the assistant pulled relevant examples from the actual course material and broke it down step-by-step.

Within a month, help request volume dropped by 50%, and time spent per student in live sessions dropped—because they were already coming prepared.

This wasn’t AI for show. It actually taught.

7. Codebase Navigation Tools

This rag application helps developers ask natural questions about large, messy codebases—and get precise answers instantly.

Instead of digging through dozens of files or Stack Overflow threads, they can simply ask:

- “What does this function do?”

- “Where is the auth logic handled?”

- “What are the dependencies of this module?”

The system retrieves code chunks, context, and explanations using RAG.

Why RAG is Useful Here

Big codebases slow everyone down. New devs struggle to onboard. Seniors waste time answering the same questions. Docs are outdated—or missing entirely.

That’s where RAG wins.

It connects your AI assistant to your live codebase, commits, and documentation. When a dev asks something, the system pulls the right snippet and explains it in plain language.

This rag use case reduces cognitive load, speeds up onboarding, and gives engineers more time to actually build.
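One way to prepare a codebase for retrieval is to split it into one chunk per function, so the retriever returns whole, readable units. Here is a minimal sketch using Python's standard `ast` module. The sample source, the `function_chunks` and `find` helpers, and the word-matching lookup are assumptions for illustration; real tools typically embed each chunk instead.

```python
import ast

# Hypothetical source file contents; the functions it calls are never executed.
SOURCE = '''
def login(user, password):
    """Check credentials and start a session."""
    return check_password(user, password)

def charge(card, amount):
    """Bill a card through the payment gateway."""
    return gateway.charge(card, amount)
'''

def function_chunks(source):
    """Split Python source into one chunk per top-level function."""
    chunks = []
    for node in ast.parse(source).body:
        if isinstance(node, ast.FunctionDef):
            chunks.append({
                "name": node.name,
                "doc": ast.get_docstring(node) or "",
                "code": ast.get_source_segment(source, node),
            })
    return chunks

def find(question, chunks):
    """Return names of chunks whose name or docstring shares a word with the question."""
    words = set(question.lower().replace("?", "").split())
    hits = []
    for c in chunks:
        searchable = set((c["name"] + " " + c["doc"]).lower().replace(".", "").split())
        if words & searchable:
            hits.append(c["name"])
    return hits

chunks = function_chunks(SOURCE)
matches = find("Where are credentials checked?", chunks)
print(matches)
```

Chunking on function boundaries keeps each retrieved snippet self-explanatory, which matters more for code than for prose.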

Tools / Tech Stack for the Nerds

- Data Sources: Git repos, markdown docs, code comments, READMEs

- Retrieval: Qdrant, Weaviate, Elasticsearch

- LLMs: GPT-4, Claude 2, CodeLlama

- Frameworks: LlamaIndex, LangChain, GraphRAG (for file trees)

- UI: IDE chat (VS Code), developer console, Git UI overlay

Real-World Example

An AI-first startup added a RAG-powered chatbot inside VS Code.

The assistant indexed their entire monorepo and docs. Devs could ask it anything, from “How does user auth work?” to “Which services touch the billing flow?”

It pulled relevant functions, files, and explanations in seconds.

Result: onboarding time dropped 40%, senior dev interruptions fell by half, and weekend Slack stayed quiet.

That’s the kind of rag application every engineering team wants—and now, can actually build.

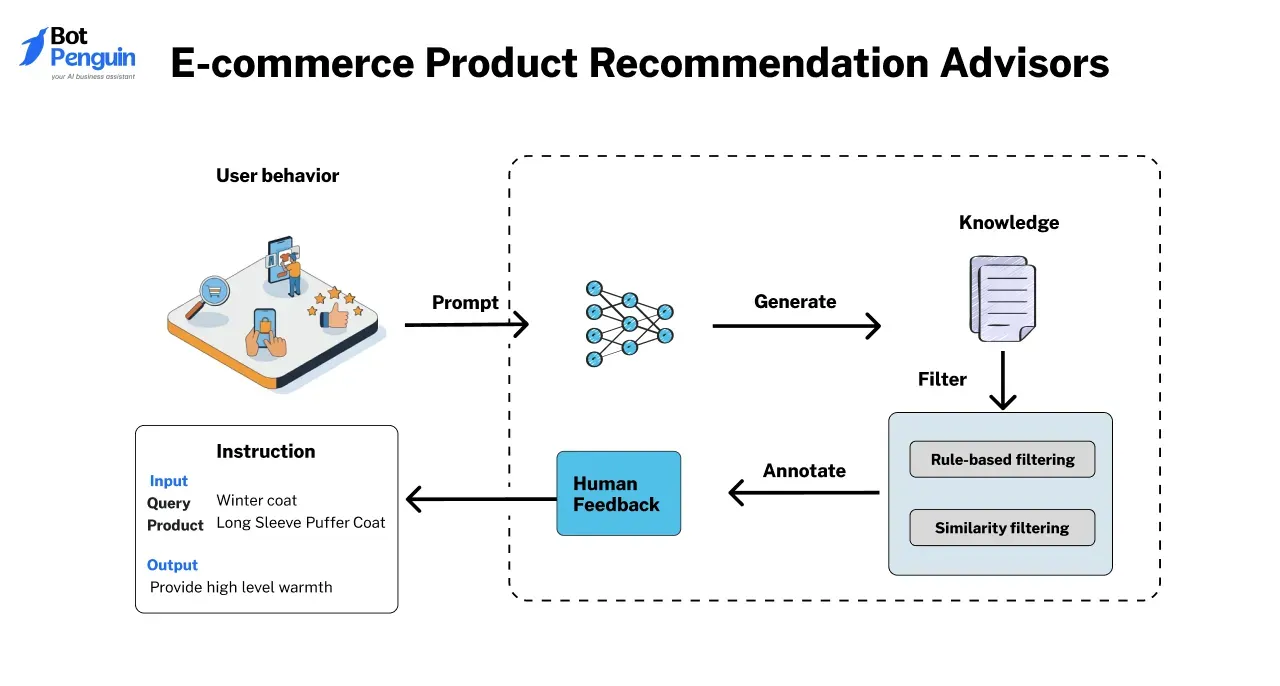

8. E-commerce Product Recommendation Advisors

This rag application helps shoppers find the right product faster—by turning a generic search box into a smart, conversational buying assistant.

Instead of forcing users to filter and scroll, it lets them describe what they want in plain language. The system then retrieves the most relevant options based on product data, reviews, and preferences—and explains why those options fit.

It’s like chatting with a sales rep who knows the entire catalog inside out.

Why RAG is Useful Here

Traditional e-commerce search is broken. It's built around keywords, not needs.

Shoppers who type "best running shoes for flat feet under 100" usually get generic results—or worse, nothing useful at all.

This is where RAG thrives.

It takes the shopper’s intent, searches structured and unstructured data (like reviews, specs, FAQs), and uses a language model to deliver personalized recommendations.

That makes this one of the most commercially valuable rag use cases today—especially for DTC brands, marketplaces, or retailers with deep catalogs.

It increases conversions, lowers bounce rates, and creates a better buying experience.
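Here is a small sketch of how structured and unstructured data can work together for the flat-feet query above. The catalog rows, product names, prices, and the "under N" budget parser are all made up for the example. The structured filter handles price; simple word overlap stands in for real semantic ranking.

```python
import re

# Hypothetical catalog rows; names, prices, and field names are assumptions.
CATALOG = [
    {"name": "StrideFlex", "price": 89, "text": "Running shoe with arch support for flat feet."},
    {"name": "AeroMax", "price": 140, "text": "Premium running shoe, great for flat feet."},
    {"name": "TrailBoot", "price": 75, "text": "Waterproof hiking boot."},
]

def parse_budget(query):
    """Pull a price ceiling out of phrases like 'under 100'; None if absent."""
    m = re.search(r"under\s+\$?(\d+)", query.lower())
    return int(m.group(1)) if m else None

def words_of(text):
    """Lowercase alphabetic tokens of a string, as a set."""
    return set(re.findall(r"[a-z]+", text.lower()))

def recommend(query, catalog):
    """Filter by budget (structured), then rank by word overlap (unstructured)."""
    budget = parse_budget(query)
    query_words = words_of(query)
    candidates = [p for p in catalog if budget is None or p["price"] < budget]
    scored = [(len(query_words & words_of(p["text"])), p["name"]) for p in candidates]
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    return [name for overlap, name in ranked if overlap > 0]

picks = recommend("best running shoes for flat feet under 100", CATALOG)
print(picks)
```

AeroMax matches the description just as well but is filtered out on price, which is exactly the kind of constraint plain keyword search tends to ignore.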

Tools / Tech Stack for the Nerds

- Data Inputs: Product descriptions, reviews, specs, customer Q&A

- Vector DBs: Pinecone, Weaviate, Qdrant

- LLMs: GPT-4, Claude, Mistral

- Frameworks: LangChain, LlamaIndex

- UI: On-site chat widgets, guided recommendation flows, voice-enabled assistants

Real-World Example

A fashion marketplace deployed a RAG application that retrieves from product tags, fit guides, and user reviews.

When shoppers asked questions like “Which jacket works for layering in cold, rainy cities?” the assistant retrieved the right match—along with reasoning pulled from fabric specs, customer reviews, and sizing notes.

The result? 22% more time spent on-site, a 16% boost in conversion for first-time users, and fewer returns.

That’s how you turn product data into buying confidence.


9. News and Research Summarizers

This rag application helps users cut through information overload by summarizing news, research papers, and reports into digestible insights.

Instead of relying on static summaries or generic recaps, it pulls content from trusted sources in real time—then turns it into fast, focused, human-readable answers.

It’s like having a personal analyst, researcher, and editor in one.

Why RAG is Useful Here

People don’t have time to read everything. And most summaries miss context—or skip what actually matters.

That’s the gap.

With RAG, the assistant retrieves the most relevant paragraphs, quotes, or charts—then explains the highlights clearly. It adapts based on what the user asks, not just what’s in the feed.

This rag use case is especially valuable for journalists, researchers, executives, and knowledge workers trying to stay current without drowning in tabs.

Less skimming. More knowing.
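For news, relevance alone is not enough; freshness matters too. A simple way to sketch this is to multiply a relevance score by a recency decay. The headlines, the fixed `NOW` clock, and the 24-hour half-life are assumptions chosen for the example, not recommended settings.

```python
from datetime import datetime, timedelta

NOW = datetime(2024, 1, 10, 12, 0)  # fixed clock (assumption) so the example is repeatable

# Hypothetical headlines with publication times relative to NOW.
ARTICLES = [
    {"title": "Fed holds rates steady", "published": NOW - timedelta(hours=2)},
    {"title": "Fed signals rates path for next year", "published": NOW - timedelta(days=30)},
    {"title": "Energy sector rallies", "published": NOW - timedelta(hours=1)},
]

def score(query, article, half_life_hours=24.0):
    """Word overlap with the title, halved for every `half_life_hours` of age."""
    overlap = len(set(query.lower().split()) & set(article["title"].lower().split()))
    age_hours = (NOW - article["published"]).total_seconds() / 3600
    return overlap * 0.5 ** (age_hours / half_life_hours)

def top_article(query, articles):
    """Return the title with the best combined relevance and recency score."""
    return max(articles, key=lambda a: score(query, a))["title"]

latest = top_article("fed rates update", ARTICLES)
print(latest)
```

Both Fed headlines match the query equally well on words, but the month-old one decays to almost nothing, so the fresh one wins.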

Tools / Tech Stack for the Nerds

- Content Sources: RSS feeds, academic journals, press releases, company blogs

- Vector Search: Pinecone, Vespa, Qdrant

- LLMs: GPT-4, Claude, Mistral

- Frameworks: LangChain, LlamaIndex

- Delivery: Chat interface, Slack digest, email summaries, browser extension

- UI: Research dashboard, email bot, Slack app

Real-World Example

A fintech company launched a RAG-powered assistant to track breaking news across global markets.

Their team could ask, “What happened in the latest Fed update?” or “Summarize today’s energy sector headlines.” The assistant pulled verified news articles and generated clear summaries—updated by the minute.

It saved their analysts over 6 hours per week and gave leadership faster context for decision-making.

That’s how the right rag application turns information into action.

10. Conversational Agents for CRM or ERP Systems

This rag application connects to your CRM or ERP system and powers a chatbot that can actually answer business-critical questions.

Instead of navigating through endless dashboards and filters, users can ask:

- “What’s the status of the Acme Corp invoice?”

- “Who’s our top-performing sales rep this quarter?”

- “Did we close that deal in Q2?”

The assistant fetches and explains the answer instantly—right inside chat.

Why RAG is Useful Here

CRMs and ERPs are packed with data—but terrible at helping humans use it.

You have to know where to click, what to filter, or which tab hides the number you're looking for. That’s not just annoying—it slows decisions.

RAG removes that friction.

It understands the question, pulls from structured records and unstructured notes, and generates a clear answer. Fast.

This rag use case is a game changer for sales teams, account managers, finance ops, and support—where fast answers create real results.
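For structured records, the retrieval step can be a plain database query whose rows are turned into text for the model. The sketch below uses an in-memory SQLite table. The `deals` schema, the sample rows, and the helper names are assumptions for illustration, not how any particular CRM exposes its data.

```python
import sqlite3

# In-memory table standing in for CRM data (hypothetical schema and rows).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE deals (account TEXT, amount INTEGER, stage TEXT, note TEXT)")
conn.executemany(
    "INSERT INTO deals VALUES (?, ?, ?, ?)",
    [
        ("Acme Corp", 25000, "open", "Waiting on legal review."),
        ("XYZ Ltd", 8000, "open", "Renewal call booked."),
        ("Globex", 40000, "closed", "Signed in Q2."),
    ],
)

def open_deals_over(conn, minimum):
    """Fetch open deals above a threshold, largest first."""
    return conn.execute(
        "SELECT account, amount, note FROM deals "
        "WHERE stage = 'open' AND amount > ? ORDER BY amount DESC",
        (minimum,),
    ).fetchall()

def to_context(rows):
    """Flatten rows into plain text a language model can read as context."""
    return "\n".join(f"{account}: {amount} open. Note: {note}" for account, amount, note in rows)

context = to_context(open_deals_over(conn, 10000))
print(context)
```

The model never queries the database directly here. It only sees the text in `context`, which keeps the numbers exact and the access path auditable.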

Tools / Tech Stack for the Nerds

- Data Sources: Salesforce, HubSpot, Zoho, SAP, Oracle

- Retrieval: Weaviate, Elasticsearch, Qdrant

- LLMs: GPT-4, Claude 2, Mistral

- Frameworks: LangChain, LlamaIndex, private API connectors

- UI: Slackbots, Microsoft Teams integrations, CRM sidebar widgets

Real-World Example

A B2B software company integrated a rag application into its internal CRM.

Instead of switching tabs or pinging RevOps, sales reps just asked the bot things like “Any updates on the pending renewal from XYZ?” or “Show me open deals over 10k.”

The bot responded with insights pulled from notes, activity logs, and pipeline data—summarized in seconds.

In 3 weeks, rep productivity went up, lead follow-up time dropped, and execs finally had a real-time view—without dashboards.

That’s what happens when a rag application makes your systems actually talk back.


How to Choose the Right RAG Use Case for Your Needs

Not every RAG solution fits every business. Here’s how to choose the rag application that actually solves your problem—without wasting time or budget.

Start With the Pain Point, Not the Technology

Before choosing any rag use case, get clear on the core frustration you're solving.

- Is your team overwhelmed by support tickets?

- Are your sales reps struggling to find CRM data?

- Is knowledge trapped across folders no one reads?

RAG works best when there’s a clear signal: high volume, repetitive questions, or slow access to information. That’s your cue.

Match RAG Strengths to Your Workflow

A good rag application isn’t just cool tech—it fits where your users already are.

- If your users work in Slack or Teams, build there.

- If they search internal docs, connect the RAG layer to those sources.

- If you're customer-facing, plug it into your chatbot or site search.

Use RAG to improve workflows, not add new ones.

Consider Your Data Type and Format

RAG thrives on unstructured content: PDFs, docs, transcripts, wikis.

But not all RAG use cases need the same data prep:

- Product search needs structured specs.

- Legal tools need contracts.

- Internal assistants need help docs and policies.

Think about where your “answers” live—and whether they’re ready to retrieve.
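Part of being "ready to retrieve" is chunking: long documents are usually split into smaller overlapping pieces before indexing, so a retriever can return just the relevant part. A minimal sketch follows, assuming word-based windows; the `size` and `overlap` defaults are arbitrary values for illustration, and real pipelines often chunk by tokens, sentences, or headings instead.

```python
def chunk_words(text, size=8, overlap=2):
    """Split text into windows of `size` words, each sharing `overlap` words with the previous one."""
    if overlap >= size:
        raise ValueError("overlap must be smaller than size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the last window already reached the end of the text
    return chunks

text = " ".join(f"w{i}" for i in range(1, 21))  # placeholder document: w1 .. w20
chunks = chunk_words(text)
for c in chunks:
    print(c)
```

The overlap means a sentence that straddles a boundary still appears whole in at least one chunk.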

Pick Use Cases With High Return on Clarity

The best rag applications don’t just automate. They reduce confusion.

Use cases like:

- Customer support chatbots

- Internal knowledge search

- Sales enablement in CRM

- Research summarizers

These drive instant value by turning complexity into clarity. If it replaces a meeting, email, or long search—it’s a strong candidate.

Align With Tools You Already Use

Look at your current stack: CRM, ERP, LMS, Helpdesk, Search.

Many rag examples start by connecting to tools teams already use—then layering a conversational interface or better retrieval logic.

No need to rip and replace. Start by enhancing what already exists.

Start Small. Prove Fast.

You don’t need to deploy across your org on day one.

The most successful rag applications start small—solving one painful, high-impact problem in one department.

Prove it. Scale it. Then expand.

Need inspiration? Scroll back up to the 10 real-world rag use cases above—each one is a blueprint waiting to be tailored to your needs.

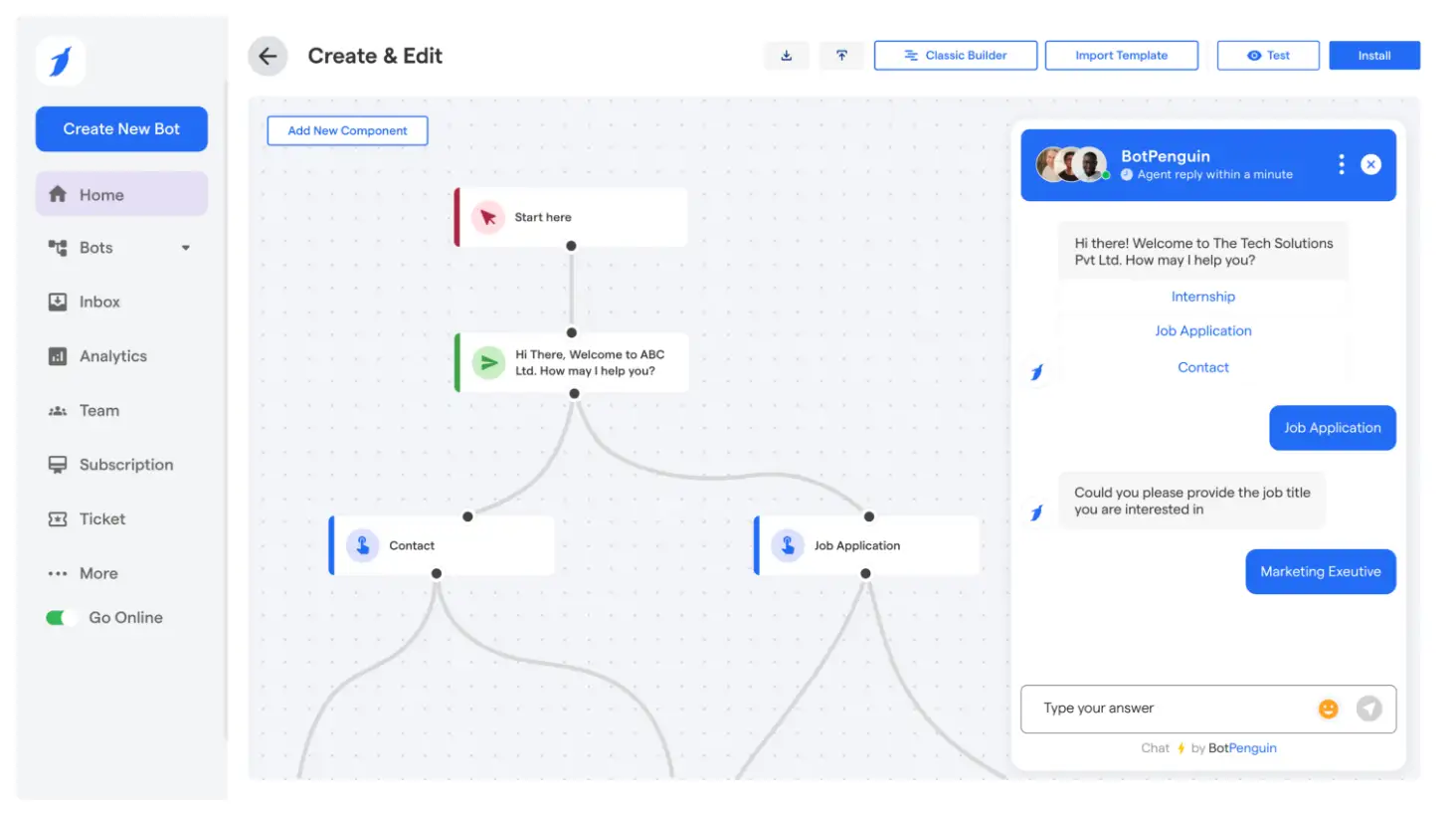

Start Building Your RAG-Powered Assistant—Right Now—with BotPenguin

Every rag use case you've seen above? From support bots and product advisors to internal search agents and CRM copilots—they can all be delivered through one tool: BotPenguin.

BotPenguin isn't just another chatbot builder.

It's a RAG-ready AI agent platform—built to connect your data with LLMs, answer questions accurately, and deliver it all through natural, human-like conversation.

If you're sitting on support docs, product manuals, customer data, or internal content—BotPenguin turns that into instant, useful responses. And it works where your users are: web, WhatsApp, Messenger, and more.

Why RAG + BotPenguin Works So Well

Here’s what happens when you pair a smart retrieval engine with a powerful AI agent:

- You get accurate, real-time answers—not just scripts or guesses

- Your team handles fewer repetitive questions

- Your users get a better experience

- And your business gets a chatbot that actually moves metrics

You don’t need to start from scratch. You just connect your data and go. That’s what makes BotPenguin one of the easiest ways to launch a RAG chatbot today.

Your Use Case is Already Possible

BotPenguin can deliver your project—right now.

And how you use it? That’s up to you.

Turn it into a smart support agent. A lead qualifier. A self-serve onboarding guide. A policy navigator.

One agent. All your use cases. Powered by RAG.

👉 Try BotPenguin now – and launch in minutes

Don’t build a chatbot. Build an AI that actually helps.

Frequently Asked Questions (FAQs)

What makes RAG better than simply connecting an AI to your database?

RAG doesn't just access your data—it retrieves the right data in real time, filters noise, and gives AI context before it answers, improving both relevance and clarity.

Can RAG personalize answers for different teams within the same company?

Yes, a RAG system can tailor responses for HR, support, or sales by retrieving from department-specific sources, making internal AI assistants far more useful and role-aware.

How does BotPenguin simplify launching a RAG-based assistant?

With BotPenguin, you skip the code and config. Just upload your documents, plug in your chatbot flow, and deploy an intelligent assistant grounded in your real content.

Can I use RAG with voice or mobile chatbots?

Absolutely. RAG applications can be voice-enabled or integrated with mobile UIs, making fact-aware responses accessible across platforms—not just in desktop chat widgets or dashboards.

How does BotPenguin help reduce repetitive questions from users?

BotPenguin connects to your support or knowledge base, uses RAG to retrieve precise answers, and automatically resolves common queries—so your team spends less time on copy-paste replies.

![10 Most Useful RAG Application & Use Cases [Real World Example] (1).webp](https://relinns-hrm.s3.ap-south-1.amazonaws.com/uploads/1744619294573_10 Most Useful RAG Application & Use Cases [Real World Example] (1).webp)